Model description

[More Information Needed]

Intended uses & limitations

[More Information Needed]

Training Procedure

Hyperparameters

The model is trained with the hyperparameters below.


| Hyperparameter | Value |
|---|---|
| Cs | 10 |
| class_weight | |
| cv | StratifiedKFold(n_splits=5, random_state=1, shuffle=True) |
| dual | False |
| fit_intercept | True |
| intercept_scaling | 1.0 |
| l1_ratios | |
| max_iter | 100 |
| multi_class | auto |
| n_jobs | |
| penalty | l2 |
| random_state | 1 |
| refit | False |
| scoring | |
| solver | lbfgs |
| tol | 0.0001 |
| verbose | 0 |
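For reference, a minimal sketch of an equivalent estimator in scikit-learn. Only the non-default entries from the table are passed (the same ones shown in the model plot); the remaining values match scikit-learn's documented defaults, and `multi_class` is left unset because recent scikit-learn releases deprecate it. The synthetic data from `make_classification` is a hypothetical stand-in for the pooled patch embeddings, not the Imagenette features.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegressionCV
from sklearn.model_selection import StratifiedKFold

# Only the non-default hyperparameters are passed explicitly; Cs=10,
# penalty="l2", solver="lbfgs", max_iter=100, tol=1e-4, etc. are defaults.
clf = LogisticRegressionCV(
    cv=StratifiedKFold(n_splits=5, random_state=1, shuffle=True),
    random_state=1,
    refit=False,  # coefficients are averaged over folds at the best C
)

# Hypothetical stand-in data: 300 samples, 20 features, 3 classes.
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=8, n_classes=3, random_state=0
)
clf.fit(X, y)

# One coefficient row per class; Cs_ holds the 10 candidate C values.
print(clf.coef_.shape, len(clf.Cs_))
```

With `refit=False`, no final model is refit on the full data; the reported coefficients come from the cross-validation folds, which keeps training cost at the five fold fits.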

Model Plot

The model plot is below.

LogisticRegressionCV(cv=StratifiedKFold(n_splits=5, random_state=1, shuffle=True), random_state=1, refit=False)

Evaluation Results

Below you can find details about the evaluation process and the evaluation results.

| Metric | Value |
|---|---|
| accuracy | 0.982166 |
| F1 score (macro average) | 0.982166 |

How to Get Started with the Model

[More Information Needed]

Model Card Authors

This model card is written by the following authors:

[More Information Needed]

Model Card Contact

You can contact the model card authors through the following channels: [More Information Needed]

Citation

Below you can find information related to citation.

BibTeX:

[More Information Needed]

citation_bibtex

```bibtex
@article{singh2022emb,
  title={Emb-GAM: an Interpretable and Efficient Predictor using Pre-trained Language Models},
  author={Singh, Chandan and Gao, Jianfeng},
  journal={arXiv preprint arXiv:2209.11799},
  year={2022}
}
```

get_started_code

```python
from PIL import Image
from skops import hub_utils
import torch
from transformers import AutoFeatureExtractor, AutoModel
import pickle
import os

# load embedding model
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
feature_extractor = AutoFeatureExtractor.from_pretrained('Ramos-Ramos/vicreg-resnet-50')
model = AutoModel.from_pretrained('Ramos-Ramos/vicreg-resnet-50').eval().to(device)

# load logistic regression
os.mkdir('emb-gam-vicreg-resnet')
hub_utils.download(repo_id='Ramos-Ramos/emb-gam-vicreg-resnet', dst='emb-gam-vicreg-resnet')

with open('emb-gam-vicreg-resnet/model.pkl', 'rb') as file:
    logistic_regression = pickle.load(file)

# load image (convert to RGB in case the PNG has an alpha channel)
img = Image.open('examples/english_springer.png').convert('RGB')

# preprocess image
inputs = {k: v.to(device) for k, v in feature_extractor(img, return_tensors='pt').items()}

# extract patch embeddings: the (2048, 7, 7) feature map of a 224x224 input
# becomes a (7*7, 2048) matrix with one row per patch, in row-major order
with torch.no_grad():
    patch_embeddings = model(**inputs).last_hidden_state[0].permute(1, 2, 0).reshape(7 * 7, 2048).cpu()

# classify the image from the summed patch embeddings
pred = logistic_regression.predict(patch_embeddings.sum(dim=0, keepdim=True).numpy())

# get patch contributions: one row per class, one column per patch
patch_contributions = logistic_regression.coef_ @ patch_embeddings.T.numpy()
```
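The `patch_contributions` array has one row per class and one column per patch, with patches in row-major order over the 7x7 grid. A minimal sketch, assuming that grid size, of turning one class's row back into a spatial map for a heatmap; the helper `contribution_map`, the predicted index, and the random scores are hypothetical stand-ins. With the real model, the row index for the predicted label could be obtained with `list(logistic_regression.classes_).index(pred[0])`.

```python
import numpy as np


def contribution_map(patch_contributions: np.ndarray, class_index: int, grid: int = 7) -> np.ndarray:
    """Hypothetical helper: reshape one class's per-patch scores into a (grid, grid) map.

    Patch rows were flattened in row-major (height, width) order, so a
    C-order reshape restores the spatial layout.
    """
    return patch_contributions[class_index].reshape(grid, grid)


# Stand-in data: 10 classes x 49 patches of random scores (not real outputs).
rng = np.random.default_rng(0)
patch_contributions = rng.normal(size=(10, 49))
pred_class = 3  # hypothetical predicted class index

heatmap = contribution_map(patch_contributions, pred_class)
# Grid position of the patch that pushes hardest toward the predicted class.
row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
print(heatmap.shape, (int(row), int(col)))
```

The resulting `heatmap` can be upsampled and overlaid on the input image to show which regions drove the prediction.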

model_card_authors

Patrick Ramos and Ryan Ramos

limitations

This model is not intended to be used in production.

model_description

This is a LogisticRegressionCV model trained on averages of patch embeddings from the Imagenette dataset. This forms the GAM of an Emb-GAM extended to images. Patch embeddings are meant to be extracted with the Ramos-Ramos/vicreg-resnet-50 ResNet checkpoint.
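Because the classifier is linear, its class scores on pooled embeddings decompose exactly into per-patch terms, which is what makes it an additive model (GAM) over patches. A small numpy sketch checks this identity for averaging, using random stand-ins for `coef_`, `intercept_`, and the patch embeddings (hypothetical sizes: 10 classes, 49 patches, 2048 dimensions). For a sum instead of an average, the same decomposition holds without the 1/N factor.

```python
import numpy as np

# Hypothetical sizes and random stand-ins, not the trained model's values.
rng = np.random.default_rng(0)
n_classes, n_patches, dim = 10, 49, 2048
W = rng.normal(size=(n_classes, dim))  # stand-in for coef_
b = rng.normal(size=n_classes)         # stand-in for intercept_
E = rng.normal(size=(n_patches, dim))  # stand-in patch embeddings

# Linear score computed on the averaged embedding...
score_from_mean = W @ E.mean(axis=0) + b

# ...equals the intercept plus the average of per-patch contributions.
per_patch = W @ E.T                    # shape (n_classes, n_patches)
score_from_patches = per_patch.mean(axis=1) + b

print(np.allclose(score_from_mean, score_from_patches))
```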

eval_method

The model is evaluated on the test split using accuracy and the macro-averaged F1 score.
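A minimal sketch of how these two metrics can be computed with scikit-learn. The label lists are hypothetical toy values, not the Imagenette test split; macro averaging takes the unweighted mean of the per-class F1 scores.

```python
from sklearn.metrics import accuracy_score, f1_score

# Hypothetical toy labels standing in for test-split targets and predictions.
y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 1, 2, 1, 1, 0]

accuracy = accuracy_score(y_true, y_pred)
# Per-class F1 scores are computed first, then averaged without class weights.
macro_f1 = f1_score(y_true, y_pred, average="macro")
print(f"accuracy={accuracy:.6f}  macro F1={macro_f1:.6f}")
```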

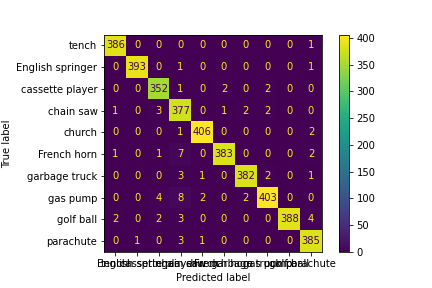

confusion_matrix
