FLUX.1-dev-ControlNet-Depth

This repository contains a Depth ControlNet for the FLUX.1-dev model, jointly trained by researchers from the InstantX Team and Shakker Labs.

Model Cards

- The model consists of 4 FluxTransformerBlock layers and 1 FluxSingleTransformerBlock layer.

- This checkpoint was trained on both real and generated image datasets using 16 A800 GPUs for 70K steps, with a batch size of 16 × 4 = 64 at a resolution of 1024. The learning rate is 5e-6. Depth maps were extracted with Depth-Anything-V2.

- The recommended controlnet_conditioning_scale is 0.3-0.7.
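The numbers in the list above can be collected into a small config object so that the derived quantities are explicit. `CardSettings` is a hypothetical helper, not part of diffusers or this repository. It makes two assumptions that the card does not state outright: the "4" in 16 × 4 is the per-GPU batch size, and no gradient accumulation was used. The `scale_sweep` method spreads `controlnet_conditioning_scale` values evenly across the recommended 0.3 to 0.7 range, which is convenient for side-by-side comparisons.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CardSettings:
    """Hypothetical summary of the values stated on this model card."""

    # Architecture (from the card)
    double_stream_blocks: int = 4   # FluxTransformerBlock
    single_stream_blocks: int = 1   # FluxSingleTransformerBlock
    # Training (from the card)
    num_gpus: int = 16              # A800
    per_gpu_batch: int = 4          # assumption: the "4" in 16*4 is per GPU
    train_steps: int = 70_000
    resolution: int = 1024
    learning_rate: float = 5e-6
    # Inference (recommended range from the card)
    scale_min: float = 0.3
    scale_max: float = 0.7

    @property
    def global_batch(self) -> int:
        return self.num_gpus * self.per_gpu_batch

    @property
    def samples_seen(self) -> int:
        # Assumes no gradient accumulation (not stated on the card)
        return self.global_batch * self.train_steps

    def scale_sweep(self, n: int = 5) -> list[float]:
        """Evenly spaced controlnet_conditioning_scale values over the recommended range."""
        if n < 2:
            raise ValueError("n must be at least 2")
        step = (self.scale_max - self.scale_min) / (n - 1)
        return [round(self.scale_min + i * step, 4) for i in range(n)]


cfg = CardSettings()
print(cfg.global_batch)   # 64
print(cfg.samples_seen)   # 4480000
print(cfg.scale_sweep())  # [0.3, 0.4, 0.5, 0.6, 0.7]
```

Each value from `scale_sweep()` can be passed as `controlnet_conditioning_scale` in the inference call. Keeping every other argument fixed makes it easier to see how strongly the depth map constrains the layout.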

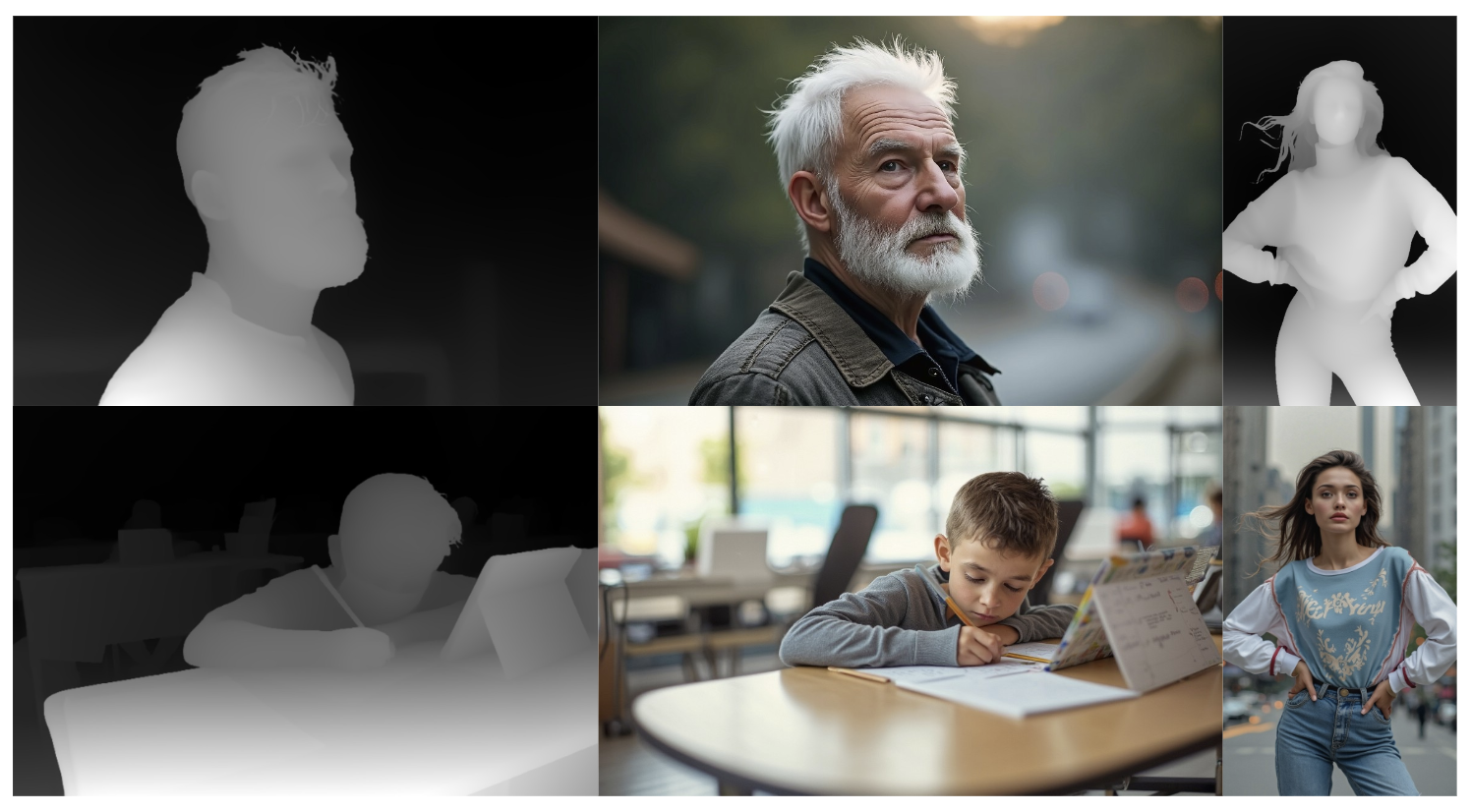

Showcases

Inference

```python
import torch
from diffusers.utils import load_image
from diffusers import FluxControlNetPipeline, FluxControlNetModel

base_model = "black-forest-labs/FLUX.1-dev"
controlnet_model = "Shakker-Labs/FLUX.1-dev-ControlNet-Depth"

# Load the depth ControlNet and attach it to the FLUX.1-dev base pipeline in bfloat16
controlnet = FluxControlNetModel.from_pretrained(controlnet_model, torch_dtype=torch.bfloat16)
pipe = FluxControlNetPipeline.from_pretrained(
    base_model, controlnet=controlnet, torch_dtype=torch.bfloat16
)
pipe.to("cuda")

# Depth map used as the conditioning image
control_image = load_image("https://huggingface.co/Shakker-Labs/FLUX.1-dev-ControlNet-Depth/resolve/main/assets/cond1.png")
prompt = "an old man with white hair"

image = pipe(
    prompt,
    control_image=control_image,
    controlnet_conditioning_scale=0.5,  # recommended range: 0.3-0.7
    width=control_image.size[0],        # generate at the control image's resolution
    height=control_image.size[1],
    num_inference_steps=24,
    guidance_scale=3.5,
).images[0]
image.save("image.jpg")
```
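The example above loads a ready-made depth map from a URL. To condition on your own depth estimate, such as one produced by Depth-Anything-V2 (the estimator the card says was used in training), first convert the raw depth array into an image and keep its size near the 1024 training resolution. The sketch below is hypothetical: `depth_to_control_array` and `fit_dimensions` are illustrative helpers, not diffusers APIs. It also rests on two assumptions that are worth checking against your setup. First, control images follow the common Depth-Anything visualization convention, where brighter means nearer. Second, FLUX pipelines expect width and height divisible by 16, which matches recent diffusers versions as far as we know.

```python
import numpy as np


def depth_to_control_array(depth: np.ndarray, invert: bool = False) -> np.ndarray:
    """Min-max normalize a 2-D depth/disparity map to an H x W x 3 uint8 array.

    Hypothetical helper. Set invert=True for maps where larger values mean
    farther (e.g. metric depth), so that nearer regions end up brighter.
    """
    if depth.ndim != 2:
        raise ValueError("expected a 2-D depth map")
    d = depth.astype(np.float64)
    lo, hi = d.min(), d.max()
    span = hi - lo
    # A constant map carries no depth information; map it to all zeros
    norm = np.zeros_like(d) if span < 1e-12 else (d - lo) / span
    if invert:
        norm = 1.0 - norm
    gray = np.round(norm * 255.0).astype(np.uint8)
    # Replicate to three channels so it can be used like an RGB image
    return np.repeat(gray[:, :, None], 3, axis=2)


def fit_dimensions(width: int, height: int, long_side: int = 1024, multiple: int = 16) -> tuple[int, int]:
    """Scale so the longer side is about long_side, then snap each side to a multiple."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = long_side / max(width, height)

    def snap(x: int) -> int:
        return max(multiple, int(round(x * scale / multiple)) * multiple)

    return snap(width), snap(height)
```

With Pillow installed, `w, h = fit_dimensions(arr.shape[1], arr.shape[0])` followed by `control_image = Image.fromarray(arr).resize((w, h))` produces a control image that can replace the one loaded above. Passing `width=w, height=h` then keeps the output and the conditioning at the same size.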

For multi-ControlNet support, please refer to Shakker-Labs/FLUX.1-dev-ControlNet-Union-Pro.

Resources

- InstantX/FLUX.1-dev-Controlnet-Canny

- Shakker-Labs/FLUX.1-dev-ControlNet-Depth

- Shakker-Labs/FLUX.1-dev-ControlNet-Union-Pro

Acknowledgements

This project is sponsored and released by Shakker AI. All copyrights reserved.
