Introduction

We are excited to announce the release of the Skywork o1 Open model series, developed by the Skywork team at Kunlun Inc. This groundbreaking release introduces a series of models that incorporate o1-like slow thinking and reasoning capabilities. The Skywork o1 Open model series includes three advanced models:

Skywork o1 Open-Llama-3.1-8B: A robust chat model trained on Llama-3.1-8B, enhanced significantly with "o1-style" data to improve reasoning skills.

Skywork o1 Open-PRM-Qwen-2.5-1.5B: A specialized model designed to enhance reasoning capability through incremental process rewards, ideal for complex problem solving at a smaller scale.

Skywork o1 Open-PRM-Qwen-2.5-7B: Extends the capabilities of the 1.5B model by scaling up to handle more demanding reasoning tasks, pushing the boundaries of AI reasoning.

Unlike mere reproductions of the OpenAI o1 model, the Skywork o1 Open model series not only exhibits innate thinking, planning, and reflecting capabilities in its outputs but also shows significant improvements in reasoning skills on standard benchmarks. The series moves a previously weaker base model towards the state of the art (SOTA) in reasoning tasks.

Methods

The Skywork o1 Open series' remarkable cognitive abilities are developed through a three-stage training scheme:

Reflective Reasoning Training: Utilizing a proprietary multi-agent system to generate high-quality, diverse data for long-thinking tasks, followed by continuous pre-training and supervised fine-tuning.

Reinforcement Learning for Reasoning Capabilities: Introduction of the Skywork o1 Process Reward Model (PRM), tailored to enhance step-by-step reasoning and used together with proprietary reasoning reinforcement algorithms. Our experiments confirm that the Skywork-PRM effectively captures the influence of intermediate reasoning steps on final outcomes.

Reasoning Planning: Deploying Tiangong's proprietary Q* online reasoning algorithm alongside model-based thinking, searching for optimal reasoning paths. This marks the first implementation and public release of a Q* algorithm, significantly boosting the model's online reasoning capabilities.
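To make the idea of process rewards in the second stage concrete, here is a minimal sketch of how per-step scores could be used to rank candidate solutions. It is an illustration only: the function names, the aggregation rules (`min`, `prod`, `last`), and the reward values are assumptions and are not taken from the Skywork training or inference code.

```python
from math import prod
from typing import Dict, Sequence


def aggregate_step_rewards(step_rewards: Sequence[float], how: str = "min") -> float:
    """Collapse per-step rewards into one score for a whole reasoning chain.

    The aggregation rules here are hypothetical; the source does not state
    how Skywork-PRM step scores are combined.
    """
    if not step_rewards:
        raise ValueError("need at least one step reward")
    if how == "min":
        return min(step_rewards)    # a chain is only as good as its weakest step
    if how == "prod":
        return prod(step_rewards)   # treat step scores like independent probabilities
    if how == "last":
        return step_rewards[-1]     # score of the final step only
    raise ValueError(f"unknown aggregation: {how}")


def best_of_n(candidates: Dict[str, Sequence[float]], how: str = "min") -> str:
    """Return the name of the candidate with the highest aggregated reward."""
    return max(candidates, key=lambda name: aggregate_step_rewards(candidates[name], how))


# Made-up step rewards for two candidate solutions (illustrative only).
candidates = {
    "solution_a": [0.95, 0.30, 0.90],  # one weak intermediate step
    "solution_b": [0.80, 0.85, 0.75],  # consistently reasonable steps
}

print(best_of_n(candidates, how="min"))
print(best_of_n(candidates, how="last"))
```

With these made-up numbers, scoring only the final step prefers `solution_a`, while the `min` rule prefers `solution_b` because it penalizes the weak intermediate step. That difference is the kind of signal a step-level reward is meant to expose.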

Highlights

The Skywork o1 Open series stands out with the following capabilities:

- Enhanced model thinking and planning capabilities.

- Advanced self-reflection and self-verification abilities.

Compared to previous large models, the Skywork o1 Open series adeptly handles a variety of reasoning challenges, including common-sense, logical, mathematical, ethical decision-making, and logical trap problems.

Models

Skywork o1 Open 8B

The Skywork o1 Open 8B model shows notable improvements across various mathematical and coding benchmarks, pushing the performance of Llama-3.1-8B to the forefront of its category and outperforming the prior SOTA model of similar size, Qwen-2.5-7B Instruct.

Quickstart

To run inference with Skywork-o1-Open-Llama3.1-8B, use the few lines of code shown below.

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

system_prompt = """You are Skywork-o1, a thinking model developed by Skywork AI, specializing in solving complex problems involving mathematics, coding, and logical reasoning through deep thought. When faced with a user's request, you first engage in a lengthy and in-depth thinking process to explore possible solutions to the problem. After completing your thoughts, you then provide a detailed explanation of the solution process in your response."""

# An Example Case
problem = "Jane has 12 apples. She gives 4 apples to her friend Mark, then buys 1 more apple, and finally splits all her apples equally among herself and her 2 siblings. How many apples does each person get?"
user_message = problem

conversation = [
    {
        "role": "system",
        "content": system_prompt
    },
    {
        "role": "user",
        "content": user_message
    }
]

model_name = "Skywork-o1-Open-Llama3.1-8B"

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

input_ids = tokenizer.apply_chat_template(
    conversation,
    tokenize=True,
    add_generation_prompt=True,
    return_tensors="pt").to(model.device)

generation = model.generate(
    input_ids=input_ids,
    max_new_tokens=2048,
    do_sample=False,
    pad_token_id=128009,
    temperature=0)

completion = tokenizer.decode(
    generation[0][len(input_ids[0]):],
    skip_special_tokens=True,
    clean_up_tokenization_spaces=True)

print(completion)
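The slice `generation[0][len(input_ids[0]):]` in the quickstart drops the prompt tokens before decoding, because for decoder-only models `generate` returns the prompt followed by the newly generated tokens. The sketch below shows the same slicing on plain Python lists; the token ids are made up for illustration and do not come from the real tokenizer.

```python
# Made-up token ids standing in for real tokenizer output (illustrative only).
prompt_ids = [128000, 9906, 1917]
new_ids = [40, 1781, 128009]

# A decoder-only model returns the prompt followed by the continuation.
full_sequence = prompt_ids + new_ids

# Keep only the tokens produced after the prompt, as the quickstart does.
completion_ids = full_sequence[len(prompt_ids):]
print(completion_ids)  # [40, 1781, 128009]
```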

Reasoning Examples

Here are a few examples demonstrating how Skywork-o1-Open-Llama3.1-8B excels in planning, reasoning, and reflection.

Mathematical Problem in Chinese

Input: 一项工程,山东队独做需15天完成,江苏队独做需10天完成。两队合作,几天可以完成?

Output: 好的,让我们来解决这个有趣的工程问题。我会用轻松的语气,一步步地分析这个问题。

首先,让我们理解一下题目给出的信息:

1. 山东队独做需要15天完成工程

2. 江苏队独做需要10天完成工程

现在,我们需要找出两队合作时的完成时间。让我们从每队的工作效率开始分析:

1. 山东队的效率:

15天完成1个工程,所以每天完成的工程量是 1/15

2. 江苏队的效率:

10天完成1个工程,所以每天完成的工程量是 1/10

稍等,让我们再检查一下这个计算...没错,这个计算是正确的。

现在,两队合作时,每天完成的工程量是两队效率的和:

1/15 + 1/10 = 2/30 + 3/30 = 5/30 = 1/6

这意味着每天两队合作完成的工程量是1/6。

接下来,我们需要计算完成1个工程需要多少天。我们可以用以下等式:

(1/6) × x = 1

解这个等式:

x = 1 ÷ (1/6) = 6

所以,两队合作完成工程需要6天。

让我们再验证一下:

- 6天 × (1/6) = 1

- 这确实等于1,说明我们的计算是正确的。

因此,最终答案是:两队合作完成工程需要6天。
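The arithmetic in the sample output above (rates 1/15 and 1/10 per day, combined rate 1/6, hence 6 days) can be checked with exact fractions. This is a small verification sketch; the helper name `days_together` is hypothetical.

```python
from fractions import Fraction


def days_together(*solo_days: int) -> Fraction:
    """Days needed when teams that take `solo_days` alone work in parallel."""
    combined_rate = sum(Fraction(1, d) for d in solo_days)  # work done per day
    return 1 / combined_rate


print(days_together(15, 10))  # 6
```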

Mathematical Problem in English

Input: Determine the value of $y$ in its simplest form, where $y = \frac{p + q\sqrt{r}}{s}$, given that $\frac{7y}{5} - 2 = \frac{4}{y}$, and $p, q, r, s$ are integers. What is $\frac{pqr}{s}$?

Output: To determine the value of \( y \) in the form \( y = \frac{p + q\sqrt{r}}{s} \), we start with the given equation:

\[
\frac{7y}{5} - 2 = \frac{4}{y}
\]

First, we eliminate the denominators by multiplying both sides of the equation by \( 5y \):

\[
7y^2 - 10y = 20
\]

Next, we rearrange the equation to set it to zero:

\[
7y^2 - 10y - 20 = 0
\]

We solve this quadratic equation using the quadratic formula \( y = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} \), where \( a = 7 \), \( b = -10 \), and \( c = -20 \). Plugging in these values, we get:

\[
y = \frac{-(-10) \pm \sqrt{(-10)^2 - 4 \cdot 7 \cdot (-20)}}{2 \cdot 7} = \frac{10 \pm \sqrt{100 + 560}}{14} = \frac{10 \pm \sqrt{660}}{14}
\]

We simplify \( \sqrt{660} \) as follows:

\[
\sqrt{660} = \sqrt{4 \cdot 165} = 2\sqrt{165}
\]

Substituting this back into the quadratic formula, we have:

\[
y = \frac{10 \pm 2\sqrt{165}}{14} = \frac{5 \pm \sqrt{165}}{7}
\]

Since we are asked for the value of \( y \) in the form \( \frac{p + q\sqrt{r}}{s} \), we can choose either the positive or negative root. Let's take the positive root for simplicity:

\[
y = \frac{5 + \sqrt{165}}{7}
\]

Here, \( p = 5 \), \( q = 1 \), \( r = 165 \), and \( s = 7 \). We need to find the value of \( \frac{pqr}{s} \):

\[
\frac{pqr}{s} = \frac{5 \cdot 1 \cdot 165}{7} = \frac{825}{7}
\]

Thus, the final answer is:

\[
\boxed{\frac{825}{7}}
\]
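As a sanity check on the sample output above, the short sketch below substitutes both roots back into the original equation numerically and recomputes \( \frac{pqr}{s} \) exactly. The function and variable names are chosen here for illustration.

```python
import math
from fractions import Fraction


def residual(y: float) -> float:
    """Left side minus right side of 7y/5 - 2 = 4/y; zero at a solution."""
    return 7 * y / 5 - 2 - 4 / y


# Both roots of 7y^2 - 10y - 20 = 0, i.e. y = (5 ± sqrt(165)) / 7.
roots = [(5 + sign * math.sqrt(165)) / 7 for sign in (1, -1)]
for y in roots:
    assert abs(residual(y)) < 1e-9

# Values read off the positive root chosen in the sample output.
p, q, r, s = 5, 1, 165, 7
print(Fraction(p * q * r, s))  # 825/7
```

Both roots satisfy the equation, so the result depends on which root is chosen: taking the negative root (q = -1) would give -825/7 instead.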

Logical Problem in Chinese

Input: 火药∶鞭炮∶二踢脚 可以对应下列选项中的:A.乌铁∶刀具∶三棱刀\nB.红砖∶建筑∶四合院\nC.清水∶白酒∶五粮液\nD.杉木∶乐器∶六弦琴

Output: 好的,让我们一起来解决这个逻辑关系题。我们需要找出与"火药∶鞭炮∶二踢脚"最相似的关系。

首先,让我们分析给定的关系:

火药 → 鞭炮 → 二踢脚

1. 火药是制作鞭炮的原料。

2. 鞭炮是由火药制成的。

3. 二踢脚是鞭炮的一种。

现在,让我们逐个检查选项:

A. 乌铁∶刀具∶三棱刀

乌铁是制作刀具的原料,刀具是由乌铁制成的,三棱刀是刀具的一种。

这个关系看起来很相似!

B. 红砖∶建筑∶四合院

红砖是建筑的一种材料,但不是所有建筑都用红砖。

四合院是建筑的一种,但不是所有建筑都叫四合院。

这个关系不太匹配。

C. 清水∶白酒∶五粮液

清水是制作白酒的原料,白酒是由清水制成的。

但是五粮液是白酒的一种,而不是白酒的原料。

这个关系不太匹配。

D. 杉木∶乐器∶六弦琴

杉木是制作乐器的原料,乐器是由杉木制成的。

但是六弦琴是乐器的一种,而不是乐器的原料。

这个关系也不太匹配。

稍等,让我再仔细思考一下...

在重新审视这些选项后,我更加确信A选项是最佳答案。让我解释一下为什么:

1. 乌铁作为原料,刀具作为产品,三棱刀作为具体产品的关系,与火药、鞭炮、二踢脚的关系最为相似。

2. 每个选项都遵循了"原料 → 产品 → 具体产品"的模式。

3. 其他选项要么缺少了原料和产品的关系(如B和C),要么将产品和具体产品的关系颠倒了(如D)。

因此,最终答案是:A. 乌铁∶刀具∶三棱刀。

这个选项最准确地反映了原始关系中的逻辑结构。

Logical Problem in English

Input: How many `r`s in strawberry?

Output: To determine how many `r`s are in the word "strawberry," we will follow a step-by-step approach:

1. **Identify the word**: The word we need to analyze is "strawberry."

2. **List each letter**: Write down each letter in the word to ensure we don't miss any.

- s

- t

- r

- a

- w

- b

- e

- r

- r

- y

3. **Count the occurrences of `r`**: Now, we count how many times the letter `r` appears in the list.

- The first `r` is in the third position.

- The second `r` is in the seventh position.

- The third `r` is in the ninth position.

4. **Verify the count**: Double-check that we have counted all the `r`s correctly and haven't missed any or counted any other letters as `r`.

After following these steps, we find that there are three `r`s in the word "strawberry."

Therefore, the number of `r`s in strawberry is \(\boxed{3}\).
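The count in the sample output above is easy to verify programmatically. The sketch below lists the 1-based positions of a letter in a word; the helper name `letter_positions` is hypothetical.

```python
def letter_positions(word: str, letter: str) -> list:
    """Return the 1-based positions at which `letter` occurs in `word`."""
    return [i for i, ch in enumerate(word, start=1) if ch == letter]


positions = letter_positions("strawberry", "r")
print(len(positions), positions)  # 3 [3, 8, 9]
```

The total of three matches the sample output. By this count the second `r` is the eighth letter, not the seventh as the sample output states, which shows that the model's final answer can be right even when an intermediate detail is off.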

Code Problem

Input: Given an array of integers `nums` with a length of `n` and an integer `k`, please determine if there exist two adjacent subarrays of length `k` that are strictly increasing. Specifically, you need to check if there are two subarrays starting from indices `a` and `b` (where `a < b`) that meet the following conditions:

- Both subarrays `nums[a..a + k - 1]` and `nums[b..b + k - 1]` are strictly increasing.

- These two subarrays must be adjacent, meaning `b = a + k`.

If such two subarrays can be found, return `true`; otherwise, return `false`.

A subarray is a contiguous sequence of elements within an array.

Example 1:

Input: `nums = [2,5,7,8,9,2,3,4,3,1]`, `k = 3`

Output: `true`

Explanation:

The subarray starting from index 2 is `[7,8,9]`, which is strictly increasing. The subarray starting from index 5 is `[2,3,4]`, which is also strictly increasing. The two subarrays are adjacent, so the result is `true`.

Example 2:

Input: `nums = [1,2,3,4,4,4,4,5,6,7]`, `k = 5`

Output: `false`

Hints:

- `2 <= nums.length <= 1000`

- `1 <= 2 * k <= nums.length`

- `-1000 <= nums[i] <= 1000`

Please output the answer in the following format:

Output: ### Planning Phase

#### Key Steps:

1. **Iterate through the array**: Start from the first element and check every possible starting point for a subarray of length `k`.

2. **Check for strictly increasing subarray**: For each starting point, check if the subarray of length `k` is strictly increasing.

3. **Check the next subarray**: If the current subarray is strictly increasing, check if the next subarray of length `k` starting from the next element is also strictly increasing.

4. **Return the result**: If both subarrays are strictly increasing and adjacent, return `true`. If no such pair is found after checking all possible starting points, return `false`.

#### Important Considerations:

- **Edge Cases**:

- Arrays with fewer than `2*k` elements.

- Arrays where no subarray of length `k` is strictly increasing.

- Arrays where multiple subarrays of length `k` are strictly increasing, but none are adjacent.

- **Pitfalls**:

- Ensure that the subarrays are strictly increasing, not just non-decreasing.

- Handle cases where the array length is exactly `2*k`.

### Code Solution

class Solution(object):
    def hasincreasingSubarrays(self, nums, k):
        # Iterate through the array, stopping at the point where the next subarray would go out of bounds
        for i in range(len(nums) - k + 1):
            # Check if the current subarray is strictly increasing
            is_increasing = True
            for j in range(1, k):
                if nums[i + j] <= nums[i + j - 1]:
                    is_increasing = False
                    break
            # If the current subarray is strictly increasing, check the next subarray
            if is_increasing:
                # Check if the next subarray is strictly increasing
                for j in range(1, k):
                    if nums[i + k + j] <= nums[i + k + j - 1]:
                        break
                else:
                    # If the next subarray is also strictly increasing, return True
                    return True
        # If no such pair of subarrays is found, return False
        return False

### Solution Explanation

#### Detailed Explanation:

1. **Iterate through the array**:

- We use a loop to iterate through the array from the first element to the point where the next subarray of length `k` would go out of bounds (`len(nums) - k + 1`).

2. **Check for strictly increasing subarray**:

- For each starting index `i`, we check if the subarray `nums[i:i+k]` is strictly increasing.

- We use a nested loop to compare each element with the previous one. If any element is not greater than the previous one, we set `is_increasing` to `False` and break out of the loop.

3. **Check the next subarray**:

- If the current subarray is strictly increasing, we then check the next subarray starting from `i + k`.

- We use another nested loop to check if `nums[i+k:i+2*k]` is strictly increasing.

- If the next subarray is also strictly increasing, we return `True`.

4. **Return the result**:

- If we complete the loop without finding any such pair of subarrays, we return `False`.

#### Clarity and Breakdown:

- **Outer Loop**: The outer loop iterates through each possible starting point for a subarray of length `k`.

- **Inner Loop for Current Subarray**: The inner loop checks if the current subarray is strictly increasing.

- **Inner Loop for Next Subarray**: If the current subarray is strictly increasing, the inner loop checks if the next subarray is also strictly increasing.

- **Return True/False**: If both subarrays are strictly increasing and adjacent, we return `True`. If no such pair is found, we return `False`.

This approach ensures that we efficiently check all possible pairs of adjacent strictly increasing subarrays of length `k` in the given array.
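One caveat about the sample solution above: its outer loop runs up to `len(nums) - k + 1`, so when a strictly increasing window sits near the end of the array, the check of the following window reads `nums[i + k + j]` past the last element. For example, `nums = [5, 4, 1, 2]` with `k = 2` raises an `IndexError`. The sketch below is one possible bounds-safe variant; it is not part of the model output, and the function names are chosen here for illustration.

```python
from typing import List


def is_strictly_increasing(nums: List[int], start: int, k: int) -> bool:
    """True if nums[start : start + k] is strictly increasing."""
    return all(nums[j] < nums[j + 1] for j in range(start, start + k - 1))


def has_adjacent_increasing_subarrays(nums: List[int], k: int) -> bool:
    """True if two adjacent length-k windows are both strictly increasing."""
    # The first window starts at a, the second at a + k and ends at a + 2k - 1,
    # so a may go no further than len(nums) - 2k.
    for a in range(len(nums) - 2 * k + 1):
        if is_strictly_increasing(nums, a, k) and is_strictly_increasing(nums, a + k, k):
            return True
    return False


print(has_adjacent_increasing_subarrays([2, 5, 7, 8, 9, 2, 3, 4, 3, 1], 3))  # True
print(has_adjacent_increasing_subarrays([1, 2, 3, 4, 4, 4, 4, 5, 6, 7], 5))  # False
print(has_adjacent_increasing_subarrays([5, 4, 1, 2], 2))                    # False
```

Limiting the start index to `len(nums) - 2 * k` guarantees that both windows fit inside the array, so no extra bounds checks are needed inside the helper.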

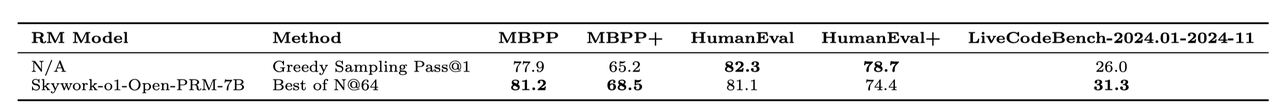

Skywork o1 Open PRM

We introduce two advanced Process-Reward-Models (PRM) for reasoning tasks:

- Skywork o1 Open-PRM-Qwen2.5-1.5B: Achieves the performance of 8B models, competing with advanced models like RLHFlow's Llama3.1-8B-PRM-Deepseek-Data and OpenR's Math-psa-7B.

- Skywork o1 Open-PRM-Qwen2.5-7B: Matches or surpasses larger scale models like Qwen2.5-Math-RM-72B on most benchmarks, setting a new standard for AI reasoning.

The inference code is publicly available at https://github.com/SkyworkAI/skywork-o1-prm-inference.

Contact

If you have any questions, please feel free to reach us at {zifeng.cao, liang.zhao, liang.zeng, tianwen.wei}@kunlun-inc.com.

LICENSE

Community use of the Skywork models requires the Skywork Community License. The Skywork models support commercial use. If you plan to use the Skywork models or their derivatives for commercial purposes, you must abide by the terms and conditions of the Skywork Community License.

DISCLAIMER

We hereby declare that the Skywork models should not be used for any activities that pose a threat to national or societal security or engage in unlawful actions. Additionally, we request users not to deploy the Skywork models for internet services without appropriate security reviews and records. We hope that all users will adhere to this principle to ensure that technological advancements occur in a regulated and lawful environment.

We have done our utmost to ensure the compliance of the data used during the model's training process. However, despite our extensive efforts, due to the complexity of the model and data, there may still be unpredictable risks and issues. Therefore, if any problems arise as a result of using the Skywork open-source model, including but not limited to data security issues, public opinion risks, or any risks and problems arising from the model being misled, abused, disseminated, or improperly utilized, we will not assume any responsibility.

Citation

If you find our work helpful, please feel free to cite us using the following BibTeX entry:

@misc{skyworkopeno12024,
  title={Skywork-o1 Open Series},
  author={Skywork-o1 Team},
  year={2024},
  month={November},
  howpublished={\url{https://huggingface.co/Skywork}},
  url={https://huggingface.co/Skywork},
}

