BeautifulPrompt

Brief Introduction

We are open-sourcing an automatic prompt generation model. Enter an extremely simple prompt and the language model returns an optimized version of it, making it easier to generate visually appealing images.

- Github: EasyNLP

Usage

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and the model; .cuda() moves the model to the GPU, so a CUDA device is required.
model_name = 'alibaba-pai/pai-bloom-1b1-text2prompt-sd'
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name).eval().cuda()

# Wrap the simple prompt in the instruction template the model expects.
raw_prompt = '1 girl'
prompt_text = f'Instruction: Give a simple description of the image to generate a drawing prompt.\nInput: {raw_prompt}\nOutput:'
input_ids = tokenizer.encode(prompt_text, return_tensors='pt').cuda()

# Sample five candidate prompts.
outputs = model.generate(
    input_ids,
    max_length=384,
    do_sample=True,
    temperature=1.0,
    top_k=50,
    top_p=0.95,
    repetition_penalty=1.2,
    num_return_sequences=5)

# Decode only the newly generated tokens, skipping the echoed input.
prompts = tokenizer.batch_decode(outputs[:, input_ids.size(1):], skip_special_tokens=True)
prompts = [p.strip() for p in prompts]
print(prompts)
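Because the usage code samples five sequences, the returned list can contain empty or repeated prompts. The following is a minimal sketch of two small helpers, one that builds the instruction template and one that cleans the decoded candidates. The names `INSTRUCTION`, `build_input`, and `clean_candidates` are hypothetical and not part of the model or of EasyNLP; only the template string is taken from the usage code.

```python
# Hypothetical helpers for illustration; these names are not part of the released model.
INSTRUCTION = (
    "Instruction: Give a simple description of the image "
    "to generate a drawing prompt."
)


def build_input(raw_prompt: str) -> str:
    """Wrap a short user prompt in the instruction template from the usage code."""
    return f"{INSTRUCTION}\nInput: {raw_prompt}\nOutput:"


def clean_candidates(candidates):
    """Strip whitespace, drop empty strings, and remove duplicates (first-seen order)."""
    seen = set()
    cleaned = []
    for text in candidates:
        text = text.strip()
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned


if __name__ == "__main__":
    print(build_input("1 girl"))
    # The candidate strings below are made-up placeholders, not real model output.
    print(clean_candidates([" a ship, epic ", "a ship, epic", "", "a ship at dusk"]))
```

In the usage code, `build_input(raw_prompt)` would stand in for the f-string, and `clean_candidates(prompts)` would replace the final list comprehension.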

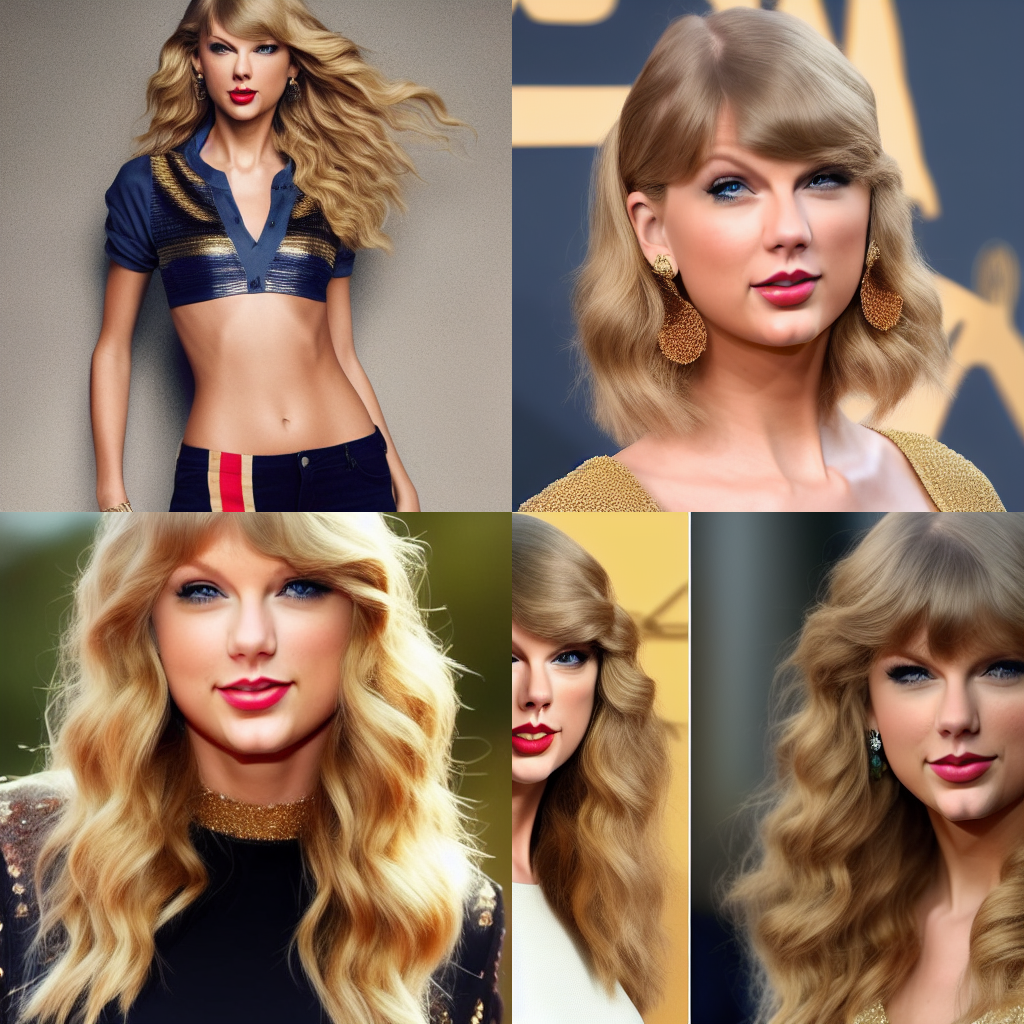

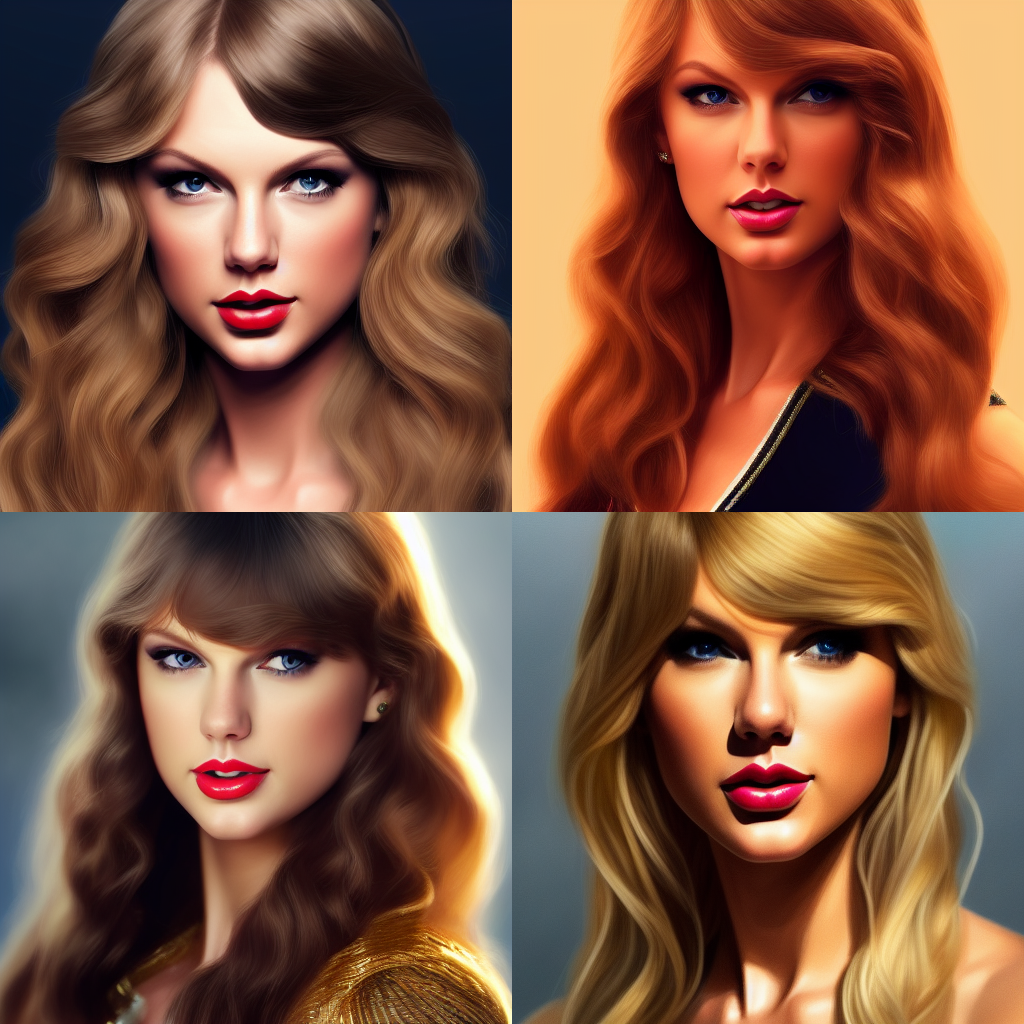

Gallery

| Original | BeautifulPrompt |
|---|---|
| prompt: A majestic sailing ship | prompt: a massive sailing ship, epic, cinematic, artstation, greg rutkowski, james gurney, sparth |

Notice for Use

Use of this model is subject to the special open-source terms for AIGC models. Before using the model, please read that document carefully and abide by its terms.

Paper Citation

If you find the model useful, please consider citing the paper:

@inproceedings{emnlp2023a,
  author    = {Tingfeng Cao and
               Chengyu Wang and
               Bingyan Liu and
               Ziheng Wu and
               Jinhui Zhu and
               Jun Huang},
  title     = {BeautifulPrompt: Towards Automatic Prompt Engineering for Text-to-Image Synthesis},
  booktitle = {Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing: Industry Track},
  pages     = {1--11},
  year      = {2023}
}
