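Pretrained model for Style-Bert-VITS2

This is a clean (*1) pretrained model for use with Style-Bert-VITS2, trained on the datasets listed below.

(*1) "Clean" here means that the training data used for pretraining is explicitly disclosed.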

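Training datasets

- Tsukuyomi-chan Corpus | Voice Actor Statistics Corpus, JVS corpus compliant (つくよみちゃんコーパス│声優統計コーパス(JVSコーパス準拠))

- みんなで作るJSUTコーパスbasic5000, utterances BASIC5000_0001 to BASIC5000_0600 (the portion recorded by 夢前黎, used with permission)

- Voice data kindly provided by 黄鏡博人

- あみたろの声素材工房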

Training parameters

- Training steps: 600k steps

- bfloat16: false

config.json

{
  "model_name": "pretraing",
  "train": {
    "log_interval": 200,
    "eval_interval": 2000,
    "seed": 42,
    "epochs": 2100,
    "learning_rate": 0.0001,
    "betas": [0.8, 0.99],
    "eps": 1e-09,
    "batch_size": 8,
    "bf16_run": false,
    "fp16_run": false,
    "lr_decay": 0.99996,
    "segment_size": 16384,
    "init_lr_ratio": 1,
    "warmup_epochs": 0,
    "c_mel": 45,
    "c_kl": 1.0,
    "c_commit": 100,
    "skip_optimizer": false,
    "freeze_ZH_bert": false,
    "freeze_JP_bert": false,
    "freeze_EN_bert": false,
    "freeze_emo": false,
    "freeze_style": false,
    "freeze_decoder": false
  },
  "data": {
    "use_jp_extra": true,
    "training_files": "Data/pretraing/train.list",
    "validation_files": "Data/pretraing/val.list",
    "max_wav_value": 32768.0,
    "sampling_rate": 44100,
    "filter_length": 2048,
    "hop_length": 512,
    "win_length": 2048,
    "n_mel_channels": 128,
    "mel_fmin": 0.0,
    "mel_fmax": null,
    "add_blank": true,
    "n_speakers": 1,
    "cleaned_text": true,
    "spk2id": {
      "pretraing": 0
    }
  },
  "model": {
    "use_spk_conditioned_encoder": true,
    "use_noise_scaled_mas": true,
    "use_mel_posterior_encoder": false,
    "use_duration_discriminator": false,
    "use_wavlm_discriminator": true,
    "inter_channels": 192,
    "hidden_channels": 192,
    "filter_channels": 768,
    "n_heads": 2,
    "n_layers": 6,
    "kernel_size": 3,
    "p_dropout": 0.1,
    "resblock": "1",
    "resblock_kernel_sizes": [3, 7, 11],
    "resblock_dilation_sizes": [
      [1, 3, 5],
      [1, 3, 5],
      [1, 3, 5]
    ],
    "upsample_rates": [8, 8, 2, 2, 2],
    "upsample_initial_channel": 512,
    "upsample_kernel_sizes": [16, 16, 8, 2, 2],
    "n_layers_q": 3,
    "use_spectral_norm": false,
    "gin_channels": 512,
    "slm": {
      "model": "./slm/wavlm-base-plus",
      "sr": 16000,
      "hidden": 768,
      "nlayers": 13,
      "initial_channel": 64
    }
  },
  "version": "2.4.1-JP-Extra"
}
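A few of the values in this config are tied to each other, and the relationship is easier to see when computed. The sketch below is not part of the training code; it copies a handful of values from the config above and derives the segment length in frames and seconds, the total decoder upsampling factor, and the learning rate after a number of decay steps. The helper names `segment_geometry` and `lr_after` are made up for illustration, and the assumption that `lr_decay` is applied once per epoch (as in an exponential scheduler) should be checked against the Style-Bert-VITS2 training script.

```python
import math

# Values copied from the config.json above.
config = {
    "train": {"learning_rate": 0.0001, "lr_decay": 0.99996, "segment_size": 16384},
    "data": {"sampling_rate": 44100, "hop_length": 512},
    "model": {"upsample_rates": [8, 8, 2, 2, 2]},
}


def segment_geometry(cfg):
    """Relate the training segment size to spectrogram frames and seconds."""
    hop = cfg["data"]["hop_length"]
    seg = cfg["train"]["segment_size"]
    sr = cfg["data"]["sampling_rate"]
    return {
        "upsample_factor": math.prod(cfg["model"]["upsample_rates"]),
        "frames_per_segment": seg // hop,
        "segment_seconds": seg / sr,
    }


def lr_after(cfg, n_decay_steps):
    """Learning rate after n exponential decay steps.

    Assumption: one decay step per epoch; verify against the training script.
    """
    train = cfg["train"]
    return train["learning_rate"] * train["lr_decay"] ** n_decay_steps


geometry = segment_geometry(config)
# The decoder expands each spectrogram frame into hop_length samples, so the
# product of the upsample rates is expected to equal hop_length.
assert geometry["upsample_factor"] == config["data"]["hop_length"]
print(geometry)
print(lr_after(config, 2100))
```

With these values, each training segment covers 32 frames, or about 0.37 seconds of 44.1 kHz audio. The `epochs` value of 2100 is only an upper bound in the config; how many epochs the 600k steps actually spanned is not stated here, so the decayed learning rate printed above is illustrative only.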

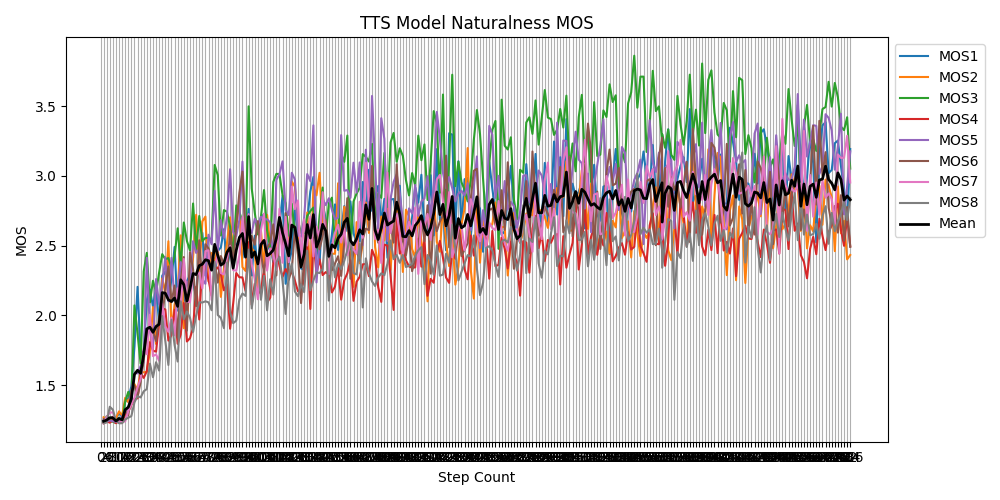

Naturalness evaluation with SpeechMOS

mos_pretraing.csv is also included.
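To inspect the included scores outside the plot, a small standard-library script is enough. The sketch below summarizes one numeric column of a CSV file. The column layout of mos_pretraing.csv is not documented here, so the column names `step` and `mos` and the values in the stand-in data are purely hypothetical placeholders. Check the header row of the real file and pass the actual score column name.

```python
import csv
import io
import statistics


def summarize_mos(csv_file, score_column):
    """Return count, mean, min and max of one numeric column of a CSV file."""
    reader = csv.DictReader(csv_file)
    # Skip rows where the score cell is empty.
    scores = [float(row[score_column]) for row in reader if row[score_column]]
    return {
        "count": len(scores),
        "mean": statistics.fmean(scores),
        "min": min(scores),
        "max": max(scores),
    }


# Stand-in data with made-up values and hypothetical column names,
# used only to show the call shape. These are NOT the published scores.
sample = io.StringIO("step,mos\n1000,2.5\n2000,3.0\n3000,3.5\n")
summary = summarize_mos(sample, "mos")
print(summary)
```

For the real file, open it with `open("mos_pretraing.csv", newline="", encoding="utf-8")` and pass the file object together with whichever column holds the scores.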

License

The license follows the terms below.