datasets:

- benchang1110/TaiVision-pretrain-1M-v2.0

language:

- zh

library_name: transformers

pipeline_tag: image-text-to-text

base_model:

- benchang1110/TaiVisionLM-base-v1

Model Card for TaiVisionLM-base-v2

Model Details

English

TaiVisionLM: The First of Its Kind! 🚀

🌟 This is a small (only 1.2B parameters) visual language model on Hugging Face that responds to Traditional Chinese instructions given an image input! 🌟

✨ Built to be compatible with the Transformers library, TaiVisionLM is quick to load, fine-tune, and run for fast inference without any external libraries! ⚡️

Ready to experience the Traditional Chinese visual language model? Let's go! 🖼️🤖

繁體中文

台視: 台灣視覺語言模型!! 🚀

🌟 TaiVisionLM 是一個小型的視覺語言模型(僅有 12 億參數),可以根據圖像輸入來回覆繁體中文指令!🌟

✨ TaiVisionLM 可以用 transformers 載入、微調和使用!⚡️

準備好體驗"臺視"了嗎?讓我們開始吧!🖼️🤖

Model Description

English

This model is a multimodal language model that combines SigLIP as its vision encoder with TinyLlama as its language model. A vision projector connects the two modalities. Its architecture closely resembles PaliGemma.
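To make the encoder, projector, and language model pipeline concrete, here is a rough shape calculation. It is only a sketch: the patch count follows from the `patch16-224` encoder name, while the hidden sizes (768 for SigLIP base, 2048 for TinyLlama) and the single linear projector (as in PaliGemma) are assumptions taken from the public base models, not values read from this checkpoint. The function names are illustrative.

```python
# Back-of-the-envelope shapes for a SigLIP -> projector -> TinyLlama pipeline.
# The hidden sizes and the single linear projector are assumptions (see text);
# nothing here is read from the TaiVisionLM checkpoint itself.

def num_image_tokens(image_size: int = 224, patch_size: int = 16) -> int:
    """Number of patch embeddings the vision encoder emits per image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    return (image_size // patch_size) ** 2


def linear_projector_params(vision_dim: int, text_dim: int, bias: bool = True) -> int:
    """Parameter count of one linear layer mapping vision_dim -> text_dim."""
    return vision_dim * text_dim + (text_dim if bias else 0)


VISION_DIM = 768   # assumed: SigLIP base hidden size
TEXT_DIM = 2048    # assumed: TinyLlama hidden size

tokens = num_image_tokens()
params = linear_projector_params(VISION_DIM, TEXT_DIM)
print(f"{tokens} image tokens, each projected {VISION_DIM} -> {TEXT_DIM}")
print(f"linear projector parameters: {params:,}")
```

Under these assumptions each image contributes 196 tokens to the language model's input sequence, and the projector is tiny compared with the two pretrained components.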

Here's the summary of the development process:

Unimodal pretraining

- In this stage, instead of pretraining both modalities from scratch, we reuse the image encoder from google/siglip-base-patch16-224-multilingual and a language model we trained ourselves (https://huggingface.co/benchang1110/Taiwan-tinyllama-v1.0-chat).

Feature Alignment

- We trained the vision projector with full-parameter updates and the language model with LoRA on 1M image-text pairs to align visual and textual features.

This model is the fine-tuned version of benchang1110/TaiVisionLM-base-v1. We fine-tuned it on 1M image-text pairs so that it generates longer and more detailed image descriptions.

Task Specific Training

- The aligned model will be trained further on tasks such as short captioning, detailed captioning, and simple visual question answering. We will carry out this stage once the dataset is ready!

- Developed by: benchang1110

- Model type: Image-Text-to-Text

- Language(s) (NLP): Traditional Chinese

繁體中文

這個模型是一個多模態的語言模型,結合了 SigLIP 作為其視覺編碼器,並使用 Tinyllama 作為語言模型。視覺投影器將這兩種模態結合在一起。

其架構與 PaliGemma 非常相似。

以下是開發過程的摘要:

- 單模態預訓練

- 在這個階段,我利用了 google/siglip-base-patch16-224-multilingual 的圖像編碼器,以及我們自己訓練的語言模型(Taiwan-tinyllama-v1.0-chat)。

- 特徵對齊

- 我們使用了100萬個圖片和文本的配對來訓練圖像投影器 (visual projector),並使用 LoRA 來微調語言模型的權重。

這個模型是 benchang1110/TaiVisionLM-base-v1 的微調版本。我們使用了100萬個圖片和文本的配對來微調模型。微調後的模型將生成更長、更詳細的圖片描述。

- 任務特定訓練

- 對齊後的模型將進行進一步的訓練,針對短描述、詳細描述和簡單視覺問答等任務。我們將在數據集準備好後進行這一階段的訓練!

- 創作者: benchang1110

- 模型類型: Image-Text-to-Text

- 語言: 繁體中文

How to Get Started with the Model

English

In Transformers, you can load the model and do inference as follows:

IMPORTANT NOTE: TaiVisionLM is not yet natively integrated into the Transformers library, so you need to set trust_remote_code=True when loading the model. This downloads configuration_taivisionlm.py, modeling_taivisionlm.py, and processing_taivisionlm.py from the repo. You can review these files under the Files and Versions tab and pin a specific revision if you are concerned about malicious code.

```python
from transformers import AutoProcessor, AutoModelForCausalLM
from PIL import Image
import requests
import torch

model_id = "benchang1110/TaiVisionLM-base-v2"

# trust_remote_code=True is required because the model classes live in the repo.
processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    trust_remote_code=True,
    torch_dtype=torch.float16,
    attn_implementation="sdpa",
).to("cuda")
model.eval()

# Download the example image and convert it to RGB.
url = "https://media.wired.com/photos/598e35fb99d76447c4eb1f28/master/pass/phonepicutres-TA.jpg"
image = Image.open(requests.get(url, stream=True).raw).convert("RGB")

text = "描述圖片"  # "Describe the image"
inputs = processor(text=text, images=image, return_tensors="pt", padding=False).to("cuda")

# Generate up to 512 tokens in total and decode the first (only) sequence.
outputs = processor.tokenizer.decode(model.generate(**inputs, max_length=512)[0])
print(outputs)
```
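The note above suggests pinning a specific version if you are wary of remote code. One possible way to do that, sketched here, is to pass the `revision` argument of `from_pretrained` with a commit hash copied from the Files and Versions tab. The `PINNED_REVISION` value is a placeholder rather than a real commit, and `pinned_kwargs` and `load_pinned` are hypothetical helper names used only for illustration.

```python
MODEL_ID = "benchang1110/TaiVisionLM-base-v2"
# Placeholder only: replace with a commit hash you have reviewed.
PINNED_REVISION = "<commit-sha>"


def pinned_kwargs(revision: str) -> dict:
    """Keyword arguments shared by the processor and model loaders."""
    if not revision or revision.startswith("<"):
        raise ValueError("set PINNED_REVISION to a reviewed commit hash first")
    return {"trust_remote_code": True, "revision": revision}


def load_pinned(revision: str = PINNED_REVISION):
    """Load processor and model so the remote code comes from one fixed commit."""
    # Imported lazily so pinned_kwargs can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoProcessor

    kwargs = pinned_kwargs(revision)
    processor = AutoProcessor.from_pretrained(MODEL_ID, **kwargs)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, **kwargs)
    return processor, model
```

With a pinned revision, later pushes to the repo do not change the code that runs on your machine until you review and update the hash yourself.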

中文

利用 transformers,可以用下面程式碼進行推論:

重要通知: 台視 (TaiVisionLM) 還沒被整合進transformers,因此在下載模型時要使用 trust_remote_code=True,下載模型將會使用configuration_taivisionlm.py、 modeling_taivisionlm.py 和 processing_taivisionlm.py 這三個檔案,若擔心有惡意程式碼,請先點選右方 Files and Versions 來查看程式碼內容。

```python
from transformers import AutoProcessor, AutoModelForCausalLM
from PIL import Image
import requests
import torch

model_id = "benchang1110/TaiVisionLM-base-v2"

# trust_remote_code=True is required because the model classes live in the repo.
processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    trust_remote_code=True,
    torch_dtype=torch.float16,
    attn_implementation="sdpa",
).to("cuda")
model.eval()

# Download the example image and convert it to RGB.
url = "https://media.wired.com/photos/598e35fb99d76447c4eb1f28/master/pass/phonepicutres-TA.jpg"
image = Image.open(requests.get(url, stream=True).raw).convert("RGB")

text = "描述圖片"  # "Describe the image"
inputs = processor(text=text, images=image, return_tensors="pt", padding=False).to("cuda")

# Generate up to 512 tokens in total and decode the first (only) sequence.
outputs = processor.tokenizer.decode(model.generate(**inputs, max_length=512)[0])
print(outputs)
```

Comparison with the prior model (benchang1110/TaiVisionLM-base-v1)

- TaiVisionLM-base-v1:

卡通插圖描繪掛在家門口的標誌,上下方以卡通插圖的方式呈現。

- TaiVisionLM-base-v2:

這張圖片呈現了發人深省的對比。圖片中央,白色文字中的「Smile」以粗體黑色字母書寫。文字略微有些傾斜,為原本靜止的圖片增添了動感。背景是一個鮮明的白色,突顯文字並確立其在圖片中的重要性。 背景並非僅僅是白色的;它與黑色文字形成鮮明對比,創造出引人注目的視覺效果。文字、背景和形狀和諧合作,每個元素都互相襯托,形成和諧的構圖。 圖片底部右角有微妙的脊狀邊緣。脊狀的輪廓為圖片增添了一種深度,吸引觀眾的注意力,探索圖片的整體背景。脊狀邊緣與圖片整體的設計相輔相成,增強了節奏和能量氛圍。 整體而言,這張圖片是一個色彩和形狀的和諧結合,每個元素都經過精心放置,創造出視覺上令人愉悅的構圖。使用黑色、粗體字和微妙的脊狀邊緣增添了神秘感,將其印象擴展到更深層,既引人入勝又引人思考。

- TaiVisionLM-base-v1:

這是一幅攝影作品,展示了巴黎的鐵塔被水景所環繞

- TaiVisionLM-base-v2:

這幅圖片捕捉到法國著名地標艾菲爾鐵塔的令人驚嘆的景觀。天空呈現明亮的藍色,與周圍的綠意交織,形成令人驚嘆的構圖。這座高聳的拱門塗上淺棕色的艾菲爾鐵塔,自豪地矗立在畫面右側。它旁邊是河流,它的平靜水域反射著上方的藍天。 在遠處,其他著名地標的蹤影可見,包括一座標誌性的橋樑和一座城堡般的摩天大樓,為場景增添深度和尺度。前景中的樹木增添了一抹綠意,為鐵塔的淺褐色和藍天的色彩提供了清新的對比。 這張圖片是從水面上觀看艾菲爾鐵塔的角度拍攝的,提供了對整個景觀的鳥瞰視角。這個視角可以全面地觀察到艾菲爾鐵塔及其周圍環境,展現了它的壯麗以及位於其中的生命。這張圖片中沒有任何虛構的內容,所有描述都是基於圖片中可見的元素。

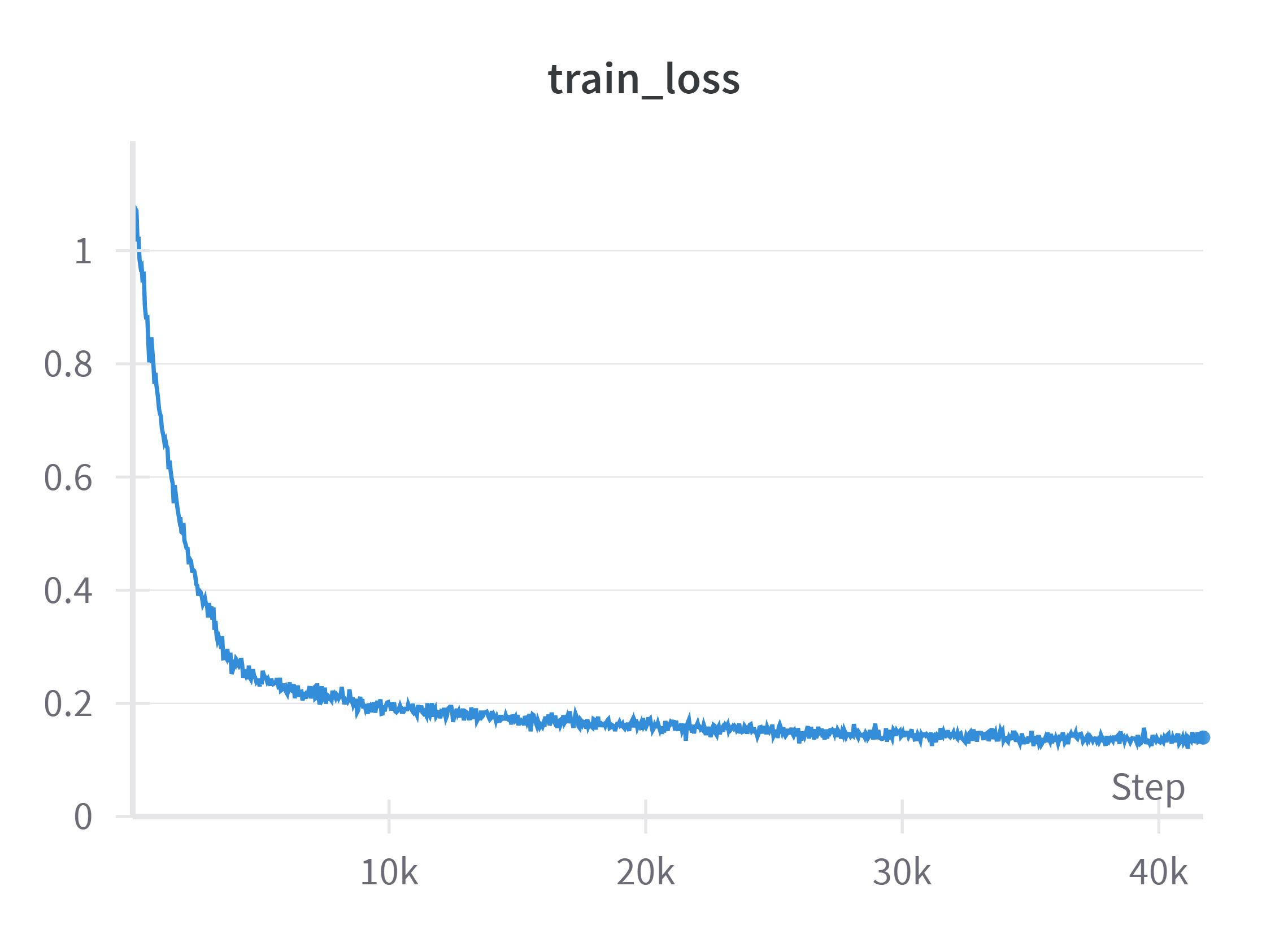

Training Procedure

- Feature Alignment

| Data size | Global Batch Size | Learning Rate | Epochs | Max Length | Weight Decay |
|---|---|---|---|---|---|
| 1.35M | 4 | 5e-3 | 1 | 1024 | 0 |

We use full-parameter finetuning for the projector and apply LoRA to the language model.

We will update the training procedure once we have more resources to train the model on the whole dataset.
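For readers unfamiliar with LoRA, the general formulation is shown below. It describes the LoRA method in general; the rank, scaling factor, and target layers used for this model are not stated in this card. Each adapted pretrained weight $W_0$ stays frozen and only a low-rank update is learned:

```latex
h = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
```

Only $A$ and $B$ are trained, so each adapted layer contributes $r(d + k)$ trainable parameters instead of $dk$, which helps this stage fit on a single GPU.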

Compute Infrastructure

- Feature Alignment: 1x V100 (32GB), approximately 45 GPU hours.
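As a rough sanity check, the figures above can be turned into a step count and an average throughput. This is only an estimate under stated assumptions: "1.35M" is read as exactly 1,350,000 samples, and the 45 GPU hours on a single V100 are treated as 45 wall-clock hours.

```python
# Values copied from the Feature Alignment table and the compute notes.
# Assumptions: "1.35M" means exactly 1,350,000 samples, and 45 GPU hours
# on one GPU equal 45 wall-clock hours.
DATA_SIZE = 1_350_000
GLOBAL_BATCH_SIZE = 4
EPOCHS = 1
GPU_HOURS = 45


def optimizer_steps(data_size: int, batch_size: int, epochs: int) -> int:
    """Number of optimizer steps, counting a final partial batch as one step."""
    steps_per_epoch = -(-data_size // batch_size)  # ceiling division
    return steps_per_epoch * epochs


def samples_per_second(data_size: int, epochs: int, gpu_hours: float) -> float:
    """Average training throughput over the whole run."""
    return data_size * epochs / (gpu_hours * 3600)


steps = optimizer_steps(DATA_SIZE, GLOBAL_BATCH_SIZE, EPOCHS)
rate = samples_per_second(DATA_SIZE, EPOCHS, GPU_HOURS)
print(f"optimizer steps: {steps:,}")
print(f"approximate throughput: {rate:.1f} samples/s")
```

Under these assumptions the run comes to 337,500 optimizer steps at roughly 8 samples per second.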