
# Patroni cluster (with Zookeeper) in a docker swarm on a local machine

Intro

-----

Anyone who stores crucial data (in an SQL database in particular) sooner or later starts thinking about building some kind of safe cluster, a distant guardian that protects consistency and availability at all times. Even if the main server with all the precious data gets knocked out for good, the show must go on, right? This basically means the database must stay available and its data must be up to date with what was on the failed server.

As you might have noticed, there are dozens of ways to go, and Patroni is just one of them. There are plenty of articles providing a more or less detailed comparison of the available options, so I assume I'm free to skip the part where I lure you over to Patroni's side. Let's start from the point where you are already leaning towards Patroni and are willing to try it out in a more or less realistic setup.

As for myself, I did try a couple of other solutions and in one of them (won't name it) my issue seems to be still hanging open and not answered on their GitHub even though months have passed.

Btw, I am not a DevOps engineer originally, so when the need for a high-availability cluster arose and I set off, I hit every single bump and rock down the road. I hope this tutorial helps you get the job done with as little pain as possible.

If you don't want any more explanations and lead-ins, jump right in.

Otherwise, you might want to read some more notes on the setup I went on with.

### Do we need one more Patroni tut?

Let's face it, there are quite enough tutorials published on how to set up a Patroni cluster. This one covers deployment in a docker swarm with Zookeeper as a DCS. So why Zookeeper and why docker swarm?

#### Why Zookeeper?

Actually, it's something you might want to consider seriously when choosing a Patroni setup for production.

The thing is that Patroni relies on a third-party service to establish and maintain communication among its nodes, the so-called DCS (Distributed Configuration Store).

If you have already studied tutorials on Patroni you probably noticed that the most common case is to implement communication through the 'etcd' cluster.

The notable thing about etcd is here (from its faq page):

```

Since etcd writes data to disk, its performance strongly depends on disk

performance. For this reason, SSD is highly recommended.

```

If you don't have an SSD on each machine where you plan to run your etcd cluster, it's probably not a good idea to choose it as a DCS for Patroni. In a real production scenario, it is possible that you simply overwhelm your etcd cluster, which might lead to IO errors. Doesn't sound good, right?

So here comes Zookeeper, which keeps its working data in memory and might actually come in handy if your servers lack SSDs but have plenty of RAM.

#### Why docker swarm?

In my situation, I had no other choice, as it was one of the business requirements to set it up in a docker swarm. So if circumstances make it your case as well, you're in exactly the right spot!

But for the rest of the readers, who are here for "testing and trying" purposes, it's quite a decent choice too, as you don't need to install or prepare any third-party services (except for docker, of course) or put dozens of dependencies on your machine.

Guess it's not far from the truth that we all have Docker engine installed and set up everywhere anyway and it's convenient to keep everything in containers. With one-command tuning, docker is good enough to run your first Patroni cluster locally without virtual machines, Kubernetes, and such.

So if you don't want to dig into other tools and want to accomplish everything neat and clean in a well-known docker environment this tutorial could be the right way to go.

Some extra

----------

In this tutorial, I'm also planning to show various ways to check on the cluster stats (to be concrete, I'll cover 3 of them) and provide a simple script and strategy for a test run.

I suppose that's enough talking, let's go ahead and start practicing.

Docker swarm

------------

For a quick test of deployment in the docker swarm, we don't really need multiple nodes in our cluster. As we are able to scale our services as needed (imitating failing nodes), we are going to be fine with just one node working in swarm mode.

I assume that you already have the Docker engine installed and running. From this point all you need is to run this command:

```

docker swarm init

# now check your single-node cluster

docker node ls

ID HOSTNAME STATUS AVAILABILITY

a9ej2flnv11ka1hencoc1mer2 * floitet Ready Active

```

> The most important feature of the docker swarm is that we are now able to manipulate not just simple containers, but services. Services are basically abstractions on top of containers. Referring to the OOP paradigm, docker service would be a class, storing a set of rules and container would be an object of this class. Rules for services are defined in docker-compose files.


Take note of your node's hostname; we're going to make use of it quite soon.

Well, as a matter of fact, that's pretty much it for the docker swarm setup.

Seems like we're doing fine so far, let's keep up!

Zookeeper

---------

Before we start deploying Patroni services we need to set up the DCS first, which is Zookeeper in our case. I'm gonna go for the 3.4 version. From my experience, it works just fine.

Below are the full docker-compose config and some notes on the details I think deserve a few words.

docker-compose-zookeeper.yml

```

version: '3.7'

services:
  zoo1:
    image: zookeeper:3.4
    hostname: zoo1
    ports:
      - 2191:2181
    networks:
      - patroni
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.hostname == floitet
      restart_policy:
        condition: any

  zoo2:
    image: zookeeper:3.4
    hostname: zoo2
    networks:
      - patroni
    ports:
      - 2192:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=0.0.0.0:2888:3888 server.3=zoo3:2888:3888
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.hostname == floitet
      restart_policy:
        condition: any

  zoo3:
    image: zookeeper:3.4
    hostname: zoo3
    networks:
      - patroni
    ports:
      - 2193:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=0.0.0.0:2888:3888
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.hostname == floitet
      restart_policy:
        condition: any

networks:
  patroni:
    driver: overlay
    attachable: true

```

The important thing, of course, is to give every node its own unique service name and published port. The hostname should preferably be the same as the service name.

```

zoo1:
  image: zookeeper:3.4
  hostname: zoo1
  ports:
    - 2191:2181

```

Notice how we list servers in this line, changing the service we bind depending on the service number. So for the first (zoo1) service server.1 is bound to 0.0.0.0, but for zoo2 it will be the server.2 accordingly.

```

ZOO_SERVERS: server.1=0.0.0.0:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888

```
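To make the pattern explicit, here is a minimal Python sketch that generates the `ZOO_SERVERS` value for each node. The function name `zoo_servers` is my own and is not part of any Zookeeper tooling; it simply assumes the `zooN` service names and the 2888/3888 ports used in the compose file above.

```python
def zoo_servers(my_id: int, total: int = 3) -> str:
    """Build the ZOO_SERVERS value for the node with the given id."""
    entries = []
    for n in range(1, total + 1):
        # A node refers to itself as 0.0.0.0 and to its peers by service name.
        host = "0.0.0.0" if n == my_id else f"zoo{n}"
        entries.append(f"server.{n}={host}:2888:3888")
    return " ".join(entries)


for node_id in (1, 2, 3):
    print(f"zoo{node_id}: {zoo_servers(node_id)}")
```

Each printed line should match the corresponding `ZOO_SERVERS` entry in docker-compose-zookeeper.yml, which is a handy sanity check if you ever grow the ensemble.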

This is how we control the deployment among nodes. As we have only one node, we set a constraint to this node for all services. When you have multiple nodes in your docker swarm cluster and want to spread services among them, just replace the value of node.hostname with the name of the desired node (use the 'docker node ls' command to find it).

```

placement:
  constraints:
    - node.hostname == floitet

```

And the final thing we need to take care of is the network. We're going to deploy Zookeeper and Patroni clusters in one overlay network so that they could communicate with each other in an isolated environment using the service names.

```

networks:
  patroni:
    driver: overlay
    # we need to mark this network as attachable
    # to be able to connect patroni services to it later on
    attachable: true

```

Guess it's time to deploy the thing!

```

sudo docker stack deploy --compose-file docker-compose-zookeeper.yml patroni

```

Now let's check if the job is done right. The first step to take is this:

```

sudo docker service ls

gxfj9rs3po7z patroni_zoo1 replicated 1/1 zookeeper:3.4 *:2191->2181/tcp

ibp0mevmiflw patroni_zoo2 replicated 1/1 zookeeper:3.4 *:2192->2181/tcp

srucfm8jrt57 patroni_zoo3 replicated 1/1 zookeeper:3.4 *:2193->2181/tcp

```

And the second step is to actually ping the zookeeper service with a special four-letter-word command:

```

echo mntr | nc localhost 2191

# the output should look something like this

zk_version 3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT

zk_avg_latency 6

zk_max_latency 205

zk_min_latency 0

zk_packets_received 1745

zk_packets_sent 1755

zk_num_alive_connections 3

zk_outstanding_requests 0

zk_server_state follower

zk_znode_count 16

zk_watch_count 9

zk_ephemerals_count 4

zk_approximate_data_size 1370

zk_open_file_descriptor_count 34

zk_max_file_descriptor_count 1048576

zk_fsync_threshold_exceed_count 0

```

This means that the zookeeper node is responding and doing its job. You could also check the zookeeper service logs if you wish:

```

# general form; the service id (or name) comes from 'docker service ls'
docker service logs <zookeeper-service-id>

# in my case it could be
docker service logs gxfj9rs3po7z

```
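If you'd rather script the `mntr` check than eyeball it, the following Python sketch does roughly what `echo mntr | nc` does and turns the reply into a dictionary. The helper names are hypothetical (mine, not Zookeeper's), and the ports 2191-2193 are the published ports from the compose file above.

```python
import socket


def send_four_letter_word(host: str, port: int, word: str = "mntr") -> str:
    """Send a four-letter word to a ZooKeeper node and return its reply."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(word.encode("ascii"))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mntr(reply: str) -> dict:
    """Turn 'key<whitespace>value' lines into a dictionary of strings."""
    stats = {}
    for line in reply.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2:
            stats[parts[0]] = parts[1].strip()
    return stats


def is_serving(stats: dict) -> bool:
    """In a multi-node ensemble a healthy node is a 'leader' or a 'follower'."""
    return stats.get("zk_server_state") in ("leader", "follower")
```

A call such as `is_serving(parse_mntr(send_four_letter_word("localhost", 2191)))` would then check the first node; repeating it for 2192 and 2193 covers the whole ensemble.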

Okay, great! Now we have the Zookeeper cluster running, so it's time to move on and finally get to Patroni itself.

Patroni

-------

And here we are, down to the main part of the tutorial, where we handle the Patroni cluster deployment. The first thing I need to mention is that we actually need to build a Patroni image before we move forward. I'll try to be as detailed and precise as possible, showing the most important parts we need to be aware of while managing this task.

We're going to need multiple files to get the job done so you might want to keep them together. Let's create a 'patroni-test' directory and cd to it. Below we are going to discuss the files that we need to create there.

* **patroni.yml**

This is the main config file. The thing about Patroni is that we can set parameters in different places, and this is one of them. This file gets copied into our custom docker image, so updating it requires rebuilding the image. I personally prefer to store here the parameters I see as 'stable' or 'permanent', the ones I'm not planning to change a lot. Below I provide a very basic config. You might want to configure more parameters for the PostgreSQL engine (e.g. max\_connections), but for a test deployment I think this one should be fine.

patroni.yml

```

scope: patroni
namespace: /service/

bootstrap:
  dcs:
    ttl: 30
    loop_wait: 10
    retry_timeout: 10
    maximum_lag_on_failover: 1048576
    postgresql:
      use_pg_rewind: true

  postgresql:
    use_pg_rewind: true

  initdb:
    - encoding: UTF8
    - data-checksums

  pg_hba:
    - host replication all all md5
    - host all all all md5

zookeeper:
  hosts:
    - zoo1:2181
    - zoo2:2181
    - zoo3:2181

postgresql:
  data_dir: /data/patroni
  bin_dir: /usr/lib/postgresql/11/bin
  pgpass: /tmp/pgpass
  parameters:
    unix_socket_directories: '.'

tags:
  nofailover: false
  noloadbalance: false
  clonefrom: false
  nosync: false

```

What we should be aware of is that we need to specify 'bin\_dir' correctly for Patroni to find the Postgres binaries. In my use case, I have Postgres 11, so my directory looks like this: '/usr/lib/postgresql/11/bin'. This is the directory Patroni will look in inside the container. And 'data\_dir' is where the data will be stored inside the container. Later on, we'll bind it to an actual volume on our drive so as not to lose all the data if the Patroni cluster fails for some reason.

```

postgresql:
  data_dir: /data/patroni
  bin_dir: /usr/lib/postgresql/11/bin

```

I also list all the zookeeper servers here to feed them to patronictl later. Note that if you don't specify them here, we'll end up with a broken Patroni command-line tool (patronictl). Also, I'd like to point out that we don't use IPs to locate the zookeeper servers; we feed Patroni 'service names' instead. It's a feature of docker swarm we are taking advantage of.

```

zookeeper:
  hosts:
    - zoo1:2181
    - zoo2:2181
    - zoo3:2181

```

* **patroni\_entrypoint.sh**

The next one is where most settings come from in my setup. It's a script that will be executed after the docker container is started.

patroni\_entrypoint.sh

```

#!/bin/sh

readonly CONTAINER_IP=$(hostname --ip-address)

readonly CONTAINER_API_ADDR="${CONTAINER_IP}:${PATRONI_API_CONNECT_PORT}"

readonly CONTAINER_POSTGRE_ADDR="${CONTAINER_IP}:5432"

export PATRONI_NAME="${PATRONI_NAME:-$(hostname)}"

export PATRONI_RESTAPI_CONNECT_ADDRESS="$CONTAINER_API_ADDR"

export PATRONI_RESTAPI_LISTEN="$CONTAINER_API_ADDR"

export PATRONI_POSTGRESQL_CONNECT_ADDRESS="$CONTAINER_POSTGRE_ADDR"

export PATRONI_POSTGRESQL_LISTEN="$CONTAINER_POSTGRE_ADDR"

export PATRONI_REPLICATION_USERNAME="$REPLICATION_NAME"

export PATRONI_REPLICATION_PASSWORD="$REPLICATION_PASS"

export PATRONI_SUPERUSER_USERNAME="$SU_NAME"

export PATRONI_SUPERUSER_PASSWORD="$SU_PASS"

export PATRONI_approle_PASSWORD="$POSTGRES_APP_ROLE_PASS"

export PATRONI_approle_OPTIONS="${PATRONI_admin_OPTIONS:-createdb, createrole}"

exec /usr/local/bin/patroni /etc/patroni.yml

```

Important! The main point of even having ***patroni\_entrypoint.sh*** is that Patroni simply won't start without knowing the IP address of its host. Since the host is a docker container, we first need to find out which IP was granted to the container and only then execute the Patroni start-up command. This crucial task is handled here:

```

readonly CONTAINER_IP=$(hostname --ip-address)

readonly CONTAINER_API_ADDR="${CONTAINER_IP}:${PATRONI_API_CONNECT_PORT}"

readonly CONTAINER_POSTGRE_ADDR="${CONTAINER_IP}:5432"

...

export PATRONI_RESTAPI_CONNECT_ADDRESS="$CONTAINER_API_ADDR"

export PATRONI_RESTAPI_LISTEN="$CONTAINER_API_ADDR"

export PATRONI_POSTGRESQL_CONNECT_ADDRESS="$CONTAINER_POSTGRE_ADDR"

```

As you can see, in this script we take advantage of the 'Environment configuration' available for Patroni. It's another place, aside from the patroni.yml config file, where we can set parameters. 'PATRONI\_RESTAPI\_CONNECT\_ADDRESS', 'PATRONI\_RESTAPI\_LISTEN', and 'PATRONI\_POSTGRESQL\_CONNECT\_ADDRESS' are environment variables Patroni knows about and applies automatically as setup parameters. And btw, they override the ones set locally in patroni.yml.

And here is another thing. Patroni docs do not recommend using the superuser to connect your apps to the database. So we are going to use another user for connections, which can be created with the lines below. It is also set through special env variables Patroni is aware of. Just replace 'approle' with any name you prefer for this user.

```

export PATRONI_approle_PASSWORD="$POSTGRES_APP_ROLE_PASS"

export PATRONI_approle_OPTIONS="${PATRONI_admin_OPTIONS:-createdb, createrole}"

```

And with this last line, when everything is ready for the start, we execute Patroni, pointing it to patroni.yml:

```

exec /usr/local/bin/patroni /etc/patroni.yml

```
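To make the address handling in patroni\_entrypoint.sh easier to follow, here is the same derivation sketched in Python. This is only an illustration of what the shell script computes, not something Patroni ships; `patroni_env` and `current_ip` are names I made up, and `current_ip` is just a rough stand-in for `hostname --ip-address`.

```python
import socket


def patroni_env(container_ip: str, api_port: int, pg_port: int = 5432) -> dict:
    """Mirror the address-related exports of patroni_entrypoint.sh."""
    api_addr = f"{container_ip}:{api_port}"
    pg_addr = f"{container_ip}:{pg_port}"
    return {
        "PATRONI_RESTAPI_CONNECT_ADDRESS": api_addr,
        "PATRONI_RESTAPI_LISTEN": api_addr,
        "PATRONI_POSTGRESQL_CONNECT_ADDRESS": pg_addr,
        "PATRONI_POSTGRESQL_LISTEN": pg_addr,
    }


def current_ip() -> str:
    """Resolve this host's own name to an IP address."""
    return socket.gethostbyname(socket.gethostname())
```

Calling `patroni_env(current_ip(), 8091)` inside a container would yield the four variables the entrypoint exports before it starts Patroni.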

* **Dockerfile**

As for the Dockerfile I decided to keep it as simple as possible. Let's see what we've got here.

Dockerfile

```

FROM postgres:11

RUN apt-get update -y \
    && apt-get install python3 python3-pip -y \
    && pip3 install --upgrade setuptools \
    && pip3 install psycopg2-binary \
    && pip3 install patroni[zookeeper] \
    && mkdir /data/patroni -p \
    && chown postgres:postgres /data/patroni \
    && chmod 700 /data/patroni

COPY patroni.yml /etc/patroni.yml
COPY patroni_entrypoint.sh ./entrypoint.sh
USER postgres

ENTRYPOINT ["bin/sh", "/entrypoint.sh"]

```

The most important thing here is the directory we create inside the container and its owner. Later, when we mount it to a volume on our hard drive, we're gonna need to take care of it the same way we do here in the Dockerfile.

```

# the owner should be 'postgres' and the mode is 700
    && mkdir /data/patroni -p \
    && chown postgres:postgres /data/patroni \
    && chmod 700 /data/patroni
...
# we set the active user inside the container to postgres
USER postgres

```

The files we created earlier are copied here:

```

COPY patroni.yml /etc/patroni.yml

COPY patroni_entrypoint.sh ./entrypoint.sh

```

And, as mentioned above, at the start we want to execute our entry point script:

```

ENTRYPOINT ["bin/sh", "/entrypoint.sh"]

```

That's it for handling the pre-requisites. Now we can finally build our patroni image. Let's give it a sound name 'patroni-test':

```

docker build -t patroni-test .

```

When the image is ready we can discuss the last, but not least, file we're gonna need here: the compose file, of course.

* **docker-compose-patroni.yml**

A well-configured compose file is something really crucial in this scenario, so let's pinpoint what we should take care of and which details we need to keep in mind.

docker-compose-patroni.yml

```

version: "3.4"

networks:
  patroni_patroni:
    external: true

services:
  patroni1:
    image: patroni-test
    networks: [ patroni_patroni ]
    ports:
      - 5441:5432
      - 8091:8091
    hostname: patroni1
    volumes:
      - /patroni1:/data/patroni
    environment:
      PATRONI_API_CONNECT_PORT: 8091
      REPLICATION_NAME: replicator
      REPLICATION_PASS: replpass
      SU_NAME: postgres
      SU_PASS: supass
      POSTGRES_APP_ROLE_PASS: appass
    deploy:
      replicas: 1
      placement:
        constraints: [node.hostname == floitet]

  patroni2:
    image: patroni-test
    networks: [ patroni_patroni ]
    ports:
      - 5442:5432
      - 8092:8091
    hostname: patroni2
    volumes:
      - /patroni2:/data/patroni
    environment:
      PATRONI_API_CONNECT_PORT: 8091
      REPLICATION_NAME: replicator
      REPLICATION_PASS: replpass
      SU_NAME: postgres
      SU_PASS: supass
      POSTGRES_APP_ROLE_PASS: appass
    deploy:
      replicas: 1
      placement:
        constraints: [node.hostname == floitet]

  patroni3:
    image: patroni-test
    networks: [ patroni_patroni ]
    ports:
      - 5443:5432
      - 8093:8091
    hostname: patroni3
    volumes:
      - /patroni3:/data/patroni
    environment:
      PATRONI_API_CONNECT_PORT: 8091
      REPLICATION_NAME: replicator
      REPLICATION_PASS: replpass
      SU_NAME: postgres
      SU_PASS: supass
      POSTGRES_APP_ROLE_PASS: appass
    deploy:
      replicas: 1
      placement:
        constraints: [node.hostname == floitet]

```

Also important: the first detail that pops up is the network we talked about earlier. We want to deploy the Patroni services in the same network as the Zookeeper services. This way the 'zoo1', 'zoo2', 'zoo3' names we listed in ***patroni.yml*** as zookeeper servers are going to work out for us.

```

networks:
  patroni_patroni:
    external: true

```

As for the ports, we have a database and an API, and each of them requires its own pair of ports.

```

ports:
  - 5441:5432
  - 8091:8091
...
environment:
  PATRONI_API_CONNECT_PORT: 8091
  # make sure the Patroni API connect port is the same
  # as the target port published for the docker service

```

Of course, we also need to provide the rest of the environment variables we promised when configuring our entry point script for Patroni, but that's not all. There is an issue with the mount directory we need to take care of.

```

volumes:
  - /patroni3:/data/patroni

```

As you can see, the '/data/patroni' directory we create in the Dockerfile is mounted to a local folder that we actually need to create. And not only create, but also give the proper owner and access mode, just like in this example:

```

sudo mkdir /patroni3
sudo chown 999:999 /patroni3
sudo chmod 700 /patroni3

# 999 is the default uid for the postgres user
# repeat these steps for each patroni service mount dir

```

With all these steps done properly, we are ready to deploy the Patroni cluster at last:

```

sudo docker stack deploy --compose-file docker-compose-patroni.yml patroni

```

After the deployment has finished, we should see something like this in the service logs, indicating that the cluster is doing well:

```

INFO: Lock owner: patroni3; I am patroni1

INFO: does not have lock

INFO: no action. i am a secondary and i am following a leader

```

But it would be painful if we had no choice but to read through the logs every time we want to check on the cluster health, so let's dig into patronictl. What we need to do is get the id of the actual container running any of the Patroni services:

```

sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a0090ce33a05 patroni-test:latest "bin/sh /entrypoint.…" 3 hours ago Up 3 hours 5432/tcp patroni_patroni1.1.tgjzpjyuip6ge8szz5lsf8kcq

...

```

And simply exec into this container with the following command:

```

sudo docker exec -ti a0090ce33a05 /bin/bash

# inside the container
# we need to specify the cluster name to list its members
# it is the 'scope' parameter in patroni.yml ('patroni' in our case)
patronictl list patroni

# and oops

Error: 'Can not find suitable configuration of distributed configuration store\nAvailable implementations: exhibitor, kubernetes, zookeeper'

```

The thing is that patronictl requires patroni.yml to retrieve the info about the zookeeper servers. It doesn't know where we put our config, so we need to explicitly specify its path like so:

```

patronictl -c /etc/patroni.yml list patroni

# and here is the nice output with the current states

+ Cluster: patroni (6893104757524385823) --+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+-----------+---------+---------+----+-----------+

| patroni1 | 10.0.1.93 | Replica | running | 8 | 0 |

| patroni2 | 10.0.1.91 | Replica | running | 8 | 0 |

| patroni3 | 10.0.1.92 | Leader | running | 8 | |

+----------+-----------+---------+---------+----+-----------+

```
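If you want to use this table in a script (say, to find out who the leader is), a small parser is enough. The sketch below is a hedged example: the function names are mine, and it assumes the column layout shown above, which may differ in other Patroni versions.

```python
def parse_patronictl_list(table: str) -> list:
    """Extract member rows from the ASCII table printed by 'patronictl list'."""
    members = []
    header = None
    for line in table.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue  # skip the '+---' borders and the cluster title line
        cells = [cell.strip() for cell in line[1:-1].split("|")]
        if header is None:
            header = cells  # the first '|' row holds the column names
        else:
            members.append(dict(zip(header, cells)))
    return members


def find_leader(members: list):
    """Return the name of the member whose role is 'Leader', if any."""
    for member in members:
        if member.get("Role") == "Leader":
            return member.get("Member")
    return None
```

Feeding it the output above gives three members, with `find_leader` pointing at patroni3.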

HA Proxy

--------

Now everything seems to be set the way we wanted, and we can easily access PostgreSQL on the leader service and perform the operations we need. But there is one last problem to get rid of: how do we know where the leader is at runtime? Do we have to check every time and manually switch to another node when the leader crashes? That'd be extremely unpleasant, no doubt. No worries, this is a job for HAProxy. Just like we did with Patroni, we might want to create a separate folder for all the build/deploy files and then create the following files there:

* **haproxy.cfg**

The config file we're gonna need to copy into our custom haproxy image.

haproxy.cfg

```

global
    maxconn 100
    stats socket /run/haproxy/haproxy.sock
    stats timeout 2m # Wait up to 2 minutes for input

defaults
    log global
    mode tcp
    retries 2
    timeout client 30m
    timeout connect 4s
    timeout server 30m
    timeout check 5s

listen stats
    mode http
    bind *:7000
    stats enable
    stats uri /

listen postgres
    bind *:5000
    option httpchk
    http-check expect status 200
    default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
    server patroni1 patroni1:5432 maxconn 100 check port 8091
    server patroni2 patroni2:5432 maxconn 100 check port 8091
    server patroni3 patroni3:5432 maxconn 100 check port 8091

```

Here we specify the ports we want to access the service from:

```

# one is for stats
listen stats
    mode http
    bind *:7000

# the second one is for connections to postgres
listen postgres
    bind *:5000

```

And simply list all the patroni services we have created earlier:

```

server patroni1 patroni1:5432 maxconn 100 check port 8091

server patroni2 patroni2:5432 maxconn 100 check port 8091

server patroni3 patroni3:5432 maxconn 100 check port 8091

```
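It is worth spelling out why listing all three nodes still sends traffic only to the leader. With `option httpchk`, HAProxy probes port 8091 of every node over HTTP, and `http-check expect status 200` keeps a node only when it answers 200. As far as I know, Patroni's REST API answers 200 on `/` only on the leader and 503 on replicas, so only one backend is ever marked as up. The Python sketch below mimics that decision; the function names are hypothetical, and it uses GET while HAProxy's default check sends an OPTIONS request.

```python
import urllib.error
import urllib.request


def probe_status(host: str, port: int = 8091, timeout: float = 3.0):
    """Return the HTTP status of GET / on a Patroni node, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"http://{host}:{port}/", timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 503 from a replica
    except (urllib.error.URLError, OSError):
        return None  # connection refused, timeout, DNS failure


def healthy_backends(statuses: dict) -> list:
    """Keep only the nodes HAProxy would mark as up (status 200)."""
    return sorted(name for name, status in statuses.items() if status == 200)
```

From a container attached to the overlay network, `healthy_backends({n: probe_status(n) for n in ("patroni1", "patroni2", "patroni3")})` should normally return a single name, the current leader.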

And the last thing. We need the line shown below if we want to check our Patroni cluster stats with the HAProxy stats tool in the terminal from within a docker container:

```

stats socket /run/haproxy/haproxy.sock

```

* **Dockerfile**

There's not much to explain in the Dockerfile, I guess it's pretty self-explanatory.

Dockerfile

```

FROM haproxy:1.7

COPY haproxy.cfg /usr/local/etc/haproxy/haproxy.cfg

RUN mkdir /run/haproxy &&\

apt-get update -y &&\

apt-get install -y hatop &&\

apt-get clean

```

* **docker-compose-haproxy.yml**

And the compose file for HAProxy looks this way; it's also quite an easy shot compared to the other services we've already covered:

docker-compose-haproxy.yml

```

version: "3.7"

networks:
  patroni_patroni:
    external: true

services:
  haproxy:
    image: haproxy-patroni
    networks:
      - patroni_patroni
    ports:
      - 5000:5000
      - 7000:7000
    hostname: haproxy
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints: [node.hostname == floitet]

```

After we have all the files created, let's build the image and deploy it:

```

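# build (the trailing dot is the build context)
docker build -t haproxy-patroni .

# deploy; a stack name is required, 'patroni' matches the earlier deployments
docker stack deploy --compose-file docker-compose-haproxy.yml patroni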

```

Once we've got HAProxy up and running, we can exec into its container and check the Patroni cluster stats from there. It's done with the following commands:

```

sudo docker ps | grep haproxy

sudo docker exec -ti $container_id /bin/bash

hatop -s /var/run/haproxy/haproxy.sock

```

And with this command we'll get the output of this kind:

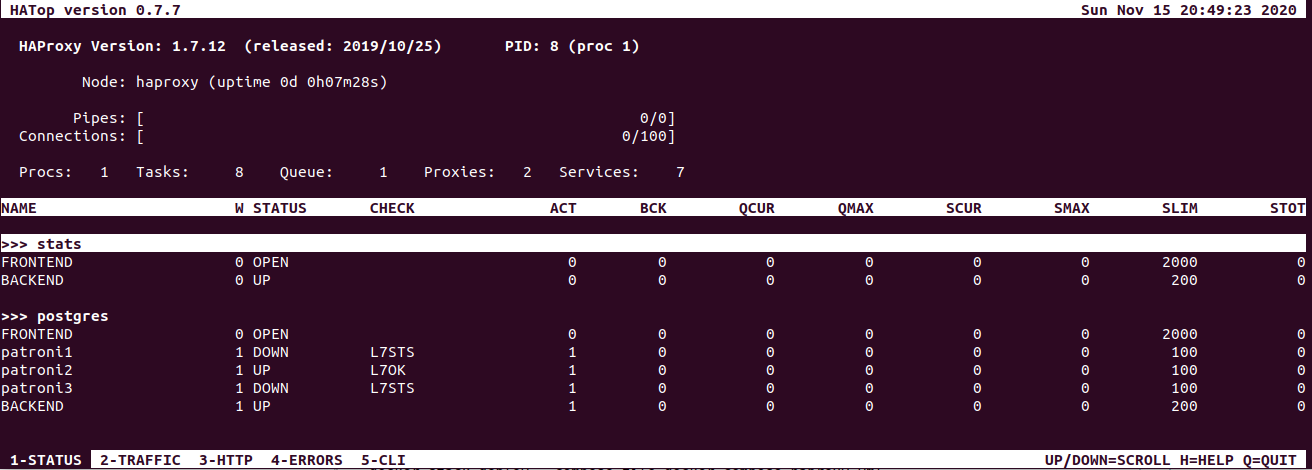

To be honest, I personally prefer to check the status with patronictl, but HAProxy is another option that is nice to have in the admin toolset. At the very beginning, I promised to show 3 ways to access cluster stats. The third way is to use the Patroni API directly, which is a cool way as it provides more extensive info.

Patroni API

-----------

You can find a full, detailed overview of its options in the Patroni docs; here I'm gonna quickly show the most common ones I use and how to use 'em in our docker swarm setup.

We won't be able to access any of the Patroni service APIs from outside the 'patroni\_patroni' network we created to keep all our services together. So what we can do is build a simple custom curl image to retrieve the info in a human-readable format.

Dockerfile

```

FROM alpine:3.10

RUN apk add --no-cache curl jq bash

CMD ["/bin/sh"]

```

Build it with the tag 'curl-jq' (the name used in the command below), then run a container from this image connected to the 'patroni\_patroni' network.

```

docker run --rm -ti --network=patroni_patroni curl-jq

```

Now we can call Patroni nodes by their names and get stats like so:

Node stats

```

curl -s patroni1:8091/patroni | jq

{

"patroni": {

"scope": "patroni",

"version": "2.0.1"

},

"database_system_identifier": "6893104757524385823",

"postmaster_start_time": "2020-11-15 19:47:33.917 UTC",

"timeline": 10,

"xlog": {

"received_location": 100904544,

"replayed_timestamp": null,

"replayed_location": 100904544,

"paused": false

},

"role": "replica",

"cluster_unlocked": false,

"state": "running",

"server_version": 110009

}

```

Cluster stats

```

curl -s patroni1:8091/cluster | jq

{

"members": [

{

"port": 5432,

"host": "10.0.1.5",

"timeline": 10,

"lag": 0,

"role": "replica",

"name": "patroni1",

"state": "running",

"api_url": "http://10.0.1.5:8091/patroni"

},

{

"port": 5432,

"host": "10.0.1.4",

"timeline": 10,

"role": "leader",

"name": "patroni2",

"state": "running",

"api_url": "http://10.0.1.4:8091/patroni"

},

{

"port": 5432,

"host": "10.0.1.3",

"lag": "unknown",

"role": "replica",

"name": "patroni3",

"state": "running",

"api_url": "http://10.0.1.3:8091/patroni"

}

]

}

```
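The `/cluster` response is plain JSON, so it is easy to reduce to the two things you usually care about: who leads and how far the replicas lag. Here is a minimal sketch under the assumption that the payload has the shape shown above; `summarize_cluster` is a name I picked for illustration.

```python
import json


def summarize_cluster(payload: str) -> dict:
    """Reduce a /cluster response to the leader name and per-replica lag."""
    doc = json.loads(payload)
    leader = None
    replicas = {}
    for member in doc.get("members", []):
        if member.get("role") == "leader":
            leader = member.get("name")
        else:
            # 'lag' may be a number, the string "unknown", or missing entirely
            replicas[member.get("name")] = member.get("lag")
    return {"leader": leader, "replicas": replicas}
```

For the response above this would report patroni2 as the leader, a lag of 0 for patroni1, and "unknown" for patroni3.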

Pretty much everything we can do with the Patroni cluster can be done through the Patroni API, so if you want to get to know the available options better, feel free to read the official docs on this topic.

PostgreSQL Connection

---------------------

The same thing here: first run a container from the Postgres image and then connect from within this container.

```

docker run --rm -ti --network=patroni_patroni postgres:11 /bin/bash

# access a specific patroni node
psql --host patroni1 --port 5432 -U approle -d postgres

# access the leader through haproxy
psql --host haproxy --port 5000 -U approle -d postgres

# user 'approle' doesn't have a default database
# so we need to specify one with the '-d' flag

```

Wrap-up

-------

Now we can experiment with the Patroni cluster as if it were an actual 3-node setup by simply scaling services. In my case patroni3 happened to be the leader, so I can go ahead and do this:

```

docker service scale patroni_patroni3=0

```

This command will disable the Patroni service by killing its only running container. Now I can make sure that failover has happened and the leader role moved to another service:

```

postgres@patroni1:/$ patronictl -c /etc/patroni.yml list patroni

+ Cluster: patroni (6893104757524385823) --+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+-----------+---------+---------+----+-----------+

| patroni1 | 10.0.1.93 | Leader | running | 9 | |

| patroni2 | 10.0.1.91 | Replica | running | 9 | 0 |

+----------+-----------+---------+---------+----+-----------+

```

After I scale it back to "1", I'll get my 3-node cluster with patroni3, the former leader, back in shape but in a replica mode.

From this point, you are able to run your experiments with the Patroni cluster and see for yourself how it handles critical situations.
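If you plan to repeat the scale-down experiment a few times, it helps to measure how long the cluster takes to elect a new leader. The sketch below polls until the leader changes; it is deliberately agnostic about where the leader name comes from, so `get_leader` is a hypothetical callable you would supply yourself (for example, one built on patronictl or the Patroni API).

```python
import time


def wait_for_new_leader(get_leader, old_leader, timeout=60.0, interval=1.0,
                        sleep=time.sleep, clock=time.monotonic):
    """Poll get_leader() until it names someone other than old_leader.

    Returns (new_leader, seconds_waited), or (None, seconds_waited) on timeout.
    """
    start = clock()
    while True:
        leader = get_leader()
        waited = clock() - start
        if leader is not None and leader != old_leader:
            return leader, waited
        if waited >= timeout:
            return None, waited
        sleep(interval)
```

The `sleep` and `clock` parameters exist only so the loop can be exercised without real waiting; in normal use the defaults are fine.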

Outroduction

------------

As I promised, I'm going to provide a sample test script and instructions on how to approach it. So if you need something for a quick test run, you are more than welcome to read on. If you already have your own test scenarios in mind and don't need any pre-made solutions, just skip it without any worries.

Patroni cluster test

So for the readers who want to get hands-on with testing the Patroni cluster right away with something pre-made, I created [this script](https://pastebin.com/p23sp83L). It's super simple and I believe you won't have problems getting a grasp of it. Basically, it just writes the current time to the database through the haproxy gateway every second. Below I'm going to show step by step how to approach it and what the outcome of the test run was on my local setup.

* **Step 1.**

I assume you've already downloaded the script from the link and put it somewhere on your machine. If not, do that first and then follow along. From here we'll move on and create a docker container from the official Microsoft SDK image like so:

```

docker run --rm -ti --network=patroni_patroni -v /home/floitet/Documents/patroni-test-script:/home mcr.microsoft.com/dotnet/sdk /bin/bash

```

The important thing is that we get connected to the 'patroni\_patroni' network. Another crucial detail is that we mount the directory where you've put the script into the container. This way you can easily access it from within the container in the '/home' directory.

* **Step 2.**

Now we need to take care of getting the only dll our script needs in order to compile. From the '/home' directory, let's create a new folder for the console app. I'm gonna call it 'patroni-test'. Then cd to this directory and run the following command:

```

dotnet new console

# and the output looks like this:

Processing post-creation actions...

Running 'dotnet restore' on /home/patroni-test/patroni-test.csproj...

Determining projects to restore...

Restored /home/patroni-test/patroni-test.csproj (in 61 ms).

Restore succeeded.

```

And from here we can add the package we'll be using as a dependency for our script:

```

dotnet add package npgsql

```

And after that simply pack the project:

```

dotnet pack

```

If everything went as expected you'll get 'Npgsql.dll' sitting here:

'patroni-test/bin/Debug/net5.0/Npgsql.dll'.

It is exactly the path we reference in our script so if yours differs from mine, you're gonna need to change it in the script.

And what we do next is just run the script:

```

dotnet fsi /home/patroni-test.fsx

// and get the output like this:

11/18/2020 22:29:32 +00:00

11/18/2020 22:29:33 +00:00

11/18/2020 22:29:34 +00:00

```

> Make sure you keep the terminal with the running script open.

* **Step 3.**

Let's check the Patroni cluster to see where the leader is. Using patronictl, the Patroni API, or HAProxy is fine either way. In my case the leader was 'patroni2':

```

+ Cluster: patroni (6893104757524385823) --+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+-----------+---------+---------+----+-----------+

| patroni1 | 10.0.1.18 | Replica | running | 21 | 0 |

| patroni2 | 10.0.1.22 | Leader | running | 21 | |

| patroni3 | 10.0.1.24 | Replica | running | 21 | 0 |

+----------+-----------+---------+---------+----+-----------+

```

So what we need to get done at this point is to open another terminal and fail the leader node:

```

docker service ls | grep patroni

docker service scale $patroni2-id=0

```

After some time, the terminal running the script will start logging errors:

```

// let's memorize the time we got the last successful insert

11/18/2020 22:33:06 +00:00

Error

Error

Error

```

If we check the Patroni cluster status at this point, we might see some delay, with patroni2 still reported as a healthy, running leader. After some time, though, it is marked as failed, and the cluster, after a short leader election, comes to the following state:

```

+ Cluster: patroni (6893104757524385823) --+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+-----------+---------+---------+----+-----------+

| patroni1 | 10.0.1.18 | Replica | running | 21 | 0 |

| patroni3 | 10.0.1.24 | Leader | running | 21 | |

+----------+-----------+---------+---------+----+-----------+

```

If we go back to our script output we should notice that the connection has finally recovered and the logs are as follows:

```

Error

Error

Error

11/18/2020 22:33:48 +00:00

11/18/2020 22:33:49 +00:00

11/18/2020 22:33:50 +00:00

11/18/2020 22:33:51 +00:00

```

* **Step 4.**

Let's go ahead and check if the database is in a proper state after a failover:

```

docker run --rm -ti --network=patroni_patroni postgres:11 /bin/bash

psql --host haproxy --port 5000 -U approle -d postgres

postgres=> \c patronitestdb

You are now connected to database "patronitestdb" as user "approle".

// I set the time a little earlier than the crash happened

patronitestdb=> select * from records where time > '22:33:04' limit 15;

time

-----------------

22:33:04.171641

22:33:05.205022

22:33:06.231735

// as we can see in my case it required around 42 seconds

// for connection to recover

22:33:48.345111

22:33:49.36756

22:33:50.374771

22:33:51.383118

22:33:52.391474

22:33:53.399774

22:33:54.408107

22:33:55.416225

22:33:56.424595

22:33:57.432954

22:33:58.441262

22:33:59.449541

```
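The recovery time can also be worked out from the two timestamps around the gap instead of by eye. The following is a minimal Python sketch, not part of the original test script; the two values are copied from the query output above, and the variable names are only illustrative.

```python
from datetime import datetime

# Timestamps copied from the query output above: the last row written
# before the failover and the first row written after it.
last_before_failover = datetime.strptime("22:33:06.231735", "%H:%M:%S.%f")
first_after_failover = datetime.strptime("22:33:48.345111", "%H:%M:%S.%f")

# Length of the window during which no inserts reached the database.
gap = (first_after_failover - last_before_failover).total_seconds()
print(f"write gap: {gap:.1f} s")
```

Since the script inserts once per second, anything much larger than one second between consecutive rows points at the failover window.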

**Summary**

From this little experiment, we can conclude that Patroni managed to serve its purpose. After the failure occurred and the leader was re-elected we managed to reconnect and keep working with the database. And the previous data is all present on the leader node which is what we expected. Maybe it could have switched to another node a little faster than in 42 seconds, but at the end of the day, it's not that critical.

I suppose we should consider our work with this tutorial finished. Hope it helped you figure out the basics of a Patroni cluster setup and hopefully it was useful. Thanks for your attention and let the Patroni guardian keep you and your data safe at all times! | https://habr.com/ru/post/527370/ | null | null | 5,638 | 52.09 |

29 August 2012 11:59 [Source: ICIS news]

SINGAPORE (ICIS)--

Jiangsu Sopo made a net profit of CNY7.47m in the same period a year earlier.

The company’s operating loss for the period was down by more than four times at CNY27.2m, according to the statement.

Jiangsu Sopo produced 52,400 tonnes of caustic soda from January to June this year, which represents 44.4% of its yearly target in 2012, down by 3.5% compared with the same period in 2011, according to the statement.

Furthermore, the company invested CNY28.5m in the construction of various projects in the first half of this year. An 80,000 tonne/year ion-exchange membrane caustic soda project cost CNY19.7m and it is 70% completed, according to the statement.

Jiangsu Sopo Chemical Industry Shareholding is a subsidiary of Jiangsu Sopo Group and its main products include caustic soda and bleaching powder.

( | http://www.icis.com/Articles/2012/08/29/9590649/chinas-jiangsu-sopo-swings-to-h1-net-loss-on-weak-demand.html | CC-MAIN-2014-10 | refinedweb | 154 | 68.97 |

The new features, bug fixes and improvements for PHP and the Web, and takes on the latest improvements in IntelliJ Platform.

New code style setting: blank lines before namespace

We’ve added a new code style setting to specify the minimum number of blank lines before a namespace. You can now tune this according to your preferred code style. The default value is 1.

Align assignment now affects shorthand operators

Now the option Settings|Code Style|PHP|Wrapping and Braces|Assignment Statement|Align consecutive assignments takes into account shorthand operators.

“Download from…” option in deployment

At your request, we’ve implemented a “Download from…” option in deployment actions that lets you choose which server a file or folder should be downloaded from. Before this option was introduced, files could only be downloaded from the default deployment server.

New “Copy Type” and “Jump to Type Source” actions from the Debugger Variables View

We’ve added two new actions to the Debugger Variables View: Jump to Type Source and Copy Type. Jump to Type Source allows you to navigate directly to the class of the current variable to inspect its code, while Copy Type copies the type of the variable to the clipboard for later use in your code or for sending as a reference to a colleague.

See the full list of bug fixes and improvements in our issue tracker and the complete release notes.

Download PhpStorm 2017.1 EAP build 171.2152 for your platform from the project EAP page or click “Update” in your JetBrains Toolbox, and please report any bugs and feature requests to our Issue Tracker.

Your JetBrains PhpStorm Team

The Drive to Develop | https://blog.jetbrains.com/phpstorm/2017/01/phpstorm-2017-1-eap-171-2152/ | CC-MAIN-2020-10 | refinedweb | 281 | 61.67 |

Prerequisites

- You must have an Amazon Web Services account.

- You must have signed up to use the Alexa Site Thumbnail.

- Assumes python 2.4 or later.

Running the Sample

- Extract the .zip file into a working directory.

- Edit the ThumbnailUtility.py file to include your Access Key ID and Secret Access Key.

- Open up the python interpreter and run the following (or you can include this in some app):

    from ThumbnailUtility import *
    create_thumbnail('kelvinism.com', 'Large')

And for a list:

    from ThumbnailUtility import *
    all_sites = ['kelvinism.com', 'alexa.com', 'amazon.com']
    create_thumbnail_list(all_sites, 'Small')

Note: I think most people use Python (for doing web things) in a framework, so this code snippet doesn't include the mechanism of actually displaying the images, it just returns the image location. However, take a look in readme.txt for more examples of actually displaying the images and more advanced usage.

If you need help or run into a stumbling block, don't hesitate contacting me: kelvin [-at-] kelvinism.com | http://aws.amazon.com/code/Python/818 | CC-MAIN-2015-22 | refinedweb | 165 | 60.41 |

[[!img Logo]

[[!format rawhtml """

!html

"""]]:

* [[!format txt """

void good_function(void) {

if (a) { printf("Hello World!\n"); a = 0; } } """]]And this is wrong: [[!format txt """ void bad_function(void) { if (a) { printf("Hello World!\n"); a = 0; } } """]]

- Avoid unnecessary curly braces. Good code:

* [[!format txt """

if (!braces_needed) printf("This is compact and neat.\n"); """]]Bad code: [[!format txt """ if (!braces_needed) { printf("This is superfluous and noisy.\n"); } """]]

- Don't put the return type of a function on a separate line. This is good:

* [[!format txt """

int good_function(void) { } """]]and this is bad: [[!format txt """ int bad_function(void) { } """]]

- On function calls and definitions, don't put an extra space between the function name and the opening parenthesis of the argument list. This is good:

- [[!format txt """ double sin(double x); """]]This bad: [[!format txt """:

* [[!format txt """

typedef enum pa_resample_method { / ... / } pa_resample_method_t; """]]

- No C++ comments please! i.e. this is good:

* [[!format txt """

/ This is a good comment / """]]and this is bad: [[!format txt """ //:

* [[!format txt """

void good_code(int a, int b) { pa_assert(a > 47); pa_assert(b != 0);

/ ... / } """]]Bad code: [[!format txt """ void bad_code(int a, int b) { pa_assert(a > 47 && b != 0);

}

"""]]

1. Errors are returned from functions as negative values. Success is returned as 0 or positive value.

1. Check for error codes on every system call, and every external library call. If you are sure that calls like that cannot return an error, make that clear by wrapping it in pa_assert_se(). i.e.:

* [[!format txt """
pa_assert_se(close(fd) == 0);
"""]]

Please note that pa_assert_se() is identical to pa_assert(), except that it is not optimized away if NDEBUG is defined. (se stands for side effect)

1. Every .c file should have a matching .h file (exceptions allowed)

1. In .c files we include .h files whose definitions we make use of with #include <>. Header files which we implement are to be included with #include "".

1. If #include <> is used the full path to the file relative to src/ should be used.

1..

1.:

[[!format txt """ A few of us had an IRC conversation about whether or not to localise PulseAudio's log messages. The suggestion/outcome is to add localisation only if all of the following points are fulfilled:

1) The message is of type "warning" or "error". (Debug and info levels are seldom exposed to end users.)

2) The translator is likely to understand the sentence. (Otherwise we'll have a useless translation anyway.)

3) It's at least remotely likely that someone will ever encounter it. (Otherwise we're just wasting translator's time.):

[[!format txt """ )) """]] | http://freedesktop.org/wiki/Software/PulseAudio/Documentation/Developer/CodingStyle/?action=SyncPages | CC-MAIN-2013-20 | refinedweb | 424 | 78.14 |

Lexical Dispatch in Python

A recent article on Ikke’s blog shows how to emulate a C switch statement using Python. (I’ve adapted the code slightly for the purposes of this note).

    def handle_one():
        return 'one'

    def handle_two():
        return 'two'

    def handle_three():
        return 'three'

    def handle_default():
        return 'unknown'

    cases = dict(one=handle_one, two=handle_two, three=handle_three)

    for i in 'one', 'two', 'three', 'four':
        handler = cases.get(i, handle_default)
        print(handler())

Here the cases dict maps strings to functions and the subsequent switch is a simple matter of looking up and dispatching to the correct function. This is good idiomatic Python code. When run, it outputs:

    one
    two
    three
    unknown

Here’s an alternative technique which I’ve sometimes found useful. Rather than build an explicit cases dict, we can just use one of the dicts lurking behind the scenes, in this case the one supplied by the built-in globals() function. Leaving the handle_*() functions as before, we could write:

    for i in 'one', 'two', 'three', 'four':
        handler = globals().get('handle_%s' % i, handle_default)
        print(handler())

globals() returns a dict mapping names in the current scope to their values. Since our handler functions are uniformly named, some string formatting combined with a simple dictionary look-up gets the required function.

A warning: it’s unusual to access objects in the global scope in this way, and in this particular case, the original explicit dictionary dispatch would be better. In other situations though, when the scope narrows to a class or a module, it may well be worth remembering that classes and modules behave rather like dicts which map names to values. The built-in getattr() function can then be used as a function dispatcher. Here’s a class-based example:

    PLAIN, BOLD, LINK, DATE = 'PLAIN BOLD LINK DATE'.split()

    class Paragraph(object):
        def __init__(self):
            self.text_so_far = ''

        def __str__(self):
            return self._do_tag(self.text_so_far, 'p')

        def _do_tag(self, text, tag):
            return '<%s>%s</%s>' % (tag, text, tag)

        def do_bold(self, text):
            return self._do_tag(text, 'b')

        def do_link(self, text):
            return '<a href="%s">%s</a>' % (text, text)

        def do_plain(self, text):
            return text

        def append(self, text, markup=PLAIN):
            handler = getattr(self, 'do_%s' % markup.lower())
            self.text_so_far += handler(text)
            return self

Maybe not the most fully-formed of classes, but I hope you get the idea! Incidentally, append() returns a reference to self so clients can chain calls together.

    >>> print(Paragraph().append("Word Aligned", BOLD
    ...     ).append(" is at "
    ...     ).append("", LINK))
    <p><b>Word Aligned</b> is at <a href=""></a></p>
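One variation worth noting: getattr() accepts a third argument that is returned when no attribute of the given name exists, which gives the class-based dispatcher the same default behaviour as cases.get(i, handle_default). The sketch below is written for Python 3 and uses a hypothetical Renderer class (not from the article) to keep it short.

```python
class Renderer:
    """Hypothetical class: handlers are looked up by name, as in Paragraph.append()."""

    def do_bold(self, text):
        return '<b>%s</b>' % text

    def do_plain(self, text):
        return text

    def render(self, text, markup='PLAIN'):
        # The third argument to getattr() is returned when the attribute is
        # missing, so markup without a handler falls back to plain text.
        handler = getattr(self, 'do_%s' % markup.lower(), self.do_plain)
        return handler(text)


renderer = Renderer()
print(renderer.render('Word Aligned', 'BOLD'))
print(renderer.render('today', 'DATE'))
```

Here 'DATE' has no do_date() method, so the second call goes through do_plain() instead of raising AttributeError.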

By the way, “Lexical Dispatch” isn’t an official term or one I’ve heard before. It’s just a fancy way of saying “call things based on their name” — and the term “call by name” has already been taken | http://wordaligned.org/articles/lexical-dispatch-in-python | CC-MAIN-2015-32 | refinedweb | 466 | 62.58 |

Say I am reading an xml file using SAX Parser : Here is the format of xml file

    <BookList>
      <BookTitle_1> C++ For Dummies </BookTitle_1>
      <BookAuthor_1> Charles </BookAuthor_1>
      <BookISBN_1> ISBN -1023-234 </BookISBN_1>
      <BookTitle_2> Java For Dummies </BookTitle_2>
      <BookAuthor_2> Henry </BookAuthor_2>
      <BookISBN_2> ISBN - 231-235 </BookISBN_2>
    </BookList>

And then I have a class called Book:

    public class Book {
        private String Name;
        private String Author;
        private String ISBN;

        public void SetName(String Title) {
            this.Name = Title;
        }

        public String getName() {
            return this.Name;
        }

        public void setAuthor(String _author) {
            this.Author = _author;
        }

        public String getAuthor() {
            return this.Author;
        }
    }

And then I have an ArrayList class of type Book as such:

    public class BookList {
        private List<Book> books;

        BookList(Book _books) {
            books = new ArrayList<Book>();
            books.add(_books);
        }
    }

And lastly I have the main class,

where I parse and read the books from the xml file. Currently when I parse an xml file and say I read the tag <BookTitle_1> I call the SetName() and then call BookList() to create a new arraylist for the new book and add it to the arraylist.

But how can I create a dynamic setters and getters method so that whenever a new book title is read it calls the new setter and getter method to add it to arraylist.

Currently my code over writes the previously stored book and prints that book out. I have heard there is something called reflection. If I should use reflection, please can some one show me an example?

Thank you.

This post has been edited by jon.kiparsky: 28 July 2013 - 04:37 PM

Reason for edit:: Recovered content from closed version of same thread | http://www.dreamincode.net/forums/topic/325810-how-can-i-create-dynamic-setters-and-getters-accessors-and-mutators/page__pid__1880640__st__0 | CC-MAIN-2016-07 | refinedweb | 268 | 66.78 |

Alex Karasulu wrote:

> Hi all,

>

> On Jan 16, 2008 5:26 AM, Emmanuel Lecharny <[email protected]

> <mailto:[email protected]>> wrote:

>

> Hi Alex, PAM,

>

> if we are to go away from JNDI, Option 2 is out of question.

> Anyway, the

> backend role is to store data, which has nothing in common with

> Naming,

> isn't it ?

>

>

> Well if you mean the tables yes you're right it has little to do with

> javax.naming except for one little thing that keeps pissing me off

> which is the fact that normalization is needed by indices to function

> properly. And normalizers generate NamingExceptions. If anyone has

> any idea on how best to deal with this please let me know.

Well, indices should have been normalized *before* being stored, and

this is what we are working on with the new ServerEntry/Attribute/Value

stuff, so your problem will vanish soon ;) (well, not _that_ soon, but ...)

>

>

> and I

> see no reason to depend on NamingException when we have nothing to do

> with LDAP.

>

>

> We still have some residual dependence on LDAP at higher levels like

> when we talk about Index or Partition because of the nature of their

> interfaces. Partitions are still partial to the LDAP namespace or we

> would be screwed. They still need to be tailored to the LDAP

> namespace. There are in my mind 2 layers of abstractions and their

> interfaces which I should probably clarify

>

> Partition Abstraction Layer

> --------------------------------

> o layer between the server and the entry store/search engine

> (eventually we might separate search from stores)

> o interfaces highly dependent on the LDAP namespace

>

> BTree Partition Layer

> -------------------------

> o layer between an abstract partition implementation with concrete

> search engine which uses a concrete search engine based on a two

> column db design backed by BTree (or similar primitive data structures)

> o moderately dependent on the namespace

>

> Note the BTree Partition Layer is where we have interfaces defined

> like Table, and Index. These structures along with Cursors are to be

> used by this default search engine to conduct search. We can then

> swap out btree implementations between in memory, JE and JDBM easily

> without messing with the search algorithm.

This is where things get tricky... But as soon as we can clearly define

the different layers without having some kind of overlap, then we will

be done. The pb with our current impl is that we are mixing the search

engine with the way data are stored. Your 'cursor' implementation will

help a lot solving this problem.

--

--

cordialement, regards,

Emmanuel Lécharny

directory.apache.org | http://mail-archives.apache.org/mod_mbox/directory-dev/200801.mbox/%[email protected]%3E | CC-MAIN-2014-42 | refinedweb | 423 | 59.13 |

A very practical version of an Action Menu Item (AMI) is a variant that will run an application or a script on your local computer. For this to work you need to set up a connection between your browser and the script or application you wish to run. This link is called a custom browser protocol.

You may want to set up a type of link where if a user clicks on it, it will launch the [foo] application. Instead of having ‘http’ as the prefix, you need to designate a custom protocol, such as ‘foo’. Ideally you want a link that looks like:

foo://some/info/here.

The operating system has to be informed how to handle protocols. By default, all of the current operating systems know that ‘http’ should be handled by the default web browser, and ‘mailto’ should be handled by the default mail client. Sometimes when applications are installed, they register with the OS and tell it to launch the applications for a specific protocol.

As an example, if you install RV, the application registers rvlink:// with the OS and tells it that RV will handle all rvlink:// protocol requests to show an image or sequence in RV. So when a user clicks on a link that starts with rvlink://, as you can do in Shotgun, the operating system will know to launch RV with the link and the application will parse the link and know how to handle it.

See the RV User Manual for more information about how RV can act as a protocol handler for URLs and the “rvlink” protocol.

Registering a protocol

Registering a protocol on Windows

On Windows, registering protocol handlers involves modifying the Windows Registry. Here is a generic example of what you want the registry key to look like:

    HKEY_CLASSES_ROOT
      foo
        (Default) = "URL:foo Protocol"
        URL Protocol = ""
        shell
          open
            command
              (Default) = "foo_path" "%1"

The target URL would look like:

foo://host/path...

Note: For more information, please see.

Windows QT/QSetting example

If the application you are developing is written using the QT (or PyQT / PySide) framework, you can leverage the QSetting object to manage the creation of the registry keys for you.

This is what the code looks like to automatically have the application set up the registry keys:

    // cmdLine points to the foo path.
    // Add foo to the OS protocols and set foobar to handle the protocol
    QSettings fooKey("HKEY_CLASSES_ROOT\\foo", QSettings::NativeFormat);
    fooKey.setValue(".", "URL:foo Protocol");
    fooKey.setValue("URL Protocol", "");

    QSettings fooOpenKey("HKEY_CLASSES_ROOT\\foo\\shell\\open\\command", QSettings::NativeFormat);
    fooOpenKey.setValue(".", cmdLine);

Windows example that starts a Python script via a Shotgun AMI

A lot of AMIs that run locally may opt to start a simple Python script via the Python interpreter. This allows you to run simple scripts or even apps with GUIs (PyQT, PySide or your GUI framework of choice). Let’s look at a practical example that should get you started in this direction.

Step 1: Set up the custom shotgun:// protocol to launch the python interpreter with the first argument being the script sgTriggerScript.py and the second argument being %1. It is important to understand that %1 will be replaced by the URL that was clicked in the browser or the URL of the AMI that was invoked. This will become the first argument to your Python script.

Note: You may need to have full paths to your Python interpreter and your Python script. Please adjust accordingly.

Step 2: Parse the incoming URL in your Python script

In your script you will take the first argument that was provided, the URL, and parse it down to its components in order to understand the context in which the AMI was invoked. We’ve provided some simple scaffolding that shows how to do this in the following code.

Python script

    import sys
    import pprint

    try:
        from urllib.parse import parse_qs  # Python 3
    except ImportError:
        from urlparse import parse_qs  # Python 2


    def main(args):
        # Make sure we have only one arg, the URL
        if len(args) != 1:
            return 1

        # Parse the URL:
        protocol, fullPath = args[0].split(":", 1)
        path, fullArgs = fullPath.split("?", 1)
        action = path.strip("/")
        args = fullArgs.split("&")
        params = parse_qs(fullArgs)

        # This is where you can do something productive based on the params and the
        # action value in the URL. For now we'll just print out the contents of the
        # parsed URL.
        fh = open('output.txt', 'w')
        fh.write(pprint.pformat((action, params)))
        fh.close()


    if __name__ == '__main__':
        sys.exit(main(sys.argv[1:]))

Step 3: Connect the:// URL which will be redirected to your script via the registered custom protocol.

In the output.txt file in the same directory as your script you should now see something like this:

    ('processVersion',
     {'cols': ['code', 'image', 'entity', 'sg_status_list', 'user', 'description', 'created_at'],
      'column_display_names': ['Version Name', 'Thumbnail', 'Link', 'Status', 'Artist', 'Description', 'Date Created'],
      'entity_type': ['Version'],
      'ids': ['6933,6934,6935'],
      'page_id': ['4606'],
      'project_id': ['86'],
      'project_name': ['Test'],
      'referrer_path': ['/detail/HumanUser/24'],
      'selected_ids': ['6934'],
      'server_hostname': ['patrick.shotgunstudio.com'],
      'session_uuid': ['9676a296-7e16-11e7-8758-0242ac110004'],
      'sort_column': ['created_at'],
      'sort_direction': ['asc'],
      'user_id': ['24'],
      'user_login': ['shotgun_admin'],
      'view': ['Default']})

Possible variants

By varying the keyword after the // part of the URL in your AMI, you can change the contents of the action variable in your script, all the while keeping the same shotgun:// protocol and registering only a single custom protocol. Then, based on the content of the action variable and the contents of the parameters, your script can understand what the intended behavior should be.

Using this methodology you could open applications, upload content via services like FTP, archive data, send email, or generate PDF reports.
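Switching on the action value can be sketched with a small dispatch table. This is a minimal Python 3 sketch under stated assumptions, not part of the original script: the handler functions, the HANDLERS table and the example URLs are hypothetical, and only the URL splitting mirrors the script shown earlier.

```python
from urllib.parse import parse_qs


def parse_ami_url(url):
    """Split an AMI URL into (action, params), mirroring the script above."""
    _protocol, rest = url.split(":", 1)
    path, _, query = rest.partition("?")
    return path.strip("/"), parse_qs(query)


def process_version(params):
    # Hypothetical handler: report which Version ids were selected.
    ids = params.get("selected_ids", [""])[0].split(",")
    return "processing versions: " + ", ".join(i for i in ids if i)


def unknown_action(params):
    # Hypothetical fallback for actions without a registered handler.
    return "unknown action"


# One protocol, many actions: the keyword after // selects the handler.
HANDLERS = {"processVersion": process_version}


def dispatch(url):
    action, params = parse_ami_url(url)
    return HANDLERS.get(action, unknown_action)(params)


print(dispatch("shotgun://processVersion?selected_ids=6934,6935&project_id=86"))
print(dispatch("shotgun://archive?ids=1"))
```

Adding a new behavior then only means writing another handler and registering it under a new keyword; the registered protocol itself stays untouched.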

Registering a protocol on OSX

To register a protocol on OSX you need to create a .app bundle that is configured to run your application or script.

Start by writing the following script in the AppleScript Script Editor:

    on open location this_URL
        do shell script "sgTriggerScript.py '" & this_URL & "'"
    end open location

Pro tip: To ensure you are running Python from a specific shell, such as tcsh, you can change the do shell script for something like the following:

do shell script "tcsh -c \"sgTriggerScript.py '" & this_URL & "'\""

In the Script Editor, save your short script as an “Application Bundle”.

Find the saved Application Bundle, and Open Contents. Then, open the info.plist file and add the following to the plist dict:

    <key>CFBundleIdentifier</key>
    <string>com.mycompany.AppleScript.Shotgun</string>
    <key>CFBundleURLTypes</key>
    <array>
      <dict>
        <key>CFBundleURLName</key>
        <string>Shotgun</string>
        <key>CFBundleURLSchemes</key>
        <array>
          <string>shotgun</string>
        </array>
      </dict>
    </array>

You may want to change the following three strings:

com.mycompany.AppleScript://, the

.app bundle will respond to it and pass the URL over to your Python script. At this point the same script that was used in the Windows example can be used and all the same possibilities apply.

Registering a protocol on Linux

Use the following code:

    gconftool-2 -t string -s /desktop/gnome/url-handlers/foo/command 'foo "%s"'
    gconftool-2 -s /desktop/gnome/url-handlers/foo/needs_terminal false -t bool
    gconftool-2 -s /desktop/gnome/url-handlers/foo/enabled true -t bool

Then use the settings from your local GConf file in the global defaults in:

/etc/gconf/gconf.xml.defaults/%gconf-tree.xml

Even though the change is only in the GNOME settings, it also works for KDE. Firefox and GNU IceCat defer to gnome-open regardless of what window manager you are running when it encounters a prefix it doesn’t understand (such as foo://). So, other browsers, like Konqueror in KDE, won’t work under this scenario.

See for more information on setting up protocol handlers for Action Menu Items in Ubuntu. | https://support.shotgunsoftware.com/hc/en-us/articles/219031308-Launching-Applications-Using-Custom-Browser-Protocols | CC-MAIN-2020-24 | refinedweb | 1,259 | 53.21 |

    #include <sys/conf.h>
    #include <sys/ddi.h>
    #include <sys/sunddi.h>

    int ddi_dev_is_sid(dev_info_t *dip);

Solaris DDI specific (Solaris DDI).

A pointer to the device's dev_info structure.

The ddi_dev_is_sid() function tells the caller whether the device described by dip is self-identifying, that is, a device that can unequivocally tell the system that it exists. This is useful for drivers that support both a self-identifying as well as a non-self-identifying variants of a device (and therefore must be probed).

Device is self-identifying.

Device is not self-identifying.

The ddi_dev_is_sid() function can be called from user, interrupt, or kernel context.

    ...
    int
    bz_probe(dev_info_t *dip)
    {
        ...
        if (ddi_dev_is_sid(dip) == DDI_SUCCESS) {
            /*
             * This is the self-identifying version (OpenBoot).
             * No need to probe for it because we know it is there.
             * The existence of dip && ddi_dev_is_sid() proves this.
             */
            return (DDI_PROBE_DONTCARE);
        }
        /*
         * Not a self-identifying variant of the device. Now we have to
         * do some work to see whether it is really attached to the
         * system.
         */
        ...

probe(9E) Writing Device Drivers for Oracle Solaris 11.2 | http://docs.oracle.com/cd/E36784_01/html/E36886/ddi-dev-is-sid-9f.html | CC-MAIN-2016-40 | refinedweb | 195 | 60.41 |

Elvis Chitsungo1,817 Points

Can someone help.

Can someone help

    public class Spaceship{
        public String shipType;

        public String getShipType() {
            return shipType;
        }

        public void setShipType(String shipType) {
            this.shipType = shipType;
        }

6 Answers

Calin Bogdan14,623 Points

There is a ‘}’ missing at the end of the file, the one that wraps the class up.

Calin Bogdan14,623 Points

One issue would be that shipType property is public instead of being private.

Elvis Chitsungo1,817 Points

U mean changing this ''public void'' to private void.

Elvis Chitsungo1,817 Points

After changing to private in now getting below errors.

    ./Spaceship.java:9: error: reached end of file while parsing
    }
    ^
    JavaTester.java:138: error: shipType has private access in Spaceship
    ship.shipType = "TEST117";
    ^
    JavaTester.java:140: error: shipType has private access in Spaceship
    if (!tempReturn.equals(ship.shipType)) {
    ^
    JavaTester.java:203: error: shipType has private access in Spaceship
    ship.shipType = "TEST117";
    ^
    JavaTester.java:205: error: shipType has private access in Spaceship
    if (!ship.shipType.equals("TEST249")) {
    ^
    5 errors

Calin Bogdan14,623 Points

That's because you have to use ship.getShipType() to get its value and ship.setShipType(type) to set its value. It is recommended to do so to ensure encapsulation and scalability.

Here are some solid reasons for using encapsulation in your code:

- Encapsulation of behaviour.

Elvis Chitsungo1,817 Points

This is now becoming more complicated, i don't know much about java. But with the first one i was only having one error. i am referring to my first post. Let me re-type it again

    ./Spaceship.java:9: error: reached end of file while parsing
    }
    ^
    1 error

Elvis Chitsungo1,817 Points

Calin Bogdan thanks, u have managed to give me the best answer.

Calin Bogdan14,623 Points

Calin Bogdan14,623 Points

This is correct:

The only change you have to make is public String shipType -> private String shipType.

Fields (shipType) have to be private, their accessors (getShipType, setShipType) have to be public. | https://teamtreehouse.com/community/can-someone-help-13 | CC-MAIN-2020-40 | refinedweb | 321 | 60.01 |

evalFunction

This example shows how to evaluate the expression x+y in Python®. To evaluate an expression, pass a Python dict value for the globals namespace parameter.

Read the help for eval.

py.help('eval')

Help on built-in function eval in module builtins:.

Create a Python dict variable for the x and y values.

workspace = py.dict(pyargs('x',1,'y',6))

workspace = Python dict with no properties. {'y': 6.0, 'x': 1.0}

Evaluate the expression.

res = py.eval('x+y',workspace)

res = 7
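For comparison, the same evaluation can be written directly in Python. This is a minimal sketch, not part of the original example; it assumes the MATLAB doubles arrive as Python floats, as the dict display above suggests.

```python
# The dict plays the role of the globals namespace that eval() reads x and y from.
workspace = {"x": 1.0, "y": 6.0}

# Evaluate the expression against that namespace.
res = eval("x+y", workspace)
print(res)
```

The names x and y are resolved from the dict rather than from the caller's own variables, which is why the MATLAB call has to pass one in.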

Add two numbers without assigning variables. Pass an empty dict value for the globals parameter.

res = py.eval('1+6',py.dict)

res = Python int with properties: denominator: [1×1 py.int] imag: [1×1 py.int] numerator: [1×1 py.int] real: [1×1 py.int] 7 | https://nl.mathworks.com/help/matlab/matlab_external/call-python-eval-function.html | CC-MAIN-2019-35 | refinedweb | 134 | 63.86 |

Sharepoint foundation 2010 webpart jobs

.. experience

urgent requirement for SharePoint developer who knows the office 365

..,

...speakers are welcomed ! The work can be

I am looking to learn advanced MS access

I am looking for a help with my database with Query / Report /etc"

My Windows Small Business Server 2011 needs a Maintenance/Update. Update Exchange 2010 with SP2 / Check for Errors Update SSL certificates. Check System for Errors.

...useful for website Support the web site for next 3 months after delivery Set up a preliminary project plan in qdPM ( Although this is small project but this would be a foundation for future collaboration ) Setup Matomo and configure the dashboard for web data analytics What is not our purpose or goal of this web site: Ecommerce Blog Product

..

I have MS Access application file(*.accdb). There are many tables on this file. Freelancer should convert these tables into mysql database. Please contact me with writing proper solution on bid text. Thanks.

We need foundation details sketch to be drafted in AutoCAD. The drawing should be done accurately.

Hi, We have hotel system so we need to modify us some forms and reports, our system developed VB.net 2010 and SQL Server 2008.

The job consists of rewriting the JS and CSS code from scratch in order to

We did an interview with the Trevor Noah Foundation that has been transcribed and needs to be written into a captivating article that attracts the reader and references his autobiography "Born a Crime" in regard to his mission

Microsoft Access Advanced 2010

.. at the start and end of each month. By start I

I would like to isolate bootstrap v3.3.7 to avoid css conflicts with SharePoint online. New namespace would be 'bootstrap-htech'. and user will see a result according to his answers. For example if user have 40 point from his answers he will get Answer A, if it's more than 60 he will get "Answer B". Please contact me if you can make...

We are a small construction company interested in having someone work with us to set up our SharePoint site, give instruction concerning use, & do some programming for custom functionality. The right person must be an expert in SharePoint design & able to communicate slowly & clearly.

I am trying to find someone that can create a brochure for me. I will need it base of my website which is [login to view URL] Please let me know if you are available to do it. Thank you!

Need help with a small SharePoint requirement.

I would like to Advanced Microsoft Access 2010/2013 ( report, Query, Search)

Sharepoint expertise... Look for a proposal on Sharepoint applications All default application from Microsoft migration to 2016. One has to list the features and functionalities of each module. Migration steps we plan to do when we migrate from sharepoint 2013 to sharepoint 2016. My price is 50$.

...com/watch?v=YAyc2bYRYP4 Background:

I would like the three documents: 1. 26.02.2015 Meditsiiniseadmed...At this URL: [login to view URL] Converted to Microsoft Excel format - so that they can be opened in Microsoft Excel 2010. Please start your response with the word "Rosebud", so that I know you have read what is required.

...existing emails with the same originator and titles and automatically merge incoming emails as they come in. Requirements and limitations: - Project must work on Outlook 2010 and Outlook 2016. - Must be developed using Yeoman; CANNOT be developed using Visual Studio. - Build as a custom Outlook add-in. - The Add-in allows two modes (can can activated.

Preaching and Teaching self and others. First Prize in Poetry Competition, ManMeet 2010 at IIM-Bangalore Paper presented at Annamalai University, sponsored by UGC, entitled “Innovative Time Management”

For Police Dept. Heavy on the collaboration/social media aspect. Announcements, Calendars, Outlook email (if possible), Workflows, Projects, Tableau Dashboards (already developed, just display), possibly Yammer [login to view URL]

Fiberglass pole calculations and foundation drawings.

...Your server will not need to support any methods beyond GET, although there is extra credit available for supporting other methods. The project template provides the basic foundation of your server in C++ and will allow you to focus more on the technical systems programming aspect of this lab, rather than needing to come up with a maintainable design

I need a developer to recreate the following assessment and build it...[login to view URL] I will provide the text and the maturity model categories and the method for scoring (e.g. 2/5). Must be able to set up foundation so that we can add maturity categories, questions and scores based on answers to those questions.. | https://www.freelancer.com/work/sharepoint-foundation-2010-webpart/ | CC-MAIN-2018-22 | refinedweb | 773 | 64.41 |

Synopsis

- lassign list varName ?varName ...?

Documentation

- official reference

- TIP 57: proposed making the TclX lassign command a built-in Tcl command

Description

lassign assigns values from a list to the specified variables, and returns the remaining values. For example:

    set end [lassign {1 2 3 4 5} a b c]

will set $a to 1, $b to 2, $c to 3, and $end to 4 5.

In lisp parlance:

    set cdr [lassign $mylist car]

The K trick is sometimes used with lassign to improve performance by causing the Tcl_Obj holding $mylist to be unshared so that it can be re-used to hold the return value of lassign:

    set cdr [lassign $mylist[set mylist {}] car]

If there are more varNames than there are items in the list, the extra varNames are set to the empty string:

    % lassign {1 2} a b c
    % puts $a
    1
    % puts $b
    2
    % puts $c

    %

In Tcl prior to 8.5, foreach was used to achieve the functionality of lassign:

    foreach {var1 var2 var3} $list break

DKF: The foreach trick was sometimes written as:

    foreach {var1 var2 var3} $list {}

This was unwise, as it would cause a second iteration (or more) to be done when $list contains more than 3 items (in this case). Putting the break in makes the behaviour predictable.
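For readers more at home outside Tcl, the assignment rules described above can be modelled in a few lines of Python. This is only an illustrative sketch, not how Tcl implements the command: the function name lassign is reused for readability, and returning a dict of bindings is an assumption made here because Python functions cannot set variables in the caller's scope the way the Tcl command does.

```python
def lassign(values, *names):
    """Rough model of Tcl's lassign.

    Returns (bindings, rest): bindings maps each variable name to its
    assigned value, and rest holds the list items that were left over.
    """
    bindings = {}
    for i, name in enumerate(names):
        # Names without a matching list item get the empty string.
        bindings[name] = values[i] if i < len(values) else ""
    rest = values[len(names):]
    return bindings, rest


# More items than names: the remainder is returned.
bindings, rest = lassign(["1", "2", "3", "4", "5"], "a", "b", "c")
print(bindings, rest)

# More names than items: the extra name is set to the empty string.
short, leftover = lassign(["1", "2"], "a", "b", "c")
print(short, leftover)
```

The second call shows the case discussed further down the page: a name that received no value ends up indistinguishable from one that was assigned an empty string.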

Example: Perl-ish shift

DKF cleverly points out that lassign makes a Perl-ish shift this easy:

    proc shift {} {
        global argv
        set argv [lassign $argv v]
        return $v
    }

On the other hand, Hemang Lavana observes that TclXers already have lvarpop ::argv, an exact synonym for shift.

On the third hand, RS would use our old friend K to code like this:

    proc shift {} {
        K [lindex $::argv 0] [set ::argv [lrange $::argv[set ::argv {}] 1 end]]
    }

Lars H: Then I can't resist doing the above without the K:

    proc shift {} {
        lindex $::argv [set ::argv [lrange $::argv[set ::argv {}] 1 end]; expr 0]
    }

Default Value

FM: here's a quick way to assign with default value, using apply:

    proc args {spec list} {
        apply [list $spec [list foreach {e} $spec {
            uplevel 2 [list set [lindex $e 0] [set [lindex $e 0]]]
        }]] {*}$list
    }
    set L {}
    args {{a 0} {b 0} {c 0} args} $L

AMG: Clever. Here's my version, which actually uses lassign, plus it matches lassign's value-variable ordering. It uses lcomp for brevity.

    proc args {vals args} {
        set vars [lcomp {$name} for {name default} inside $args]
        set allvals "\[list [join [lcomp {"\[set [list $e]\]"} for e in $vars]]\]"
        apply [list $args "uplevel 2 \[list lassign $allvals $vars\]"] {*}$vals
    }

Without lcomp:

    proc args {vals args} {
        lassign "" scr vars
        foreach varspec $args {
            append scr " \[set [list [lindex $varspec 0]]\]"
            lappend vars [lindex $varspec 0]
        }
        apply [list $args "uplevel 2 \[list lassign \[list$scr\] $vars\]"] {*}$vals
    }

This code reminds me of the movie "Inception" [1]. It exists, creates itself, and operates at and across multiple levels of interpretation. There's the caller, there's [args], there's [apply], then there's the [uplevel 2] that goes back to the caller. The caller is the waking world, [args] is the dream, [apply] is its dream-within-a-dream, and [uplevel] is its dream-within-a-dream that is used to implant an idea (or variable) into the waking world (the caller). And of course, the caller could itself be a child stack frame, so maybe reality is just another dream! ;^)

Or maybe this code is a Matryoshka nesting doll [2] whose innermost doll contains the outside doll. ;^)

Okay, now that I've put a cross-cap in reality [3], let me demonstrate how [args] is used:

    args {1 2 3} a b c             ;# a=1 b=2 c=3
    args {1 2} a b {c 3}           ;# a=1 b=2 c=3
    args {} {a 1} {b 2} {c 3}      ;# a=1 b=2 c=3
    args {1 2 3 4 5} a b c args    ;# a=1 b=2 c=3 args={4 5}

FM: to conform to the AMG (and lassign) syntax.

    proc args {values args} {
        apply [list $args [list foreach e $args {
            uplevel 2 [list set [lindex $e 0] [set [lindex $e 0]]]
        }]] {*}$values
    }

Both versions seem to have the same speed.

PYK, 2015-03-06, wonders why lassign decided to mess with variable values that were already set, preventing default values from being set beforehand:

    #warning, hypothetical semantics
    set color green
    lassign 15 size color
    set color ;# -> green

AMG: I'm pretty sure this behavior is imported from [foreach] which does the same thing. [foreach] is often used as a substitute for [lassign] on older Tcl.

So, what to do when there are more variable names than list elements? I can think of four approaches:

- Set the extras to empty string. This is current [foreach] and [lassign] behavior.

- Leave the extras unmodified. This is PYK's preference.

- Unset the extras if they currently exist. Their existence can be tested later to see if they got a value.

- Throw an error. This is what Brush proposes, but now I may be leaning towards PYK's idea.

Gotcha: Ambiguity List Items that are the Empty String

CMcC 2005-11-14: I may be just exceptionally grumpy this morning, but the behavior of supplying default empty values to extra variables means you can't distinguish between a trailing var with no matching value, and one with a value of the empty string. Needs an option, -greedy or something, to distinguish between the two cases. Oh, and it annoys me that lset is already taken, because lassign doesn't resonate well with set.

Kristian Scheibe: I agree with CMcC on both counts - supplying a default empty value when no matching value is provided is bad form; and lset/set would have been better than lassign/set. However, I have a few other tweaks I would suggest, then I'll tie it all together with code to do what I suggest.

First, there is a fundamental asymmetry between the set and lassign behaviors: set copies right to left, while lassign goes from left to right. In fact, most computer languages use the idiom of right to left for assignment. However, there are certain advantages to the left to right behavior of lassign (in Tcl). For example, when assigning a list of variables to the contents of args. Using the right to left idiom would require eval.

Still, the right-to-left behavior also has its benefits. It allows you to perform computations on the values before performing the assignment. Take, for example, this definition of factorial (borrowed from Tail call optimization):