---
language:
- en
license: cc-by-4.0
size_categories:
- 1K<n<10K
task_categories:
- visual-question-answering
modalities:
- Video
- Text
configs:
- config_name: action_antonym
  data_files:
  - split: train
    path: action_antonym/train-*
- config_name: action_count
  data_files:
  - split: train
    path: action_count/train-*
- config_name: action_localization
  data_files:
  - split: train
    path: action_localization/train-*
- config_name: action_sequence
  data_files:
  - split: train
    path: action_sequence/train-*
- config_name: egocentric_sequence
  data_files:
  - split: train
    path: egocentric_sequence/train-*
- config_name: moving_direction
  data_files:
  - split: train
    path: moving_direction/train-*
- config_name: object_count
  data_files:
  - split: train
    path: object_count/train-*
- config_name: object_shuffle
  data_files:
  - split: train
    path: object_shuffle/train-*
- config_name: scene_transition
  data_files:
  - split: train
    path: scene_transition/train-*
- config_name: unexpected_action
  data_files:
  - split: train
    path: unexpected_action/train-*
dataset_info:
- config_name: action_antonym
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: answer
    dtype: string
  - name: candidates
    sequence: string
  - name: video_length
    dtype: int64
  splits:
  - name: train
    num_bytes: 51780
    num_examples: 320
  download_size: 6963
  dataset_size: 51780
- config_name: action_count
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: candidates
    sequence: string
  - name: answer
    dtype: string
  splits:
  - name: train
    num_bytes: 72611
    num_examples: 536
  download_size: 6287
  dataset_size: 72611
- config_name: action_localization
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: candidates
    sequence: string
  - name: answer
    dtype: string
  - name: start
    dtype: float64
  - name: end
    dtype: float64
  - name: accurate_start
    dtype: float64
  - name: accurate_end
    dtype: float64
  splits:
  - name: train
    num_bytes: 47290
    num_examples: 160
  download_size: 12358
  dataset_size: 47290
- config_name: action_sequence
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: answer
    dtype: string
  - name: candidates
    sequence: string
  - name: question_id
    dtype: string
  - name: start
    dtype: float64
  - name: end
    dtype: float64
  splits:
  - name: train
    num_bytes: 67660
    num_examples: 437
  download_size: 13791
  dataset_size: 67660
- config_name: egocentric_sequence
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: answer
    dtype: string
  - name: candidates
    sequence: string
  splits:
  - name: train
    num_bytes: 217705
    num_examples: 200
  download_size: 24816
  dataset_size: 217705
- config_name: moving_direction
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: answer
    dtype: string
  - name: candidates
    sequence: string
  splits:
  - name: train
    num_bytes: 47563
    num_examples: 232
  download_size: 4818
  dataset_size: 47563
- config_name: object_count
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: answer
    dtype: string
  - name: candidates
    sequence: string
  - name: is_seq
    dtype: bool
  - name: question_id
    dtype: int64
  splits:
  - name: train
    num_bytes: 16835
    num_examples: 148
  download_size: 4486
  dataset_size: 16835
- config_name: object_shuffle
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: candidates
    sequence: string
  - name: answer
    dtype: string
  splits:
  - name: train
    num_bytes: 76815
    num_examples: 225
  download_size: 4915
  dataset_size: 76815
- config_name: scene_transition
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: candidates
    sequence: string
  - name: answer
    dtype: string
  splits:
  - name: train
    num_bytes: 40269
    num_examples: 185
  download_size: 14169
  dataset_size: 40269
- config_name: unexpected_action
  features:
  - name: video
    dtype: string
  - name: question
    dtype: string
  - name: answer
    dtype: string
  - name: candidates
    sequence: string
  splits:
  - name: train
    num_bytes: 18694
    num_examples: 82
  download_size: 4060
  dataset_size: 18694
---

TVBench: Redesigning Video-Language Evaluation

Daniel Cores*, Michael Dorkenwald*, Manuel Mucientes, Cees G. M. Snoek, Yuki M. Asano

Updates

25 October 2024: Revised annotations for Action Sequence and removed duplicate samples for Action Sequence and Unexpected Action.

TVBench

TVBench is a new benchmark specifically designed to evaluate temporal understanding in video QA. We identified three main issues in existing datasets: (i) static information from single frames is often sufficient to solve the tasks; (ii) the text of the questions and candidate answers is overly informative, allowing models to answer correctly without relying on any visual input; and (iii) world knowledge alone can answer many of the questions, making the benchmarks a test of knowledge replication rather than visual reasoning. In addition, we found that open-ended question-answering benchmarks for video understanding suffer from similar issues, and that their automatic evaluation with LLMs is unreliable, so open-ended QA is not a suitable alternative.

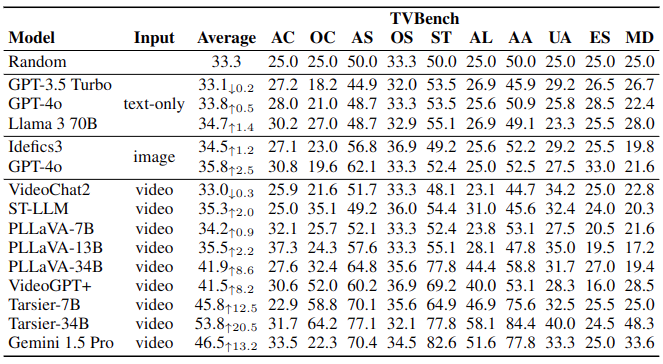

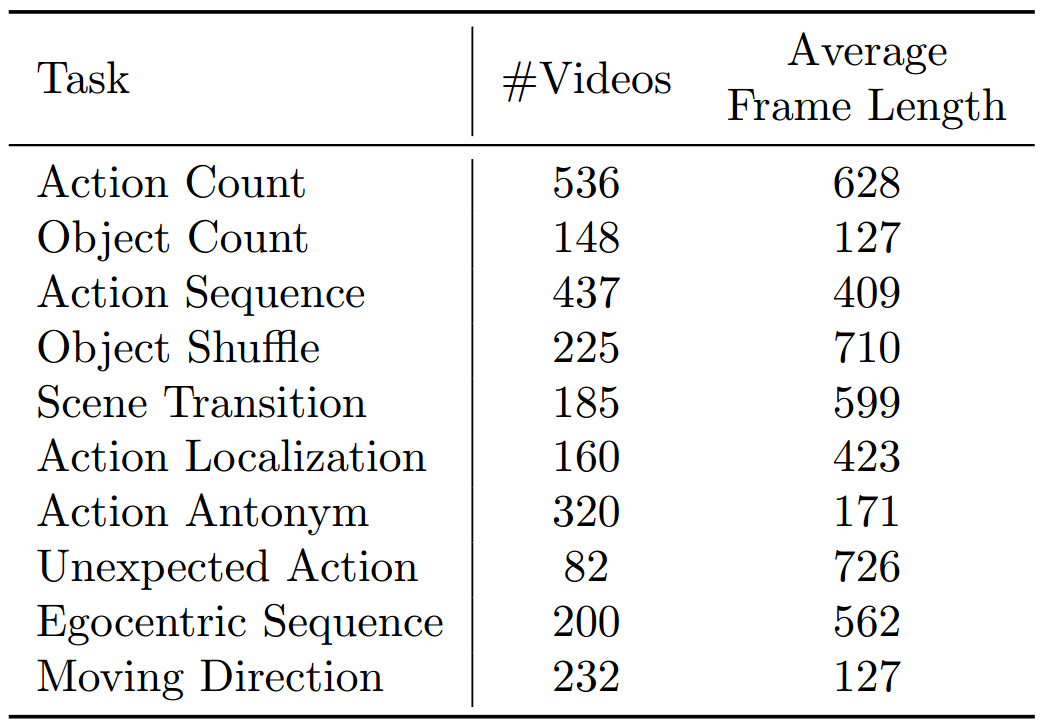

We defined 10 temporally challenging tasks, each requiring one of the following: repetition counting (Action Count); reasoning about properties of moving objects (Object Shuffle, Object Count, Moving Direction); temporal localization (Action Localization, Unexpected Action); temporal sequential ordering (Action Sequence, Scene Transition, Egocentric Sequence); or distinguishing between temporally hard action antonyms such as "Standing up" and "Sitting down" (Action Antonym).
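
For per-category analysis, the grouping of the 10 tasks can be written down as a mapping from category to config name. In this minimal sketch the config names come from the card metadata, while the short category labels are hypothetical names chosen for illustration.

```python
# Grouping of the 10 TVBench tasks by the skill they test.
# Keys are hypothetical short labels; values are the config names
# listed in the card metadata.
TASK_CATEGORIES = {
    "repetition counting": ["action_count"],
    "moving-object properties": ["object_shuffle", "object_count", "moving_direction"],
    "temporal localization": ["action_localization", "unexpected_action"],
    "sequential ordering": ["action_sequence", "scene_transition", "egocentric_sequence"],
    "action antonyms": ["action_antonym"],
}

# Reverse index: config name -> category label.
CATEGORY_OF = {
    task: category
    for category, tasks in TASK_CATEGORIES.items()
    for task in tasks
}

if __name__ == "__main__":
    for category, tasks in TASK_CATEGORIES.items():
        print(f"{category}: {', '.join(tasks)}")
```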

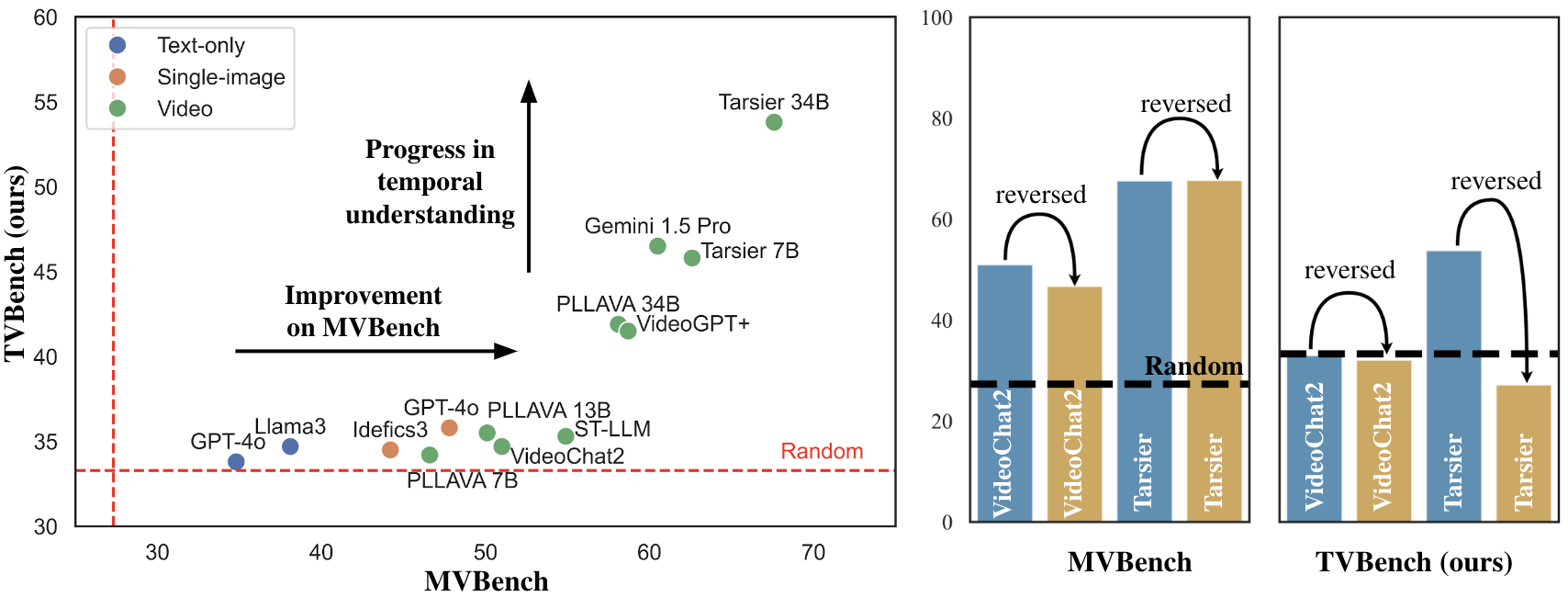

On TVBench, state-of-the-art text-only, image-based, and most video-language models perform close to random chance; only the latest strong temporal models, such as Tarsier, outperform the random baseline. Unlike on MVBench, the performance of these temporal models drops significantly when the videos are reversed.
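
Because every question is multiple choice, one natural way to define the random baseline is the expected accuracy of guessing uniformly among each question's candidates, i.e. the mean of 1/|candidates| over all questions. The sketch below computes that quantity; it is an assumption about how chance level is defined and may differ from the authors' exact computation.

```python
from typing import Sequence


def random_chance_accuracy(candidate_lists: Sequence[Sequence[str]]) -> float:
    """Expected accuracy of picking one candidate uniformly at random per question."""
    if not candidate_lists:
        raise ValueError("need at least one question")
    if any(len(c) == 0 for c in candidate_lists):
        raise ValueError("every question needs at least one candidate")
    # Each question contributes 1/k, where k is its number of candidates.
    return sum(1.0 / len(c) for c in candidate_lists) / len(candidate_lists)


if __name__ == "__main__":
    # Toy example: one 2-way question and one 4-way question.
    toy = [["Standing up", "Sitting down"], ["A", "B", "C", "D"]]
    print(random_chance_accuracy(toy))  # 0.375
```

Averaging per question (rather than using a single 1/k for the whole benchmark) matters when tasks have different numbers of candidates.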

Dataset statistics:

The table below shows the number of samples and the average frame length for each task in TVBench.
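
The per-task sample counts also appear in the `num_examples` fields of the card metadata. A small sketch that tabulates them is shown below; the average frame length is not part of the metadata, so it is omitted, and the helper name `format_counts` is hypothetical.

```python
# Per-task sample counts, copied from the `num_examples` fields in the card metadata.
NUM_EXAMPLES = {
    "action_antonym": 320,
    "action_count": 536,
    "action_localization": 160,
    "action_sequence": 437,
    "egocentric_sequence": 200,
    "moving_direction": 232,
    "object_count": 148,
    "object_shuffle": 225,
    "scene_transition": 185,
    "unexpected_action": 82,
}


def format_counts(counts: dict[str, int]) -> str:
    """Render a two-column plain-text table followed by a total row."""
    width = max(len(name) for name in counts)
    rows = [f"{name:<{width}}  {n:>5}" for name, n in counts.items()]
    rows.append(f"{'total':<{width}}  {sum(counts.values()):>5}")
    return "\n".join(rows)


if __name__ == "__main__":
    print(format_counts(NUM_EXAMPLES))
```

The counts add up to 2,525 questions, consistent with the `1K<n<10K` size category.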

Download

Questions and answers are provided as a JSON file for each task.
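
Every task config in the card metadata shares the fields `video`, `question`, `answer`, and `candidates` (some tasks add extra fields such as `start`/`end`, which this sketch ignores). The sketch below assumes each task file is a JSON list of such objects and that `answer` matches one candidate string exactly. The lettered prompt template and the helpers `parse_samples`, `build_prompt`, and `is_correct` are hypothetical, not the authors' evaluation protocol.

```python
import json
from dataclasses import dataclass
from string import ascii_uppercase


@dataclass
class Sample:
    # Fields shared by every task config in the card metadata.
    video: str
    question: str
    answer: str
    candidates: list[str]


def parse_samples(text: str) -> list[Sample]:
    """Parse a task file, assuming it is a JSON list of objects (extra keys ignored)."""
    return [
        Sample(r["video"], r["question"], r["answer"], list(r["candidates"]))
        for r in json.loads(text)
    ]


def build_prompt(s: Sample) -> str:
    """Hypothetical multiple-choice template: one lettered line per candidate."""
    options = "\n".join(
        f"({ascii_uppercase[i]}) {c}" for i, c in enumerate(s.candidates)
    )
    return f"{s.question}\nOptions:\n{options}\nAnswer with the option's letter."


def is_correct(s: Sample, predicted_letter: str) -> bool:
    """True if the predicted letter points at the candidate equal to `answer`."""
    letter = predicted_letter.strip().upper()
    # Reject empty or multi-character predictions instead of guessing.
    if len(letter) != 1 or letter not in ascii_uppercase:
        return False
    idx = ascii_uppercase.index(letter)
    return idx < len(s.candidates) and s.candidates[idx] == s.answer
```

Typical use would be reading a task file with `open(path, encoding="utf-8").read()`, passing the text to `parse_samples`, and scoring each model reply with `is_correct`.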

Videos in TVBench are sourced from Perception Test, CLEVRER, STAR, MoVQA, Charades-STA, NTU RGB+D, FunQA and CSV. All videos are included in this repository except those from NTU RGB+D, which must be downloaded from the official website. There is no need to download the full NTU RGB+D dataset, as NTU RGB+D provides a subset containing only the videos required for TVBench. These videos are used by the Action Antonym task and should be stored in the video/action_antonym folder.
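
After downloading the NTU RGB+D subset, a quick check can list which Action Antonym videos are still missing. This minimal sketch assumes the `video` field of each action_antonym record is a file name relative to video/action_antonym; the helper name `missing_videos` is hypothetical.

```python
from pathlib import Path
from typing import Iterable


def missing_videos(video_names: Iterable[str], root: str = "video/action_antonym") -> list[str]:
    """Return the referenced files (deduplicated, sorted) that do not exist under `root`."""
    base = Path(root)
    return sorted({name for name in video_names if not (base / name).is_file()})


if __name__ == "__main__":
    import sys

    # Hypothetical usage: pass the video file names as command-line arguments.
    missing = missing_videos(sys.argv[1:])
    print(f"{len(missing)} missing")
    for name in missing:
        print(name)
```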

Leaderboard

Citation

If you find this benchmark useful, please consider citing:

@misc{cores2024tvbench,
  author = {Daniel Cores and Michael Dorkenwald and Manuel Mucientes and Cees G. M. Snoek and Yuki M. Asano},
  title = {TVBench: Redesigning Video-Language Evaluation},
  year = {2024},
  eprint = {arXiv:2410.07752},
}