---
language:
- en
license: cc-by-4.0
size_categories:
- 100M<n<1B
pretty_name: OBELICS
configs:
- config_name: default
  data_files:
  - split: train
    path: data/train-*
- config_name: opt_out_docs_removed_2023_07_12
  data_files:
  - split: train
    path: opt_out_docs_removed_2023_07_12/train-*
dataset_info:
- config_name: default
  features:
  - name: images
    sequence: string
  - name: metadata
    dtype: string
  - name: general_metadata
    dtype: string
  - name: texts
    sequence: string
  splits:
  - name: train
    num_bytes: 715724717192
    num_examples: 141047697
  download_size: 71520629655
  dataset_size: 715724717192
- config_name: opt_out_docs_removed_2023_07_12
  features:
  - name: images
    sequence: string
  - name: metadata
    dtype: string
  - name: general_metadata
    dtype: string
  - name: texts
    sequence: string
  splits:
  - name: train
    num_bytes: 684638314215
    num_examples: 134648855
  download_size: 266501092920
  dataset_size: 684638314215
---

Dataset Card for OBELICS

Dataset Description

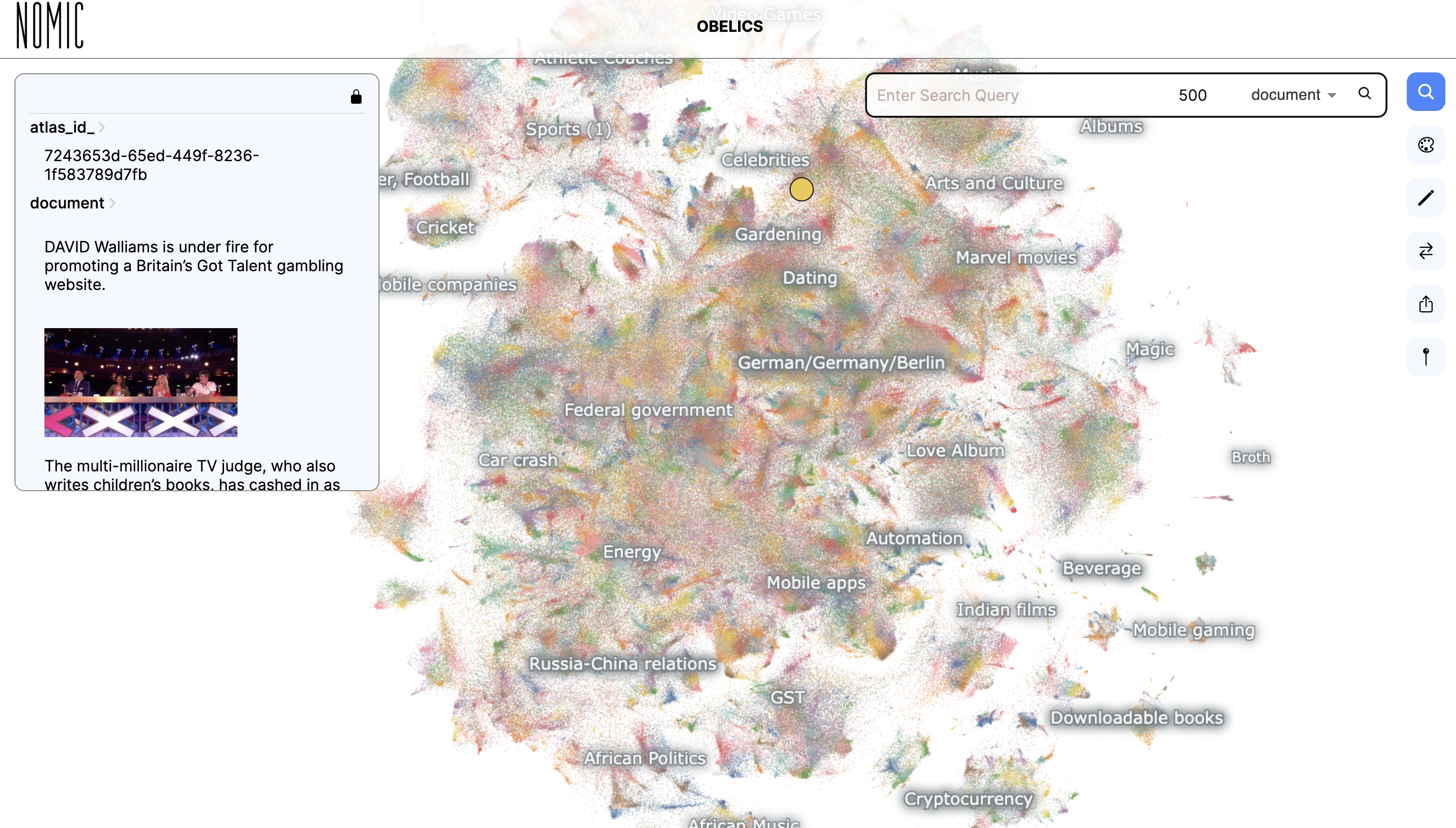

- Visualization of OBELICS web documents: https://huggingface.co/spaces/HuggingFaceM4/obelics_visualization

- Paper: OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents (arXiv:2306.16527)

- Repository: https://github.com/huggingface/OBELICS

- Point of Contact: [email protected]

OBELICS is an open, massive, and curated collection of interleaved image-text web documents, containing 141M English documents, 115B text tokens, and 353M images, extracted from Common Crawl dumps between February 2020 and February 2023. The collection and filtering steps are described in our paper.

Interleaved image-text web documents are a succession of text paragraphs interleaved with images, such as web pages that contain images. Models trained on these web documents outperform vision and language models trained solely on image-text pairs on various benchmarks. They can also generate long and coherent text about a set of multiple images. As an example, we trained IDEFICS, a visual language model that accepts arbitrary sequences of image and text inputs and produces text outputs.

We provide an interactive visualization for exploring the content of OBELICS. The map shows a subset of 11M of the 141M documents.

Data Fields

An example of a sample looks as follows:

```python
# The example has been cropped
{
    'images': [
        'https://cdn.motor1.com/images/mgl/oRKO0/s1/lamborghini-urus-original-carbon-fiber-accessories.jpg',
        None
    ],
    'metadata': '[{"document_url": "https://lamborghinichat.com/forum/news/vw-group-allegedly-receives-offer-to-sell-lamborghini-for-9-2-billion.728/", "unformatted_src": "https://cdn.motor1.com/images/mgl/oRKO0/s1/lamborghini-urus-original-carbon-fiber-accessories.jpg", "src": "https://cdn.motor1.com/images/mgl/oRKO0/s1/lamborghini-urus-original-carbon-fiber-accessories.jpg", "formatted_filename": "lamborghini urus original carbon fiber accessories", "alt_text": "VW Group Allegedly Receives Offer To Sell Lamborghini For $9.2 Billion", "original_width": 1920, "original_height": 1080, "format": "jpeg"}, null]',
    'general_metadata': '{"url": "https://lamborghinichat.com/forum/news/vw-group-allegedly-receives-offer-to-sell-lamborghini-for-9-2-billion.728/", "warc_filename": "crawl-data/CC-MAIN-2021-25/segments/1623488528979.69/warc/CC-MAIN-20210623011557-20210623041557-00312.warc.gz", "warc_record_offset": 322560850, "warc_record_length": 17143}',
    'texts': [
        None,
        'The buyer would get everything, including Lambo\'s headquarters.\n\nThe investment groupQuantum Group AG has submitted a€7.5 billion ($9.2 billion at current exchange rates) offer to purchase Lamborghini from Volkswagen Group, Autocar reports. There\'s no info yet about whether VW intends to accept the offer or further negotiate the deal.\n\nQuantum ... Group Chief Executive Herbert Diess said at the time.'
    ]
}
```

Each sample is composed of the same 4 fields: images, texts, metadata, and general_metadata. images and texts are two lists of the same size where, at each index, exactly one of the two elements is not None. For example, for the interleaved web document <image_1>text<image_2>, we would find [image_1, None, image_2] in images and [None, text, None] in texts.
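The alignment rule above is enough to rebuild a document in reading order. The following is a minimal sketch, assuming a sample is a plain Python dict with the field names above; the helper name `to_interleaved` and its `("image", url)` / `("text", paragraph)` output format are hypothetical choices for illustration.

```python
from typing import Any, Dict, List, Optional, Tuple


def to_interleaved(sample: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Hypothetical helper: merge the aligned `images` and `texts` lists.

    Returns ("image", url) or ("text", paragraph) tuples in document order.
    """
    images: List[Optional[str]] = sample["images"]
    texts: List[Optional[str]] = sample["texts"]
    if len(images) != len(texts):
        raise ValueError("images and texts must have the same length")

    sequence: List[Tuple[str, str]] = []
    for image, text in zip(images, texts):
        # At every index exactly one of the two entries is not None.
        if (image is None) == (text is None):
            raise ValueError("expected exactly one non-None entry per index")
        if image is not None:
            sequence.append(("image", image))
        else:
            sequence.append(("text", text))
    return sequence


# The <image_1>text<image_2> document described above, with placeholder URLs.
sample = {
    "images": ["https://example.com/1.jpg", None, "https://example.com/2.jpg"],
    "texts": [None, "text", None],
}
print(to_interleaved(sample))
# [('image', 'https://example.com/1.jpg'), ('text', 'text'), ('image', 'https://example.com/2.jpg')]
```

Raising on a malformed index, rather than silently skipping it, makes any violation of the one-non-None-per-index invariant visible early.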

Images are stored only as URLs, so users need to download the images themselves, for instance with the img2dataset library.
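Before downloading, the URLs have to be pulled out of the samples. Here is a minimal sketch, assuming samples are dicts shaped like the example above. The helper names `collect_image_urls` and `write_url_list` and the output file name are hypothetical. The one-URL-per-line text file is assumed to be an input format img2dataset accepts.

```python
import tempfile
from pathlib import Path
from typing import Any, Dict, Iterable, List


def collect_image_urls(samples: Iterable[Dict[str, Any]]) -> List[str]:
    """Return the unique image URLs of the given samples, in first-seen order."""
    seen = set()
    urls: List[str] = []
    for sample in samples:
        for url in sample["images"]:
            # Text positions hold None in the images list; skip them.
            if url is not None and url not in seen:
                seen.add(url)
                urls.append(url)
    return urls


def write_url_list(urls: Iterable[str], path: Path) -> None:
    """Write one URL per line (plain-text URL list)."""
    path.write_text("".join(f"{url}\n" for url in urls), encoding="utf-8")


# Toy samples with placeholder URLs; a.jpg appears in both documents.
samples = [
    {"images": ["https://example.com/a.jpg", None], "texts": [None, "caption"]},
    {"images": [None, "https://example.com/a.jpg", "https://example.com/b.png"],
     "texts": ["intro", None, None]},
]
urls = collect_image_urls(samples)
out_path = Path(tempfile.gettempdir()) / "obelics_urls.txt"  # hypothetical file name
write_url_list(urls, out_path)
print(urls)  # ['https://example.com/a.jpg', 'https://example.com/b.png']
```

The resulting file could then be passed to the img2dataset command line, for example `img2dataset --url_list=obelics_urls.txt --output_folder=images`. Check the img2dataset documentation for the resizing, concurrency, and output-format options that suit your setup. Deduplicating first avoids fetching an image several times when it is reused across documents.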

metadata is a JSON string encoding a list with information about each of the images. It has the same length as texts and images, with null at the text positions, and logs relevant information for each image, such as the source document URL, the unformatted source, the alternative text if present, and the original dimensions and format.
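Because the example shows JSON syntax (double quotes, `null`), the field can be decoded with `json.loads`. Python's `ast.literal_eval` would fail on `null`. A minimal sketch on a shortened copy of the example's `metadata` string:

```python
import json
from typing import Any, Dict, List, Optional

# Shortened copy of the `metadata` string from the example sample.
metadata_str = (
    '[{"document_url": "https://lamborghinichat.com/forum/news/'
    'vw-group-allegedly-receives-offer-to-sell-lamborghini-for-9-2-billion.728/", '
    '"src": "https://cdn.motor1.com/images/mgl/oRKO0/s1/'
    'lamborghini-urus-original-carbon-fiber-accessories.jpg", '
    '"alt_text": "VW Group Allegedly Receives Offer To Sell Lamborghini For $9.2 Billion", '
    '"original_width": 1920, "original_height": 1080, "format": "jpeg"}, null]'
)

# json.loads returns a list; JSON null becomes Python None.
metadata: List[Optional[Dict[str, Any]]] = json.loads(metadata_str)

for position, entry in enumerate(metadata):
    if entry is None:
        continue  # this position holds a text paragraph, not an image
    print(position, entry["original_width"], entry["original_height"], entry["format"])
# 0 1920 1080 jpeg
```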

general_metadata is a JSON string encoding a dictionary with the URL of the document and information about its extraction from the Common Crawl snapshots (WARC file name, record offset, and record length).
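The WARC fields point at the exact record a document was extracted from. The following is a minimal sketch of turning them into an HTTP range request. It assumes Common Crawl's public HTTPS endpoint `https://data.commoncrawl.org/`. The helper name `warc_request` is hypothetical, and no network call is made here.

```python
import json
from typing import Any, Dict, Tuple

# `general_metadata` string from the example sample.
general_metadata_str = (
    '{"url": "https://lamborghinichat.com/forum/news/'
    'vw-group-allegedly-receives-offer-to-sell-lamborghini-for-9-2-billion.728/", '
    '"warc_filename": "crawl-data/CC-MAIN-2021-25/segments/1623488528979.69/warc/'
    'CC-MAIN-20210623011557-20210623041557-00312.warc.gz", '
    '"warc_record_offset": 322560850, "warc_record_length": 17143}'
)

# Assumption: public Common Crawl HTTPS endpoint.
CC_BASE_URL = "https://data.commoncrawl.org/"


def warc_request(general_metadata: Dict[str, Any]) -> Tuple[str, Dict[str, str]]:
    """Hypothetical helper: URL and Range header for the document's WARC record."""
    offset = general_metadata["warc_record_offset"]
    length = general_metadata["warc_record_length"]
    url = CC_BASE_URL + general_metadata["warc_filename"]
    # HTTP byte ranges are inclusive, hence "- 1" on the last byte.
    headers = {"Range": f"bytes={offset}-{offset + length - 1}"}
    return url, headers


general_metadata = json.loads(general_metadata_str)
url, headers = warc_request(general_metadata)
print(headers)  # {'Range': 'bytes=322560850-322577992'}
```

Common Crawl WARC files are compressed record by record. The requested byte range should therefore be a self-contained gzip member that can be fetched with any HTTP client and decompressed with `gzip`.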

Size and Data Splits

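In the default configuration, there is only one split, train, which contains 141,047,697 documents. The opt_out_docs_removed_2023_07_12 configuration also has a single train split, with 134,648,855 documents.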

OBELICS with images replaced by their URLs weighs 666.6 GB (😈) in arrow format and 377 GB in the uploaded parquet format.
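The 666.6 figure matches the default configuration's `num_bytes` in the metadata header when it is read in binary units (GiB, 2^30 bytes). A quick check, which also gives the size of the opt-out-filtered configuration:

```python
# num_bytes values from the dataset_info metadata of this card.
NUM_BYTES = {
    "default": 715_724_717_192,
    "opt_out_docs_removed_2023_07_12": 684_638_314_215,
}

GIB = 2**30  # binary gigabyte

for config, num_bytes in NUM_BYTES.items():
    print(f"{config}: {num_bytes / GIB:.1f} GiB ({num_bytes / 1e9:.1f} GB)")
# default: 666.6 GiB (715.7 GB)
# opt_out_docs_removed_2023_07_12: 637.6 GiB (684.6 GB)
```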

Considerations for Using the Data

Discussion of Biases

A subset of ~50k documents from the train split was evaluated using the Data Measurements Tool, with a particular focus on the nPMI metric.

nPMI scores for a word help to identify potentially problematic associations, ranked by how close the association is. nPMI bias scores for paired words help to identify how word associations are skewed between the selected words (Aka et al., 2021). You can select from gender and sexual orientation identity terms that appear in the dataset at least 10 times. The resulting ranked words are those that co-occur with both identity terms. The more positive the score, the more associated the word is with the first identity term; the more negative the score, the more associated the word is with the second identity term.
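To make the sign convention concrete, here is a minimal sketch on toy data. It assumes document-level co-occurrence and the usual definition nPMI(x, y) = log(p(x, y) / (p(x) p(y))) / (-log p(x, y)). The bias score is taken as the difference between the word's nPMI with each identity term, which gives the sign behavior described above. This is an illustration, not the Data Measurements Tool's implementation, which may count co-occurrences differently.

```python
import math
from typing import List, Set


def npmi(docs: List[Set[str]], x: str, y: str) -> float:
    """Normalized PMI of words x and y, from document-level co-occurrence."""
    n = len(docs)
    p_x = sum(x in d for d in docs) / n
    p_y = sum(y in d for d in docs) / n
    p_xy = sum(x in d and y in d for d in docs) / n
    if p_xy == 0.0:
        return -1.0  # never co-occur: conventional lower bound
    if p_xy == 1.0:
        return 1.0  # both words in every document: treat as perfect association
    return math.log(p_xy / (p_x * p_y)) / -math.log(p_xy)


def npmi_bias(docs: List[Set[str]], word: str, term_a: str, term_b: str) -> float:
    """Positive: `word` leans towards term_a; negative: towards term_b."""
    return npmi(docs, word, term_a) - npmi(docs, word, term_b)


# Toy corpus: each document is reduced to its set of words.
docs = [
    {"she", "nurse"},
    {"she", "nurse"},
    {"he", "nurse"},
    {"he", "engineer"},
]
print(f"{npmi_bias(docs, 'nurse', 'she', 'he'):.3f}")  # 0.708
```

Here "nurse" co-occurs more often with "she" than with "he", so its bias score for the pair (she, he) is positive.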

While there was a positive skew of words relating to occupations (e.g., government, jobs) towards she and her, and similar attributions of masculine and feminine words to they and them, more harmful word attributions, such as escort and even colour, were more strongly associated with she, her and with him, his, respectively.

We welcome users to further explore the Data Measurements nPMI Visualizations for OBELICS and to see the idefics-9b model card for further bias considerations.

Opted-out content

To respect the preferences of content creators, we removed from OBELICS all images for which creators explicitly opted out of AI model training. We used the Spawning API to verify that the images in the dataset respect the original copyright owners’ choices.

However, due to an error on our side, we did not remove entire documents (i.e., URLs) that opted out of AI model training. As of July 12, 2023, these documents represent 4.25% of OBELICS. The config opt_out_docs_removed_2023_07_12 applies the correct filtering at the web document level as of July 2023: ds = load_dataset("HuggingFaceM4/OBELICS", "opt_out_docs_removed_2023_07_12").
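Given the size of the filtered configuration, it can be convenient to stream it rather than download it in full. This is a minimal sketch, assuming the `datasets` library is installed. `load_dataset` with a config name, `split`, and `streaming=True` is part of that library's API, while the helper names `load_filtered_obelics` and `take` are hypothetical.

```python
from itertools import islice
from typing import Any, Dict, Iterable, List

REPO_ID = "HuggingFaceM4/OBELICS"
FILTERED_CONFIG = "opt_out_docs_removed_2023_07_12"


def load_filtered_obelics(streaming: bool = True):
    """Hypothetical helper: load the opt-out-filtered train split.

    With streaming=True, samples are read lazily instead of being
    downloaded in full first.
    """
    from datasets import load_dataset  # imported lazily; needs `pip install datasets`

    return load_dataset(REPO_ID, FILTERED_CONFIG, split="train", streaming=streaming)


def take(samples: Iterable[Dict[str, Any]], n: int) -> List[Dict[str, Any]]:
    """Return the first n samples of a (possibly streamed) dataset."""
    return list(islice(samples, n))


# Example usage (fetches data over the network):
# for sample in take(load_filtered_obelics(), 2):
#     print(sample["general_metadata"])
```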

We recommend that users of OBELICS regularly check every document against the Spawning API.

Content warnings

Despite our efforts in filtering, OBELICS contains a small proportion of documents that are not suitable for all audiences. For instance, while navigating the interactive map, you might find the cluster named "Sex", which predominantly contains descriptions of pornographic movies along with pornographic images. Other clusters contain advertising for sex workers or reports of violent shootings. In our experience, these documents represent a small proportion of all the documents.

Terms of Use

By using the dataset, you agree to comply with the original licenses of the source content as well as the dataset license (CC-BY-4.0). Additionally, if you use this dataset to train a Machine Learning model, you agree to disclose your use of the dataset when releasing the model or an ML application using the model.

Licensing Information

License: CC-BY-4.0.

Citation Information

If you are using this dataset, please cite:

```bibtex
@misc{laurencon2023obelics,
      title={OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents},
      author={Hugo Laurençon and Lucile Saulnier and Léo Tronchon and Stas Bekman and Amanpreet Singh and Anton Lozhkov and Thomas Wang and Siddharth Karamcheti and Alexander M. Rush and Douwe Kiela and Matthieu Cord and Victor Sanh},
      year={2023},
      eprint={2306.16527},
      archivePrefix={arXiv},
      primaryClass={cs.IR}
}
```