Altogether-FT

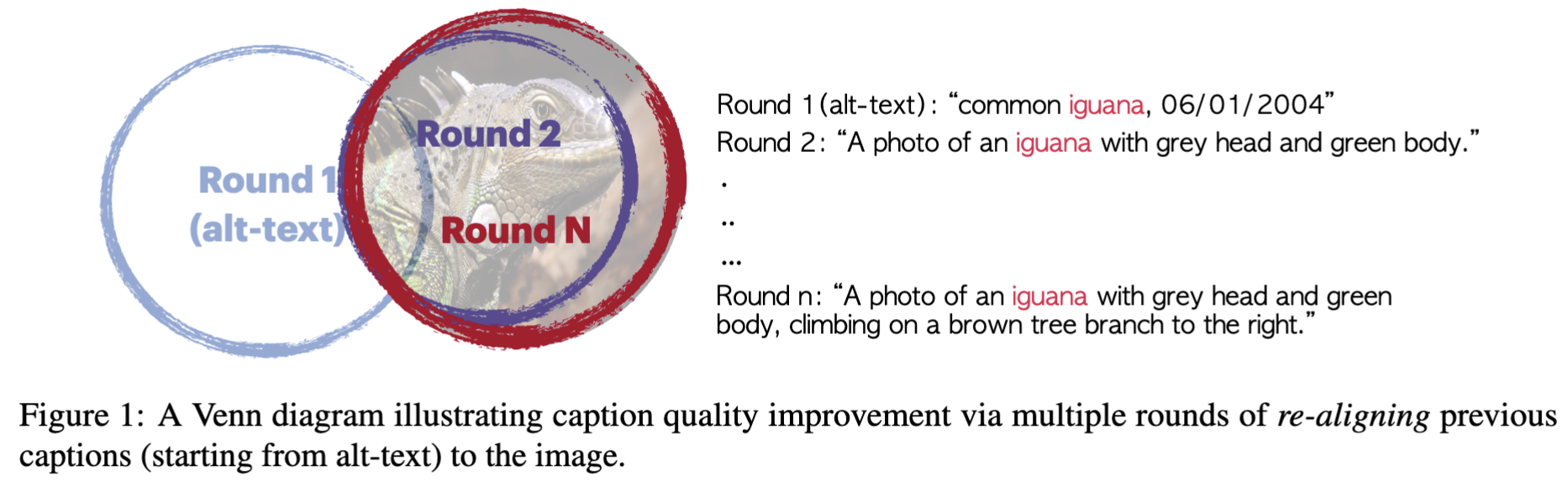

(EMNLP 2024) Altogether-FT is a dataset that transforms (re-aligns) Internet-scale alt-texts into dense captions. It does not caption images from scratch, which tends to produce naive captions of little value to an average user (e.g., "a dog is walking in the park" offers minimal utility to users who are not blind). Instead, it complements and completes alt-texts into dense captions while preserving the supervision in alt-texts written by expert humans and agents around the world, who describe image content that an average annotator may not understand.

It contains 15448 examples for training and 500 examples for evaluation from WIT and DataComp.

We use these re-aligned captions to train MetaCLIPv2.

@inproceedings{xu2024altogether,
  title={Altogether: Image Captioning via Re-aligning Alt-text},
  author={Hu Xu and Po-Yao Huang and Xiaoqing Ellen Tan and Ching-Feng Yeh and Jacob Kahn and Christine Jou and Gargi Ghosh and Omer Levy and Luke Zettlemoyer and Wen-tau Yih and Shang-Wen Li and Saining Xie and Christoph Feichtenhofer},
  journal={arXiv preprint arXiv:xxxx.xxxxx},
  year={2024}
}

Altogether-FT

from datasets import load_dataset

train_dataset = load_dataset("json", data_files="https://huggingface.co/datasets/activebus/Altogether-FT/resolve/main/altogether_ft_train.json", field="data")

eval_dataset = load_dataset("json", data_files="https://huggingface.co/datasets/activebus/Altogether-FT/resolve/main/altogether_ft_eval.json", field="data")
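The `field="data"` argument tells the JSON loader that the records sit under a top-level `data` key rather than at the root of the file. The sketch below mimics that lookup with only the standard library, which may be handy for inspecting a downloaded copy of the raw files without `datasets`. The helper name `records_from_field` and the record keys `alt_text` and `caption` are hypothetical placeholders for illustration, not the dataset's documented schema.

```python
import json


def records_from_field(raw_json: str, field: str = "data") -> list:
    """Return the list of records stored under a top-level key of a JSON document."""
    payload = json.loads(raw_json)
    if not isinstance(payload, dict):
        raise TypeError("expected a JSON object at the top level")
    if field not in payload:
        raise KeyError(f"top-level key {field!r} not found")
    records = payload[field]
    if not isinstance(records, list):
        raise TypeError(f"expected a list under {field!r}")
    return records


# Hypothetical in-memory sample; the real files may use different record keys.
sample = json.dumps({"data": [{"alt_text": "example alt-text", "caption": "example dense caption"}]})

records = records_from_field(sample)
print(len(records), sorted(records[0]))
```

To apply this to the real files, read a downloaded JSON file into a string and pass it to the helper; printing the keys of the first record is a quick way to discover the actual schema.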

License

The majority of Altogether-FT is licensed under CC-BY-NC; however, portions of the project are available under separate license terms: CLIPCap is licensed under MIT, and open_clip is licensed under the https://github.com/mlfoundations/open_clip license.