---
dataset_info:
  features:
  - name: x
    dtype: float64
  - name: 'y'
    dtype: float64
  - name: language
    dtype: string
  - name: corpus
    dtype: string
  splits:
  - name: train
    num_bytes: 247037602
    num_examples: 5785741
  download_size: 112131877
  dataset_size: 247037602
license: apache-2.0
---

What follows is research code. It is by no means optimized for speed, efficiency, or readability.

Data loading, tokenizing and sharding

import os

import numpy as np

import pandas as pd

from sklearn.feature_extraction.text import TfidfTransformer

from sklearn.decomposition import TruncatedSVD

from tqdm.notebook import tqdm

from openTSNE import TSNE

import datashader as ds

import colorcet as cc

from dask.distributed import Client

import dask.dataframe as dd

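import dask_ml.feature_extraction.text  # import the submodule explicitly; a bare "import dask_ml" may not expose it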

import dask.bag as db

from transformers import AutoTokenizer

from datasets import load_dataset

from datasets.utils.py_utils import convert_file_size_to_int

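# NOTE: `tokenizer` is not defined in the original code; load one first,

# e.g. tokenizer = AutoTokenizer.from_pretrained(...)

def batch_tokenize(batch):

    # Join each example's tokens with spaces so that str.split can recover them later.

    return {'tokenized': [' '.join(e.tokens) for e in tokenizer(batch['text']).encodings]}  # "text" column is hard-coded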

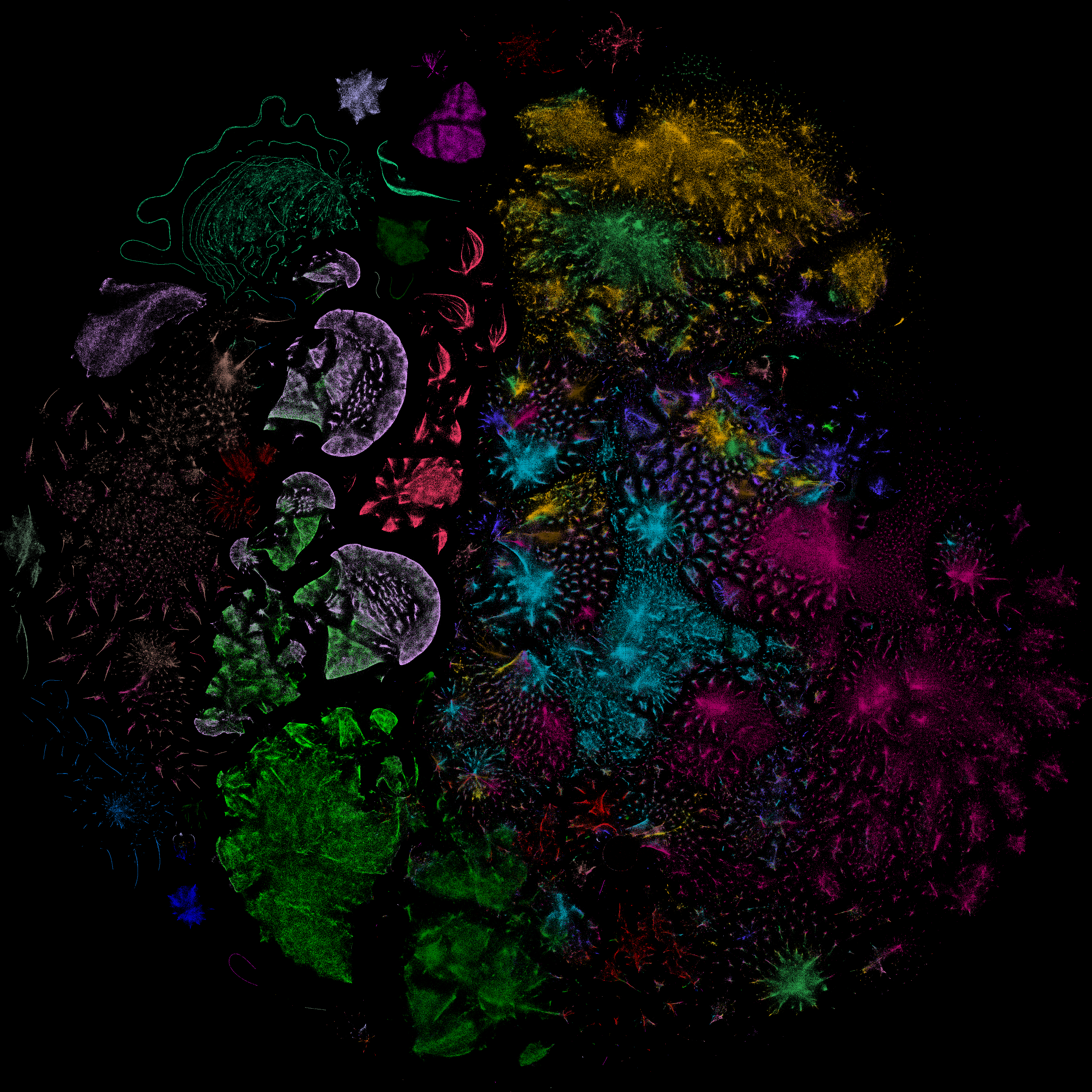

# The original viz used a subset of the ROOTS Corpus.

# More info on the entire dataset here: https://huggingface.co/bigscience-data

# And here: https://arxiv.org/abs/2303.03915

dset = load_dataset(..., split="train")

dset = dset.map(batch_tokenize, batched=True, batch_size=64, num_proc=28)

dset_name = "roots_subset"

max_shard_size = convert_file_size_to_int('300MB')

dataset_nbytes = dset.data.nbytes

num_shards = int(dataset_nbytes / max_shard_size) + 1

num_shards = max(num_shards, 1)

print(f"Sharding into {num_shards} files.")

os.makedirs(f"{dset_name}/tokenized", exist_ok=True)

for shard_index in tqdm(range(num_shards)):

    shard = dset.shard(num_shards=num_shards, index=shard_index, contiguous=True)

    shard.to_parquet(f"{dset_name}/tokenized/tokenized-{shard_index:03d}.parquet")
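The shard count above is computed as a floor division plus one. The sketch below reproduces that arithmetic with the standard library only, so the behaviour at the boundaries is easy to check. The function names, the example sizes, and the assumption that `'300MB'` means 300 decimal megabytes are illustrative and not taken from the original code. The boundary helper shows one common way to cut rows into contiguous ranges; the library's exact boundaries may differ.

```python
def shard_count(dataset_nbytes, max_shard_bytes):
    """Mirror the calculation used above: floor division plus one, at least 1."""
    return max(int(dataset_nbytes / max_shard_bytes) + 1, 1)


def contiguous_bounds(num_rows, num_shards):
    """Split num_rows into num_shards contiguous (start, stop) ranges of near-equal size."""
    base, extra = divmod(num_rows, num_shards)
    bounds, start = [], 0
    for i in range(num_shards):
        # The first `extra` shards receive one additional row.
        stop = start + base + (1 if i < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


MAX_SHARD_BYTES = 300 * 10**6  # assumed value of '300MB' (decimal megabytes)

print(shard_count(750_000_000, MAX_SHARD_BYTES))  # hypothetical 750 MB dataset
print(shard_count(600_000_000, MAX_SHARD_BYTES))  # exact multiple of the shard size
print(contiguous_bounds(10, 3))
```

Note that an exact multiple of the shard size still yields one extra shard with this formula, so every shard ends up somewhat below the size limit.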

Embedding

client = Client() # To keep track of dask computation

client

df = dd.read_parquet(f'{dset_name}/tokenized/')

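# NOTE: `vocab` is not defined in the original code; it is presumably the tokenizer's vocabulary (e.g. tokenizer.get_vocab()).

vect = dask_ml.feature_extraction.text.CountVectorizer(tokenizer=str.split,

                                                       token_pattern=None,

                                                       vocabulary=vocab)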

tokenized_bag = df['tokenized'].to_bag()

X = vect.transform(tokenized_bag)

counts = X.compute()

client.shutdown()

tfidf_transformer = TfidfTransformer(sublinear_tf=True, norm="l2")

tfidf = tfidf_transformer.fit_transform(counts)

svd = TruncatedSVD(n_components=160)

X_svd = svd.fit_transform(tfidf)

tsne = TSNE(

    perplexity=30,  # not sure which parameter setting produced the plot

    n_jobs=28,

    random_state=42,

    verbose=True,

)

tsne_embedding = tsne.fit(X_svd)  # fit on the SVD-reduced TF-IDF matrix, not on the raw dask counts X
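The TF-IDF step above uses `sublinear_tf=True` and `norm="l2"`. As a rough illustration of what that weighting does, here is a minimal pure-Python sketch on a toy count matrix. It assumes scikit-learn's default smoothed IDF, `ln((1 + n) / (1 + df)) + 1`; the function name and the toy data are made up for this example, and it is not a replacement for `TfidfTransformer`.

```python
import math


def sublinear_tfidf(counts):
    """Rows are documents, columns are terms; returns L2-normalised TF-IDF rows."""
    n_docs = len(counts)
    n_terms = len(counts[0])
    # Document frequency: number of documents in which each term occurs.
    df = [sum(1 for row in counts if row[j] > 0) for j in range(n_terms)]
    # Smoothed inverse document frequency (assumed scikit-learn default).
    idf = [math.log((1 + n_docs) / (1 + d)) + 1 for d in df]
    result = []
    for row in counts:
        # Sublinear term frequency: a nonzero count c becomes 1 + ln(c).
        weighted = [(1 + math.log(c)) * idf[j] if c > 0 else 0.0
                    for j, c in enumerate(row)]
        # L2 normalisation so that every non-empty document has unit length.
        norm = math.sqrt(sum(w * w for w in weighted))
        result.append([w / norm if norm > 0 else 0.0 for w in weighted])
    return result


toy_counts = [[3, 0, 1], [0, 2, 1], [1, 1, 0]]  # toy data: 3 documents, 3 terms
rows = sublinear_tfidf(toy_counts)
for row in rows:
    print([round(w, 3) for w in row])
```

The logarithm dampens the effect of very frequent tokens within a document, and the L2 normalisation removes the influence of document length before the SVD.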

Plotting

df = pd.DataFrame(data=tsne_embedding, columns=['x','y'])

agg = ds.Canvas(plot_height=600, plot_width=600).points(df, 'x', 'y')

img = ds.tf.shade(agg, cmap=cc.fire, how='eq_hist')

ds.tf.set_background(img, "black")
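Conceptually, the `Canvas(...).points(...)` call above bins the embedded points into a pixel grid and counts how many fall into each cell before the counts are mapped to colours. The sketch below shows that binning idea in plain Python. It is only an illustration under simplifying assumptions (ranges taken from the data, hypothetical function name) and is not how datashader is implemented.

```python
def rasterize_counts(xs, ys, width, height):
    """Count points per cell of a height x width grid spanning the data range."""
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    # Guard against a zero-width range when all points share a coordinate.
    x_span = (x_max - x_min) or 1.0
    y_span = (y_max - y_min) or 1.0
    grid = [[0] * width for _ in range(height)]
    for x, y in zip(xs, ys):
        # Scale to [0, width] / [0, height] and clamp the maximum into the last cell.
        col = min(int((x - x_min) / x_span * width), width - 1)
        row = min(int((y - y_min) / y_span * height), height - 1)
        grid[row][col] += 1
    return grid


grid = rasterize_counts([0.0, 0.1, 1.0], [0.0, 0.1, 1.0], width=2, height=2)
print(grid)
```

With millions of points, most of the visual structure comes from these per-pixel counts, which is why a histogram-equalised colour mapping such as `how='eq_hist'` helps reveal both dense and sparse regions.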