---
license: cc-by-4.0
datasets:
- abisee/cnn_dailymail
language:
- en
tags:
- NLP
- Text-Summarization
- CNN
metrics:
- rouge
pipeline_tag: summarization
library_name: keras
---

Seq2Seq Model with Attention for Text Summarization

This repository contains a Sequence-to-Sequence (Seq2Seq) model for abstractive text summarization, trained on the CNN/DailyMail dataset. The model is built with Keras and initializes its embedding layers from pre-trained GloVe vectors. It uses an encoder-decoder architecture based on LSTM layers. The code shown here does not yet include an attention layer; adding one is listed under Future Work.

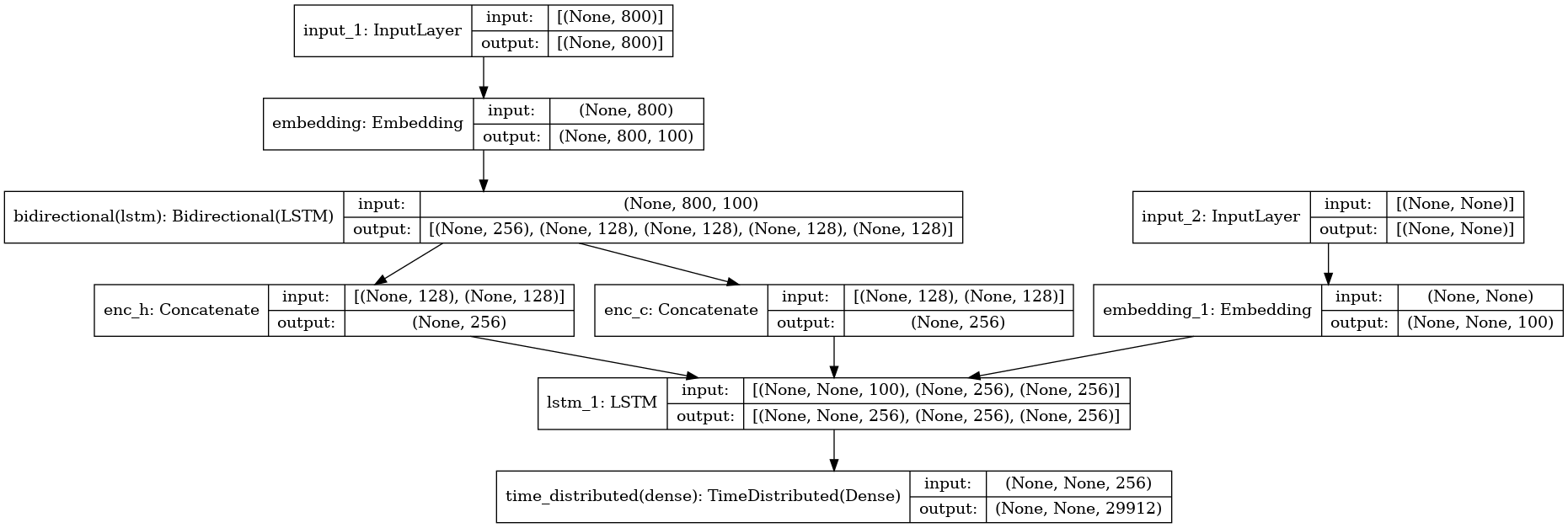

Model Architecture

The model follows the classic encoder-decoder structure, with attention as an optional extension for handling long input sequences:

- Embedding Layer: Uses pre-trained GloVe embeddings (100-dimensional) for both the input (article) and target (summary) texts.

- Encoder: A bidirectional LSTM to encode the input sequence. The forward and backward hidden states are concatenated.

- Decoder: An LSTM initialized with the encoder's hidden and cell states to generate the target sequence (summary).

- Attention Mechanism: The base code does not implement attention. It can be added so that the decoder focuses on the relevant parts of the input sequence at each decoding step.
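
To make the attention idea concrete, here is a minimal NumPy sketch of dot-product (Luong-style) attention for a single decoding step. It is an illustration only and is not part of this repository's code: the function name, the toy shapes, and the random inputs are assumptions. In the Keras model, it would also require the encoder to return one output per source token (`return_sequences=True`), which the encoder shown on this page does not do.

```python
import numpy as np

def dot_product_attention(decoder_state, encoder_outputs):
    """Dot-product attention for one decoding step (illustrative sketch).

    decoder_state:   shape (hidden,), the current decoder hidden state
    encoder_outputs: shape (src_len, hidden), one vector per source token
    """
    scores = encoder_outputs @ decoder_state      # similarity per source token
    scores = scores - scores.max()                # stabilize the softmax numerically
    weights = np.exp(scores) / np.exp(scores).sum()  # attention weights, sum to 1
    context = weights @ encoder_outputs           # weighted sum of encoder outputs
    return context, weights

# Toy inputs (assumed sizes): 5 source tokens, hidden size 8
rng = np.random.default_rng(0)
encoder_outputs = rng.normal(size=(5, 8))
decoder_state = rng.normal(size=8)

context, weights = dot_product_attention(decoder_state, encoder_outputs)
```

The context vector would typically be concatenated with the decoder output before the final softmax layer, so the prediction at each step can draw on the most relevant source tokens.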

Embeddings

We use 100-dimensional GloVe embeddings pre-trained on a large text corpus. A separate embedding matrix is built for the input (article) vocabulary and for the output (summary) vocabulary. Words that have no GloVe vector keep an all-zero row.

    import numpy as np

    # Load the GloVe vectors into a word -> vector lookup
    embedding_index = {}
    embed_dim = 100
    with open('../input/glove6b100dtxt/glove.6B.100d.txt', encoding='utf-8') as f:
        for line in f:
            values = line.split()
            word = values[0]
            coefs = np.asarray(values[1:], dtype='float32')
            embedding_index[word] = coefs

    # Embedding matrix for the input (articles).
    # t_tokenizer and t_max_features come from the preprocessing step (not shown).
    t_embed = np.zeros((t_max_features, embed_dim))
    for word, i in t_tokenizer.word_index.items():
        vec = embedding_index.get(word)
        if i < t_max_features and vec is not None:
            t_embed[i] = vec

    # Embedding matrix for the output (summaries).
    # s_tokenizer and s_max_features also come from preprocessing.
    s_embed = np.zeros((s_max_features, embed_dim))
    for word, i in s_tokenizer.word_index.items():
        vec = embedding_index.get(word)
        if i < s_max_features and vec is not None:
            s_embed[i] = vec

Encoder

A bidirectional LSTM is used for encoding the input text. The forward and backward hidden and cell states are concatenated to pass as the initial states to the decoder.

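    from tensorflow.keras.layers import Input, Embedding, LSTM, Bidirectional, Concatenate

    latent_dim = 128

    enc_input = Input(shape=(maxlen_text,))
    # Frozen GloVe embeddings for the article vocabulary
    enc_embed = Embedding(t_max_features, embed_dim, input_length=maxlen_text, weights=[t_embed], trainable=False)(enc_input)
    # return_state=True yields the final hidden (h) and cell (c) states of both directions
    enc_lstm = Bidirectional(LSTM(latent_dim, return_state=True))
    enc_output, enc_fh, enc_fc, enc_bh, enc_bc = enc_lstm(enc_embed)

    # Concatenate the forward and backward states
    enc_h = Concatenate(axis=-1)([enc_fh, enc_bh])
    enc_c = Concatenate(axis=-1)([enc_fc, enc_bc])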

Decoder

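The decoder is an LSTM that takes the encoder's final states as its initial states and generates the output summary sequence. Its hidden size is latent_dim * 2 so that it matches the concatenated forward and backward encoder states.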

    from tensorflow.keras.layers import Input, Embedding, LSTM, Dense, TimeDistributed

    dec_input = Input(shape=(None,))
    # Frozen GloVe embeddings for the summary vocabulary
    dec_embed = Embedding(s_max_features, embed_dim, weights=[s_embed], trainable=False)(dec_input)
    # latent_dim * 2 matches the size of the concatenated encoder states
    dec_lstm = LSTM(latent_dim * 2, return_sequences=True, return_state=True, dropout=0.3, recurrent_dropout=0.2)
    dec_outputs, _, _ = dec_lstm(dec_embed, initial_state=[enc_h, enc_c])

    # Dense layer with softmax activation for final output
    dec_dense = TimeDistributed(Dense(s_max_features, activation='softmax'))
    dec_output = dec_dense(dec_outputs)

Model Summary

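The full Seq2Seq model takes the encoder and decoder inputs and outputs a probability distribution over the summary vocabulary at each decoding step. It is compiled with sparse categorical cross-entropy loss and the RMSprop optimizer.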
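
The `model` object used in the Training section is not constructed in the snippets on this page. The following is a minimal sketch of how the encoder and decoder pieces could be wired together and compiled. It uses toy sizes and randomly initialized embeddings instead of GloVe so that it runs on its own; those sizes and the omission of dropout are assumptions for illustration, not the settings used for the released model.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Toy sizes, chosen only so the sketch runs quickly (assumptions)
maxlen_text, t_max_features, s_max_features = 20, 50, 40
embed_dim, latent_dim = 8, 4

# Encoder: bidirectional LSTM, keeping only the final states
enc_input = keras.Input(shape=(maxlen_text,))
enc_embed = layers.Embedding(t_max_features, embed_dim)(enc_input)
enc_lstm = layers.Bidirectional(layers.LSTM(latent_dim, return_state=True))
_, enc_fh, enc_fc, enc_bh, enc_bc = enc_lstm(enc_embed)
enc_h = layers.Concatenate(axis=-1)([enc_fh, enc_bh])
enc_c = layers.Concatenate(axis=-1)([enc_fc, enc_bc])

# Decoder: LSTM initialized with the concatenated encoder states
dec_input = keras.Input(shape=(None,))
dec_embed = layers.Embedding(s_max_features, embed_dim)(dec_input)
dec_lstm = layers.LSTM(latent_dim * 2, return_sequences=True, return_state=True)
dec_outputs, _, _ = dec_lstm(dec_embed, initial_state=[enc_h, enc_c])
dec_output = layers.TimeDistributed(
    layers.Dense(s_max_features, activation='softmax'))(dec_outputs)

# Two inputs (article, shifted summary), one output (next-token distribution)
model = keras.Model([enc_input, dec_input], dec_output)
model.compile(loss='sparse_categorical_crossentropy', optimizer='rmsprop')
```

Calling `model.summary()` afterwards prints the layer shapes, which is a quick way to confirm that the decoder's hidden size matches the concatenated encoder states.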

Model Visualization

A diagram of the model is generated using Keras' plot_model function (keras.utils.plot_model).

Training

The model is trained with a batch size of 128 for at most 10 epochs. Early stopping monitors the validation loss and halts training once it has not improved for 2 consecutive epochs, which helps prevent overfitting.

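    from tensorflow import keras

    # Stop when validation loss has not improved for 2 consecutive epochs
    early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', mode='min', verbose=1, patience=2)

    # Teacher forcing: the decoder input is the summary without its last token,
    # and the target is the same summary shifted one step to the left.
    model.fit([train_x, train_y[:, :-1]],
              train_y.reshape(train_y.shape[0], train_y.shape[1], 1)[:, 1:],
              epochs=10,
              callbacks=[early_stop],
              batch_size=128,
              verbose=2,
              validation_data=([val_x, val_y[:, :-1]], val_y.reshape(val_y.shape[0], val_y.shape[1], 1)[:, 1:]))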
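
The slicing in the `model.fit` call implements teacher forcing: at each step the decoder sees the true previous token and is asked to predict the next one. The toy example below shows what the two slices produce. The token ids (1 for start, 2 for end, 0 for padding) are assumptions for illustration; the actual ids depend on how the summaries were tokenized.

```python
import numpy as np

# Toy batch of two padded summaries (assumed ids: 1 = start, 2 = end, 0 = padding)
train_y = np.array([[1, 7, 8, 9, 2],
                    [1, 5, 6, 2, 0]])

# Decoder input: every token except the last one
decoder_inputs = train_y[:, :-1]

# Decoder target: every token except the first one, with a trailing axis
# of size 1, matching the reshape used in the model.fit call
decoder_targets = train_y.reshape(train_y.shape[0], train_y.shape[1], 1)[:, 1:]

print(decoder_inputs[0])          # [1 7 8 9]
print(decoder_targets[0, :, 0])   # [7 8 9 2]
```

At position t the input is token t and the target is token t + 1, so the two arrays always have the same sequence length.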

Dataset

The CNN/DailyMail dataset is used for training and validation. It contains news articles and their corresponding summaries, which makes it suitable for the text summarization task.

- Train set: Used to train the model on article-summary pairs.

- Validation set: Used for model performance evaluation and to apply early stopping.
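
As a rough illustration of how the validation loss drives early stopping, the sketch below reimplements the patience rule in plain Python. It is a simplified stand-in for the Keras `EarlyStopping` callback, not its actual code, and the loss values are made up for the example.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the 1-based epoch at which training would stop, or None.

    Training stops once the validation loss has failed to improve on the
    best value seen so far for `patience` consecutive epochs.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, wait = loss, 0   # improvement: remember it and reset the counter
        else:
            wait += 1              # no improvement this epoch
            if wait >= patience:
                return epoch
    return None

# Hypothetical validation losses: the best value is reached at epoch 3
val_losses = [2.9, 2.5, 2.4, 2.45, 2.41, 2.3]
stopped_at = early_stopping_epoch(val_losses, patience=2)
print(stopped_at)  # 5
```

Note that the run stops at epoch 5 even though epoch 6 would have improved. A larger patience trades longer training for a lower chance of stopping too early.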

Requirements

- Python 3.x

- Keras

- TensorFlow

- NumPy

- GloVe Embeddings

How to Run

- Download the CNN/DailyMail dataset and pre-trained GloVe embeddings.

- Preprocess the dataset and prepare the embedding matrices.

- Train the model using the provided code.

- Evaluate the model on a validation set and generate summaries for new text inputs.
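
The model card lists ROUGE as its metric, but no evaluation code is shown. For the evaluation step, a simplified ROUGE-1 F1 score (unigram overlap) can be sketched in a few lines. This version uses lowercasing and whitespace tokenization only, which is an assumption made for brevity. For reported results, an established ROUGE implementation would be the safer choice.

```python
from collections import Counter

def rouge1_f1(reference, candidate):
    """Simplified ROUGE-1 F1: unigram overlap between two strings."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Clipped overlap: each word counts at most as often as it appears in both
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)

reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"
score = rouge1_f1(reference, candidate)   # 5 of 6 unigrams match in both directions
```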

Results

The model generates abstractive summaries of news articles. You can tune the latent dimension and the embedding size, or add attention, to improve performance.

Future Work

- Attention Mechanism: Implementing Bahdanau or Luong Attention for better results.

- Beam Search: Incorporating beam search for enhanced summary generation.
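
To show what beam search would change at inference time, here is a small self-contained sketch. It is not part of the current code. `step_fn` is a hypothetical callable that returns next-token probabilities for a given prefix; in practice it would wrap the trained decoder. The toy probability table is invented for the example, and no length normalization is applied.

```python
import math

def beam_search(step_fn, start_token, end_token, beam_width=3, max_len=10):
    """Return the highest-scoring sequence found by beam search.

    step_fn(prefix) -> dict mapping each candidate next token to its probability.
    """
    beams = [([start_token], 0.0)]   # (sequence, summed log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            for token, prob in step_fn(seq).items():
                if prob > 0:
                    candidates.append((seq + [token], score + math.log(prob)))
        # Keep only the beam_width best hypotheses
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == end_token:
                finished.append((seq, score))
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)           # fall back to unfinished hypotheses
    return max(finished, key=lambda c: c[1])[0]

def toy_step(prefix):
    """Hypothetical next-token distribution that depends on the last token only."""
    table = {
        "<s>": {"a": 0.6, "b": 0.4},
        "a": {"</s>": 0.55, "b": 0.45},
        "b": {"</s>": 0.9, "a": 0.1},
    }
    return table[prefix[-1]]

greedy = beam_search(toy_step, "<s>", "</s>", beam_width=1)
wider = beam_search(toy_step, "<s>", "</s>", beam_width=2)
print(greedy)  # ['<s>', 'a', '</s>']
print(wider)   # ['<s>', 'b', '</s>']
```

With a beam width of 1 the search is greedy and commits to "a", ending with total probability 0.33. With a width of 2 it keeps "b" alive and finds the sequence with probability 0.36, which is the kind of gain beam search aims for.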