metadata

license: apache-2.0

language:

- multilingual

- af

- am

- ar

- az

- be

- bg

- bn

- ca

- ceb

- co

- cs

- cy

- da

- de

- el

- en

- eo

- es

- et

- eu

- fa

- fi

- fil

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- haw

- hi

- hmn

- ht

- hu

- hy

- ig

- is

- it

- iw

- ja

- jv

- ka

- kk

- km

- kn

- ko

- ku

- ky

- la

- lb

- lo

- lt

- lv

- mg

- mi

- mk

- ml

- mn

- mr

- ms

- mt

- my

- ne

- nl

- 'no'

- ny

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- sm

- sn

- so

- sq

- sr

- st

- su

- sv

- sw

- ta

- te

- tg

- th

- tr

- uk

- und

- ur

- uz

- vi

- xh

- yi

- yo

- zh

- zu

library_name: transformers

Links for Reference

- Repository: https://github.com/kaistAI/LangBridge

- Paper: LangBridge: Multilingual Reasoning Without Multilingual Supervision

- Point of Contact: [email protected]

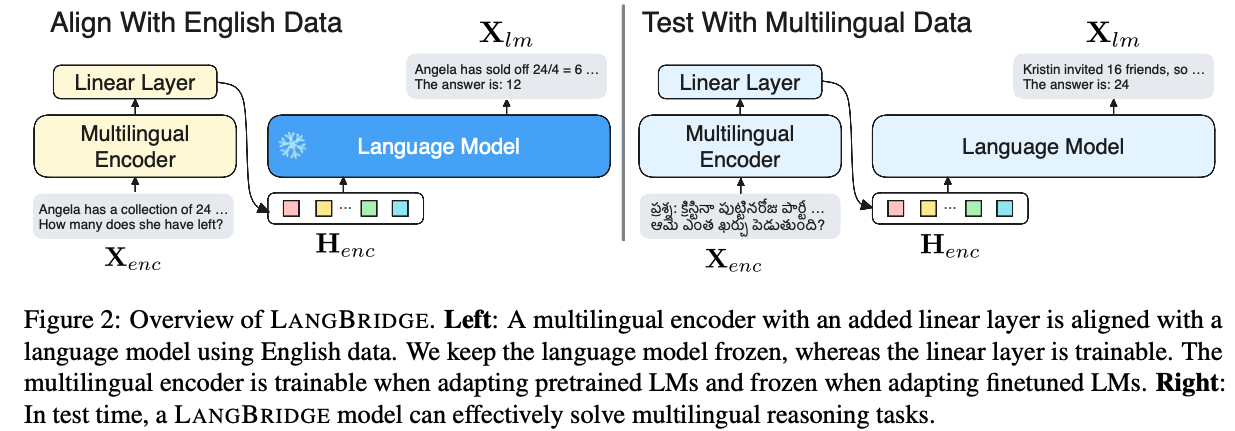

TL;DR

🤔 LMs that are good at reasoning are mostly English-centric (MetaMath, Orca 2, etc.).

😃 Let’s adapt them to solve multilingual tasks, BUT without using multilingual data!

LangBridge “bridges” the mT5 encoder and the target LM while using only English data for training. At test time, LangBridge models can solve multilingual reasoning tasks effectively.
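To make the “bridge” idea more concrete, here is a toy, pure-Python sketch. It assumes the bridge is a single linear projection that maps encoder hidden states into the LM's embedding space, and that the projected vectors are prepended to the LM's input embeddings as a soft prompt. That design, along with every name and dimension below, is an illustrative assumption and is not taken from this card; the repository holds the actual implementation.

```python
import random


def linear(x, weight, bias):
    """Apply y = W x + b to one vector (weight has shape out_dim x in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def bridge(encoder_states, weight, bias, lm_token_embeddings):
    """Project encoder states into the LM embedding space and prepend them.

    Assumption: the projected states act as a soft prompt placed in front of
    the LM's own token embeddings.
    """
    soft_prompt = [linear(h, weight, bias) for h in encoder_states]
    return soft_prompt + lm_token_embeddings


random.seed(0)
ENC_DIM, LM_DIM = 4, 6  # toy sizes, hypothetical

# Stand-ins for multilingual encoder outputs (3 tokens) and LM embeddings (2 tokens).
encoder_states = [[random.gauss(0, 1) for _ in range(ENC_DIM)] for _ in range(3)]
lm_token_embeddings = [[0.0] * LM_DIM for _ in range(2)]

# The only parameters of this toy bridge: one projection matrix and a bias.
weight = [[random.gauss(0, 0.1) for _ in range(ENC_DIM)] for _ in range(LM_DIM)]
bias = [0.0] * LM_DIM

lm_inputs = bridge(encoder_states, weight, bias, lm_token_embeddings)
print(len(lm_inputs), len(lm_inputs[0]))  # sequence length, embedding width
```

In this sketch the LM never sees the multilingual text directly; it only receives vectors that already live in its own embedding space.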

Usage

Please refer to the GitHub repository for detailed usage examples.

Related Models

Check out other LangBridge models.

We have:

- Llama 2

- Llemma

- MetaMath

- Code Llama

- Orca 2

Citation

If you find this model helpful, please consider citing our paper!

BibTeX:

@misc{yoon2024langbridge,

title={LangBridge: Multilingual Reasoning Without Multilingual Supervision},

author={Dongkeun Yoon and Joel Jang and Sungdong Kim and Seungone Kim and Sheikh Shafayat and Minjoon Seo},

year={2024},

eprint={2401.10695},

archivePrefix={arXiv},

primaryClass={cs.CL}

}