csg-wukong-ablation-chinese-random [中文] [English]

[OpenCSG Community] [github] [wechat] [Twitter]

**Chinese Fineweb Edu** dataset is a meticulously constructed high-quality Chinese pre-training corpus, specifically designed for natural language processing tasks in the education domain. This dataset undergoes a rigorous selection and deduplication process, using a scoring model trained on a small amount of data for evaluation. From vast amounts of raw data, it extracts high-value education-related content, ensuring the quality and diversity of the data. Ultimately, the dataset contains approximately 90 million high-quality Chinese text entries, with a total size of about 300GB.Selection Method

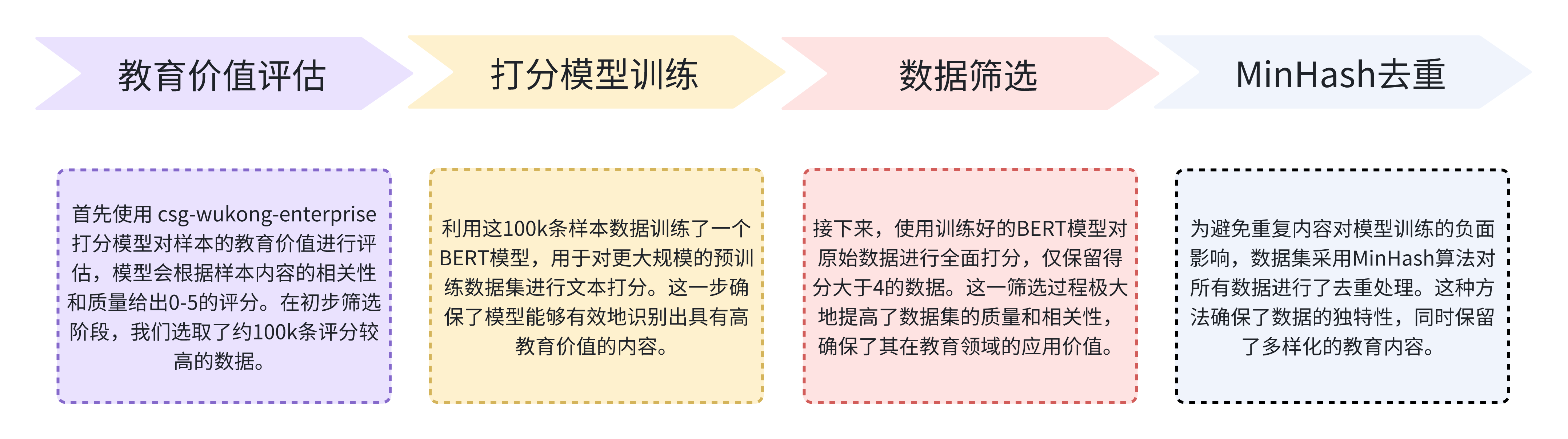

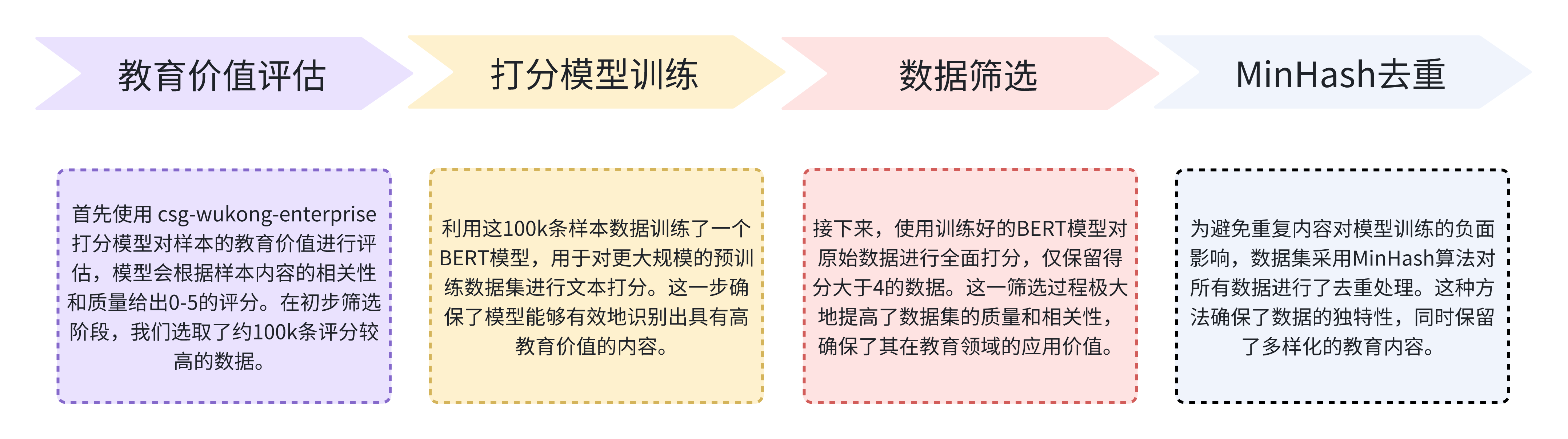

During the data selection process, the Chinese Fineweb Edu dataset adopted a strategy similar to that of Fineweb-Edu, with a focus on the educational value and content quality of the data. The specific selection steps are as follows:

- Educational Value Assessment: Initially, the csg-wukong-enterprise scoring model was used to evaluate the educational value of the samples. The model provided a score ranging from 0 to 5 based on the relevance and quality of the content. In the preliminary selection phase, we selected approximately 100,000 high-scoring samples.

- Scoring Model Training: Using these 100,000 samples, a BERT model was trained to score a larger pre-training dataset. This step ensured that the model could effectively identify content with high educational value.

- Data Selection: Next, the trained BERT model was used to comprehensively score the raw data, retaining only data with a score greater than 4. This selection process significantly enhanced the quality and relevance of the dataset, ensuring its applicability in the educational domain.

- MinHash Deduplication: To avoid the negative impact of duplicate content on model training, the dataset was deduplicated using the MinHash algorithm. This method ensured the uniqueness of the data while preserving a diverse range of educational content.

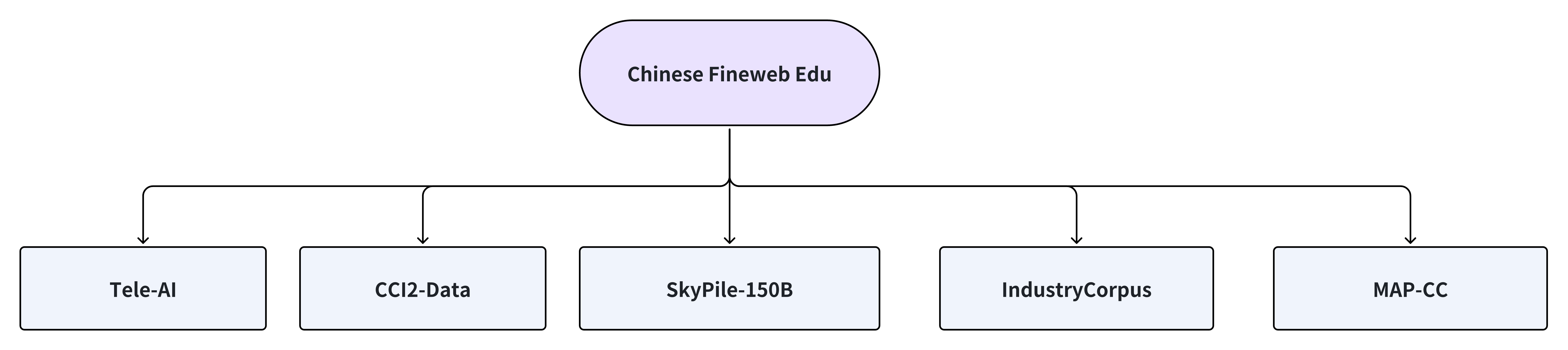

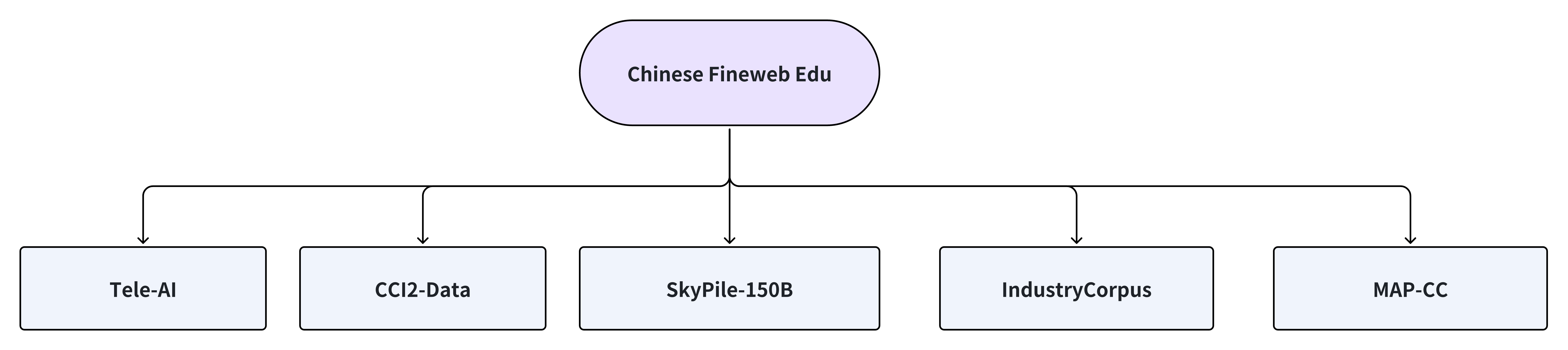

Original Data Sources

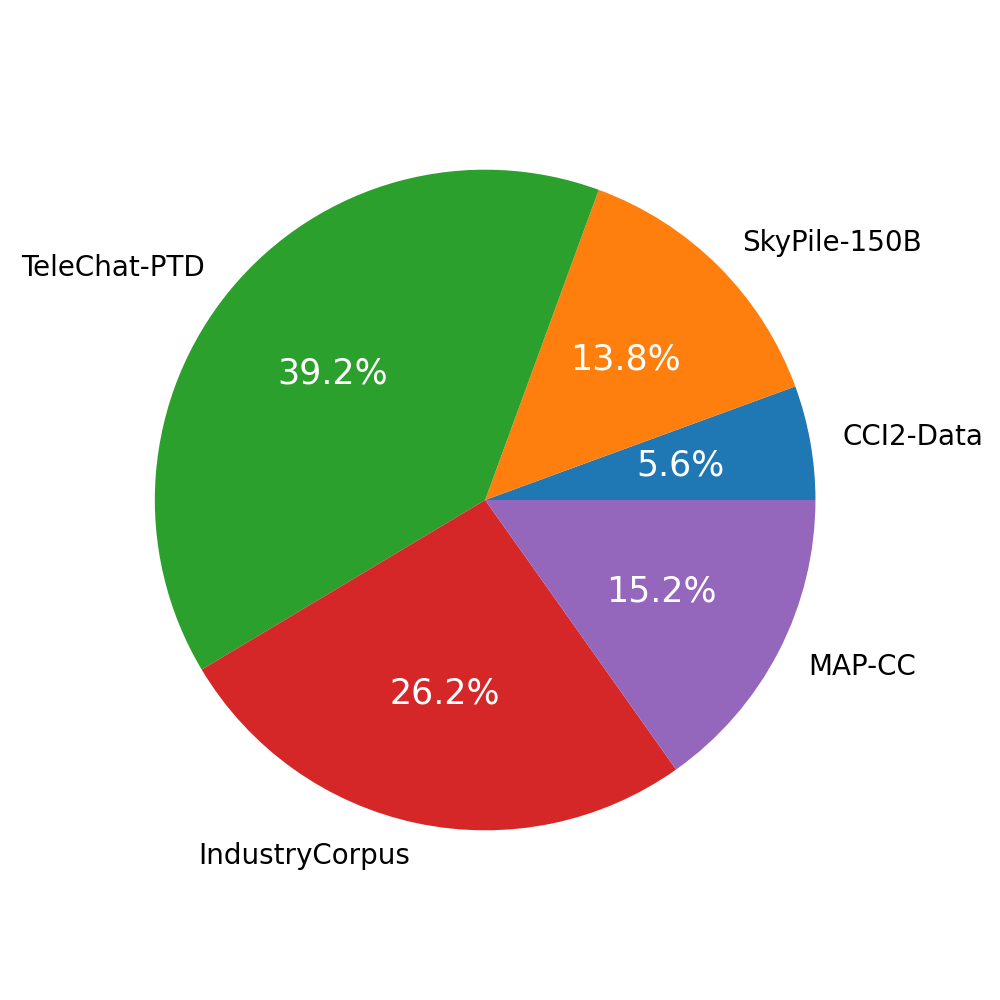

The Chinese Fineweb Edu dataset is built upon a wide range of original data sources, encompassing several mainstream Chinese pre-training datasets. While these datasets vary in scale and coverage, through meticulous selection and processing, they have collectively laid a solid foundation for the Chinese Fineweb Edu dataset. The main data sources include:

- CCI2-Data: A high-quality and reliable Chinese safety dataset that has undergone rigorous cleaning, deduplication, and quality filtering processes.

- SkyPile-150B: A large-scale dataset with 150 billion tokens sourced from the Chinese internet, processed with complex filtering and deduplication techniques.

- IndustryCorpus: A Chinese pre-training dataset covering multiple industries, containing 1TB of Chinese data, particularly suited for industry-specific model training.

- Tele-AI: A high-quality, large-scale Chinese dataset extracted from the pre-training corpus of the telecom large language model TeleChat, containing approximately 270 million pure Chinese texts that have been strictly filtered and deduplicated.

- MAP-CC: A massive Chinese pre-training corpus combining high-quality data from multiple sources, specifically optimized for training Chinese language models.

These diverse data sources not only provide a rich content foundation for the Chinese Fineweb Edu dataset but also enhance its broad applicability and comprehensiveness by integrating data from different fields and sources. This data integration approach ensures that the model can maintain excellent performance and high-quality output when faced with diverse educational scenarios.

Scoring Model

We utilized OpenCSG's enterprise-grade large language model, csg-wukong-enterprise, as the scoring model. By designing prompts, we enabled the model to score each pre-training sample on a scale of 0 to 5, divided into six levels:

0 points: If the webpage provides no educational value whatsoever and consists entirely of irrelevant information (e.g., advertisements or promotional materials).

1 point: If the webpage offers some basic information related to educational topics, even if it includes some unrelated or non-academic content (e.g., advertisements or promotional materials).

2 points: If the webpage contains certain elements related to education but does not align well with educational standards. It might mix educational content with non-educational material, provide a shallow overview of potentially useful topics, or present information in an incoherent writing style.

3 points: If the webpage is suitable for educational use and introduces key concepts related to school curricula. The content is coherent but may not be comprehensive or might include some irrelevant information. It could resemble the introductory section of a textbook or a basic tutorial, suitable for learning but with notable limitations, such as covering concepts that might be too complex for middle school students.

4 points: If the webpage is highly relevant and beneficial for educational purposes at or below the high school level, exhibiting a clear and consistent writing style. It might resemble a chapter in a textbook or tutorial, providing substantial educational content, including exercises and solutions, with minimal irrelevant information. The concepts are not overly complex for middle school students. The content is coherent, with clear emphasis, and valuable for structured learning.

5 points: If the excerpt demonstrates excellent educational value, being entirely suitable for elementary or middle school instruction. It follows a detailed reasoning process, with a writing style that is easy to understand, providing deep and comprehensive insights into the subject without including any non-educational or overly complex content.

We recorded 100,000 data samples along with their scores, creating the dataset fineweb_edu_classifier_chinese_data. Using the scores from this dataset as labels, we trained a Chinese BERT model, fineweb_edu_classifier_chinese, which can assign a score of 0-5 to each input text. We plan to further optimize this scoring model, and in the future, the OpenCSG algorithm team will open-source the fineweb_edu_classifier_chinese_data and the fineweb_edu_classifier_chinese scoring model to further promote community development and collaboration. This dataset contains meticulously annotated and scored educational text data, providing high-quality training data for researchers and developers.

Abaltion experiments

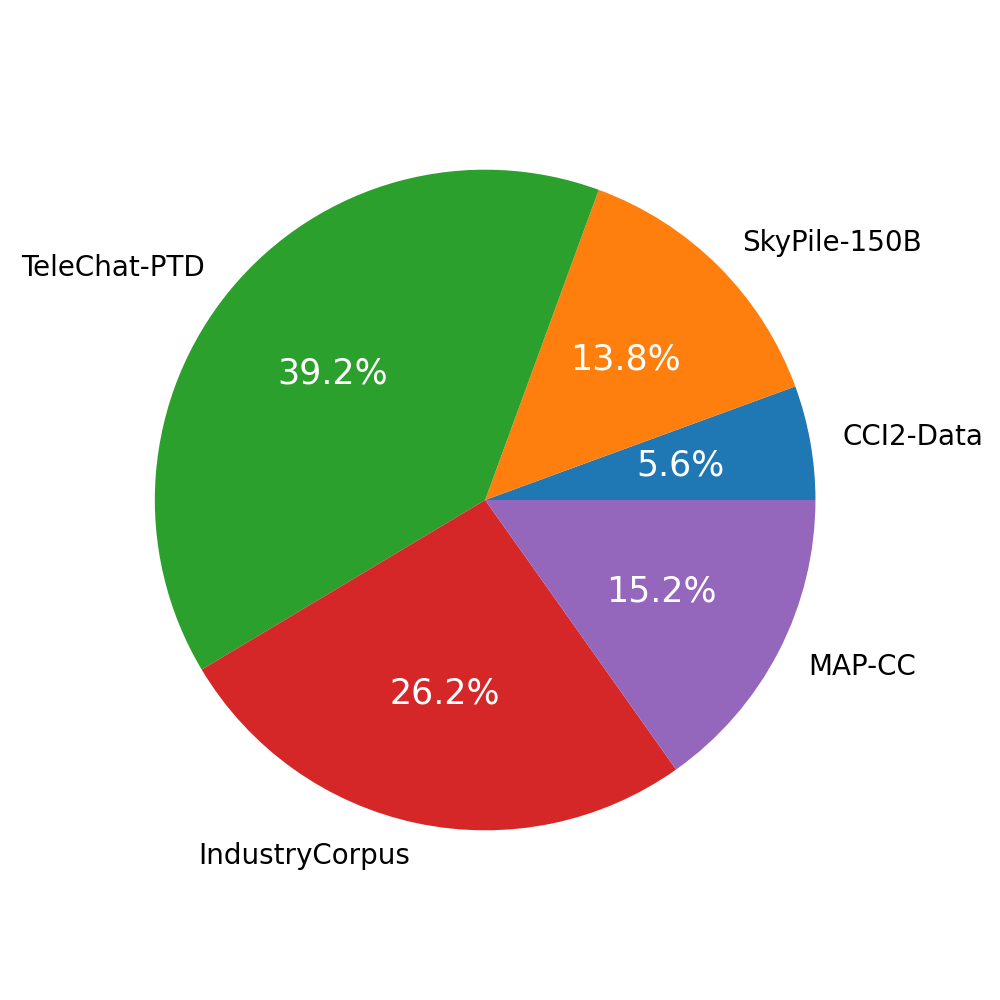

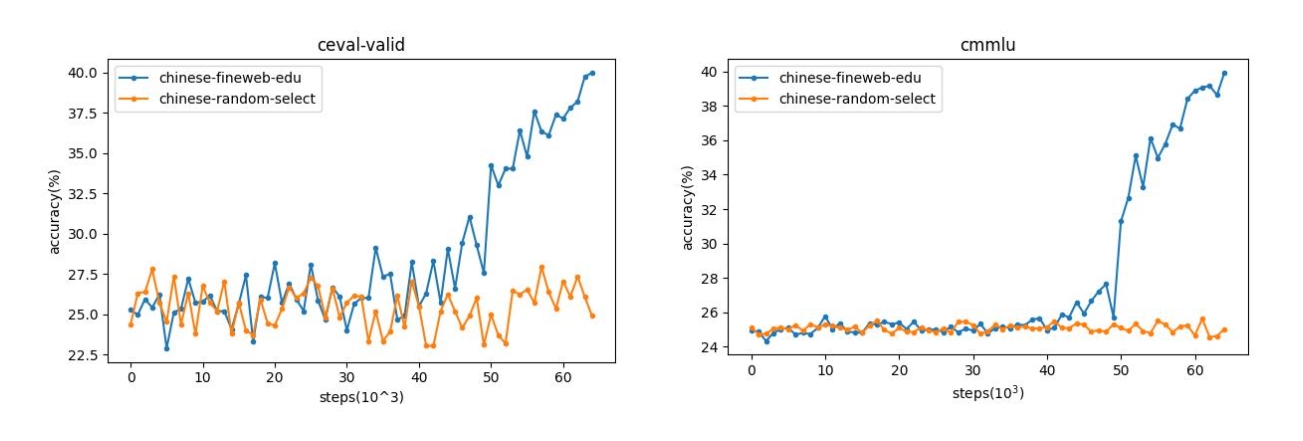

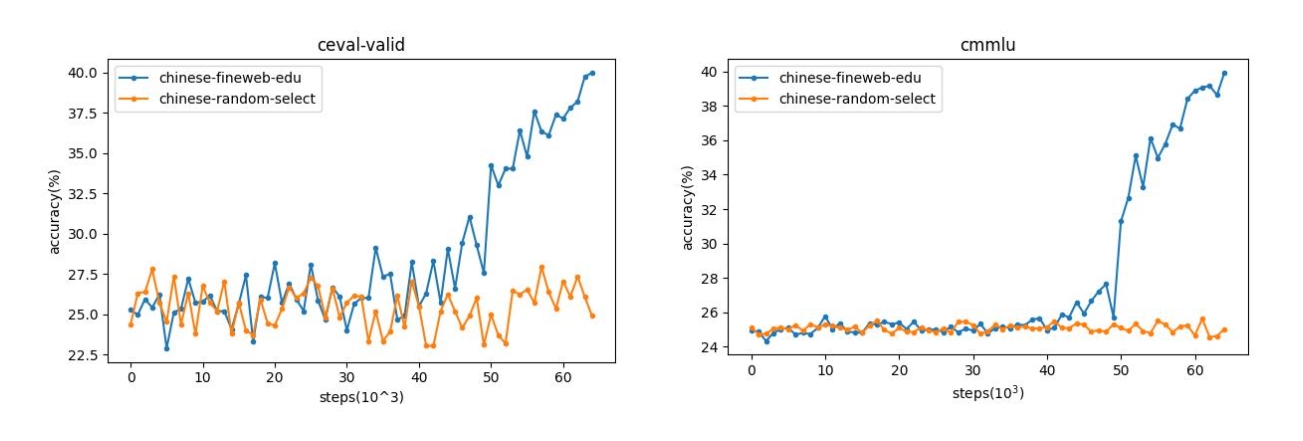

After meticulously designed ablation studies, we aimed to contrast the effects between the Chinese-fineweb-edu dataset and traditional Chinese pre-training corpora. For this purpose, we randomly selected samples from five datasets—CCI2-Data, SkyPile-150B, TeleChat-PTD, IndustryCorpus, and MAP-CC—proportional to the Chinese-fineweb-edu dataset, constructing a comparison dataset named chinese-random-select. In our experiments, we utilized a model with 2.1 billion parameters, training it for 65k steps on both datasets respectively. Throughout the training, we periodically saved checkpoints of the model and conducted validations on Chinese evaluation benchmarks CEval and CMMLU. The graph below displays the performance trends of these two datasets in evaluation tasks. The results distinctly show that the dataset trained on Chinese-fineweb-edu significantly outperforms the chinese-random-select dataset in both evaluation tasks, especially demonstrating considerable advantages in the later stages of training. This underscores the effectiveness and adaptability of Chinese-fineweb-edu in Chinese language tasks. Furthermore, these experimental outcomes also highlight the critical impact of dataset selection and construction on the ultimate performance of models.

The experimental results reveal that in the later stages of training, as it enters the second epoch and the learning rate rapidly decreases, the model trained with the chinese-fineweb-edu data shows a significant increase in accuracy, whereas the model trained with randomly selected data remains at a lower level. This proves that the high-quality data of chinese-fineweb-edu significantly aids in training effectiveness. With the same training duration, it can enhance model capabilities faster and save training resources. This outcome also shares a striking similarity with the data ablation experiments conducted by HuggingFace on fineweb edu.

We warmly invite developers and researchers interested in this field to follow and engage with the community, working together to advance the technology. Stay tuned for the open-source release of the dataset!

License Agreement

Usage of the Chinese Fineweb Edu dataset requires adherence to the OpenCSG Community License. The Chinese Fineweb Edu dataset supports commercial use. If you plan to use the OpenCSG model or its derivatives for commercial purposes, you must comply with the terms and conditions outlined in the OpenCSG Community License as well as the Apache 2.0 License. For commercial use, please send an email to [email protected] and obtain permission.

csg-wukong-ablation-chinese-random

[OpenCSG 社区] [github] [微信] [推特]

**Chinese Fineweb Edu** 数据集是一个精心构建的高质量中文预训练语料数据集,专为教育领域的自然语言处理任务设计。该数据集通过严格的筛选和去重流程,利用少量数据训练打分模型进行评估,从海量的原始数据中提取出高价值的教育相关内容,确保数据的质量和多样性。最终,数据集包含约90M条高质量的中文文本数据,总大小约为300GB。筛选方法

在数据筛选过程中,Chinese Fineweb Edu 数据集采用了与 Fineweb-Edu 类似的筛选策略,重点关注数据的教育价值和内容质量。具体筛选步骤如下:

教育价值评估:首先使用Opencsg的csg-wukong-enterprise企业版大模型对样本的教育价值进行评估,模型会根据样本内容的相关性和质量给出0-5的评分。在初步筛选阶段,我们选取了约100k条评分较高的数据。

打分模型训练:利用这100k条样本数据训练了一个BERT模型,用于对更大规模的预训练数据集进行文本打分。这一步确保了模型能够有效地识别出具有高教育价值的内容。

数据筛选:接下来,使用训练好的BERT模型对原始数据进行全面打分,仅保留得分大于4的数据。这一筛选过程极大地提高了数据集的质量和相关性,确保了其在教育领域的应用价值。

MinHash去重:为避免重复内容对模型训练的负面影响,数据集采用MinHash算法对所有数据进行了去重处理。这种方法确保了数据的独特性,同时保留了多样化的教育内容。

原始数据来源

Chinese Fineweb Edu 数据集的原始数据来源广泛,涵盖了多个国内主流的中文预训练数据集。这些数据集虽然在规模和覆盖领域上各有不同,但通过精细筛选和处理,最终为Chinese Fineweb Edu 数据集提供了坚实的基础。主要数据来源包括:

- CCI2-Data:经过严格的清洗、去重和质量过滤处理,一个高质量且可靠的中文安全数据集。

- SkyPile-150B:一个来自中国互联网上的1500亿token大规模数据集,经过复杂的过滤和去重处理

- IndustryCorpus:一个涵盖多个行业的中文预训练数据集,包含1TB的中文数据,特别适合行业特定的模型训练

- Tele-AI:一个从电信星辰大模型TeleChat预训练语料中提取出的高质量大规模中文数据集,包含约2.7亿条经过严格过滤和去重处理的纯中文文本。

- MAP-CC:一个规模庞大的中文预训练语料库,结合了多种来源的高质量数据,特别针对中文语言模型的训练进行了优化

这些多样化的数据来源不仅为Chinese Fineweb Edu数据集提供了丰富的内容基础,还通过不同领域和来源的数据融合,提升了数据集的广泛适用性和全面性。这种数据整合方式确保了模型在面对多样化的教育场景时,能够保持卓越的表现和高质量的输出。

打分模型

我们使用OpenCSG的csg-wukong-enterprise企业版大模型作为打分模型,通过设计prompt,让其对每一条预训练样本进行打分,分数分为0-5分共6个等级:

0分:如果网页没有提供任何教育价值,完全由无关信息(如广告、宣传材料)组成。

1分:如果网页提供了一些与教育主题相关的基本信息,即使包含一些无关或非学术内容(如广告和宣传材料)。

2分:如果网页涉及某些与教育相关的元素,但与教育标准不太吻合。它可能将教育内容与非教育材料混杂,对潜在有用的主题进行浅显概述,或以不连贯的写作风格呈现信息。

3分:如果网页适合教育使用,并介绍了与学校课程相关的关键概念。内容连贯但可能不全面,或包含一些无关信息。它可能类似于教科书的介绍部分或基础教程,适合学习但有明显局限,如涉及对中学生来说过于复杂的概念。

4分:如果网页对不高于中学水平的教育目的高度相关和有益,表现出清晰一致的写作风格。它可能类似于教科书的一个章节或教程,提供大量教育内容,包括练习和解答,极少包含无关信息,且概念对中学生来说不会过于深奥。内容连贯、重点突出,对结构化学习有价值。

5分:如果摘录在教育价值上表现出色,完全适合小学或中学教学。它遵循详细的推理过程,写作风格易于理解,对主题提供深刻而全面的见解,不包含任何非教育性或复杂内容。

我们记录了100k条数据及其得分,形成fineweb_edu_classifier_chinese_data。将数据集中的得分作为文本打分的标签,我们训练了一个中文Bert模型 fineweb_edu_classifier_chinese,此模型能够为每条输入文本给出0-5分的得分。我们会进一步优化这个打分模型,未来,OpenCSG算法团队将开源fineweb_edu_classifier_chinese_data数据集以及fineweb_edu_classifier_chinese打分模型,以进一步推动社区的发展和交流。该数据集包含了经过精细标注打分的教育领域文本数据,能够为研究人员和开发者提供高质量的训练数据。

消融实验

经过精心设计的消融实验,我们旨在对比 Chinese-fineweb-edu 数据集与传统中文预训练语料的效果差异。为此,我们从 CCI2-Data、SkyPile-150B、TeleChat-PTD、IndustryCorpus 和 MAP-CC 这五个数据集中,随机抽取了与 Chinese-fineweb-edu 数据比例相同的样本,构建了一个对比数据集chinese-random-select。 实验中,我们使用了一个 2.1B 参数规模的模型,分别使用这两种数据集,训练 65k 步。在训练过程中,我们定期保存模型的 checkpoint,并在中文评测基准 CEval 和 CMMLU 数据集上进行了验证。下图展示了这两个数据集在评测任务中的表现变化趋势。 从结果可以清晰看出,使用 Chinese-fineweb-edu 训练的数据集在两个评测任务中均显著优于 chinese-random-select 数据集,特别是在训练到后期时表现出极大的优势,证明了 Chinese-fineweb-edu 在中文语言任务中的有效性和适配性。这一实验结果也进一步表明,数据集的选择和构建对模型的最终性能有着关键性的影响。

通过实验结果可以发现,在训练的靠后阶段,由于进入了第2个epoch,且学习率进入快速下降阶段此时,使用chinese-fineweb-edu训练的模型,准确率有了明显的上升,而使用随机抽取的数据训练,则一直处于较低水平 这证明了chinese-fineweb-edu高质量数据对于模型训练效果有显著帮助,在同样训练时间下,能够更快的提升模型能力,节省训练资源,这个结果也和HuggingFace fineweb edu 的数据消融实验有异曲同工之妙。

我们诚邀对这一领域感兴趣的开发者和研究者关注和联系社区,共同推动技术的进步。敬请期待数据集的开源发布!

许可协议

使用 Chinese Fineweb Edu 数据集需要遵循 OpenCSG 社区许可证。Chinese Fineweb Edu 数据集支持商业用途。如果您计划将 OpenCSG 模型或其衍生产品用于商业目的,您必须遵守 OpenCSG 社区许可证以及 Apache 2.0 许可证中的条款和条件。如用于商业用途,需发送邮件至 [email protected],并获得许可。

- Downloads last month

- 16