---
license: apache-2.0
tags:
- endpoints-template
---

FORK of google/flan-ul2

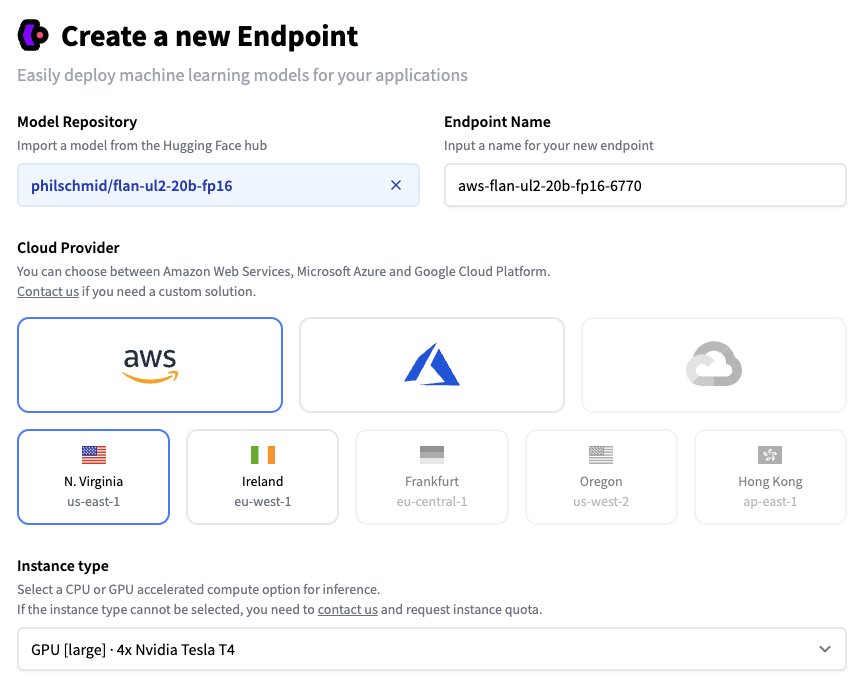

This is a fork of google/flan-ul2 (20B) that implements a custom `handler.py` for deploying the model to Inference Endpoints on 4x NVIDIA T4 GPUs.
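This card does not reproduce the fork's actual `handler.py`. As a rough idea of what a custom Inference Endpoints handler looks like, the sketch below follows the `EndpointHandler` convention: `__init__(path)` loads the model once, and `__call__(data)` receives the request JSON as a dict. The 8-bit loading through `bitsandbytes` (so the 20B weights fit across four 16 GB T4s), the hypothetical `parse_request` helper, and the response shape are all assumptions for illustration, not this repository's implementation.

```python
from typing import Any, Dict, List, Tuple


class EndpointHandler:
    """Hypothetical sketch of a custom handler; not the fork's real handler.py."""

    def __init__(self, path: str = ""):
        # Imported lazily so parse_request can be used without transformers installed.
        from transformers import AutoTokenizer, BitsAndBytesConfig, T5ForConditionalGeneration

        self.tokenizer = AutoTokenizer.from_pretrained(path)
        # Assumption: 8-bit weights (bitsandbytes) sharded over all visible GPUs
        # via device_map="auto" (requires accelerate).
        self.model = T5ForConditionalGeneration.from_pretrained(
            path,
            device_map="auto",
            quantization_config=BitsAndBytesConfig(load_in_8bit=True),
        )

    @staticmethod
    def parse_request(data: Dict[str, Any]) -> Tuple[Any, Dict[str, Any]]:
        # The request body arrives as a dict; "inputs" holds the prompt and
        # "parameters" (optional) holds generation kwargs such as max_new_tokens.
        inputs = data.pop("inputs", data)
        parameters = data.pop("parameters", None) or {}
        return inputs, parameters

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        inputs, parameters = self.parse_request(data)
        # A single prompt string is assumed; batching would need padding.
        input_ids = self.tokenizer(inputs, return_tensors="pt").input_ids.to(self.model.device)
        outputs = self.model.generate(input_ids, **parameters)
        return [{"generated_text": self.tokenizer.decode(outputs[0], skip_special_tokens=True)}]
```

Keeping request parsing in a separate static method means it can be checked locally without downloading the 20B checkpoint.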

You can deploy flan-ul2 with one click.

Note: Creating the endpoint can take 2 hours because of the very long build process, so please be patient. We are working on improving this!
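Once the endpoint is running, it is called over HTTPS with a bearer token. The sketch below only builds the request with the standard library. It assumes the common `{"inputs": ..., "parameters": {...}}` JSON body, which depends on what the deployed handler actually accepts. The endpoint URL, the token, and the `build_request` helper are hypothetical placeholders.

```python
import json
import urllib.request


def build_request(endpoint_url: str, token: str, prompt: str, max_new_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a POST request to an Inference Endpoint.

    Assumes the handler reads "inputs" and an optional "parameters" dict.
    """
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

To actually send it, something like `with urllib.request.urlopen(req) as resp: print(json.load(resp))` would work against a live endpoint; the response format is whatever the handler returns.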

TL;DR

Flan-UL2 is an encoder-decoder model based on the T5 architecture. It uses the same configuration as the UL2 model released earlier last year. It was fine-tuned using the "Flan" prompt tuning and dataset collection.

According to the original blog post, the notable improvements are:

- The original UL2 model was only trained with a receptive field of 512, which made it non-ideal for N-shot prompting where N is large.

- The Flan-UL2 checkpoint uses a receptive field of 2048 which makes it more usable for few-shot in-context learning.

- The original UL2 model also had mode switch tokens that were rather mandatory to get good performance. However, they were a little cumbersome, as they often required changes during inference or fine-tuning. In this update, we continue training UL2 20B for an additional 100k steps (with a small batch) to forget “mode tokens” before applying Flan instruction tuning. This Flan-UL2 checkpoint no longer requires mode tokens.
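The practical effect of dropping mode tokens is that input preparation becomes a pass-through. The sketch below contrasts the two checkpoints. The `[NLU]`, `[NLG]`, and `[S2S]` strings are the prefixes associated with UL2's R-, X-, and S-denoisers, but treat the exact strings as an assumption and verify them against the UL2 model card. `prepare_input` is a hypothetical helper.

```python
from typing import Optional

# Assumed mode-token strings for the original UL2 denoisers (verify before use).
UL2_MODE_TOKENS = {"R": "[NLU]", "X": "[NLG]", "S": "[S2S]"}


def prepare_input(prompt: str, checkpoint: str, mode: Optional[str] = None) -> str:
    """Return the model input string for a given checkpoint."""
    if checkpoint == "google/ul2":
        # The original UL2 expects a mode token prefix for good performance.
        if mode not in UL2_MODE_TOKENS:
            raise ValueError("google/ul2 expects a mode: 'R', 'X' or 'S'")
        return f"{UL2_MODE_TOKENS[mode]} {prompt}"
    # Flan-UL2 was trained to forget mode tokens, so the prompt is used as-is.
    return prompt
```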

Important: For more details, please see sections 5.2.1 and 5.2.2 of the paper.
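To make the receptive-field point concrete, the sketch below greedily packs few-shot demonstrations into a token budget and reports how many fit (N). It approximates tokens by whitespace-separated words, which is only a stand-in for the model's real SentencePiece tokenizer, so actual counts will differ. The `build_few_shot_prompt` and `approx_tokens` helpers and the Q/A prompt format are hypothetical.

```python
from typing import List, Tuple


def approx_tokens(text: str) -> int:
    # Crude approximation: one token per whitespace-separated word.
    return len(text.split())


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str, budget: int) -> Tuple[str, int]:
    """Add (question, answer) demonstrations until the budget is reached.

    Returns the assembled prompt and the number of demonstrations that fit.
    """
    query_block = f"Q: {query}\nA:"
    used = approx_tokens(query_block)
    blocks: List[str] = []
    for question, answer in examples:
        block = f"Q: {question}\nA: {answer}\n\n"
        cost = approx_tokens(block)
        if used + cost > budget:
            break  # the next demonstration would overflow the receptive field
        blocks.append(block)
        used += cost
    return "".join(blocks) + query_block, len(blocks)
```

With 8-word demonstrations and a 7-word query, a 512-token budget fits 63 demonstrations while a 2048-token budget fits 255, which is the kind of headroom that makes larger N practical.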

Contribution

This model was originally contributed by Yi Tay, and added to the Hugging Face ecosystem by Younes Belkada & Arthur Zucker.

Citation

If you want to cite this work, please consider citing the blogpost announcing the release of Flan-UL2.