---
license: apache-2.0
datasets:
- multi-train/coco_captions_1107
- visual_genome
language:
- en
pipeline_tag: text-to-image
tags:
- scene_graph
- transformers
- laplacian
- autoregressive
- vqvae
---

trf-sg2im

Model card for the paper "Transformer-Based Image Generation from Scene Graphs". The original implementation is available on GitHub.

Model

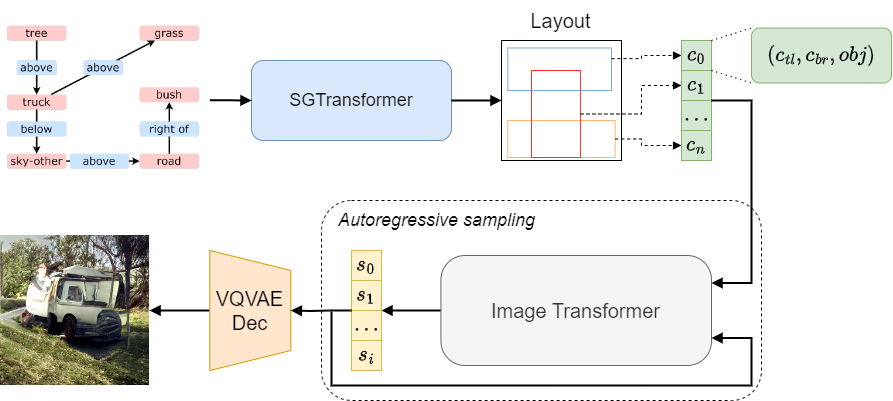

This model follows a two-stage scene-graph-to-image approach. In the first stage, a transformer-based architecture with Laplacian Positional Encoding takes the scene graph as input and predicts a layout. In the second stage, this estimated layout conditions an autoregressive, GPT-like transformer that composes the image in a discrete latent space; a VQVAE then decodes the resulting latent codes into the final image.
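The layout stage relies on Laplacian Positional Encoding to give each scene-graph node a position-like signal. The snippet below is a minimal, hypothetical sketch of one common way such an encoding is computed: build the graph's symmetric normalized Laplacian and use the eigenvectors with the k smallest non-trivial eigenvalues as per-node features. It is not the authors' code; treating relations as undirected edges, ignoring predicate labels, zero-padding small graphs, and the names `laplacian_pe` and `jacobi_eigh` are all assumptions made for illustration.

```python
import math


def jacobi_eigh(a, tol=1e-12, max_sweeps=100):
    """Eigen-decompose a small symmetric matrix with cyclic Jacobi rotations.

    Returns (eigenvalues, vectors); column j of `vectors` is the eigenvector
    for eigenvalues[j]. Stdlib-only stand-in for numpy.linalg.eigh.
    """
    n = len(a)
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = max((abs(a[i][j]) for i in range(n) for j in range(n) if i != j),
                  default=0.0)
        if off < tol:  # matrix is (numerically) diagonal
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # V <- V J accumulates the eigenvectors
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def laplacian_pe(num_nodes, edges, k):
    """k-dimensional Laplacian positional encoding for each scene-graph node.

    `edges` holds (subject_index, object_index) pairs; relation direction and
    predicate labels are ignored (an assumption of this sketch).
    """
    adj = [[0.0] * num_nodes for _ in range(num_nodes)]
    for s, o in edges:
        if s != o:
            adj[s][o] = adj[o][s] = 1.0
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) if sum(row) > 0 else 0.0 for row in adj]
    # Symmetric normalized Laplacian: L = I - D^{-1/2} A D^{-1/2}
    lap = [[(1.0 if i == j else 0.0) - inv_sqrt_deg[i] * adj[i][j] * inv_sqrt_deg[j]
            for j in range(num_nodes)] for i in range(num_nodes)]
    eigvals, vecs = jacobi_eigh(lap)
    order = sorted(range(num_nodes), key=lambda j: eigvals[j])
    chosen = order[1:k + 1]  # drop the trivial smallest-eigenvalue eigenvector
    # Row i is node i's encoding; zero-pad when the graph has fewer than k + 1 nodes.
    return [[vecs[i][j] for j in chosen] + [0.0] * (k - len(chosen))
            for i in range(num_nodes)]


if __name__ == "__main__":
    # Hypothetical scene graph with three objects linked in a chain: 0-1, 1-2.
    for row in laplacian_pe(3, [(0, 1), (1, 2)], k=2):
        print([round(x, 3) for x in row])
```

Eigenvectors are only defined up to sign, so Laplacian encodings are often randomly sign-flipped during training to keep the model from depending on an arbitrary choice. In practice `numpy.linalg.eigh` would replace the hand-written Jacobi routine, which is included here only to keep the sketch dependency-free.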

Usage

For usage instructions, please refer to the original GitHub repo.

Results

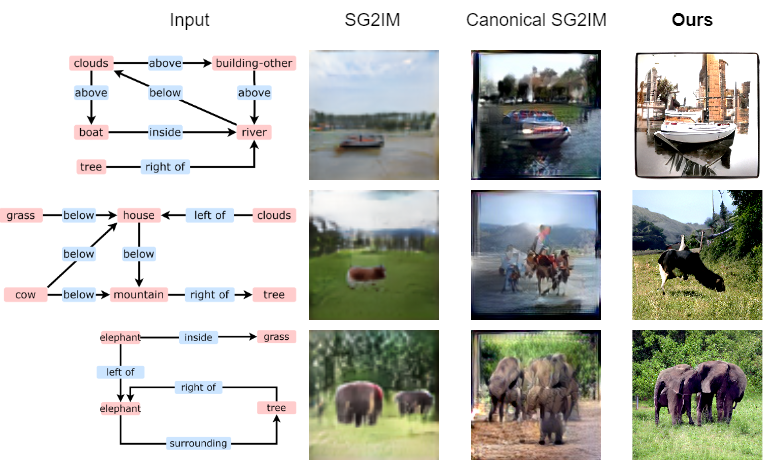

Comparison with other state-of-the-art approaches