title: README

emoji: 🏃

colorFrom: pink

colorTo: red

sdk: static

pinned: false

Knowledge Fusion of Large Language Models

| 📑 FuseLLM Paper @ICLR2024 | 📑 FuseChat Tech Report | 🤗 HuggingFace Repo | 🐱 GitHub Repo |

FuseAI

FuseAI is an open-source research community focused on model fusion topics.

Community members are currently applying model fusion to Foundation and Chat LLMs, with future plans to fuse Agent/MoE LLMs.

You are welcome to join us!

News

FuseChat [SOTA 7B LLM on MT-Bench]

Aug 16, 2024: 🔥🔥🔥🔥 We update the FuseChat tech report and release FuseChat-7B-v2.0, which is the fusion of six prominent chat LLMs with diverse architectures and scales, namely OpenChat-3.5-7B, Starling-LM-7B-alpha, NH2-Solar-10.7B, InternLM2-Chat-20B, Mixtral-8x7B-Instruct, and Qwen1.5-Chat-72B. FuseChat-7B-v2.0 achieves an average performance of 7.38 on MT-Bench (GPT-4-0125-Preview as judge LLM), which is comparable to Mixtral-8x7B-Instruct and approaches GPT-3.5-Turbo-1106.

Mar 13, 2024: 🔥🔥🔥 We release a HuggingFace Space for FuseChat-7B. Try it now!

Feb 26, 2024: 🔥🔥 We release FuseChat-7B-VaRM, which is the fusion of three prominent chat LLMs with diverse architectures and scales, namely NH2-Mixtral-8x7B, NH2-Solar-10.7B, and OpenChat-3.5-7B. FuseChat-7B-VaRM achieves an average performance of 8.22 on MT-Bench, outperforming various powerful chat LLMs like Starling-7B, Yi-34B-Chat, and Tulu-2-DPO-70B. It even surpasses GPT-3.5 (March) and Claude-2.1, and approaches Mixtral-8x7B-Instruct.

Feb 25, 2024: 🔥 We release FuseChat-Mixture, a comprehensive training dataset that covers different styles and capabilities, features both human-written and model-generated data, and spans general instruction-following and specific skills.

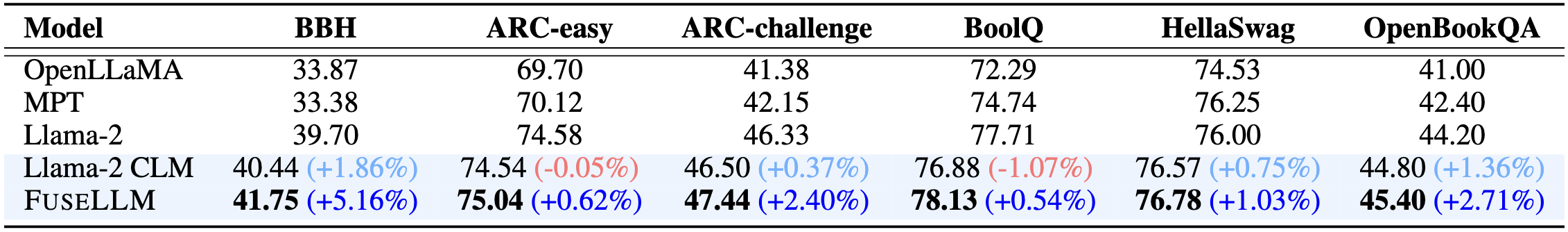

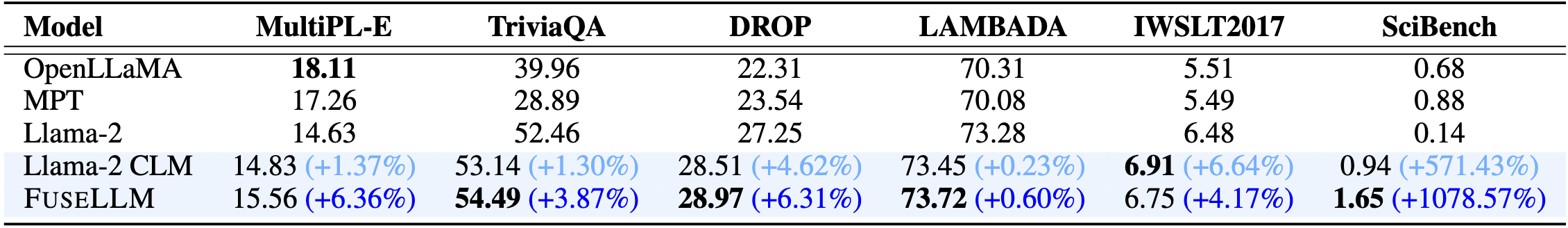

FuseLLM [Surpassing Llama-2-7B]

- Jan 22, 2024: 🔥 We release FuseLLM-7B, which is the fusion of three open-source foundation LLMs with distinct architectures, namely Llama-2-7B, OpenLLaMA-7B, and MPT-7B.

Citation

Please cite the following paper if you reference our model, code, data, or paper related to FuseLLM.

@inproceedings{wan2024knowledge,
  title={Knowledge Fusion of Large Language Models},
  author={Fanqi Wan and Xinting Huang and Deng Cai and Xiaojun Quan and Wei Bi and Shuming Shi},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024},
  url={https://openreview.net/pdf?id=jiDsk12qcz}
}

Please cite the following paper if you reference our model, code, data, or paper related to FuseChat.

@article{wan2024fusechat,
  title={FuseChat: Knowledge Fusion of Chat Models},
  author={Fanqi Wan and Longguang Zhong and Ziyi Yang and Ruijun Chen and Xiaojun Quan},
  journal={arXiv preprint arXiv:2408.07990},
  year={2024}
}