Explaining a new state-of-the-art monocular depth estimation model: Depth Anything ✨ It has just been integrated in transformers for super-easy use. We compared it against DPTs and benchmarked it as well! You can find the usage, benchmark, demos and more below 👇

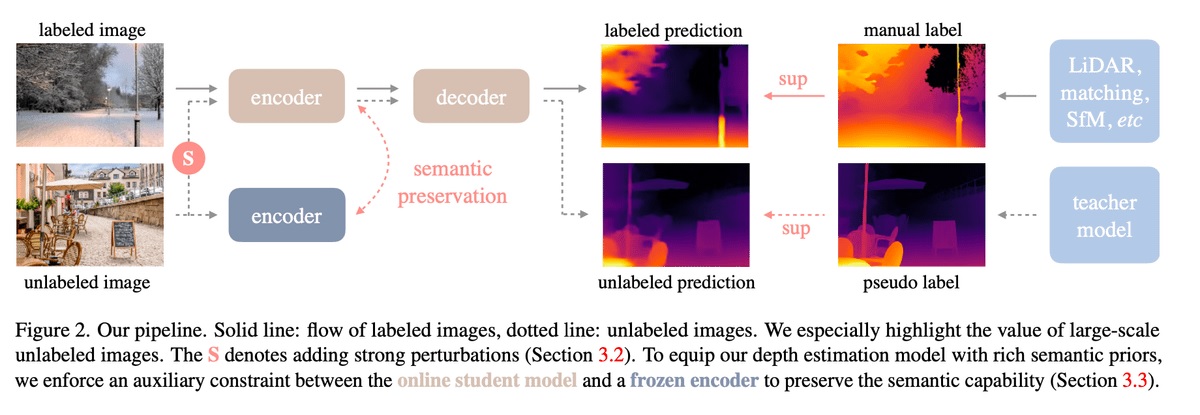

The paper starts by highlighting previous depth estimation methods and their limitations regarding data coverage. 👀 The model's success heavily depends on unlocking the use of unlabeled datasets, although the authors' initial attempt at self-training failed.

What the authors have done:

➰ Train a teacher model on a labeled dataset

➰ Guide the student using the teacher, and also use unlabeled datasets pseudo-labeled by the teacher. However, this was the cause of the failure: because both architectures were similar, the student's outputs were the same as the teacher's.

So the authors added a more difficult optimization target for the student, so that it learns additional knowledge from the unlabeled images: these images go through strong perturbations (color jittering, Gaussian blurring and spatial distortion), which pushes the student to learn more invariant representations from them.
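To make the perturbation idea concrete, here is a minimal NumPy sketch of two of the distortions mentioned above: a simple color jitter and a CutMix-style spatial mix (the paper describes its spatial distortion as CutMix). The function names, the jitter strength and the fixed half-size rectangle are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def color_jitter(image, rng, strength=0.4):
    """Randomly scale brightness and contrast of an HxWx3 float image in [0, 1].

    `strength` is an illustrative value, not one taken from the paper.
    """
    brightness = 1.0 + rng.uniform(-strength, strength)
    contrast = 1.0 + rng.uniform(-strength, strength)
    mean = image.mean(axis=(0, 1), keepdims=True)
    jittered = ((image - mean) * contrast + mean) * brightness
    return np.clip(jittered, 0.0, 1.0)


def cutmix(image_a, image_b, rng):
    """Paste a random rectangle of image_b into image_a.

    Returns the mixed image and the binary mask M (1 = pixel from image_a),
    so that mixed = image_a * M + image_b * (1 - M).
    """
    height, width = image_a.shape[:2]
    rect_h, rect_w = height // 2, width // 2  # assumed fixed rectangle size
    top = rng.integers(0, height - rect_h + 1)
    left = rng.integers(0, width - rect_w + 1)

    mask = np.ones((height, width, 1), dtype=image_a.dtype)
    mask[top:top + rect_h, left:left + rect_w] = 0
    mixed = image_a * mask + image_b * (1 - mask)
    return mixed, mask
```

In a training loop, the student would see the perturbed image while the pseudo-label still comes from the teacher's prediction on the clean image; with CutMix, the mask tells the loss which teacher prediction applies to which region.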

The architecture consists of a DINOv2 encoder to extract the features, followed by a DPT decoder. At first, they train the teacher model on labeled images, and then they jointly train the student model on the labeled images plus the dataset pseudo-labeled by the ViT-L teacher.

Thanks to this, Depth Anything performs very well! I have also benchmarked the inference duration of the model against different models here. I also ran torch.compile benchmarks across them and got nice speed-ups 🚀

Measured on a T4 GPU, taking the mean of 30 inferences for each model and batch size. Inference ran through the pipeline, so the timings include pre-processing and post-processing as well as the model inference itself.

| Model/Batch Size | 16 | 4 | 1 |
|---|---|---|---|
| intel/dpt-large | 2709.652 | 667.799 | 172.617 |
| facebook/dpt-dinov2-small-nyu | 2534.854 | 654.822 | 159.754 |
| facebook/dpt-dinov2-base-nyu | 4316.8733 | 1090.824 | 266.699 |
| Intel/dpt-beit-large-512 | 7961.386 | 2036.743 | 497.656 |
| depth-anything-small | 1692.368 | 415.915 | 143.379 |
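For anyone who wants to reproduce this kind of measurement, a timing helper along these lines would do the job. It is a sketch, not the script used for the table: the warm-up runs and the choice of milliseconds as the unit are assumptions, and `run_inference` stands for any zero-argument callable, for example a lambda that calls the pipeline on a batch of images.

```python
import time
from statistics import mean


def mean_latency_ms(run_inference, n_runs=30, n_warmup=3):
    """Return the mean wall-clock time of `run_inference` in milliseconds.

    `n_runs=30` mirrors the "mean of 30 inferences" described above;
    the warm-up runs are an assumption and are excluded from the mean.
    """
    for _ in range(n_warmup):
        run_inference()

    timings_ms = []
    for _ in range(n_runs):
        start = time.perf_counter()
        run_inference()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return mean(timings_ms)
```

When timing a raw GPU forward pass instead of a full pipeline call, it is worth synchronizing the device before reading the clock, since CUDA kernels run asynchronously.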

torch.compile benchmarks with reduce-overhead mode: to keep the comparison fair, we compiled the model and loaded it into the pipeline, so pre-processing and post-processing are included here as well.

| Model/Batch Size | 16 | 4 | 1 |
|---|---|---|---|
| intel/dpt-large | 2556.668 | 645.750 | 155.153 |
| facebook/dpt-dinov2-small-nyu | 2415.25 | 610.967 | 148.526 |
| facebook/dpt-dinov2-base-nyu | 4057.909 | 1035.672 | 245.692 |
| Intel/dpt-beit-large-512 | 7417.388 | 1795.882 | 426.546 |
| depth-anything-small | 1664.025 | 384.688 | 97.865 |
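One way to get a compiled model into a pipeline is sketched below. The exact setup behind the numbers above is not shown in this post, so treat this as an assumption: the helper name `build_compiled_pipeline` is made up for illustration, and the default checkpoint `LiheYoung/depth-anything-small-hf` is assumed to correspond to the `depth-anything-small` row.

```python
def build_compiled_pipeline(checkpoint="LiheYoung/depth-anything-small-hf",
                            device="cuda"):
    """Build a depth-estimation pipeline whose model is wrapped by torch.compile.

    Hypothetical helper: imports are kept inside the function so the module
    can be loaded without torch or transformers installed.
    """
    import torch
    from transformers import pipeline

    # Regular pipeline first, so the image processor is set up as usual.
    pipe = pipeline(task="depth-estimation", model=checkpoint, device=device)

    # Swap in the compiled model; "reduce-overhead" is the mode named above.
    pipe.model = torch.compile(pipe.model, mode="reduce-overhead")
    return pipe
```

The first few calls trigger compilation and are much slower than the rest, so they should be treated as warm-up and left out of any timing.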

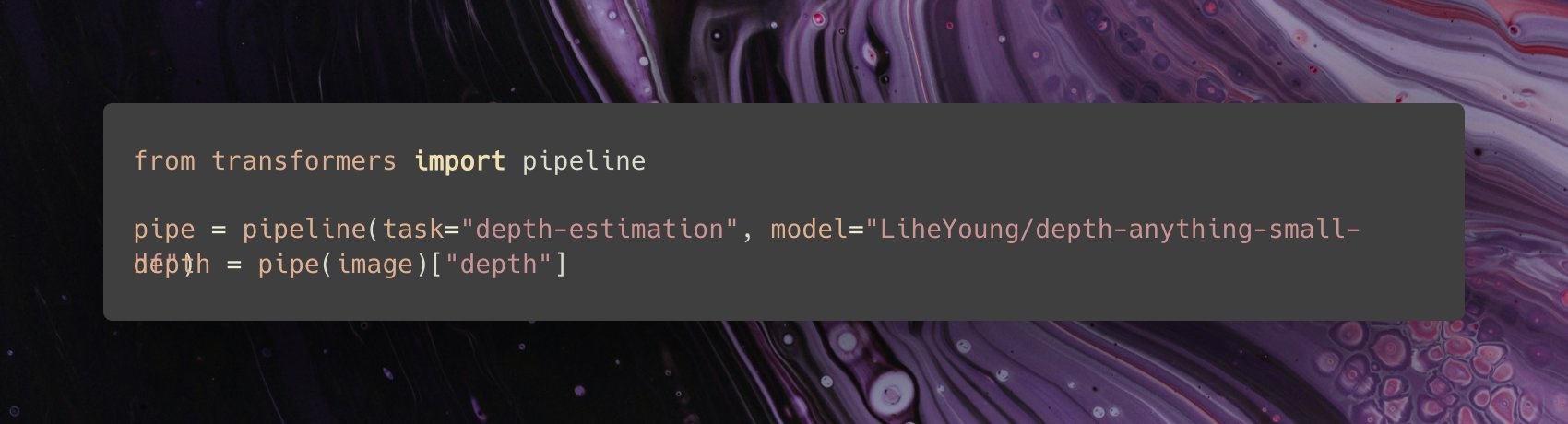

You can use Depth Anything easily thanks to 🤗 Transformers with three lines of code! ✨ We have also built an app for you to compare different depth estimation models 🐝 🌸 See all the available Depth Anything checkpoints here.
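The three lines look roughly like this, wrapped in a small function for convenience. The checkpoint name `LiheYoung/depth-anything-small-hf` and the helper name `estimate_depth` are assumptions for the sake of the example; any Depth Anything checkpoint from the Hub should work the same way.

```python
def estimate_depth(image, checkpoint="LiheYoung/depth-anything-small-hf"):
    """Run monocular depth estimation on a PIL image, a local path or a URL.

    Hypothetical helper around the transformers depth-estimation pipeline.
    """
    from transformers import pipeline

    pipe = pipeline(task="depth-estimation", model=checkpoint)
    result = pipe(image)
    # "depth" is a PIL image for visualization;
    # "predicted_depth" is the raw tensor predicted by the model.
    return result["depth"], result["predicted_depth"]
```

Calling `estimate_depth("my_photo.jpg")` (a placeholder file name) downloads the checkpoint on first use and returns the depth map both as an image and as a tensor.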

Resources:

Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data by Lihe Yang, Bingyi Kang, Zilong Huang, Xiaogang Xu, Jiashi Feng, Hengshuang Zhao (2024) GitHub Hugging Face documentation

Original tweet (January 25, 2024)