DocOwl 1.5 is a state-of-the-art document understanding model by Alibaba, released under the Apache 2.0 license 😍📝 Time to dive in and learn more 🧶

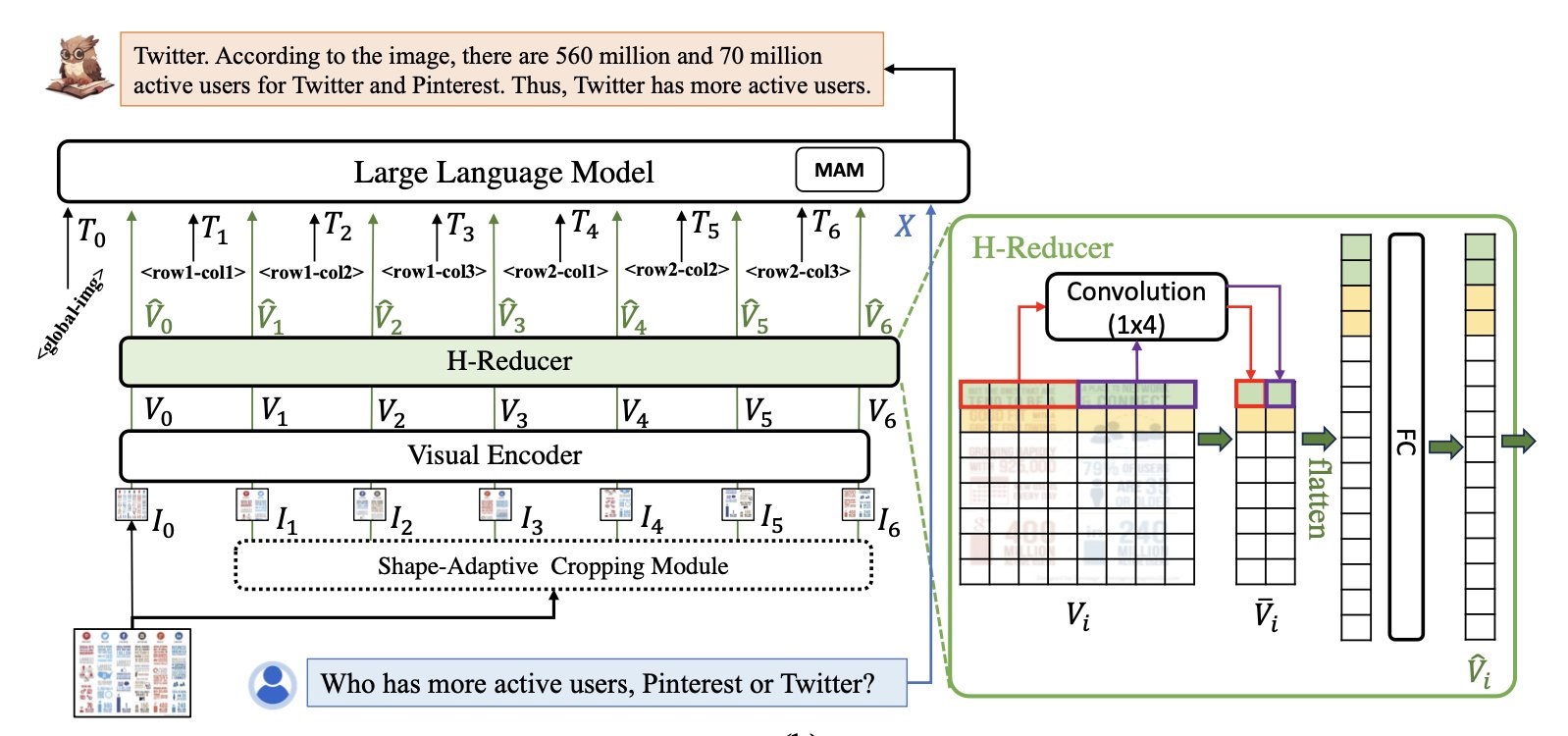

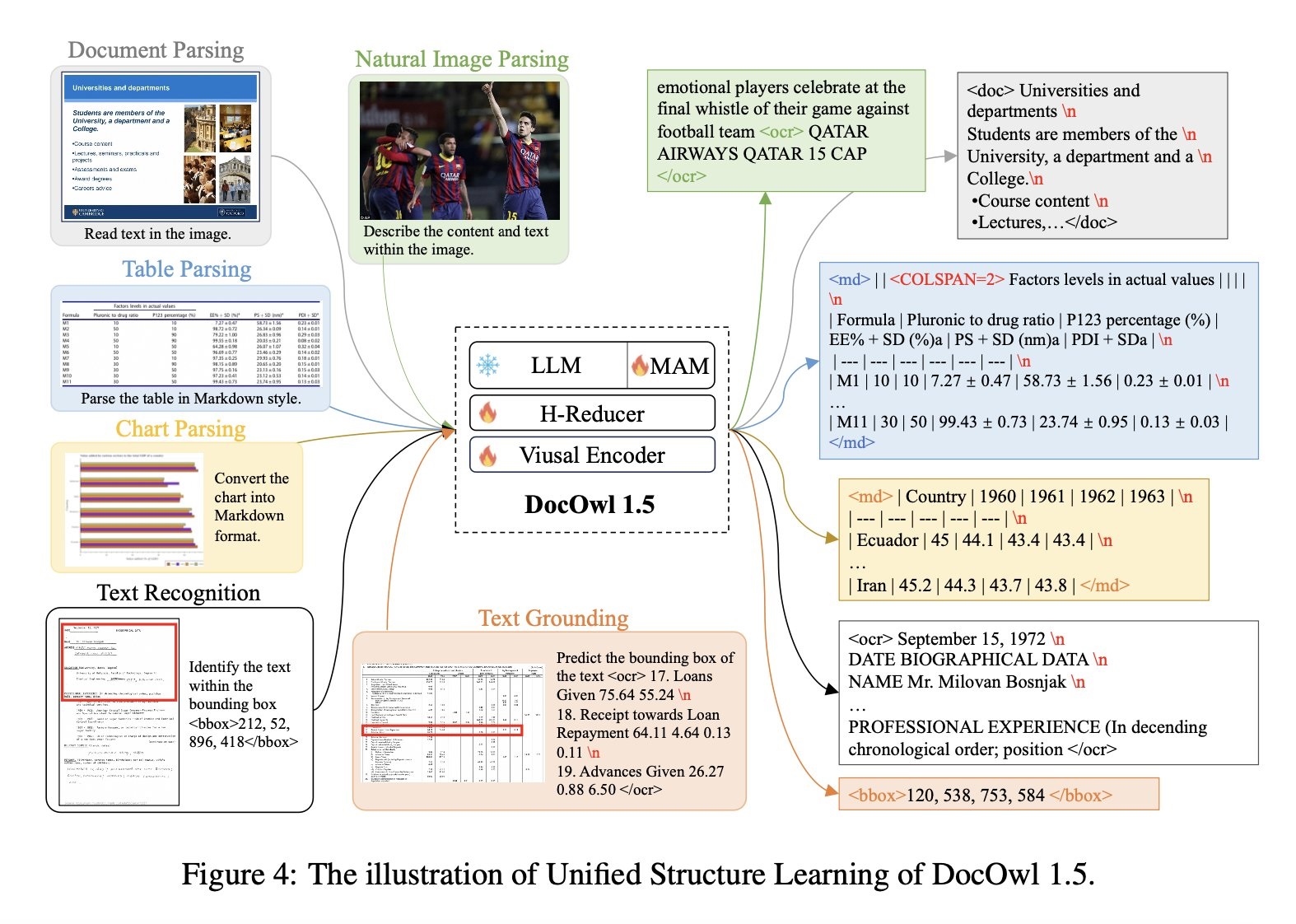

This model consists of a ViT-based visual encoder that takes in crops of the image along with the original image itself. The encoder outputs then go through a convolution-based module, after which they are merged with the text and fed to the LLM.

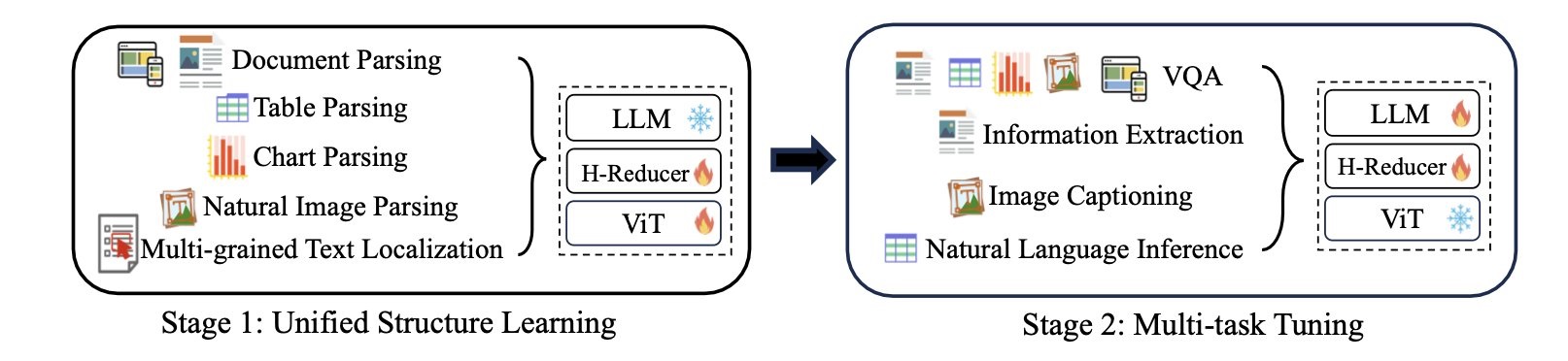
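To make the data flow above concrete, here is a minimal sketch of the two steps before the LLM: building the encoder inputs (grid crops plus the original image) and shrinking the visual sequence horizontally. The thread only says the module is convolution based, so the horizontal merge, the merge width of 4, and the uniform averaging (instead of learned weights) are assumptions for illustration. The function names `encoder_inputs` and `merge_horizontal` are hypothetical, and features are shown as single numbers rather than vectors.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def encoder_inputs(width: int, height: int, rows: int, cols: int) -> List[Box]:
    """Return the crop boxes of a rows x cols grid, followed by the full image."""
    boxes: List[Box] = []
    for r in range(rows):
        for c in range(cols):
            boxes.append((
                c * width // cols,
                r * height // rows,
                (c + 1) * width // cols,
                (r + 1) * height // rows,
            ))
    boxes.append((0, 0, width, height))  # the original image itself
    return boxes


def merge_horizontal(grid: List[List[float]], merge: int = 4) -> List[List[float]]:
    """Average every `merge` horizontally adjacent features in each row.

    Stand-in for a learned convolution: uniform weights, stride equal to the
    window width. Trailing features that do not fill a window are dropped.
    """
    reduced = []
    for row in grid:
        usable = len(row) - len(row) % merge
        reduced.append([
            sum(row[i:i + merge]) / merge for i in range(0, usable, merge)
        ])
    return reduced


if __name__ == "__main__":
    # A wide page split into two side-by-side crops, plus the global view.
    print(encoder_inputs(896, 448, rows=1, cols=2))
    # One row of 8 features shrinks to 2 after merging groups of 4.
    print(merge_horizontal([[1, 2, 3, 4, 5, 6, 7, 8]]))
```

Merging along the horizontal axis is a natural fit for documents, since text usually runs left to right and neighbouring patches on a line tend to belong together, but treat that as motivation for the sketch rather than a description of the released code.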

Initially, the authors train only the convolution-based part (called H-Reducer) and the vision encoder while keeping the LLM frozen. Then, for fine-tuning (on image captioning, VQA, etc.), they freeze the vision encoder and train the H-Reducer and the LLM.
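The two-stage schedule can be written down as a small lookup table. This is only a sketch of which parts get gradient updates in each stage, as described above; the stage names, module names, and helper functions are hypothetical and not taken from the authors' code.

```python
from typing import Dict, List

# True means the module's weights are updated in that stage.
TRAINING_STAGES: Dict[str, Dict[str, bool]] = {
    "initial": {"vision_encoder": True, "h_reducer": True, "llm": False},
    "fine_tuning": {"vision_encoder": False, "h_reducer": True, "llm": True},
}


def trainable_modules(stage: str) -> List[str]:
    """Names of the modules that are trained in the given stage."""
    return sorted(name for name, train in TRAINING_STAGES[stage].items() if train)


def frozen_modules(stage: str) -> List[str]:
    """Names of the modules that are kept frozen in the given stage."""
    return sorted(name for name, train in TRAINING_STAGES[stage].items() if not train)


if __name__ == "__main__":
    for stage in TRAINING_STAGES:
        print(stage, "train:", trainable_modules(stage), "frozen:", frozen_modules(stage))
```

In a PyTorch training script, a table like this would typically drive calls such as `module.requires_grad_(False)` on each frozen submodule before building the optimizer.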

They also use a simple linear projection on text and documents. You can see below how they model the text prompts and outputs 🤓

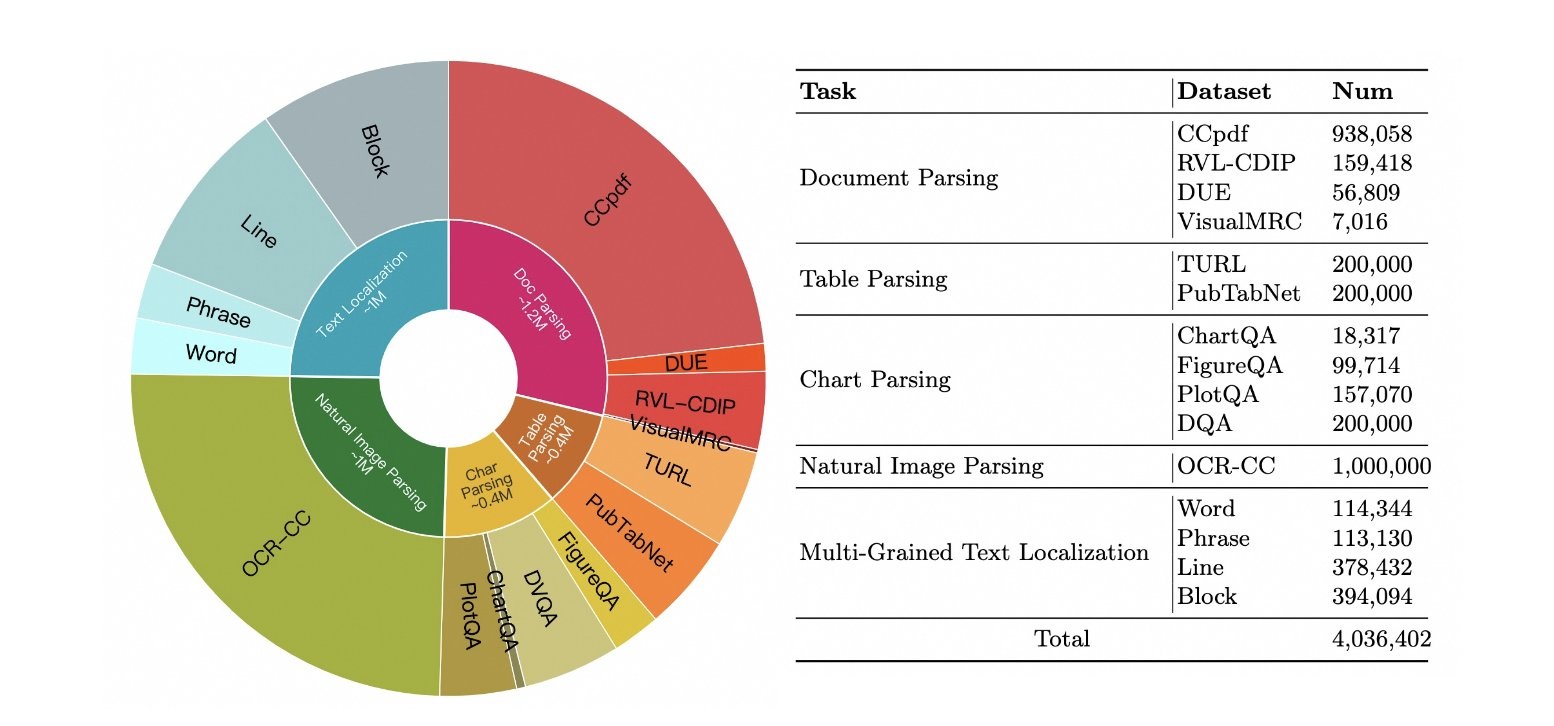

They train the model on various downstream tasks, including:

- document understanding (DUE benchmark and more)

- table parsing (TURL, PubTabNet)

- chart parsing (PlotQA and more)

- image parsing (OCR-CC)

- text localization (DocVQA and more)

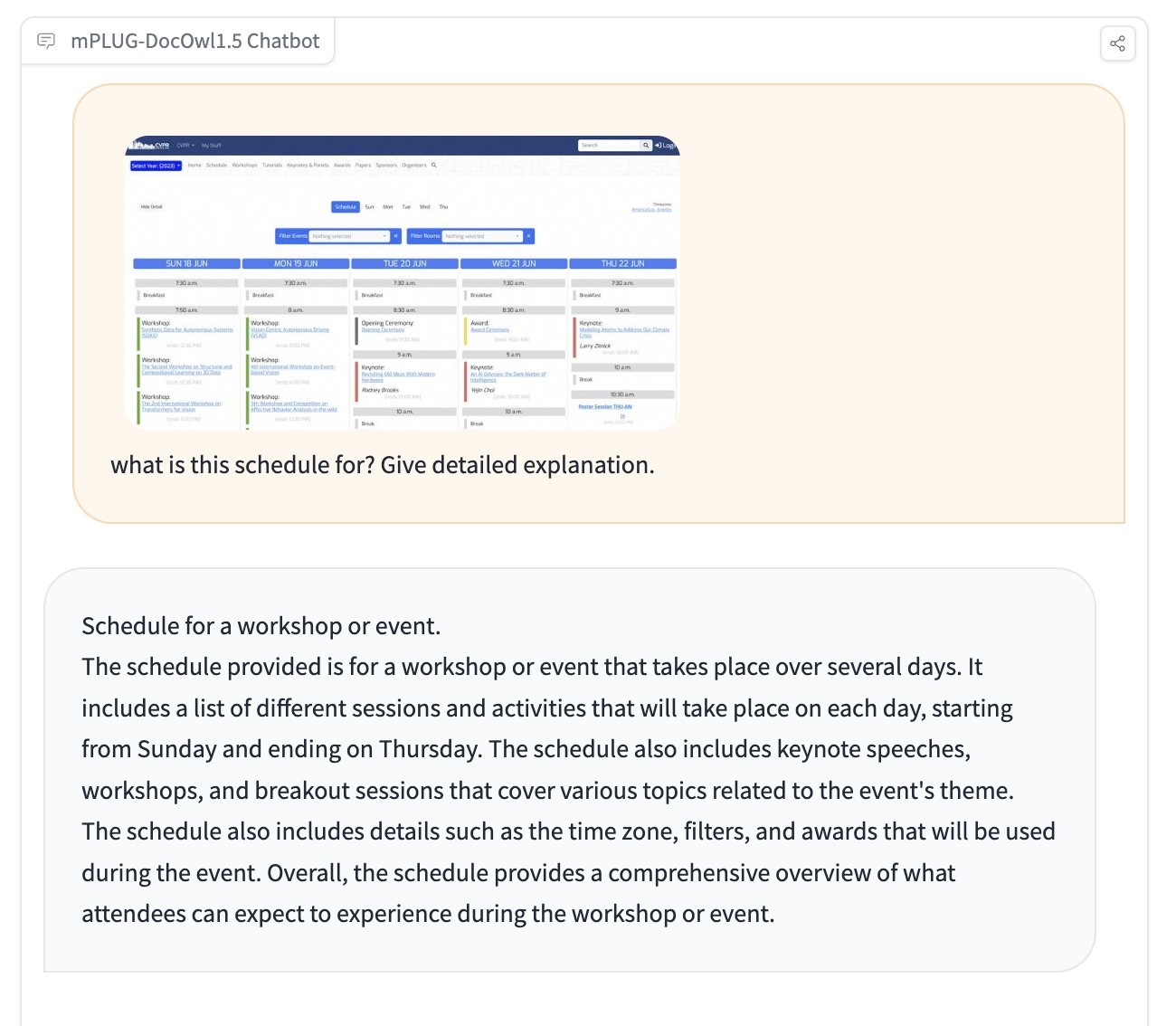

They contribute a new model called DocOwl 1.5-Chat by:

- creating a new document-chat dataset with questions from document VQA datasets

- feeding them to ChatGPT to get long answers

- fine-tuning the base model with it (which IMO works very well!)

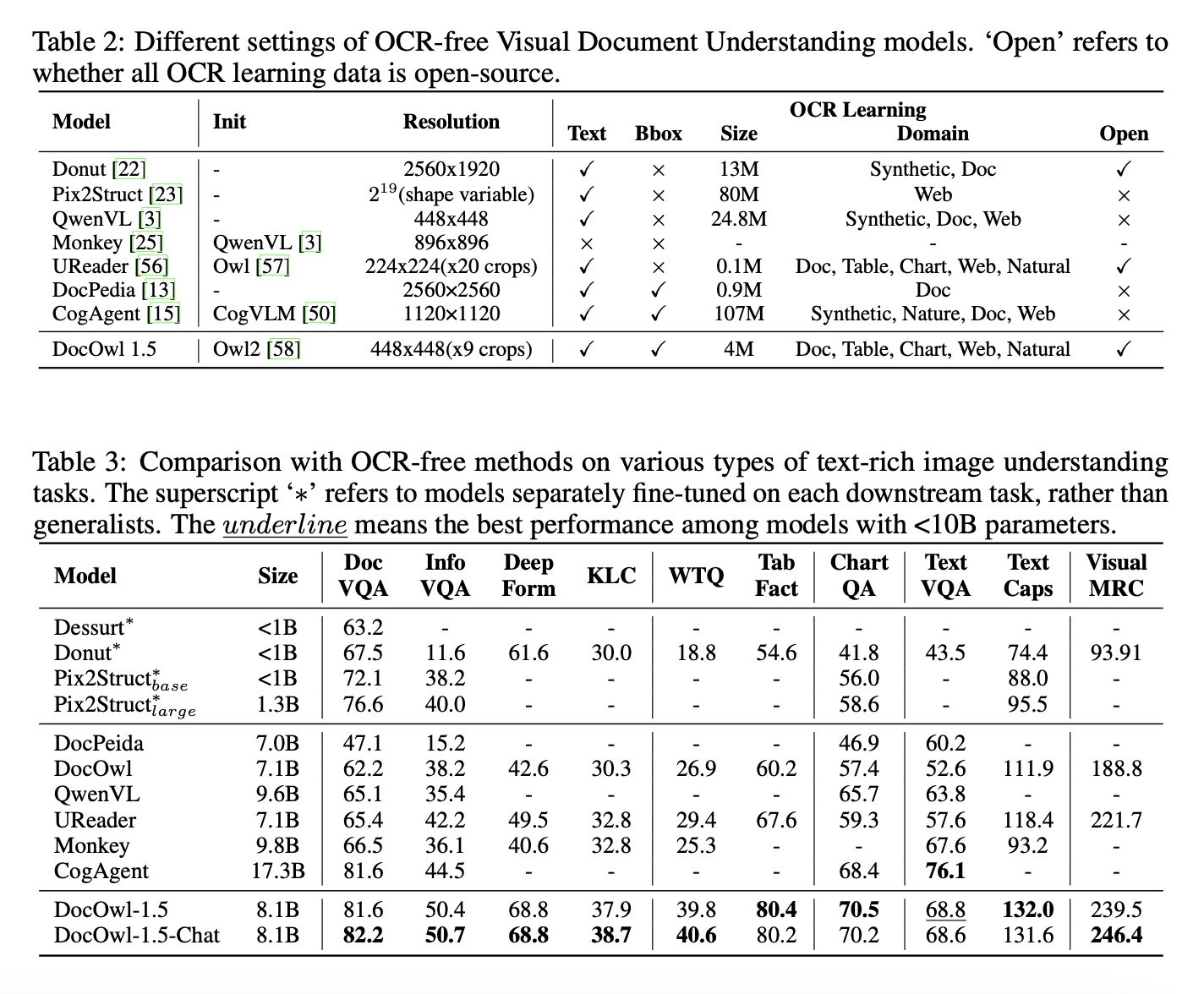
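The dataset recipe above boils down to a small loop: take a question from a document VQA dataset, ask a chat model for a long answer, and store the result as a training example. The sketch below assumes a hypothetical `ask_llm` callable standing in for the ChatGPT call, and the prompt wording and record fields (`image`, `question`, `answer`) are illustrative guesses, not the authors' actual template.

```python
from typing import Callable, Dict, List


def build_chat_examples(
    vqa_items: List[Dict[str, str]],
    ask_llm: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Turn document VQA questions into chat-style examples with long answers.

    `ask_llm` is a placeholder for whatever client sends a prompt to a chat
    model and returns its text reply.
    """
    examples = []
    for item in vqa_items:
        # Hypothetical prompt: ask for a detailed answer instead of a short one.
        prompt = (
            f"Question about a document: {item['question']}\n"
            "Answer in detail and explain the reasoning."
        )
        examples.append({
            "image": item["image"],
            "question": item["question"],
            "answer": ask_llm(prompt),
        })
    return examples


if __name__ == "__main__":
    # A fake model keeps the example runnable without network access.
    fake_llm = lambda prompt: "long answer for: " + prompt.splitlines()[0]
    items = [{"image": "doc_001.png", "question": "What is the invoice total?"}]
    print(build_chat_examples(items, fake_llm))
```

Passing the model call in as a function keeps the loop testable offline and makes it easy to swap in a different chat model.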

The resulting generalist model and the chat model are pretty much state-of-the-art 😍 Below you can see how they compare to fine-tuned models.

Very good paper, read it here.

All the models and the datasets (also some eval datasets on above tasks!) are in this organization.

The Space.

Thanks a lot for reading!

Resources:

mPLUG-DocOwl 1.5: Unified Structure Learning for OCR-free Document Understanding, by Anwen Hu, Haiyang Xu, Jiabo Ye, Ming Yan, Liang Zhang, Bo Zhang, Chen Li, Ji Zhang, Qin Jin, Fei Huang, Jingren Zhou (2024). GitHub

Original tweet (April 22, 2024)