---
title: MangaColorizer
emoji: 🖌️🎨
colorFrom: green
colorTo: gray
sdk: gradio
app_file: src/main.py
pinned: false
license: mit
---

# MangaColorizer

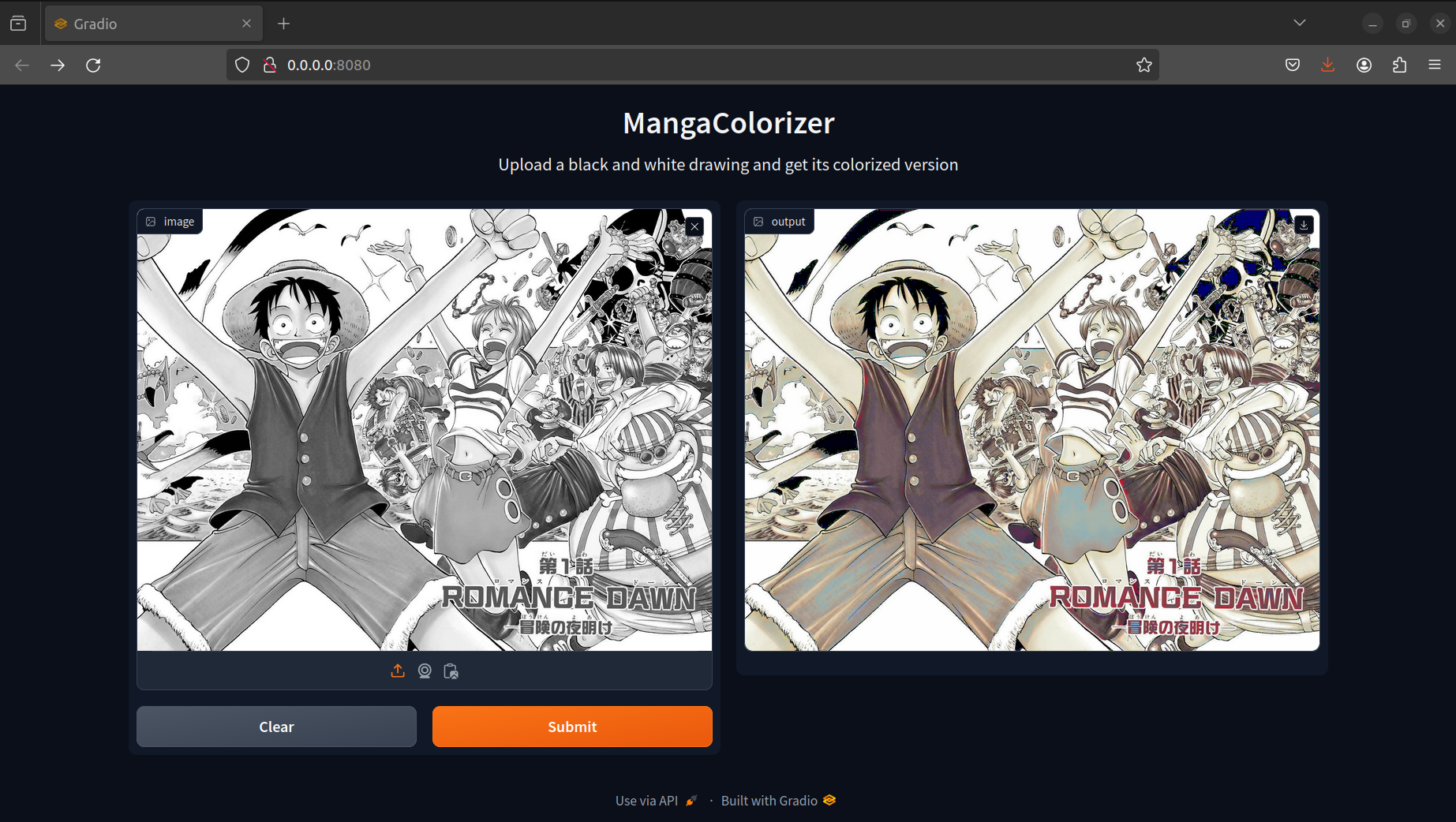

This project colorizes grayscale images, in particular manga, comics, and drawings.

Given a black-and-white (grayscale) image, the model produces a colorized version of it.

## How I built this project

To train the model, I used 755 colored images taken from several chapters of Bleach, Dragon Ball Super, Naruto, One Piece, and Attack on Titan. I used a further 215 images for the validation set and 109 images for the test set.
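The split sizes above (755 / 215 / 109, roughly 70% / 20% / 10% of 1,079 images) are given, but not how images were assigned to each set. The sketch below assumes a simple seeded random shuffle per image; the function name `split_dataset` and the file names are hypothetical and not taken from the project.

```python
import random

def split_dataset(paths, n_train=755, n_val=215, n_test=109, seed=0):
    """Hypothetical split: shuffle image paths with a fixed seed, then cut them
    into disjoint train / validation / test lists of the requested sizes."""
    if len(paths) < n_train + n_val + n_test:
        raise ValueError("not enough images for the requested split")
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:n_train + n_val + n_test]
    return train, val, test

# Hypothetical file names standing in for the 1,079 colored pages.
paths = [f"page_{i:04d}.png" for i in range(1079)]
train, val, test = split_dataset(paths)
print(len(train), len(val), len(test))  # 755 215 109
```

Because pages from the same chapter can look very similar, splitting by chapter rather than by individual image may give a more honest test score; which approach the project used is not stated.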

In the current version, I trained an encoder-decoder model from scratch with the following architecture:

```
MangaColorizer(
  (encoder): Sequential(
    (0): Conv2d(1, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU(inplace=True)
    (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
    (3): ReLU(inplace=True)
    (4): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
    (5): ReLU(inplace=True)
  )
  (decoder): Sequential(
    (0): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))
    (1): ReLU(inplace=True)
    (2): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1))
    (3): ReLU(inplace=True)
    (4): ConvTranspose2d(64, 3, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (5): Tanh()
  )
)
```
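The encoder halves the spatial size twice (1 to 64 to 128 to 256 channels) and the decoder doubles it twice (256 to 128 to 64 to 3 channels), so the colorized output has the same height and width as the grayscale input. The sketch below traces this with the standard PyTorch output-size formulas for `Conv2d` and `ConvTranspose2d`, assuming dilation 1 and `output_padding=0` (the defaults, which the printout does not override). The helper names are hypothetical.

```python
def conv2d_out(size, kernel, stride, padding):
    """Conv2d output size along one spatial dimension (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose2d_out(size, kernel, stride, padding):
    """ConvTranspose2d output size along one dimension (dilation 1, output_padding 0)."""
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) per convolution, copied from the printed architecture.
ENCODER = [(3, 1, 1), (3, 2, 1), (3, 2, 1)]
DECODER = [(4, 2, 1), (4, 2, 1), (3, 1, 1)]

def trace_sizes(size):
    """Spatial size of the input followed by the size after each conv layer."""
    sizes = [size]
    for k, s, p in ENCODER:
        sizes.append(conv2d_out(sizes[-1], k, s, p))
    for k, s, p in DECODER:
        sizes.append(conv_transpose2d_out(sizes[-1], k, s, p))
    return sizes

print(trace_sizes(256))  # [256, 256, 128, 64, 128, 256, 256]
print(trace_sizes(255))  # [255, 255, 128, 64, 128, 256, 256]
```

With kernel 4, stride 2, and padding 1, each transposed convolution exactly doubles its input size. The round trip therefore preserves the size only when the input height and width are multiples of 4; a 255-pixel side comes back as 256.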

The model's input is the grayscale version of a manga image, and its target is the colored version of the same image.
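How the grayscale inputs and color targets are prepared is not described here. The sketch below builds one (input, target) pair under two assumptions: grayscale uses the ITU-R BT.601 luma weights (the same ones Pillow documents for `Image.convert("L")`), and pixel values are scaled from 0-255 to [-1, 1] so the target matches the range of the decoder's final `Tanh`. Images are plain nested lists to keep the example dependency-free; the function names are hypothetical.

```python
# Hypothetical preprocessing. Assumptions (not from the README): BT.601 luma for the
# grayscale input, and linear scaling of 0-255 values to [-1, 1] to match Tanh.

def to_grayscale(image):
    """Convert an RGB image (rows of (r, g, b) tuples in 0-255) to luma values in 0-255."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in image]

def scale_to_tanh_range(value):
    """Map a value in 0-255 linearly onto [-1, 1]."""
    return value / 127.5 - 1.0

def make_pair(color_image):
    """Return (input, target): a 1-channel grayscale input and the 3-channel color target."""
    gray = [[scale_to_tanh_range(v) for v in row] for row in to_grayscale(color_image)]
    target = [[tuple(scale_to_tanh_range(c) for c in px) for px in row] for row in color_image]
    return gray, target

# A 1x2 "image": one red pixel and one white pixel.
x, y = make_pair([[(255, 0, 0), (255, 255, 255)]])
print(y[0][0])  # (1.0, -1.0, -1.0)
```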

The loss function is the mean squared error (MSE) between the model's output and the target, averaged over all pixel values.
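Concretely, the MSE is the sum of squared differences between predicted and target values divided by N, where N counts every value (all pixels in all three color channels). The sketch below computes it on flattened values. In PyTorch, `torch.nn.MSELoss` with its default `reduction='mean'` computes the same quantity; whether the project uses it is an assumption.

```python
def mse(prediction, target):
    """Mean squared error over flattened pixel values (every position and channel)."""
    if len(prediction) != len(target):
        raise ValueError("prediction and target must have the same number of values")
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(prediction)

# Two of the four values are off by 0.5: (0.25 + 0.25) / 4.
print(mse([1.0, 0.0, -1.0, 0.5], [0.5, 0.0, -1.0, 0.0]))  # 0.125
```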

Currently, it achieves an MSE of 0.00859 on the test set.

For more details, you can refer to the docs directory.