This model is fine-tuned on Japanese and English prompts.

Usage:

Init model:

To use in code:

```python
import torch
import peft
from transformers import LlamaTokenizer, LlamaForCausalLM, GenerationConfig

tokenizer = LlamaTokenizer.from_pretrained(
    "decapoda-research/llama-7b-hf"
)

model = LlamaForCausalLM.from_pretrained(
    "tamdiep106/alpaca_lora_ja_en_emb-7b",
    load_in_8bit=False,
    device_map="auto",
    torch_dtype=torch.float16
)

tokenizer.pad_token_id = 0  # unk. we want this to be different from the eos token
tokenizer.bos_token_id = 1
tokenizer.eos_token_id = 2
```

Try this model

To try out this model, use this Colab notebook: GOOGLE COLAB LINK

Recommended generation parameters:

- temperature: 0.5 to 0.7
- top p: 0.65 to 1.0
- top k: 30 to 50
- repetition penalty: 1.03 to 1.17
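The card lists these ranges but does not show a generation call. Below is a minimal sketch that assumes the Hugging Face `transformers` sampling arguments. `make_generation_kwargs` and `RECOMMENDED_RANGES` are hypothetical names. The default values are an arbitrary pick from inside each range, and `max_new_tokens=256` is an assumption that the card does not specify.

```python
# Ranges copied from the recommended list; the defaults chosen below are an
# arbitrary pick inside each range, not values published with the model.
RECOMMENDED_RANGES = {
    "temperature": (0.5, 0.7),
    "top_p": (0.65, 1.0),
    "top_k": (30, 50),
    "repetition_penalty": (1.03, 1.17),
}


def make_generation_kwargs(temperature=0.6, top_p=0.8, top_k=40,
                           repetition_penalty=1.1, max_new_tokens=256):
    """Hypothetical helper: build keyword arguments for transformers' GenerationConfig."""
    sampling = {
        "temperature": temperature,
        "top_p": top_p,
        "top_k": top_k,
        "repetition_penalty": repetition_penalty,
    }
    # Reject values outside the ranges recommended in this card.
    for name, (low, high) in RECOMMENDED_RANGES.items():
        if not low <= sampling[name] <= high:
            raise ValueError(
                f"{name}={sampling[name]} is outside the recommended range [{low}, {high}]"
            )
    # do_sample=True is needed for temperature/top_p/top_k to take effect;
    # return_dict_in_generate=True makes generate() return an object with .sequences.
    return {
        **sampling,
        "do_sample": True,
        "max_new_tokens": max_new_tokens,  # assumption: not given in the card
        "return_dict_in_generate": True,
    }
```

With the model and tokenizer loaded above, you could build the inputs with `tokenizer(prompt, return_tensors="pt").to(model.device)` and then call `model.generate(**inputs, generation_config=GenerationConfig(**make_generation_kwargs()))`. The result exposes the `.sequences` that the decoding code further down iterates over. `do_sample=True` matters here: without it, `generate` decodes greedily and ignores temperature, top p, and top k.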

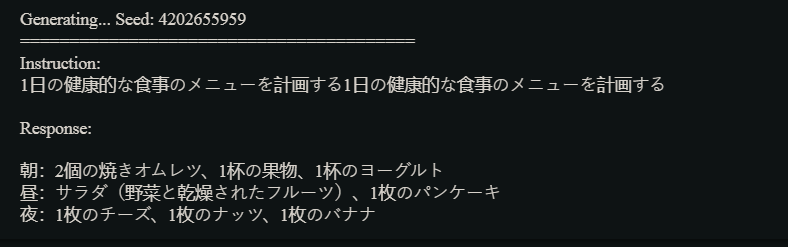

Japanese prompt:

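```python
instruction_input_JP = 'あなたはアシスタントです。以下に、タスクを説明する指示と、さらなるコンテキストを提供する入力を組み合わせます。 リクエストを適切に完了するレスポンスを作成します。'
# English: "You are an assistant. Below, an instruction that describes a task is combined
# with an input that provides further context. Write a response that appropriately completes the request."

instruction_no_input_JP = 'あなたはアシスタントです。以下はタスクを説明する指示です。 リクエストを適切に完了するレスポンスを作成します。'
# English: "You are an assistant. Below is an instruction that describes a task.
# Write a response that appropriately completes the request."

prompt = """{}

### Instruction:
{}

### Response:"""

instruction = "..."  # placeholder: your instruction
input = ""           # optional extra context; leave empty if there is none

if input == '':
    prompt = prompt.format(
        instruction_no_input_JP, instruction
    )
else:
    # Keep the first slot for the system text, splice an "### input:" section
    # into the second slot, then fill all three slots.
    prompt = prompt.format("{}", "{}\n\n### input:\n{}").format(
        instruction_input_JP, instruction, input
    )
```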

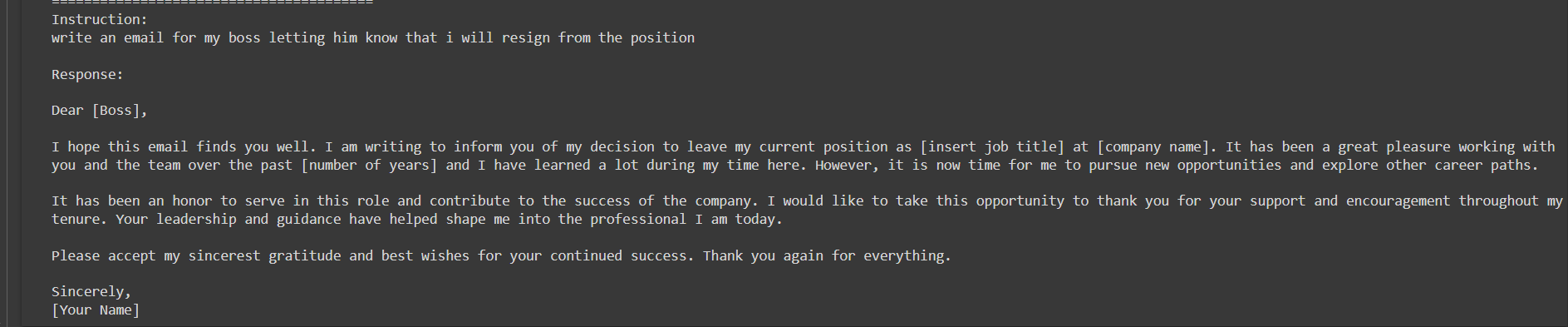

English prompt:

```python
instruction_input_EN = 'You are an Assistant, below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.'

instruction_no_input_EN = 'You are an Assistant, below is an instruction that describes a task. Write a response that appropriately completes the request.'

prompt = """{}

### Instruction:
{}

### Response:"""

instruction = "write an email for my boss letting him know that i will resign from the position" #@param {type:"string"}
input = "" #@param {type:"string"}

if input == '':
    prompt = prompt.format(
        instruction_no_input_EN, instruction
    )
else:
    # Keep the first slot for the system text, splice an "### input:" section
    # into the second slot, then fill all three slots.
    prompt = prompt.format("{}", "{}\n\n### input:\n{}").format(
        instruction_input_EN, instruction, input
    )
```
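The Japanese and English snippets differ only in their system text, so one function can cover both. Below is a sketch of that idea. `build_prompt`, `SYSTEM_TEXTS`, and the `lang` codes are hypothetical names that are not part of the original card. The sketch also assumes that the template puts a blank line before each `###` header and a single newline after it, which matches the `### input:` string the card uses.

```python
# System texts copied verbatim from the Japanese and English examples.
SYSTEM_TEXTS = {
    "ja": {
        "with_input": "あなたはアシスタントです。以下に、タスクを説明する指示と、さらなるコンテキストを提供する入力を組み合わせます。 リクエストを適切に完了するレスポンスを作成します。",
        "no_input": "あなたはアシスタントです。以下はタスクを説明する指示です。 リクエストを適切に完了するレスポンスを作成します。",
    },
    "en": {
        "with_input": "You are an Assistant, below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.",
        "no_input": "You are an Assistant, below is an instruction that describes a task. Write a response that appropriately completes the request.",
    },
}


def build_prompt(instruction: str, input_text: str = "", lang: str = "en") -> str:
    """Hypothetical helper: assemble a prompt in the card's Instruction/Response format."""
    texts = SYSTEM_TEXTS[lang]
    if input_text == "":
        return f"{texts['no_input']}\n\n### Instruction:\n{instruction}\n\n### Response:"
    # With extra context, an "### input:" section goes between instruction and response.
    return (
        f"{texts['with_input']}\n\n### Instruction:\n{instruction}"
        f"\n\n### input:\n{input_text}\n\n### Response:"
    )
```

For example, `build_prompt("write an email for my boss", lang="en")` yields the same kind of string as the English snippet with an empty `input`. Using `input_text` instead of `input` also avoids shadowing Python's built-in `input`.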

Use this code to decode the model's output:

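```python
# generation_output is assumed to come from model.generate(..., return_dict_in_generate=True),
# which returns an object whose .sequences holds the generated token ids.
for s in generation_output.sequences:
    result = tokenizer.decode(s)
    # Drop the special tokens first, then the echoed prompt, then surrounding whitespace.
    result = result.replace("<s>", "").replace("</s>", "")
    result = result.replace(prompt, '').strip()
    if result == '':
        print('No output')
        print(prompt)
        continue
    print('\nResponse: ')
    print(result)
```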
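Removing the prompt with `result.replace(prompt, '')` only works when the decoded text reproduces the prompt character for character, and a tokenizer round trip may change whitespace. One alternative is to keep only the text after the last `### Response:` marker. The sketch below does that. `extract_response` is a hypothetical helper, and it assumes the generated answer never contains the marker itself.

```python
def extract_response(decoded: str, marker: str = "### Response:") -> str:
    """Hypothetical helper: return the text after the last response marker."""
    # Remove LLaMA's BOS/EOS strings in case decoding kept them.
    text = decoded.replace("<s>", "").replace("</s>", "")
    # rpartition returns ("", "", text) when the marker is absent,
    # so the whole text is kept in that case.
    _, _, response = text.rpartition(marker)
    return response.strip()
```

Inside the loop, this would be `print(extract_response(tokenizer.decode(s)))`. Passing `skip_special_tokens=True` to `tokenizer.decode` is another way to drop `<s>` and `</s>`.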

Training:

Datasets:

- Jumtra/oasst1_ja
- Jumtra/jglue_jsquads_with_input
- Jumtra/dolly_oast_jglue_ja
- Aruno/guanaco_jp
- yahma/alpaca-cleaned
- databricks/databricks-dolly-15k

Together these contain about 750k entries, of which 2k were used for evaluation.
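The card says 2k of the roughly 750k entries were used for evaluation, but it does not say how they were chosen. Below is a minimal sketch of one common approach: a seeded random holdout over the merged records. `split_holdout` and the seed value are hypothetical.

```python
import random


def split_holdout(records, n_eval=2000, seed=42):
    """Hypothetical helper: shuffle a copy of records and hold out n_eval for evaluation."""
    if not 0 < n_eval < len(records):
        raise ValueError("n_eval must be between 1 and len(records) - 1")
    shuffled = list(records)
    # A seeded generator makes the split reproducible across runs.
    random.Random(seed).shuffle(shuffled)
    return shuffled[n_eval:], shuffled[:n_eval]  # (train, eval)
```

Hugging Face `datasets` gives the same kind of split with `Dataset.train_test_split(test_size=2000, seed=42)`, because an integer `test_size` is read as an absolute number of examples.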

Training setup

I trained this model on an instance from vast.ai with:

- 1 NVIDIA RTX 4090
- 90 GB storage

Training took about three and a half days.

Use `python export.py` to merge the LoRA weights into the base model.

Training script: https://github.com/Tamminhdiep97/alpaca-lora_finetune/tree/master
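This card does not reproduce `export.py`, so the code below is not that script. It sketches the same idea using `peft`'s `PeftModel.from_pretrained` and `merge_and_unload()`. `merge_lora_weights` is a hypothetical helper, and its arguments are placeholder paths.

```python
def merge_lora_weights(base_model_name, adapter_path, output_dir):
    """Hypothetical helper: fold LoRA adapter weights into the base model and save it.

    Imports live inside the function so that defining it does not require
    torch, transformers, or peft to be installed.
    """
    import torch
    from peft import PeftModel
    from transformers import LlamaForCausalLM

    base = LlamaForCausalLM.from_pretrained(base_model_name, torch_dtype=torch.float16)
    lora_model = PeftModel.from_pretrained(base, adapter_path)
    # merge_and_unload adds the LoRA deltas into the base weights and
    # returns a plain transformers model without adapter wrappers.
    merged = lora_model.merge_and_unload()
    merged.save_pretrained(output_dir)
    return output_dir
```

A call such as `merge_lora_weights("decapoda-research/llama-7b-hf", "path/to/lora-adapter", "merged-model")` would produce a directory that loads with `LlamaForCausalLM.from_pretrained`, as in the usage section. Here `path/to/lora-adapter` and `merged-model` are placeholders.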

Result

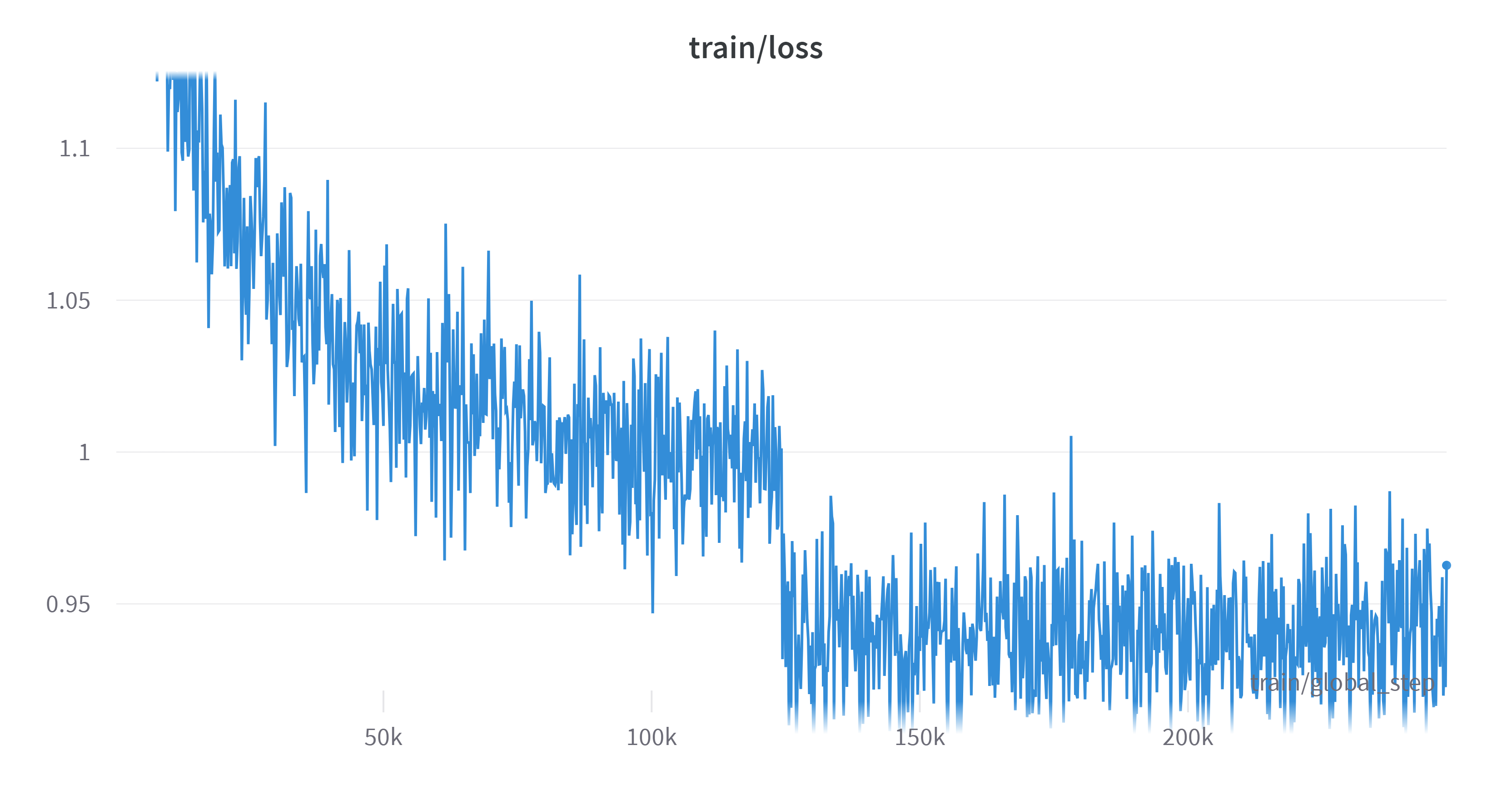

- Training loss

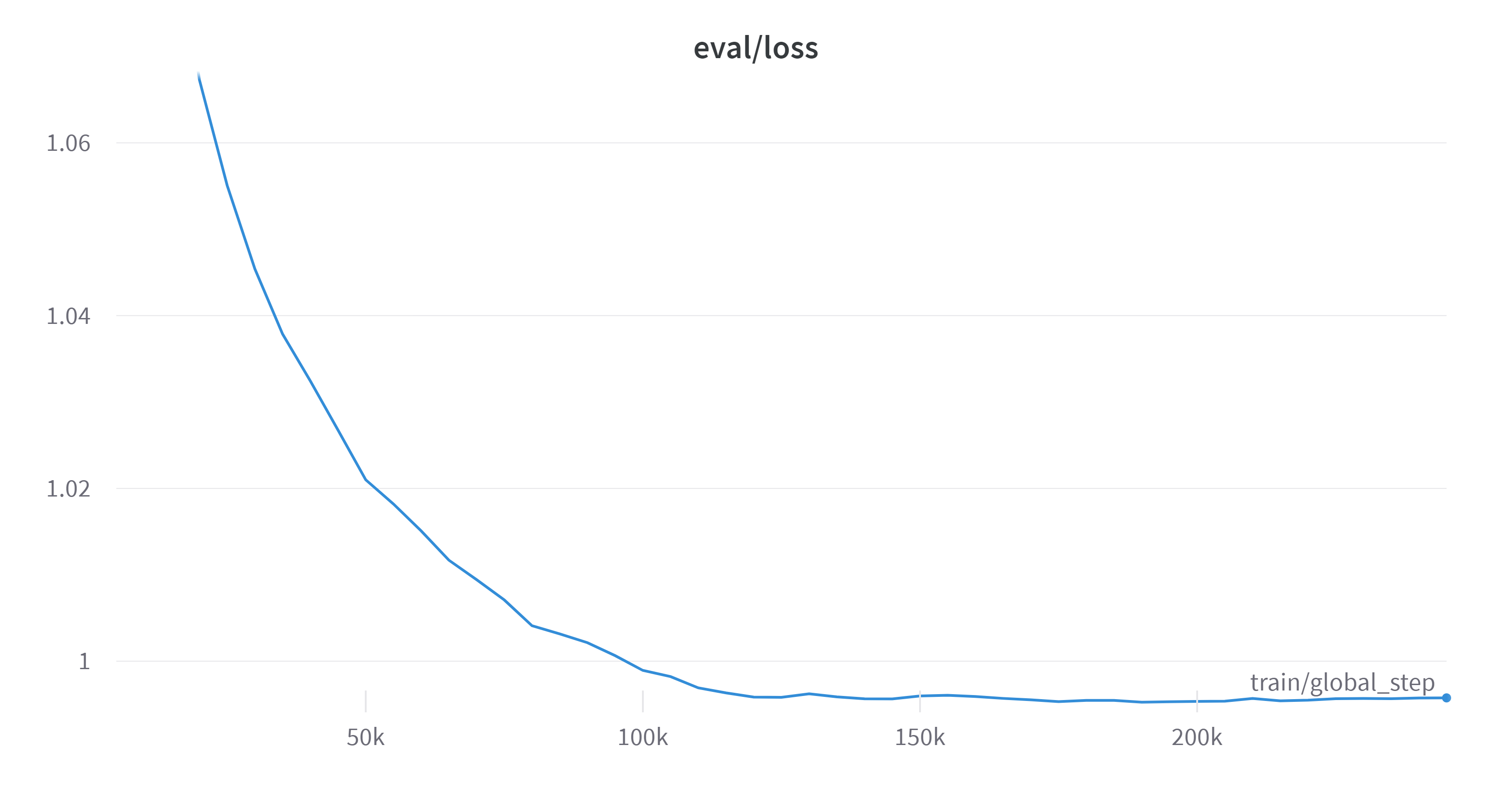

- Eval loss chart

Acknowledgement

- Special thanks to KBlueLeaf and the repo https://huggingface.co/KBlueLeaf/guanaco-7b-leh-v2, which helped and inspired me to train this model. Without this help, I would never have thought that I could fine-tune an LLM myself.
