---
license: apache-2.0
language:
- en
widget:
- text: It is raining and my family
  example_title: Example 1
- text: We entered into the forest and
  example_title: Example 2
- text: I sat for doing my homework
  example_title: Example 3
---

Custom GPT Model

Model Description

This model was designed and pretrained from scratch, without using the Hugging Face library.

Model Parameters

- Block Size: 256 (maximum sequence length)

- Vocab Size: 50257 (includes 50,000 BPE merges, 256 byte-level tokens, and 1 special token)

- Number of Layers: 8

- Number of Heads: 8

- Embedding Dimension: 768

- Max Learning Rate: 0.0006

- Min Learning Rate: 0.00006 (10% of max_lr)

- Warmup Steps: 715

- Max Steps: 52000

- Total Batch Size: 524288 (number of tokens per batch)

- Micro Batch Size: 128

- Sequence Length: 256
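The batch and learning-rate settings above imply two derived quantities: the number of micro-batches accumulated per optimizer step, and the learning rate at a given step. The sketch below computes both. It assumes a single training process and a linear warmup followed by cosine decay; the card does not state the decay shape, so `get_lr` is a hypothetical helper, not the original training code.

```python
import math

# Values copied from the parameter list above.
max_lr = 6e-4
min_lr = max_lr * 0.1        # 6e-5, i.e. 10% of max_lr
warmup_steps = 715
max_steps = 52000
total_batch_size = 524288    # tokens per optimizer step
micro_batch_size = 128       # sequences per forward/backward pass
sequence_length = 256

# Assumption: one process. With N data-parallel workers, divide by N as well.
tokens_per_micro_batch = micro_batch_size * sequence_length
assert total_batch_size % tokens_per_micro_batch == 0
grad_accum_steps = total_batch_size // tokens_per_micro_batch


def get_lr(step: int) -> float:
    """Hypothetical schedule: linear warmup, then cosine decay down to min_lr."""
    if step < warmup_steps:
        return max_lr * (step + 1) / warmup_steps
    if step >= max_steps:
        return min_lr
    progress = (step - warmup_steps) / (max_steps - warmup_steps)
    coeff = 0.5 * (1.0 + math.cos(math.pi * progress))  # falls from 1 to 0
    return min_lr + coeff * (max_lr - min_lr)


print("gradient accumulation steps:", grad_accum_steps)
for step in (0, warmup_steps, max_steps // 2, max_steps):
    print(step, get_lr(step))
```

With the listed values, one micro-batch holds 128 × 256 = 32,768 tokens, so 16 micro-batches are accumulated per optimizer step under the single-process assumption.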

Model Parameters Details

Decayed Parameters

- Total Decayed Parameters: 95,453,184

Decayed parameters typically include weights from the model's various layers (like the transformer blocks), which are subject to weight decay during optimization. This technique helps in regularizing the model, potentially reducing overfitting by penalizing large weights.

Non-Decayed Parameters

- Total Non-Decayed Parameters: 81,408

Non-decayed parameters generally involve biases and layer normalization parameters. These parameters are excluded from weight decay as applying decay can adversely affect the training process by destabilizing the learning dynamics.

Total Parameters

- Overall Total Parameters: 95,534,592

The calculated total number of parameters includes both decayed and non-decayed tensors, summing up to over 95 million parameters.
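The decayed and non-decayed totals can be reproduced with a short count. The sketch below assumes a GPT-2 style layout (fused QKV projection, 4x MLP, a bias on every linear layer, output head tied to the token embedding) and the common rule that tensors with two or more dimensions are decayed. It also assumes the embedding table is padded to 50,304 rows. Neither the layout nor the padding is stated in this card; under these assumptions the counts match the figures reported above, whereas the listed vocab size of 50,257 would give 95,417,088 decayed parameters. The names `gpt2_shapes` and `count` are illustrative only.

```python
from math import prod

n_layer, n_embd, block_size = 8, 768, 256
padded_vocab = 50304  # assumption: 50,257 rounded up; not stated in the card


def gpt2_shapes(vocab_size):
    """Tensor shapes of a GPT-2 style model whose output head is tied to wte."""
    shapes = {
        "wte.weight": (vocab_size, n_embd),
        "wpe.weight": (block_size, n_embd),
        "ln_f.weight": (n_embd,),
        "ln_f.bias": (n_embd,),
    }
    for i in range(n_layer):
        p = f"h.{i}."
        shapes[p + "ln_1.weight"] = (n_embd,)
        shapes[p + "ln_1.bias"] = (n_embd,)
        shapes[p + "attn.c_attn.weight"] = (3 * n_embd, n_embd)
        shapes[p + "attn.c_attn.bias"] = (3 * n_embd,)
        shapes[p + "attn.c_proj.weight"] = (n_embd, n_embd)
        shapes[p + "attn.c_proj.bias"] = (n_embd,)
        shapes[p + "ln_2.weight"] = (n_embd,)
        shapes[p + "ln_2.bias"] = (n_embd,)
        shapes[p + "mlp.c_fc.weight"] = (4 * n_embd, n_embd)
        shapes[p + "mlp.c_fc.bias"] = (4 * n_embd,)
        shapes[p + "mlp.c_proj.weight"] = (n_embd, 4 * n_embd)
        shapes[p + "mlp.c_proj.bias"] = (n_embd,)
    return shapes


def count(shapes):
    """Split the parameter count by the 'two or more dimensions' decay rule."""
    decayed = sum(prod(s) for s in shapes.values() if len(s) >= 2)
    non_decayed = sum(prod(s) for s in shapes.values() if len(s) < 2)
    return decayed, non_decayed


decayed, non_decayed = count(gpt2_shapes(padded_vocab))
print(f"decayed:     {decayed:,}")
print(f"non-decayed: {non_decayed:,}")
print(f"total:       {decayed + non_decayed:,}")
```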

Dataset Description

Overview

The model was pretrained on 3 billion tokens drawn from the "Sample 10B" segment of the HuggingFaceFW/fineweb-edu dataset. The dataset is compiled from educational and academic web sources, which makes it a good foundation for language models that need to handle academic and formal text.

Dataset Source

The dataset is hosted and maintained on Hugging Face's dataset repository. More detailed information and access to the dataset can be found through its dedicated page: HuggingFaceFW/fineweb-edu Sample 10B

Training Details

- Total Tokens Used for Training: 3 billion tokens

- Training Duration: The model was trained over 3 epochs to ensure sufficient exposure to the data while optimizing the learning trajectory.

Model Evaluation on HellaSwag Dataset

Performance Overview

Our model, "orator," was evaluated on the HellaSwag dataset, a multiple-choice benchmark for commonsense sentence completion. The loss and accuracy graphs below, together with the key metrics, summarize the results.

Graph Analysis

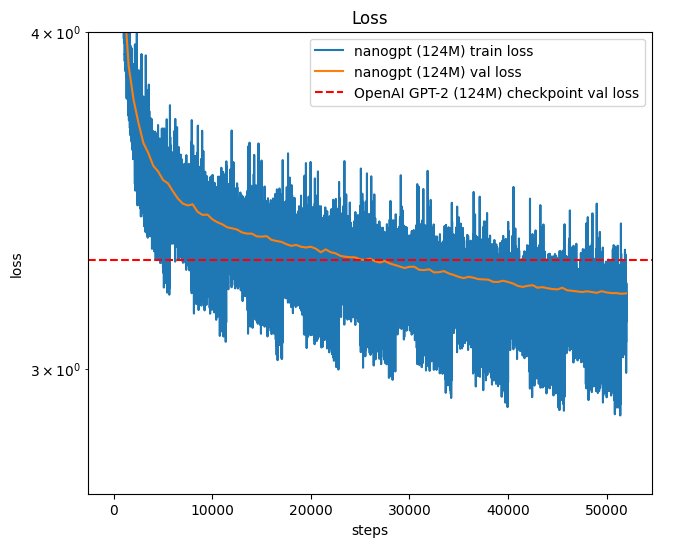

Loss Graph

- Blue Line (Train Loss): Represents the model's loss on the training set over the number of training steps. It shows a sharp decline initially, indicating rapid learning, followed by fluctuations that gradually stabilize.

- Orange Line (Validation Loss): Shows the loss on the validation set. This line is smoother than the training loss, indicating general stability and effectiveness of the model against unseen data.

- Red Dashed Line: Marks the validation loss of a baseline model, OpenAI's GPT-2 (124M), for comparison. Our model achieves lower validation loss, indicating improved performance.

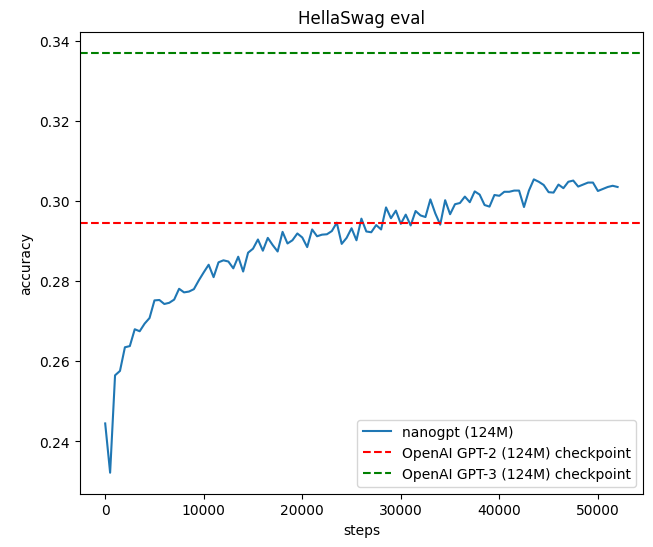

Accuracy Graph (HellaSwag Eval)

- Blue Line: This line represents the accuracy of the "orator" model on the HellaSwag evaluation set. It shows a steady increase in accuracy, reflecting the model's improving capability to correctly predict or complete new scenarios.

- Red Dashed Line: This is the accuracy of the baseline OpenAI GPT-2 (124M) model. Our model consistently surpasses this benchmark after initial training phases.
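HellaSwag gives a context and four candidate endings, so a pure completion model needs a scoring rule to pick one. The card does not say which rule was used. The sketch below shows one common approach, stated here as an assumption: compute the model's per-token loss for each context plus ending, average it over the ending tokens only, and choose the ending with the lowest average. The helper names and the toy loss values are hypothetical and are not outputs of this model.

```python
from typing import Sequence


def pick_ending(token_losses: Sequence[Sequence[float]],
                ending_masks: Sequence[Sequence[int]]) -> int:
    """Return the index of the candidate with the lowest mean loss on its ending.

    token_losses[i][t] is the loss at position t of candidate i.
    ending_masks[i][t] is 1 for ending tokens, 0 for context or padding.
    """
    best_index, best_loss = -1, float("inf")
    for i, (losses, mask) in enumerate(zip(token_losses, ending_masks)):
        n_ending = sum(mask)
        mean_loss = sum(l * m for l, m in zip(losses, mask)) / n_ending
        if mean_loss < best_loss:
            best_index, best_loss = i, mean_loss
    return best_index


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of examples where the chosen ending is the labelled one."""
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return correct / len(labels)


# Toy numbers for one example: two context positions, then the ending.
toy_losses = [
    [2.0, 3.0, 4.0, 5.0],
    [2.0, 3.0, 1.0, 2.0],
    [2.0, 3.0, 3.0, 3.0],
    [2.0, 3.0, 2.5, 2.5],
]
toy_masks = [[0, 0, 1, 1]] * 4
print(pick_ending(toy_losses, toy_masks))
```

Averaging over ending tokens rather than summing keeps longer endings from being penalized simply for their length.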

Key Metrics

- Minimum Training Loss: 2.883471

- Minimum Validation Loss: 3.1989

- Maximum HellaSwag Evaluation Accuracy: 0.3054

Tokenization

For tokenization, this model uses:

tokenizer = tiktoken.get_encoding("gpt2")

How to Use the Model

Load and Generate Text

Below is a Python example on how to load the model and generate text:

import torch
from torch.nn import functional as F
from gpt_class import GPTConfig, GPT
import tiktoken

# Set up the device
device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the model
state_dict = torch.load('model_51999.pt', map_location=device)
config = state_dict['config']
model = GPT(config)
model.load_state_dict(state_dict['model'])
model.to(device)
model.eval()

# Seed for reproducibility
torch.manual_seed(42)
torch.cuda.manual_seed_all(42)

# Tokenizer
tokenizer = tiktoken.get_encoding("gpt2")

def Generate(model, tokenizer, example, num_return_sequences, max_length):
    model.eval()
    # Encode the prompt and repeat it once per requested sample
    tokens = tokenizer.encode(example)
    tokens = torch.tensor(tokens, dtype=torch.long).unsqueeze(0).repeat(num_return_sequences, 1)
    tokens = tokens.to(device)
    sample_rng = torch.Generator(device=device)
    xgen = tokens
    # Append one sampled token per iteration until max_length is reached
    while xgen.size(1) < max_length:
        with torch.no_grad():
            with torch.autocast(device_type=device):
                logits, _ = model(xgen)
            # Keep only the logits at the last position
            logits = logits[:, -1, :]
            probs = F.softmax(logits, dim=-1)
            # Top-k sampling: draw from the 50 most probable tokens
            topk_probs, topk_indices = torch.topk(probs, 50, dim=-1)
            ix = torch.multinomial(topk_probs, 1, generator=sample_rng)
            # Map the sampled position back to a vocabulary index
            xcol = torch.gather(topk_indices, -1, ix)
            xgen = torch.cat((xgen, xcol), dim=1)
    # Decode and print each generated sequence
    for i in range(num_return_sequences):
        tokens = xgen[i, :max_length].tolist()
        decoded = tokenizer.decode(tokens)
        print(f"Sample {i+1}: {decoded}")

# Example usage
Generate(model, tokenizer, example="As we entered the forest we saw", num_return_sequences=4, max_length=32)

Sample Output

Sample 1: As we entered the forest we saw huge white pine fells at the tops of the high plateaus (the great peaks) and trees standing at ground level.

Sample 2: As we entered the forest we saw a few trees that were too large. We realized they were not going to be very big. There was one tree that was

Sample 3: As we entered the forest we saw a group of small, wood-dwelling bees who had managed to escape a predator. A farmer was holding a handful

Sample 4: As we entered the forest we saw giant, blue-eyed, spotted beetles on the ground, a grayling beetle in my lawn next to the pond, an
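The sampling step in `Generate` restricts each draw to the 50 most probable tokens and then samples among them in proportion to their probabilities. The sketch below restates that step for a single position with the standard library only, so it can be read and run without PyTorch. `sample_top_k` is an illustrative helper, not part of the original code.

```python
import math
import random
from typing import Sequence


def sample_top_k(logits: Sequence[float], k: int, rng: random.Random) -> int:
    """Sample one token index from the k most probable entries of `logits`."""
    # Softmax, with the maximum subtracted for numerical stability.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Indices of the k largest probabilities (the torch.topk step).
    top_indices = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    top_probs = [probs[i] for i in top_indices]

    # Draw in proportion to the kept probabilities; like torch.multinomial,
    # random.choices does not need the weights to sum to 1.
    return rng.choices(top_indices, weights=top_probs, k=1)[0]


rng = random.Random(42)
toy_logits = [0.1, 2.0, -1.0, 1.5]
print([sample_top_k(toy_logits, 2, rng) for _ in range(10)])
```

With `k=1` this reduces to greedy decoding; larger `k` trades determinism for variety, which is why the four samples above diverge after the shared prompt.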

Accessing the Original Code

The original code for the Orator model (architecture, pretraining, evaluation, and generation) is available in the GitHub repository.

GitHub Repository: my-temporary-name/my_gpt2