Salient Object-Aware Background Generation using Text-Guided Diffusion Models

This repository accompanies our paper, Salient Object-Aware Background Generation using Text-Guided Diffusion Models, which has been accepted for publication in CVPR 2024 Generative Models for Computer Vision workshop.

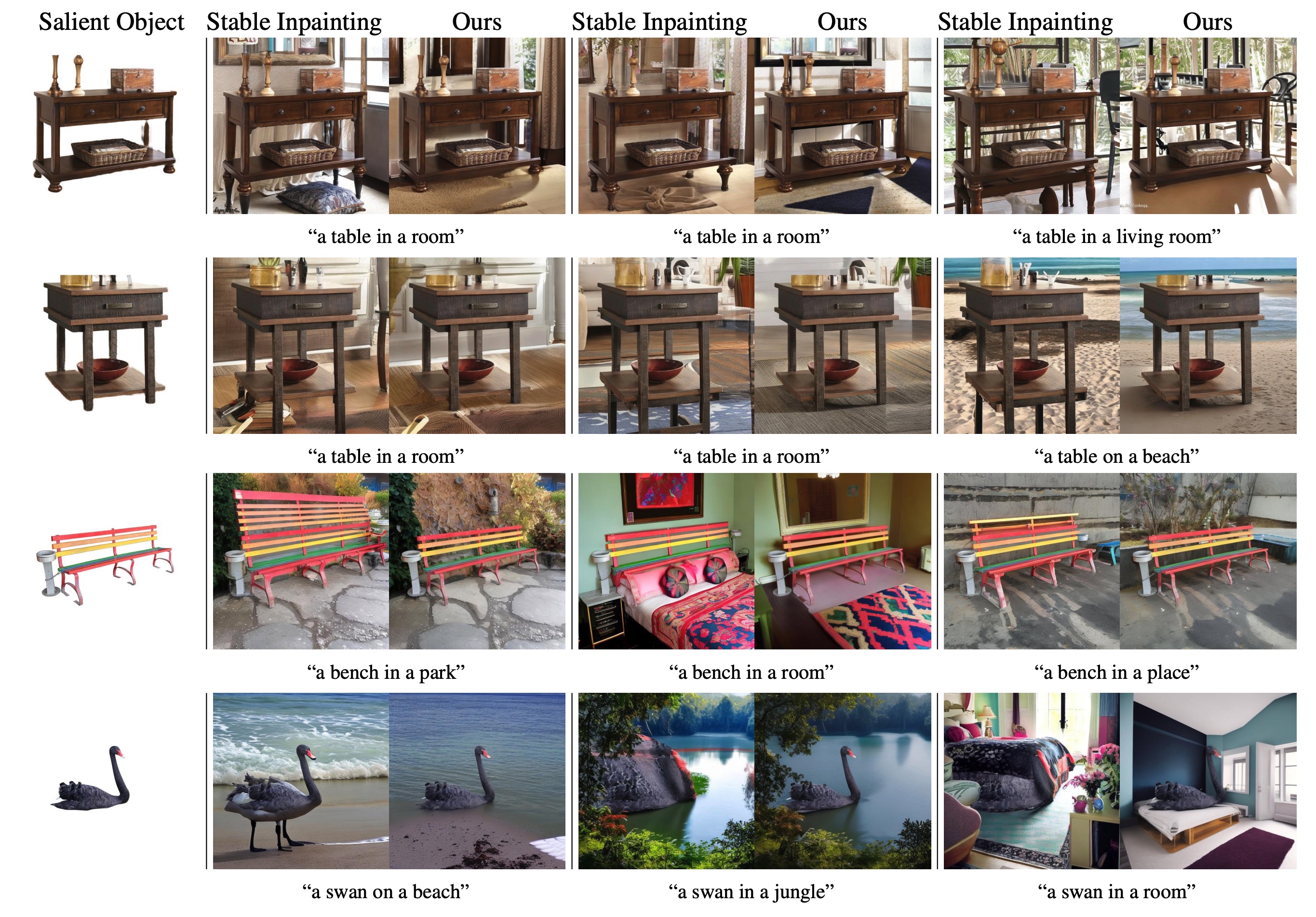

The paper addresses an issue we call "object expansion" when generating backgrounds for salient objects using inpainting diffusion models. We show that models such as Stable Inpainting can sometimes arbitrarily expand or distort the salient object, which is undesirable in applications where the object's identity should be preserved, such as e-commerce ads. We provide some examples of object expansion as follows:

Inference

Load pipeline

from diffusers import DiffusionPipeline

model_id = "yahoo-inc/photo-background-generation"

pipeline = DiffusionPipeline.from_pretrained(model_id, custom_pipeline=model_id)

pipeline = pipeline.to('cuda')

Load an image and extract its background and foreground

from PIL import Image, ImageOps

import requests

from io import BytesIO

from transparent_background import Remover

def resize_with_padding(img, expected_size):

img.thumbnail((expected_size[0], expected_size[1]))

# print(img.size)

delta_width = expected_size[0] - img.size[0]

delta_height = expected_size[1] - img.size[1]

pad_width = delta_width // 2

pad_height = delta_height // 2

padding = (pad_width, pad_height, delta_width - pad_width, delta_height - pad_height)

return ImageOps.expand(img, padding)

seed = 0

image_url = 'https://upload.wikimedia.org/wikipedia/commons/thumb/1/16/Granja_comary_Cisne_-_Escalavrado_e_Dedo_De_Deus_ao_fundo_-Teres%C3%B3polis.jpg/2560px-Granja_comary_Cisne_-_Escalavrado_e_Dedo_De_Deus_ao_fundo_-Teres%C3%B3polis.jpg'

response = requests.get(image_url)

img = Image.open(BytesIO(response.content))

img = resize_with_padding(img, (512, 512))

# Load background detection model

remover = Remover() # default setting

remover = Remover(mode='base') # nightly release checkpoint

# Get foreground mask

fg_mask = remover.process(img, type='map') # default setting - transparent background

Background generation

seed = 13

mask = ImageOps.invert(fg_mask)

img = resize_with_padding(img, (512, 512))

generator = torch.Generator(device='cuda').manual_seed(seed)

prompt = 'A dark swan in a bedroom'

cond_scale = 1.0

with torch.autocast("cuda"):

controlnet_image = pipeline(

prompt=prompt, image=img, mask_image=mask, control_image=mask, num_images_per_prompt=1, generator=generator, num_inference_steps=20, guess_mode=False, controlnet_conditioning_scale=cond_scale

).images[0]

controlnet_image

Citations

If you found our work useful, please consider citing our paper:

@misc{eshratifar2024salient,

title={Salient Object-Aware Background Generation using Text-Guided Diffusion Models},

author={Amir Erfan Eshratifar and Joao V. B. Soares and Kapil Thadani and Shaunak Mishra and Mikhail Kuznetsov and Yueh-Ning Ku and Paloma de Juan},

year={2024},

eprint={2404.10157},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Maintainers

- Erfan Eshratifar: [email protected]

- Joao Soares: [email protected]

License

This project is licensed under the terms of the Apache 2.0 open source license. Please refer to LICENSE for the full terms.

- Downloads last month

- 9,656