---
license: apache-2.0
tags:
- moe
model-index:
- name: MixTAO-7Bx2-MoE-Instruct-v7.0
  results:
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: AI2 Reasoning Challenge (25-Shot)
      type: ai2_arc
      config: ARC-Challenge
      split: test
      args:
        num_few_shot: 25
    metrics:
    - type: acc_norm
      value: 74.23
      name: normalized accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: HellaSwag (10-Shot)
      type: hellaswag
      split: validation
      args:
        num_few_shot: 10
    metrics:
    - type: acc_norm
      value: 89.37
      name: normalized accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: MMLU (5-Shot)
      type: cais/mmlu
      config: all
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 64.54
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: TruthfulQA (0-shot)
      type: truthful_qa
      config: multiple_choice
      split: validation
      args:
        num_few_shot: 0
    metrics:
    - type: mc2
      value: 74.26
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: Winogrande (5-shot)
      type: winogrande
      config: winogrande_xl
      split: validation
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 87.77
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: GSM8k (5-shot)
      type: gsm8k
      config: main
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 69.14
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0
      name: Open LLM Leaderboard
---

MixTAO-7Bx2-MoE-Instruct

MixTAO-7Bx2-MoE-Instruct is a Mixture of Experts (MoE) model.

💻 Usage

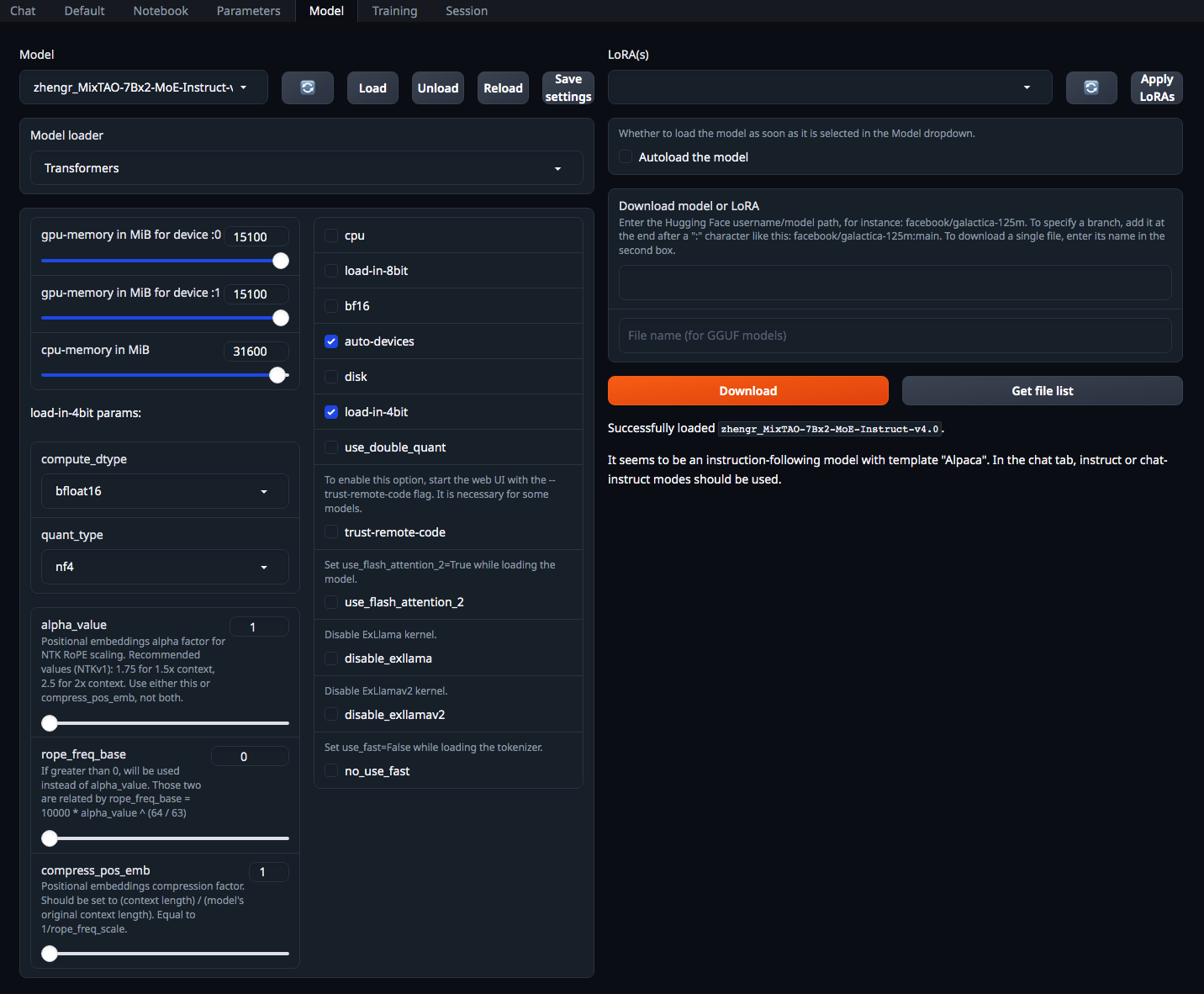

text-generation-webui - Model Tab

Chat template

{%- for message in messages %}

{%- if message['role'] == 'system' -%}

{{- message['content'] + '\n\n' -}}

{%- else -%}

{%- if message['role'] == 'user' -%}

{{- name1 + ': ' + message['content'] + '\n'-}}

{%- else -%}

{{- name2 + ': ' + message['content'] + '\n' -}}

{%- endif -%}

{%- endif -%}

{%- endfor -%}
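To make the output of the chat template concrete, here is a minimal pure-Python sketch of the string it produces. The function name `build_chat_prompt` is hypothetical, and the default values for `name1` and `name2` are assumptions: in text-generation-webui these variables are filled in from the user and character names configured in the UI.

```python
def build_chat_prompt(messages, name1="You", name2="Assistant"):
    """Mirror the chat template above in plain Python.

    name1 / name2 stand in for the template variables of the same name;
    their default values here are illustrative assumptions.
    """
    parts = []
    for message in messages:
        if message["role"] == "system":
            # System text is emitted as-is, followed by a blank line.
            parts.append(message["content"] + "\n\n")
        elif message["role"] == "user":
            parts.append(name1 + ": " + message["content"] + "\n")
        else:
            # Any other role is treated as the model's turn.
            parts.append(name2 + ": " + message["content"] + "\n")
    return "".join(parts)


example = [
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": "Hello"},
    {"role": "assistant", "content": "Hi there"},
]
print(build_chat_prompt(example, name1="You", name2="AI"))
```

Because every tag in the template uses whitespace control (`{%-` and `-%}`), the line breaks between tags do not appear in the rendered prompt; only the `'\n'` strings inside the expressions do.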

Instruction template: Alpaca

Change this according to the model/LoRA that you are using. Used in instruct and chat-instruct modes.

{%- set ns = namespace(found=false) -%}

{%- for message in messages -%}

{%- if message['role'] == 'system' -%}

{%- set ns.found = true -%}

{%- endif -%}

{%- endfor -%}

{%- if not ns.found -%}

{{- '' + 'Below is an instruction that describes a task. Write a response that appropriately completes the request.' + '\n\n' -}}

{%- endif %}

{%- for message in messages %}

{%- if message['role'] == 'system' -%}

{{- '' + message['content'] + '\n\n' -}}

{%- else -%}

{%- if message['role'] == 'user' -%}

{{-'### Instruction:\n' + message['content'] + '\n\n'-}}

{%- else -%}

{{-'### Response:\n' + message['content'] + '\n\n' -}}

{%- endif -%}

{%- endif -%}

{%- endfor -%}

{%- if add_generation_prompt -%}

{{-'### Response:\n'-}}

{%- endif -%}
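The Alpaca instruction template above can be read as the following pure-Python sketch, which is handy for building prompts outside text-generation-webui. The names `build_alpaca_prompt` and `DEFAULT_SYSTEM` are hypothetical; the logic follows the template as written, including the default preamble that is added only when no system message is present.

```python
DEFAULT_SYSTEM = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def build_alpaca_prompt(messages, add_generation_prompt=True):
    """Mirror the Alpaca instruction template above in plain Python."""
    parts = []
    # The default preamble is used only if no system message was supplied.
    if not any(m["role"] == "system" for m in messages):
        parts.append(DEFAULT_SYSTEM + "\n\n")
    for m in messages:
        if m["role"] == "system":
            parts.append(m["content"] + "\n\n")
        elif m["role"] == "user":
            parts.append("### Instruction:\n" + m["content"] + "\n\n")
        else:
            parts.append("### Response:\n" + m["content"] + "\n\n")
    # Leave an open response header for the model to complete.
    if add_generation_prompt:
        parts.append("### Response:\n")
    return "".join(parts)


prompt = build_alpaca_prompt([{"role": "user", "content": "Name three primes."}])
print(prompt)
```

With a single user message and `add_generation_prompt=True`, the prompt consists of the default preamble, one `### Instruction:` block, and a trailing `### Response:` header.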

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 76.55 |
| AI2 Reasoning Challenge (25-Shot) | 74.23 |
| HellaSwag (10-Shot) | 89.37 |
| MMLU (5-Shot) | 64.54 |
| TruthfulQA (0-shot) | 74.26 |
| Winogrande (5-shot) | 87.77 |
| GSM8k (5-shot) | 69.14 |
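The reported average appears to be the unweighted mean of the six benchmark scores. A quick check, using only the values from the table (the variable names are illustrative):

```python
# Benchmark scores copied from the table above.
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 74.23,
    "HellaSwag (10-Shot)": 89.37,
    "MMLU (5-Shot)": 64.54,
    "TruthfulQA (0-shot)": 74.26,
    "Winogrande (5-shot)": 87.77,
    "GSM8k (5-shot)": 69.14,
}

# Unweighted mean, rounded to two decimals like the table.
average = round(sum(scores.values()) / len(scores), 2)
print(average)  # 76.55
```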