license: cc-by-nc-nd-4.0

ConsistencyTTA: Accelerating Diffusion-Based Text-to-Audio Generation with Consistency Distillation

This page shares the official model checkpoints of the paper "ConsistencyTTA: Accelerating Diffusion-Based Text-to-Audio Generation with Consistency Distillation" from Microsoft Applied Science Group and UC Berkeley, by Yatong Bai, Trung Dang, Dung Tran, Kazuhito Koishida, and Somayeh Sojoudi.

[🤗 Live Demo] [Preprint Paper] [Project Homepage] [Code] [Model Checkpoints] [Generation Examples]

Description

2024/06 Updates:

- We now host an interactive live demo of ConsistencyTTA on 🤗 Hugging Face.

- ConsistencyTTA has been accepted to INTERSPEECH 2024! We look forward to meeting you on Kos Island.

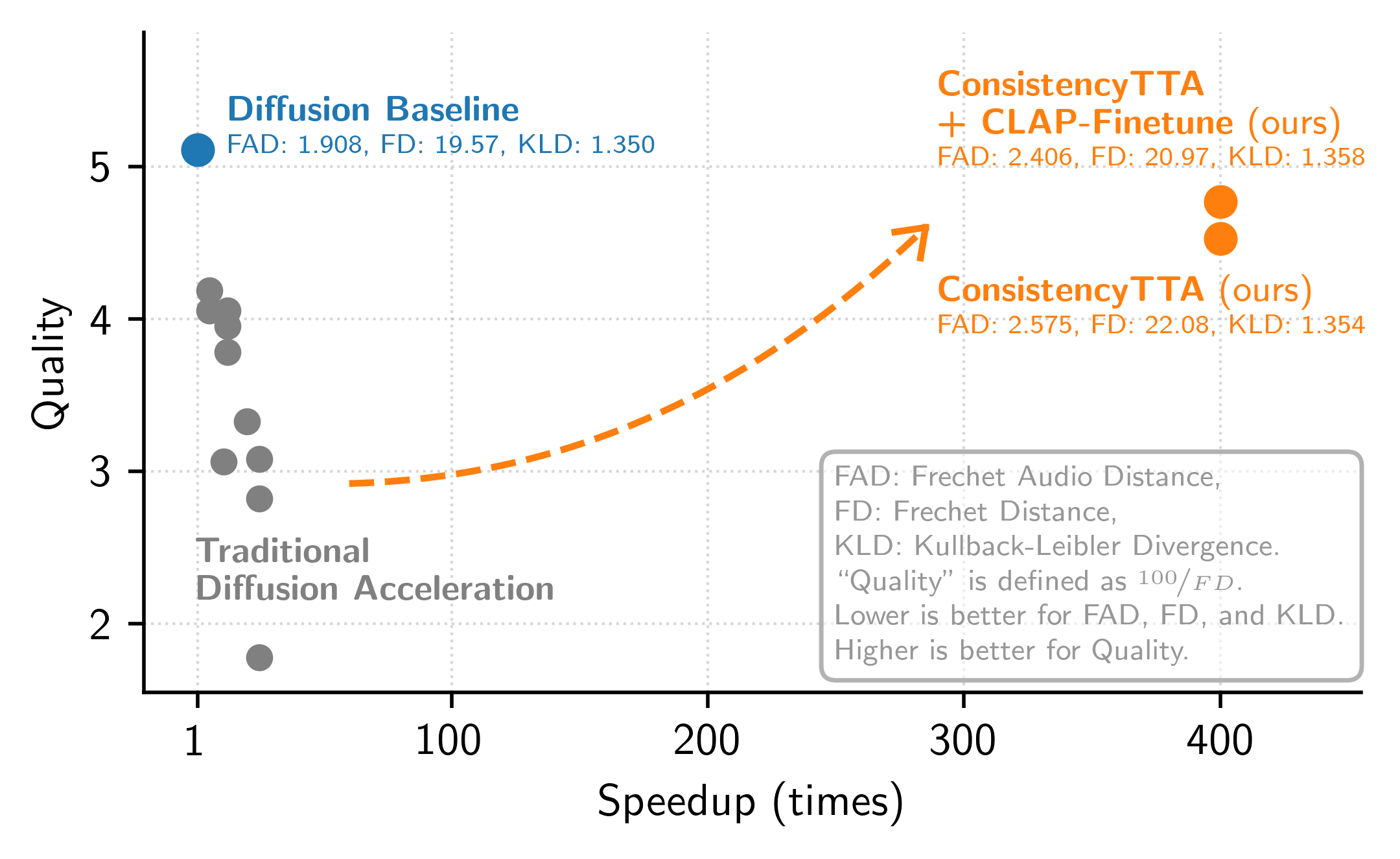

This work proposes a consistency distillation framework for training text-to-audio (TTA) generation models that require only a single neural network query, reducing the computation of the core step of diffusion-based TTA models by a factor of 400. By incorporating classifier-free guidance into the distillation framework, our models retain the impressive generation quality and diversity of diffusion models. Furthermore, the non-recurrent, differentiable structure of the consistency model allows end-to-end fine-tuning with novel loss functions such as the CLAP score, further boosting performance.
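To make the query-count argument concrete, here is a toy Python sketch. It assumes a teacher that samples with 200 denoising steps under classifier-free guidance (one conditional and one unconditional query per step), which is one way a 400x gap in network queries can arise. The step count, the guidance weight `guidance_w`, and all class and function names are hypothetical, and the network and update rule are placeholders rather than the actual ConsistencyTTA models.

```python
import numpy as np


class CountingDenoiser:
    """Toy stand-in for a text-conditioned denoising network (hypothetical).

    It only counts how many times it is queried; the arithmetic is a
    placeholder, not the teacher or ConsistencyTTA architecture.
    """

    def __init__(self):
        self.calls = 0

    def __call__(self, z, t, text_emb):
        self.calls += 1
        shift = 0.0 if text_emb is None else 0.01 * float(np.mean(text_emb))
        return 0.1 * z + shift


def teacher_sample(net, z, text_emb, num_steps=200, guidance_w=3.0):
    """Multi-step sampling with classifier-free guidance (CFG).

    CFG queries the network once with and once without the text condition
    at every step, so the network is called 2 * num_steps times.
    """
    for t in reversed(range(num_steps)):
        eps_cond = net(z, t, text_emb)
        eps_uncond = net(z, t, None)
        eps = eps_uncond + guidance_w * (eps_cond - eps_uncond)
        z = z - eps / num_steps  # placeholder update, not a real DDPM/DDIM step
    return z


def student_sample(net, z, text_emb, t_max=200):
    """Single-step generation: the distilled consistency model maps noise
    directly to a clean latent in one query (guidance was absorbed during
    distillation, so no separate unconditional query is needed)."""
    return net(z, t_max, text_emb)


# Usage: compare the number of network queries per generated sample.
rng = np.random.default_rng(0)
z = rng.standard_normal((8, 16))       # toy latent
text_emb = rng.standard_normal(32)     # toy text embedding
teacher, student = CountingDenoiser(), CountingDenoiser()
teacher_sample(teacher, z, text_emb)
student_sample(student, z, text_emb)
print(teacher.calls, student.calls)  # 400 1
```

The point the sketch isolates is that the student needs neither the iterative loop nor the second, unconditional query per step, because guidance is built into it during distillation.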

Model Details

We share three model checkpoints:

- ConsistencyTTA directly distilled from a diffusion model;

- ConsistencyTTA fine-tuned by optimizing the CLAP score;

- The diffusion teacher model from which ConsistencyTTA is distilled.
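The second checkpoint is fine-tuned to increase agreement between the text prompt and the generated audio as judged by a CLAP model. As a rough illustration only, assuming the CLAP score is the cosine similarity between a CLAP text embedding and a CLAP audio embedding (the paper's exact scaling, and its differentiable training implementation, may differ), the score and a loss derived from it can be sketched as follows. The function names are hypothetical, and the embeddings would come from a pretrained CLAP encoder that is not shown here.

```python
import numpy as np


def clap_score(text_emb, audio_emb):
    """Cosine similarity between a CLAP text embedding and an audio embedding."""
    t = np.asarray(text_emb, dtype=float)
    a = np.asarray(audio_emb, dtype=float)
    t = t / np.linalg.norm(t)  # unit-normalize both embeddings
    a = a / np.linalg.norm(a)
    return float(np.dot(t, a))


def clap_loss(text_emb, audio_emb):
    """Negative CLAP score: minimizing it pushes the generated audio's
    embedding toward the prompt's embedding."""
    return -clap_score(text_emb, audio_emb)
```

In actual fine-tuning, this quantity would have to be computed in an autodiff framework so that gradients flow back through the audio encoder into the single-step generator; that end-to-end path is what the non-recurrent structure of the consistency model makes practical.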

The first two models are capable of high-quality single-step text-to-audio generation. Each generated clip is 10 seconds long.

After downloading and unzipping the files, place them in the `saved` directory.
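The unzip-and-place step can be scripted with Python's standard library. This is a minimal sketch, assuming the checkpoints were downloaded as `.zip` archives into one local folder and that `saved` sits at the root of the cloned GitHub repository; the function name `install_checkpoints` and the folder layout are hypothetical, so check the GitHub instructions for the exact expected structure.

```python
import zipfile
from pathlib import Path


def install_checkpoints(download_dir, repo_root):
    """Extract every .zip archive in download_dir into <repo_root>/saved.

    Assumes (hypothetically) that each downloaded checkpoint is a .zip file.
    Returns the names of the archives that were extracted.
    """
    saved_dir = Path(repo_root) / "saved"
    saved_dir.mkdir(parents=True, exist_ok=True)  # create saved/ if missing
    extracted = []
    for archive in sorted(Path(download_dir).glob("*.zip")):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(saved_dir)  # keeps each archive's internal folder layout
        extracted.append(archive.name)
    return extracted
```

For example, `install_checkpoints("downloads", "ConsistencyTTA")` would unpack every archive in a local `downloads` folder into `ConsistencyTTA/saved`; both paths are placeholders.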

The training and inference code is available on our GitHub page. Please refer to it for usage details.