text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

ow to ask a question on the Hugging Face forums?

If you have a general question or are looking to debug your code, the forums are the place to ask. In this video we will teach you how to write a good question, to maximize the chances you will get an answer.

First things first, to login on the forums, you need a Hugging Face account. If you haven't created one yet, go to hf.co and click Sign Up. There is also a direct link below.

Fill your email and password, then continue the steps ot pick a username and update a profile picture.

Once this is done, go to discuss.huggingface.co (linked below) and click Log In. Use the same login information as for the Hugging Face website.

You can search the forums by clicking on the magnifying glass. Someone may have already asked your question in a topic! If you find you can't post a new topic as a new user, it may be because of the antispam filters. Make sure you spend some time reading existing topics to deactivate it.

When you are sure your question hasn't been asked yet, click on the New Topic button.

For this example, we will use the following code,

that produces an error, as we saw in the "What to do when I get an error?" video.

The first step is to pick a category for our new topic. Since our error has to do with the Transformers library, we pick this category. New, choose a title that summarizes your error well. Don't be too vague or users that get the same error you did in the future won't be able to find your topic. Once you have finished typing your topic, make sure the question hasn't been answered in the topics Discourse suggests you. Click on the cross to remove that window when you have double-checked. This is an example of what not to do when posting an error: the message is very vague so no one else will be able to guess what went wrong for you, and it tags too many people. Tagging people (especially moderators) might have the opposite effect of what you want. As you send them a notification (and they get plenty), they will probably not bother replying to you, and users you didn't tag will probably ignore the question since they see tagged users. Only tag a user when you are completely certain they are the best placed to answer your question. Be precise in your text, and if you have an error coming from a specific piece of code, include that code in your post. To make sure your post looks good, place your question between three backticks like this. You can check on the right how your post will appear once posted. If your question is about an error, it's even better to include the full traceback. As explained in the "what to do when I get an error?' video, expand the traceback if you are on Colab. like for the code, put it between two lines containing three backticks for proper formatting. Our last advice is to remember to be nice, a please and a thank you will go a long way into getting others to help you. With all that done properly, your question should get an answer pretty quickly! | huggingface/course/blob/main/subtitles/en/raw/chapter8/03_forums.md |

!---

Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

# 🤗 Datasets Notebooks

You can find here a list of the official notebooks provided by Hugging Face.

Also, we would like to list here interesting content created by the community.

If you wrote some notebook(s) leveraging 🤗 Datasets and would like it to be listed here, please open a

Pull Request so it can be included under the Community notebooks.

## Hugging Face's notebooks 🤗

### Documentation notebooks

You can open any page of the documentation as a notebook in Colab (there is a button directly on said pages) but they are also listed here if you need them:

| Notebook | Description | | |

|:----------|:-------------|:-------------|------:|

| [Quickstart](https://github.com/huggingface/notebooks/blob/main/datasets_doc/en/quickstart.ipynb) | A quick presentation on integrating Datasets into a model training workflow |[](https://colab.research.google.com/github/huggingface/notebooks/blob/main/datasets_doc/en/quickstart.ipynb)| [](https://studiolab.sagemaker.aws/import/github/huggingface/notebooks/blob/main/datasets_doc/en/quickstart.ipynb)|

| huggingface/datasets/blob/main/notebooks/README.md |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# LLaVa

## Overview

LLaVa is an open-source chatbot trained by fine-tuning LlamA/Vicuna on GPT-generated multimodal instruction-following data. It is an auto-regressive language model, based on the transformer architecture. In other words, it is an multi-modal version of LLMs fine-tuned for chat / instructions.

The LLaVa model was proposed in [Visual Instruction Tuning](https://arxiv.org/abs/2304.08485) and improved in [Improved Baselines with Visual Instruction Tuning](https://arxiv.org/pdf/2310.03744) by Haotian Liu, Chunyuan Li, Yuheng Li and Yong Jae Lee.

The abstract from the paper is the following:

*Large multimodal models (LMM) have recently shown encouraging progress with visual instruction tuning. In this note, we show that the fully-connected vision-language cross-modal connector in LLaVA is surprisingly powerful and data-efficient. With simple modifications to LLaVA, namely, using CLIP-ViT-L-336px with an MLP projection and adding academic-task-oriented VQA data with simple response formatting prompts, we establish stronger baselines that achieve state-of-the-art across 11 benchmarks. Our final 13B checkpoint uses merely 1.2M publicly available data, and finishes full training in ∼1 day on a single 8-A100 node. We hope this can make state-of-the-art LMM research more accessible. Code and model will be publicly available*

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/model_doc/llava_architecture.jpg"

alt="drawing" width="600"/>

<small> LLaVa architecture. Taken from the <a href="https://arxiv.org/abs/2304.08485">original paper.</a> </small>

This model was contributed by [ArthurZ](https://huggingface.co/ArthurZ) and [ybelkada](https://huggingface.co/ybelkada).

The original code can be found [here](https://github.com/haotian-liu/LLaVA/tree/main/llava).

## Usage tips

- We advise users to use `padding_side="left"` when computing batched generation as it leads to more accurate results. Simply make sure to call `processor.tokenizer.padding_side = "left"` before generating.

- Note the model has not been explicitly trained to process multiple images in the same prompt, although this is technically possible, you may experience inaccurate results.

- For better results, we recommend users to prompt the model with the correct prompt format:

```bash

"USER: <image>\n<prompt>ASSISTANT:"

```

For multiple turns conversation:

```bash

"USER: <image>\n<prompt1>ASSISTANT: <answer1>USER: <prompt2>ASSISTANT: <answer2>USER: <prompt3>ASSISTANT:"

```

### Using Flash Attention 2

Flash Attention 2 is an even faster, optimized version of the previous optimization, please refer to the [Flash Attention 2 section of performance docs](https://huggingface.co/docs/transformers/perf_infer_gpu_one).

## Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with BEiT.

<PipelineTag pipeline="image-to-text"/>

- A [Google Colab demo](https://colab.research.google.com/drive/1qsl6cd2c8gGtEW1xV5io7S8NHh-Cp1TV?usp=sharing) on how to run Llava on a free-tier Google colab instance leveraging 4-bit inference.

- A [similar notebook](https://github.com/NielsRogge/Transformers-Tutorials/blob/master/LLaVa/Inference_with_LLaVa_for_multimodal_generation.ipynb) showcasing batched inference. 🌎

## LlavaConfig

[[autodoc]] LlavaConfig

## LlavaProcessor

[[autodoc]] LlavaProcessor

## LlavaForConditionalGeneration

[[autodoc]] LlavaForConditionalGeneration

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/llava.md |

Create a dataset for training

There are many datasets on the [Hub](https://huggingface.co/datasets?task_categories=task_categories:text-to-image&sort=downloads) to train a model on, but if you can't find one you're interested in or want to use your own, you can create a dataset with the 🤗 [Datasets](hf.co/docs/datasets) library. The dataset structure depends on the task you want to train your model on. The most basic dataset structure is a directory of images for tasks like unconditional image generation. Another dataset structure may be a directory of images and a text file containing their corresponding text captions for tasks like text-to-image generation.

This guide will show you two ways to create a dataset to finetune on:

- provide a folder of images to the `--train_data_dir` argument

- upload a dataset to the Hub and pass the dataset repository id to the `--dataset_name` argument

<Tip>

💡 Learn more about how to create an image dataset for training in the [Create an image dataset](https://huggingface.co/docs/datasets/image_dataset) guide.

</Tip>

## Provide a dataset as a folder

For unconditional generation, you can provide your own dataset as a folder of images. The training script uses the [`ImageFolder`](https://huggingface.co/docs/datasets/en/image_dataset#imagefolder) builder from 🤗 Datasets to automatically build a dataset from the folder. Your directory structure should look like:

```bash

data_dir/xxx.png

data_dir/xxy.png

data_dir/[...]/xxz.png

```

Pass the path to the dataset directory to the `--train_data_dir` argument, and then you can start training:

```bash

accelerate launch train_unconditional.py \

--train_data_dir <path-to-train-directory> \

<other-arguments>

```

## Upload your data to the Hub

<Tip>

💡 For more details and context about creating and uploading a dataset to the Hub, take a look at the [Image search with 🤗 Datasets](https://huggingface.co/blog/image-search-datasets) post.

</Tip>

Start by creating a dataset with the [`ImageFolder`](https://huggingface.co/docs/datasets/image_load#imagefolder) feature, which creates an `image` column containing the PIL-encoded images.

You can use the `data_dir` or `data_files` parameters to specify the location of the dataset. The `data_files` parameter supports mapping specific files to dataset splits like `train` or `test`:

```python

from datasets import load_dataset

# example 1: local folder

dataset = load_dataset("imagefolder", data_dir="path_to_your_folder")

# example 2: local files (supported formats are tar, gzip, zip, xz, rar, zstd)

dataset = load_dataset("imagefolder", data_files="path_to_zip_file")

# example 3: remote files (supported formats are tar, gzip, zip, xz, rar, zstd)

dataset = load_dataset(

"imagefolder",

data_files="https://download.microsoft.com/download/3/E/1/3E1C3F21-ECDB-4869-8368-6DEBA77B919F/kagglecatsanddogs_3367a.zip",

)

# example 4: providing several splits

dataset = load_dataset(

"imagefolder", data_files={"train": ["path/to/file1", "path/to/file2"], "test": ["path/to/file3", "path/to/file4"]}

)

```

Then use the [`~datasets.Dataset.push_to_hub`] method to upload the dataset to the Hub:

```python

# assuming you have ran the huggingface-cli login command in a terminal

dataset.push_to_hub("name_of_your_dataset")

# if you want to push to a private repo, simply pass private=True:

dataset.push_to_hub("name_of_your_dataset", private=True)

```

Now the dataset is available for training by passing the dataset name to the `--dataset_name` argument:

```bash

accelerate launch --mixed_precision="fp16" train_text_to_image.py \

--pretrained_model_name_or_path="runwayml/stable-diffusion-v1-5" \

--dataset_name="name_of_your_dataset" \

<other-arguments>

```

## Next steps

Now that you've created a dataset, you can plug it into the `train_data_dir` (if your dataset is local) or `dataset_name` (if your dataset is on the Hub) arguments of a training script.

For your next steps, feel free to try and use your dataset to train a model for [unconditional generation](unconditional_training) or [text-to-image generation](text2image)! | huggingface/diffusers/blob/main/docs/source/en/training/create_dataset.md |

Gradio Demo: neon-tts-plugin-coqui

### This demo converts text to speech in 14 languages.

```

!pip install -q gradio neon-tts-plugin-coqui==0.4.1a9

```

```

# Downloading files from the demo repo

import os

!wget -q https://github.com/gradio-app/gradio/raw/main/demo/neon-tts-plugin-coqui/packages.txt

```

```

import tempfile

import gradio as gr

from neon_tts_plugin_coqui import CoquiTTS

LANGUAGES = list(CoquiTTS.langs.keys())

coquiTTS = CoquiTTS()

def tts(text: str, language: str):

with tempfile.NamedTemporaryFile(suffix=".wav", delete=False) as fp:

coquiTTS.get_tts(text, fp, speaker = {"language" : language})

return fp.name

inputs = [gr.Textbox(label="Input", value=CoquiTTS.langs["en"]["sentence"], max_lines=3),

gr.Radio(label="Language", choices=LANGUAGES, value="en")]

outputs = gr.Audio(label="Output")

demo = gr.Interface(fn=tts, inputs=inputs, outputs=outputs)

demo.launch()

```

| gradio-app/gradio/blob/main/demo/neon-tts-plugin-coqui/run.ipynb |

@gradio/tooltip

## 0.1.0

## 0.1.0-beta.2

### Features

- [#6136](https://github.com/gradio-app/gradio/pull/6136) [`667802a6c`](https://github.com/gradio-app/gradio/commit/667802a6cdbfb2ce454a3be5a78e0990b194548a) - JS Component Documentation. Thanks [@freddyaboulton](https://github.com/freddyaboulton)!

## 0.1.0-beta.1

### Fixes

- [#6046](https://github.com/gradio-app/gradio/pull/6046) [`dbb7de5e0`](https://github.com/gradio-app/gradio/commit/dbb7de5e02c53fee05889d696d764d212cb96c74) - fix tests. Thanks [@pngwn](https://github.com/pngwn)!

## 0.1.0-beta.0

### Features

- [#5498](https://github.com/gradio-app/gradio/pull/5498) [`681f10c31`](https://github.com/gradio-app/gradio/commit/681f10c315a75cc8cd0473c9a0167961af7696db) - release first version. Thanks [@pngwn](https://github.com/pngwn)!

| gradio-app/gradio/blob/main/js/tooltip/CHANGELOG.md |

``python

import argparse

import os

import torch

from torch.optim import AdamW

from torch.utils.data import DataLoader

from peft import (

get_peft_config,

get_peft_model,

get_peft_model_state_dict,

set_peft_model_state_dict,

LoraConfig,

PeftType,

PrefixTuningConfig,

PromptEncoderConfig,

)

import evaluate

from datasets import load_dataset

from transformers import AutoModelForSequenceClassification, AutoTokenizer, get_linear_schedule_with_warmup, set_seed

from tqdm import tqdm

```

```python

batch_size = 32

model_name_or_path = "roberta-large"

task = "mrpc"

peft_type = PeftType.LORA

device = "cuda"

num_epochs = 20

```

```python

peft_config = LoraConfig(task_type="SEQ_CLS", inference_mode=False, r=8, lora_alpha=16, lora_dropout=0.1)

lr = 3e-4

```

```python

if any(k in model_name_or_path for k in ("gpt", "opt", "bloom")):

padding_side = "left"

else:

padding_side = "right"

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, padding_side=padding_side)

if getattr(tokenizer, "pad_token_id") is None:

tokenizer.pad_token_id = tokenizer.eos_token_id

datasets = load_dataset("glue", task)

metric = evaluate.load("glue", task)

def tokenize_function(examples):

# max_length=None => use the model max length (it's actually the default)

outputs = tokenizer(examples["sentence1"], examples["sentence2"], truncation=True, max_length=None)

return outputs

tokenized_datasets = datasets.map(

tokenize_function,

batched=True,

remove_columns=["idx", "sentence1", "sentence2"],

)

# We also rename the 'label' column to 'labels' which is the expected name for labels by the models of the

# transformers library

tokenized_datasets = tokenized_datasets.rename_column("label", "labels")

def collate_fn(examples):

return tokenizer.pad(examples, padding="longest", return_tensors="pt")

# Instantiate dataloaders.

train_dataloader = DataLoader(tokenized_datasets["train"], shuffle=True, collate_fn=collate_fn, batch_size=batch_size)

eval_dataloader = DataLoader(

tokenized_datasets["validation"], shuffle=False, collate_fn=collate_fn, batch_size=batch_size

)

```

```python

model = AutoModelForSequenceClassification.from_pretrained(model_name_or_path, return_dict=True)

model = get_peft_model(model, peft_config)

model.print_trainable_parameters()

model

```

```python

optimizer = AdamW(params=model.parameters(), lr=lr)

# Instantiate scheduler

lr_scheduler = get_linear_schedule_with_warmup(

optimizer=optimizer,

num_warmup_steps=0.06 * (len(train_dataloader) * num_epochs),

num_training_steps=(len(train_dataloader) * num_epochs),

)

```

```python

model.to(device)

for epoch in range(num_epochs):

model.train()

for step, batch in enumerate(tqdm(train_dataloader)):

batch.to(device)

outputs = model(**batch)

loss = outputs.loss

loss.backward()

optimizer.step()

lr_scheduler.step()

optimizer.zero_grad()

model.eval()

for step, batch in enumerate(tqdm(eval_dataloader)):

batch.to(device)

with torch.no_grad():

outputs = model(**batch)

predictions = outputs.logits.argmax(dim=-1)

predictions, references = predictions, batch["labels"]

metric.add_batch(

predictions=predictions,

references=references,

)

eval_metric = metric.compute()

print(f"epoch {epoch}:", eval_metric)

```

## Share adapters on the 🤗 Hub

```python

model.push_to_hub("smangrul/roberta-large-peft-lora", use_auth_token=True)

```

## Load adapters from the Hub

You can also directly load adapters from the Hub using the commands below:

```python

import torch

from peft import PeftModel, PeftConfig

from transformers import AutoModelForCausalLM, AutoTokenizer

peft_model_id = "smangrul/roberta-large-peft-lora"

config = PeftConfig.from_pretrained(peft_model_id)

inference_model = AutoModelForSequenceClassification.from_pretrained(config.base_model_name_or_path)

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

# Load the Lora model

inference_model = PeftModel.from_pretrained(inference_model, peft_model_id)

inference_model.to(device)

inference_model.eval()

for step, batch in enumerate(tqdm(eval_dataloader)):

batch.to(device)

with torch.no_grad():

outputs = inference_model(**batch)

predictions = outputs.logits.argmax(dim=-1)

predictions, references = predictions, batch["labels"]

metric.add_batch(

predictions=predictions,

references=references,

)

eval_metric = metric.compute()

print(eval_metric)

```

| huggingface/peft/blob/main/examples/sequence_classification/LoRA.ipynb |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# InstructBLIP

## Overview

The InstructBLIP model was proposed in [InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning](https://arxiv.org/abs/2305.06500) by Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, Steven Hoi.

InstructBLIP leverages the [BLIP-2](blip2) architecture for visual instruction tuning.

The abstract from the paper is the following:

*General-purpose language models that can solve various language-domain tasks have emerged driven by the pre-training and instruction-tuning pipeline. However, building general-purpose vision-language models is challenging due to the increased task discrepancy introduced by the additional visual input. Although vision-language pre-training has been widely studied, vision-language instruction tuning remains relatively less explored. In this paper, we conduct a systematic and comprehensive study on vision-language instruction tuning based on the pre-trained BLIP-2 models. We gather a wide variety of 26 publicly available datasets, transform them into instruction tuning format and categorize them into two clusters for held-in instruction tuning and held-out zero-shot evaluation. Additionally, we introduce instruction-aware visual feature extraction, a crucial method that enables the model to extract informative features tailored to the given instruction. The resulting InstructBLIP models achieve state-of-the-art zero-shot performance across all 13 held-out datasets, substantially outperforming BLIP-2 and the larger Flamingo. Our models also lead to state-of-the-art performance when finetuned on individual downstream tasks (e.g., 90.7% accuracy on ScienceQA IMG). Furthermore, we qualitatively demonstrate the advantages of InstructBLIP over concurrent multimodal models.*

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/model_doc/instructblip_architecture.jpg"

alt="drawing" width="600"/>

<small> InstructBLIP architecture. Taken from the <a href="https://arxiv.org/abs/2305.06500">original paper.</a> </small>

This model was contributed by [nielsr](https://huggingface.co/nielsr).

The original code can be found [here](https://github.com/salesforce/LAVIS/tree/main/projects/instructblip).

## Usage tips

InstructBLIP uses the same architecture as [BLIP-2](blip2) with a tiny but important difference: it also feeds the text prompt (instruction) to the Q-Former.

## InstructBlipConfig

[[autodoc]] InstructBlipConfig

- from_vision_qformer_text_configs

## InstructBlipVisionConfig

[[autodoc]] InstructBlipVisionConfig

## InstructBlipQFormerConfig

[[autodoc]] InstructBlipQFormerConfig

## InstructBlipProcessor

[[autodoc]] InstructBlipProcessor

## InstructBlipVisionModel

[[autodoc]] InstructBlipVisionModel

- forward

## InstructBlipQFormerModel

[[autodoc]] InstructBlipQFormerModel

- forward

## InstructBlipForConditionalGeneration

[[autodoc]] InstructBlipForConditionalGeneration

- forward

- generate | huggingface/transformers/blob/main/docs/source/en/model_doc/instructblip.md |

!---

Copyright 2020 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

# Generating the documentation

To generate the documentation, you first have to build it. Several packages are necessary to build the doc,

you can install them with the following command, at the root of the code repository:

```bash

pip install -e ".[docs]"

```

Then you need to install our special tool that builds the documentation:

```bash

pip install git+https://github.com/huggingface/doc-builder

```

---

**NOTE**

You only need to generate the documentation to inspect it locally (if you're planning changes and want to

check how they look before committing for instance). You don't have to `git commit` the built documentation.

---

## Building the documentation

Once you have setup the `doc-builder` and additional packages, you can generate the documentation by typing

the following command:

```bash

doc-builder build datasets docs/source/ --build_dir ~/tmp/test-build

```

You can adapt the `--build_dir` to set any temporary folder that you prefer. This command will create it and generate

the MDX files that will be rendered as the documentation on the main website. You can inspect them in your favorite

Markdown editor.

## Previewing the documentation

To preview the docs, first install the `watchdog` module with:

```bash

pip install watchdog

```

Then run the following command:

```bash

doc-builder preview datasets docs/source/

```

The docs will be viewable at [http://localhost:3000](http://localhost:3000). You can also preview the docs once you have opened a PR. You will see a bot add a comment to a link where the documentation with your changes lives.

---

**NOTE**

The `preview` command only works with existing doc files. When you add a completely new file, you need to update `_toctree.yml` & restart `preview` command (`ctrl-c` to stop it & call `doc-builder preview ...` again).

## Adding a new element to the navigation bar

Accepted files are Markdown (.md or .mdx).

Create a file with its extension and put it in the source directory. You can then link it to the toc-tree by putting

the filename without the extension in the [`_toctree.yml`](https://github.com/huggingface/datasets/blob/main/docs/source/_toctree.yml) file.

## Renaming section headers and moving sections

It helps to keep the old links working when renaming the section header and/or moving sections from one document to another. This is because the old links are likely to be used in Issues, Forums and Social media and it'd make for a much more superior user experience if users reading those months later could still easily navigate to the originally intended information.

Therefore we simply keep a little map of moved sections at the end of the document where the original section was. The key is to preserve the original anchor.

So if you renamed a section from: "Section A" to "Section B", then you can add at the end of the file:

```

Sections that were moved:

[ <a href="#section-b">Section A</a><a id="section-a"></a> ]

```

and of course if you moved it to another file, then:

```

Sections that were moved:

[ <a href="../new-file#section-b">Section A</a><a id="section-a"></a> ]

```

Use the relative style to link to the new file so that the versioned docs continue to work.

For an example of a rich moved sections set please see the very end of [the transformers Trainer doc](https://github.com/huggingface/transformers/blob/main/docs/source/en/main_classes/trainer.md).

## Writing Documentation - Specification

The `huggingface/datasets` documentation follows the

[Google documentation](https://sphinxcontrib-napoleon.readthedocs.io/en/latest/example_google.html) style for docstrings,

although we can write them directly in Markdown.

### Adding a new tutorial

Adding a new tutorial or section is done in two steps:

- Add a new file under `./source`. This file can either be ReStructuredText (.rst) or Markdown (.md).

- Link that file in `./source/_toctree.yml` on the correct toc-tree.

Make sure to put your new file under the proper section. If you have a doubt, feel free to ask in a Github Issue or PR.

### Writing source documentation

Values that should be put in `code` should either be surrounded by backticks: \`like so\`. Note that argument names

and objects like True, None or any strings should usually be put in `code`.

When mentioning a class, function or method, it is recommended to use our syntax for internal links so that our tool

adds a link to its documentation with this syntax: \[\`XXXClass\`\] or \[\`function\`\]. This requires the class or

function to be in the main package.

If you want to create a link to some internal class or function, you need to

provide its path. For instance: \[\`table.InMemoryTable\`\]. This will be converted into a link with

`table.InMemoryTable` in the description. To get rid of the path and only keep the name of the object you are

linking to in the description, add a ~: \[\`~table.InMemoryTable\`\] will generate a link with `InMemoryTable` in the description.

The same works for methods so you can either use \[\`XXXClass.method\`\] or \[~\`XXXClass.method\`\].

#### Defining arguments in a method

Arguments should be defined with the `Args:` (or `Arguments:` or `Parameters:`) prefix, followed by a line return and

an indentation. The argument should be followed by its type, with its shape if it is a tensor, a colon and its

description:

```

Args:

n_layers (`int`): The number of layers of the model.

```

If the description is too long to fit in one line, another indentation is necessary before writing the description

after the argument.

Here's an example showcasing everything so far:

```

Args:

input_ids (`torch.LongTensor` of shape `(batch_size, sequence_length)`):

Indices of input sequence tokens in the vocabulary.

Indices can be obtained using [`AlbertTokenizer`]. See [`~PreTrainedTokenizer.encode`] and

[`~PreTrainedTokenizer.__call__`] for details.

[What are input IDs?](../glossary#input-ids)

```

For optional arguments or arguments with defaults we follow the following syntax: imagine we have a function with the

following signature:

```

def my_function(x: str = None, a: float = 1):

```

then its documentation should look like this:

```

Args:

x (`str`, *optional*):

This argument controls ...

a (`float`, *optional*, defaults to 1):

This argument is used to ...

```

Note that we always omit the "defaults to \`None\`" when None is the default for any argument. Also note that even

if the first line describing your argument type and its default gets long, you can't break it into several lines. You can

however write as many lines as you want in the indented description (see the example above with `input_ids`).

#### Writing a multi-line code block

Multi-line code blocks can be useful for displaying examples. They are done between two lines of three backticks as usual in Markdown:

````

```

# first line of code

# second line

# etc

```

````

#### Writing a return block

The return block should be introduced with the `Returns:` prefix, followed by a line return and an indentation.

The first line should be the type of the return, followed by a line return. No need to indent further for the elements

building the return.

Here's an example of a single value return:

```

Returns:

`List[int]`: A list of integers in the range [0, 1] --- 1 for a special token, 0 for a sequence token.

```

Here's an example of tuple return, comprising several objects:

```

Returns:

`tuple(torch.FloatTensor)` comprising various elements depending on the configuration ([`BertConfig`]) and inputs:

- ** loss** (*optional*, returned when `masked_lm_labels` is provided) `torch.FloatTensor` of shape `(1,)` --

Total loss as the sum of the masked language modeling loss and the next sequence prediction (classification) loss.

- **prediction_scores** (`torch.FloatTensor` of shape `(batch_size, sequence_length, config.vocab_size)`) --

Prediction scores of the language modeling head (scores for each vocabulary token before SoftMax).

```

#### Adding an image

Due to the rapidly growing repository, it is important to make sure that no files that would significantly weigh down the repository are added. This includes images, videos and other non-text files. We prefer to leverage a hf.co hosted `dataset` like

the ones hosted on [`hf-internal-testing`](https://huggingface.co/hf-internal-testing) in which to place these files and reference

them by URL. We recommend putting them in the following dataset: [huggingface/documentation-images](https://huggingface.co/datasets/huggingface/documentation-images).

If an external contribution, feel free to add the images to your PR and ask a Hugging Face member to migrate your images

to this dataset.

## Writing documentation examples

The syntax for Example docstrings can look as follows:

```

Example:

```py

>>> from datasets import load_dataset

>>> ds = load_dataset("rotten_tomatoes", split="validation")

>>> def add_prefix(example):

... example["text"] = "Review: " + example["text"]

... return example

>>> ds = ds.map(add_prefix)

>>> ds[0:3]["text"]

['Review: compassionately explores the seemingly irreconcilable situation between conservative christian parents and their estranged gay and lesbian children .',

'Review: the soundtrack alone is worth the price of admission .',

'Review: rodriguez does a splendid job of racial profiling hollywood style--casting excellent latin actors of all ages--a trend long overdue .']

# process a batch of examples

>>> ds = ds.map(lambda example: tokenizer(example["text"]), batched=True)

# set number of processors

>>> ds = ds.map(add_prefix, num_proc=4)

```

```

The docstring should give a minimal, clear example of how the respective class or function is to be used in practice and also include the expected (ideally sensible) output.

Often, readers will try out the example before even going through the function

or class definitions. Therefore, it is of utmost importance that the example

works as expected.

| huggingface/datasets/blob/main/docs/README.md |

--

title: NIST_MT

emoji: 🤗

colorFrom: purple

colorTo: red

sdk: gradio

sdk_version: 3.19.1

app_file: app.py

pinned: false

tags:

- evaluate

- metric

- machine-translation

description:

DARPA commissioned NIST to develop an MT evaluation facility based on the BLEU score.

---

# Metric Card for NIST's MT metric

## Metric Description

DARPA commissioned NIST to develop an MT evaluation facility based on the BLEU

score. The official script used by NIST to compute BLEU and NIST score is

mteval-14.pl. The main differences are:

- BLEU uses geometric mean of the ngram overlaps, NIST uses arithmetic mean.

- NIST has a different brevity penalty

- NIST score from mteval-14.pl has a self-contained tokenizer (in the Hugging Face implementation we rely on NLTK's

implementation of the NIST-specific tokenizer)

## Intended Uses

NIST was developed for machine translation evaluation.

## How to Use

```python

import evaluate

nist_mt = evaluate.load("nist_mt")

hypothesis1 = "It is a guide to action which ensures that the military always obeys the commands of the party"

reference1 = "It is a guide to action that ensures that the military will forever heed Party commands"

reference2 = "It is the guiding principle which guarantees the military forces always being under the command of the Party"

nist_mt.compute(hypothesis1, [reference1, reference2])

# {'nist_mt': 3.3709935957649324}

```

### Inputs

- **predictions**: tokenized predictions to score. For sentence-level NIST, a list of tokens (str);

for corpus-level NIST, a list (sentences) of lists of tokens (str)

- **references**: potentially multiple tokenized references for each prediction. For sentence-level NIST, a

list (multiple potential references) of list of tokens (str); for corpus-level NIST, a list (corpus) of lists

(multiple potential references) of lists of tokens (str)

- **n**: highest n-gram order

- **tokenize_kwargs**: arguments passed to the tokenizer (see: https://github.com/nltk/nltk/blob/90fa546ea600194f2799ee51eaf1b729c128711e/nltk/tokenize/nist.py#L139)

### Output Values

- **nist_mt** (`float`): NIST score

Output Example:

```python

{'nist_mt': 3.3709935957649324}

```

## Citation

```bibtex

@inproceedings{10.5555/1289189.1289273,

author = {Doddington, George},

title = {Automatic Evaluation of Machine Translation Quality Using N-Gram Co-Occurrence Statistics},

year = {2002},

publisher = {Morgan Kaufmann Publishers Inc.},

address = {San Francisco, CA, USA},

booktitle = {Proceedings of the Second International Conference on Human Language Technology Research},

pages = {138–145},

numpages = {8},

location = {San Diego, California},

series = {HLT '02}

}

```

## Further References

This Hugging Face implementation uses [the NLTK implementation](https://github.com/nltk/nltk/blob/develop/nltk/translate/nist_score.py)

| huggingface/evaluate/blob/main/metrics/nist_mt/README.md |

SSL ResNet

**Residual Networks**, or **ResNets**, learn residual functions with reference to the layer inputs, instead of learning unreferenced functions. Instead of hoping each few stacked layers directly fit a desired underlying mapping, residual nets let these layers fit a residual mapping. They stack [residual blocks](https://paperswithcode.com/method/residual-block) ontop of each other to form network: e.g. a ResNet-50 has fifty layers using these blocks.

The model in this collection utilises semi-supervised learning to improve the performance of the model. The approach brings important gains to standard architectures for image, video and fine-grained classification.

Please note the CC-BY-NC 4.0 license on theses weights, non-commercial use only.

## How do I use this model on an image?

To load a pretrained model:

```py

>>> import timm

>>> model = timm.create_model('ssl_resnet18', pretrained=True)

>>> model.eval()

```

To load and preprocess the image:

```py

>>> import urllib

>>> from PIL import Image

>>> from timm.data import resolve_data_config

>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)

>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

>>> urllib.request.urlretrieve(url, filename)

>>> img = Image.open(filename).convert('RGB')

>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```py

>>> import torch

>>> with torch.no_grad():

... out = model(tensor)

>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)

>>> print(probabilities.shape)

>>> # prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```py

>>> # Get imagenet class mappings

>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

>>> urllib.request.urlretrieve(url, filename)

>>> with open("imagenet_classes.txt", "r") as f:

... categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image

>>> top5_prob, top5_catid = torch.topk(probabilities, 5)

>>> for i in range(top5_prob.size(0)):

... print(categories[top5_catid[i]], top5_prob[i].item())

>>> # prints class names and probabilities like:

>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `ssl_resnet18`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('ssl_resnet18', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training

script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX

@article{DBLP:journals/corr/abs-1905-00546,

author = {I. Zeki Yalniz and

Herv{\'{e}} J{\'{e}}gou and

Kan Chen and

Manohar Paluri and

Dhruv Mahajan},

title = {Billion-scale semi-supervised learning for image classification},

journal = {CoRR},

volume = {abs/1905.00546},

year = {2019},

url = {http://arxiv.org/abs/1905.00546},

archivePrefix = {arXiv},

eprint = {1905.00546},

timestamp = {Mon, 28 Sep 2020 08:19:37 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-1905-00546.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

<!--

Type: model-index

Collections:

- Name: SSL ResNet

Paper:

Title: Billion-scale semi-supervised learning for image classification

URL: https://paperswithcode.com/paper/billion-scale-semi-supervised-learning-for

Models:

- Name: ssl_resnet18

In Collection: SSL ResNet

Metadata:

FLOPs: 2337073152

Parameters: 11690000

File Size: 46811375

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

- YFCC-100M

Training Resources: 64x GPUs

ID: ssl_resnet18

LR: 0.0015

Epochs: 30

Layers: 18

Crop Pct: '0.875'

Batch Size: 1536

Image Size: '224'

Weight Decay: 0.0001

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/resnet.py#L894

Weights: https://dl.fbaipublicfiles.com/semiweaksupervision/model_files/semi_supervised_resnet18-d92f0530.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 72.62%

Top 5 Accuracy: 91.42%

- Name: ssl_resnet50

In Collection: SSL ResNet

Metadata:

FLOPs: 5282531328

Parameters: 25560000

File Size: 102480594

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

- YFCC-100M

Training Resources: 64x GPUs

ID: ssl_resnet50

LR: 0.0015

Epochs: 30

Layers: 50

Crop Pct: '0.875'

Batch Size: 1536

Image Size: '224'

Weight Decay: 0.0001

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/resnet.py#L904

Weights: https://dl.fbaipublicfiles.com/semiweaksupervision/model_files/semi_supervised_resnet50-08389792.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.24%

Top 5 Accuracy: 94.83%

--> | huggingface/pytorch-image-models/blob/main/hfdocs/source/models/ssl-resnet.mdx |

--

title: What's new in Diffusers? 🎨

thumbnail: /blog/assets/102_diffusers_2nd_month/inpainting.png

authors:

- user: osanseviero

---

# What's new in Diffusers? 🎨

A month and a half ago we released `diffusers`, a library that provides a modular toolbox for diffusion models across modalities. A couple of weeks later, we released support for Stable Diffusion, a high quality text-to-image model, with a free demo for anyone to try out. Apart from burning lots of GPUs, in the last three weeks the team has decided to add one or two new features to the library that we hope the community enjoys! This blog post gives a high-level overview of the new features in `diffusers` version 0.3! Remember to give a ⭐ to the [GitHub repository](https://github.com/huggingface/diffusers).

- [Image to Image pipelines](#image-to-image-pipeline)

- [Textual Inversion](#textual-inversion)

- [Inpainting](#experimental-inpainting-pipeline)

- [Optimizations for Smaller GPUs](#optimizations-for-smaller-gpus)

- [Run on Mac](#diffusers-in-mac-os)

- [ONNX Exporter](#experimental-onnx-exporter-and-pipeline)

- [New docs](#new-docs)

- [Community](#community)

- [Generate videos with SD latent space](#stable-diffusion-videos)

- [Model Explainability](#diffusers-interpret)

- [Japanese Stable Diffusion](#japanese-stable-diffusion)

- [High quality fine-tuned model](#waifu-diffusion)

- [Cross Attention Control with Stable Diffusion](#cross-attention-control)

- [Reusable seeds](#reusable-seeds)

## Image to Image pipeline

One of the most requested features was to have image to image generation. This pipeline allows you to input an image and a prompt, and it will generate an image based on that!

Let's see some code based on the official Colab [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/image_2_image_using_diffusers.ipynb).

```python

from diffusers import StableDiffusionImg2ImgPipeline

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

use_auth_token=True

)

# Download an initial image

# ...

init_image = preprocess(init_img)

prompt = "A fantasy landscape, trending on artstation"

images = pipe(prompt=prompt, init_image=init_image, strength=0.75, guidance_scale=7.5, generator=generator)["sample"]

```

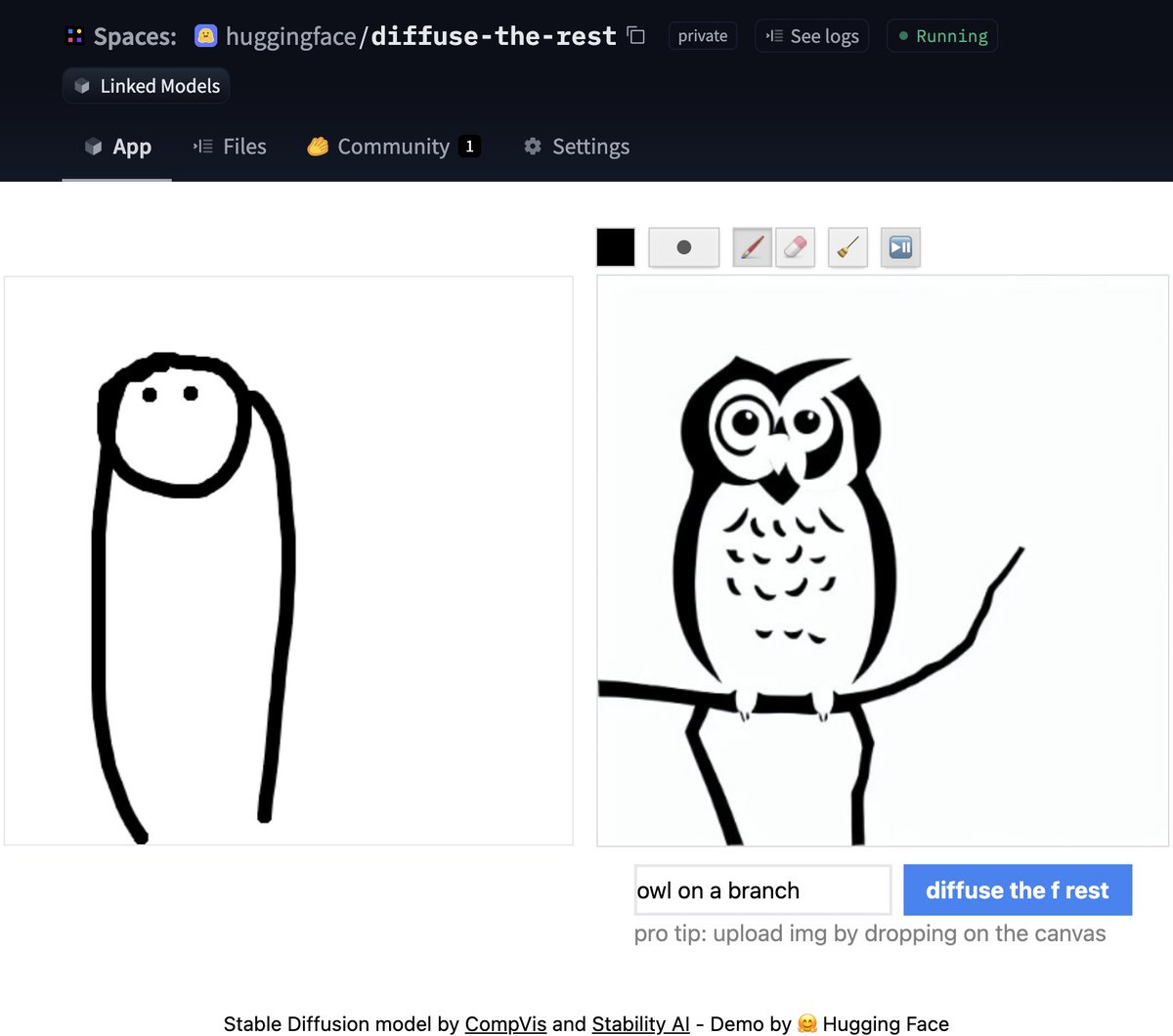

Don't have time for code? No worries, we also created a [Space demo](https://huggingface.co/spaces/huggingface/diffuse-the-rest) where you can try it out directly

## Textual Inversion

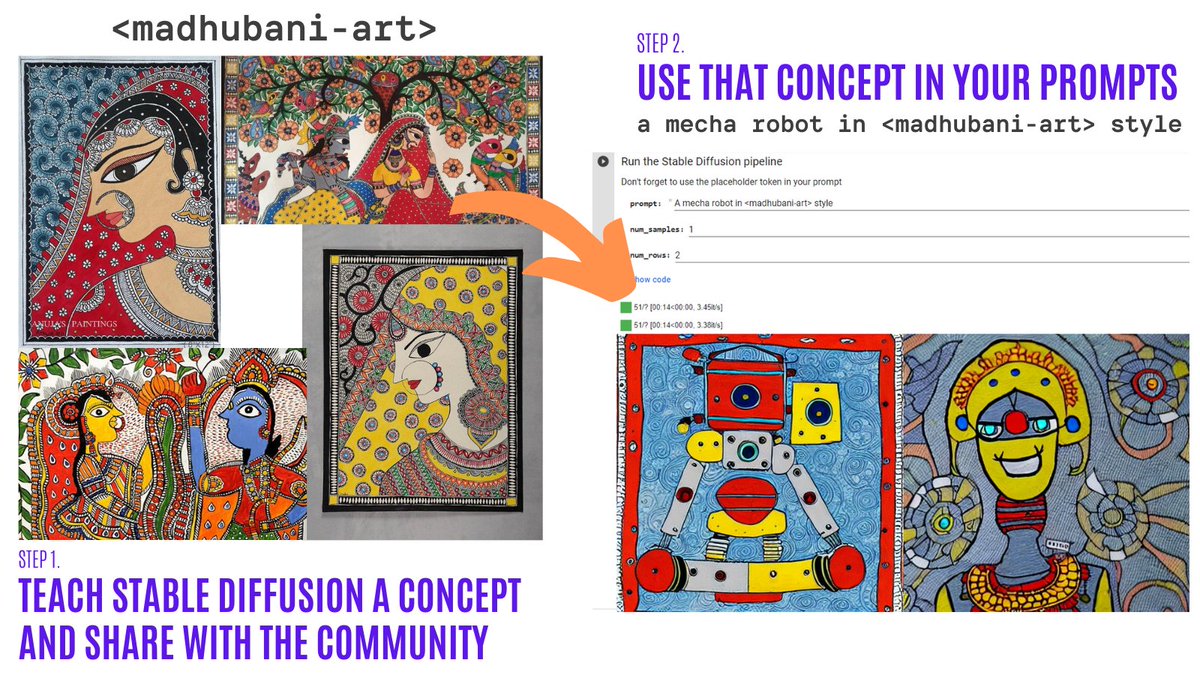

Textual Inversion lets you personalize a Stable Diffusion model on your own images with just 3-5 samples. With this tool, you can train a model on a concept, and then share the concept with the rest of the community!

In just a couple of days, the community shared over 200 concepts! Check them out!

* [Organization](https://huggingface.co/sd-concepts-library) with the concepts.

* [Navigator Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_diffusion_textual_inversion_library_navigator.ipynb): Browse visually and use over 150 concepts created by the community.

* [Training Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb): Teach Stable Diffusion a new concept and share it with the rest of the community.

* [Inference Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb): Run Stable Diffusion with the learned concepts.

## Experimental inpainting pipeline

Inpainting allows to provide an image, then select an area in the image (or provide a mask), and use Stable Diffusion to replace the mask. Here is an example:

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/diffusers-2nd-month/inpainting.png" alt="Example inpaint of owl being generated from an initial image and a prompt"/>

</figure>

You can try out a minimal Colab [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/in_painting_with_stable_diffusion_using_diffusers.ipynb) or check out the code below. A demo is coming soon!

```python

from diffusers import StableDiffusionInpaintPipeline

pipe = StableDiffusionInpaintPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

use_auth_token=True

).to(device)

images = pipe(

prompt=["a cat sitting on a bench"] * 3,

init_image=init_image,

mask_image=mask_image,

strength=0.75,

guidance_scale=7.5,

generator=None

).images

```

Please note this is experimental, so there is room for improvement.

## Optimizations for smaller GPUs

After some improvements, the diffusion models can take much less VRAM. 🔥 For example, Stable Diffusion only takes 3.2GB! This yields the exact same results at the expense of 10% of speed. Here is how to use these optimizations

```python

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

use_auth_token=True

)

pipe = pipe.to("cuda")

pipe.enable_attention_slicing()

```

This is super exciting as this will reduce even more the barrier to use these models!

## Diffusers in Mac OS

🍎 That's right! Another widely requested feature was just released! Read the full instructions in the [official docs](https://huggingface.co/docs/diffusers/optimization/mps) (including performance comparisons, specs, and more).

Using the PyTorch mps device, people with M1/M2 hardware can run inference with Stable Diffusion. 🤯 This requires minimal setup for users, try it out!

```python

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", use_auth_token=True)

pipe = pipe.to("mps")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

```

## Experimental ONNX exporter and pipeline

The new experimental pipeline allows users to run Stable Diffusion on any hardware that supports ONNX. Here is an example of how to use it (note that the `onnx` revision is being used)

```python

from diffusers import StableDiffusionOnnxPipeline

pipe = StableDiffusionOnnxPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="onnx",

provider="CPUExecutionProvider",

use_auth_token=True,

)

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

```

Alternatively, you can also convert your SD checkpoints to ONNX directly with the exporter script.

```

python scripts/convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

```

## New docs

All of the previous features are very cool. As maintainers of open-source libraries, we know about the importance of high quality documentation to make it as easy as possible for anyone to try out the library.

💅 Because of this, we did a Docs sprint and we're very excited to do a first release of our [documentation](https://huggingface.co/docs/diffusers/v0.3.0/en/index). This is a first version, so there are many things we plan to add (and contributions are always welcome!).

Some highlights of the docs:

* Techniques for [optimization](https://huggingface.co/docs/diffusers/optimization/fp16)

* The [training overview](https://huggingface.co/docs/diffusers/training/overview)

* A [contributing guide](https://huggingface.co/docs/diffusers/conceptual/contribution)

* In-depth API docs for [schedulers](https://huggingface.co/docs/diffusers/api/schedulers)

* In-depth API docs for [pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview)

## Community

And while we were doing all of the above, the community did not stay idle! Here are some highlights (although not exhaustive) of what has been done out there

### Stable Diffusion Videos

Create 🔥 videos with Stable Diffusion by exploring the latent space and morphing between text prompts. You can:

* Dream different versions of the same prompt

* Morph between different prompts

The [Stable Diffusion Videos](https://github.com/nateraw/stable-diffusion-videos) tool is pip-installable, comes with a Colab notebook and a Gradio notebook, and is super easy to use!

Here is an example

```python

from stable_diffusion_videos import walk

video_path = walk(['a cat', 'a dog'], [42, 1337], num_steps=3, make_video=True)

```

### Diffusers Interpret

[Diffusers interpret](https://github.com/JoaoLages/diffusers-interpret) is an explainability tool built on top of `diffusers`. It has cool features such as:

* See all the images in the diffusion process

* Analyze how each token in the prompt influences the generation

* Analyze within specified bounding boxes if you want to understand a part of the image

(Image from the tool repository)

```python

# pass pipeline to the explainer class

explainer = StableDiffusionPipelineExplainer(pipe)

# generate an image with `explainer`

prompt = "Corgi with the Eiffel Tower"

output = explainer(

prompt,

num_inference_steps=15

)

output.normalized_token_attributions # (token, attribution_percentage)

#[('corgi', 40),

# ('with', 5),

# ('the', 5),

# ('eiffel', 25),

# ('tower', 25)]

```

### Japanese Stable Diffusion

The name says it all! The goal of JSD was to train a model that also captures information about the culture, identity and unique expressions. It was trained with 100 million images with Japanese captions. You can read more about how the model was trained in the [model card](https://huggingface.co/rinna/japanese-stable-diffusion)

### Waifu Diffusion

[Waifu Diffusion](https://huggingface.co/hakurei/waifu-diffusion) is a fine-tuned SD model for high-quality anime images generation.

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/diffusers-2nd-month/waifu.png" alt="Images of high quality anime"/>

</figure>

(Image from the tool repository)

### Cross Attention Control

[Cross Attention Control](https://github.com/bloc97/CrossAttentionControl) allows fine control of the prompts by modifying the attention maps of the diffusion models. Some cool things you can do:

* Replace a target in the prompt (e.g. replace cat by dog)

* Reduce or increase the importance of words in the prompt (e.g. if you want less attention to be given to "rocks")

* Easily inject styles

And much more! Check out the repo.

### Reusable Seeds

One of the most impressive early demos of Stable Diffusion was the reuse of seeds to tweak images. The idea is to use the seed of an image of interest to generate a new image, with a different prompt. This yields some cool results! Check out the [Colab](https://colab.research.google.com/github/pcuenca/diffusers-examples/blob/main/notebooks/stable-diffusion-seeds.ipynb)

## Thanks for reading!

I hope you enjoy reading this! Remember to give a Star in our [GitHub Repository](https://github.com/huggingface/diffusers) and join the [Hugging Face Discord Server](https://hf.co/join/discord), where we have a category of channels just for Diffusion models. Over there the latest news in the library are shared!

Feel free to open issues with feature requests and bug reports! Everything that has been achieved couldn't have been done without such an amazing community.

| huggingface/blog/blob/main/diffusers-2nd-month.md |

Adversarial Inception v3

**Inception v3** is a convolutional neural network architecture from the Inception family that makes several improvements including using [Label Smoothing](https://paperswithcode.com/method/label-smoothing), Factorized 7 x 7 convolutions, and the use of an [auxiliary classifer](https://paperswithcode.com/method/auxiliary-classifier) to propagate label information lower down the network (along with the use of batch normalization for layers in the sidehead). The key building block is an [Inception Module](https://paperswithcode.com/method/inception-v3-module).

This particular model was trained for study of adversarial examples (adversarial training).

The weights from this model were ported from [Tensorflow/Models](https://github.com/tensorflow/models).

## How do I use this model on an image?

To load a pretrained model:

```python

import timm

model = timm.create_model('adv_inception_v3', pretrained=True)

model.eval()

```

To load and preprocess the image:

```python

import urllib

from PIL import Image

from timm.data import resolve_data_config

from timm.data.transforms_factory import create_transform

config = resolve_data_config({}, model=model)

transform = create_transform(**config)

url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

urllib.request.urlretrieve(url, filename)

img = Image.open(filename).convert('RGB')

tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```python

import torch

with torch.no_grad():

out = model(tensor)

probabilities = torch.nn.functional.softmax(out[0], dim=0)

print(probabilities.shape)

# prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```python

# Get imagenet class mappings

url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

urllib.request.urlretrieve(url, filename)

with open("imagenet_classes.txt", "r") as f:

categories = [s.strip() for s in f.readlines()]

# Print top categories per image

top5_prob, top5_catid = torch.topk(probabilities, 5)

for i in range(top5_prob.size(0)):

print(categories[top5_catid[i]], top5_prob[i].item())

# prints class names and probabilities like:

# [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `adv_inception_v3`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](https://rwightman.github.io/pytorch-image-models/feature_extraction/), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```python

model = timm.create_model('adv_inception_v3', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training

script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](https://rwightman.github.io/pytorch-image-models/scripts/) for training a new model afresh.

## Citation

```BibTeX

@article{DBLP:journals/corr/abs-1804-00097,

author = {Alexey Kurakin and

Ian J. Goodfellow and

Samy Bengio and

Yinpeng Dong and

Fangzhou Liao and

Ming Liang and

Tianyu Pang and

Jun Zhu and

Xiaolin Hu and

Cihang Xie and

Jianyu Wang and

Zhishuai Zhang and

Zhou Ren and

Alan L. Yuille and

Sangxia Huang and

Yao Zhao and

Yuzhe Zhao and

Zhonglin Han and

Junjiajia Long and

Yerkebulan Berdibekov and

Takuya Akiba and

Seiya Tokui and

Motoki Abe},

title = {Adversarial Attacks and Defences Competition},

journal = {CoRR},

volume = {abs/1804.00097},

year = {2018},

url = {http://arxiv.org/abs/1804.00097},

archivePrefix = {arXiv},

eprint = {1804.00097},

timestamp = {Thu, 31 Oct 2019 16:31:22 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1804-00097.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

<!--

Type: model-index

Collections:

- Name: Adversarial Inception v3

Paper:

Title: Adversarial Attacks and Defences Competition

URL: https://paperswithcode.com/paper/adversarial-attacks-and-defences-competition

Models:

- Name: adv_inception_v3

In Collection: Adversarial Inception v3

Metadata:

FLOPs: 7352418880

Parameters: 23830000

File Size: 95549439

Architecture:

- 1x1 Convolution

- Auxiliary Classifier

- Average Pooling

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inception-v3 Module

- Max Pooling

- ReLU

- Softmax

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: adv_inception_v3

Crop Pct: '0.875'

Image Size: '299'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/inception_v3.py#L456

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/adv_inception_v3-9e27bd63.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 77.58%

Top 5 Accuracy: 93.74%

--> | huggingface/pytorch-image-models/blob/main/docs/models/adversarial-inception-v3.md |

`@gradio/code`

```html

<script>

import { BaseCode, BaseCopy, BaseDownload, BaseWidget, BaseExample} from "gradio/code";

</script>

```

BaseCode

```javascript

export let classNames = "";

export let value = "";

export let dark_mode: boolean;

export let basic = true;

export let language: string;

export let lines = 5;

export let extensions: Extension[] = [];

export let useTab = true;

export let readonly = false;

export let placeholder: string | HTMLElement | null | undefined = undefined;

```

BaseCopy

```javascript

export let value: string;

```

BaseDownload

```javascript

export let value: string;

export let language: string;

```

BaseWidget

```javascript

export let value: string;

export let language: string;

```

BaseExample

```

export let value: string;

export let type: "gallery" | "table";

export let selected = false;

``` | gradio-app/gradio/blob/main/js/code/README.md |

!--⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# 安装

在开始之前,您需要通过安装适当的软件包来设置您的环境

huggingface_hub 在 Python 3.8 或更高版本上进行了测试,可以保证在这些版本上正常运行。如果您使用的是 Python 3.7 或更低版本,可能会出现兼容性问题

## 使用 pip 安装

我们建议将huggingface_hub安装在[虚拟环境](https://docs.python.org/3/library/venv.html)中.

如果你不熟悉 Python虚拟环境,可以看看这个[指南](https://packaging.python.org/en/latest/guides/installing-using-pip-and-virtual-environments/).

虚拟环境可以更容易地管理不同的项目,避免依赖项之间的兼容性问题

首先在你的项目目录中创建一个虚拟环境,请运行以下代码:

```bash

python -m venv .env

```

在Linux和macOS上,请运行以下代码激活虚拟环境:

```bash

source .env/bin/activate

```

在 Windows 上,请运行以下代码激活虚拟环境:

```bash

.env/Scripts/activate

```

现在您可以从[PyPi注册表](https://pypi.org/project/huggingface-hub/)安装 `huggingface_hub`:

```bash

pip install --upgrade huggingface_hub

```

完成后,[检查安装](#check-installation)是否正常工作

### 安装可选依赖项

`huggingface_hub`的某些依赖项是 [可选](https://setuptools.pypa.io/en/latest/userguide/dependency_management.html#optional-dependencies) 的,因为它们不是运行`huggingface_hub`的核心功能所必需的.但是,如果没有安装可选依赖项, `huggingface_hub` 的某些功能可能会无法使用

您可以通过`pip`安装可选依赖项,请运行以下代码:

```bash

# 安装 TensorFlow 特定功能的依赖项

# /!\ 注意:这不等同于 `pip install tensorflow`

pip install 'huggingface_hub[tensorflow]'

# 安装 TensorFlow 特定功能和 CLI 特定功能的依赖项

pip install 'huggingface_hub[cli,torch]'

```

这里列出了 `huggingface_hub` 的可选依赖项:

- `cli`:为 `huggingface_hub` 提供更方便的命令行界面

- `fastai`,` torch`, `tensorflow`: 运行框架特定功能所需的依赖项

- `dev`:用于为库做贡献的依赖项。包括 `testing`(用于运行测试)、`typing`(用于运行类型检查器)和 `quality`(用于运行 linter)

### 从源代码安装

在某些情况下,直接从源代码安装`huggingface_hub`会更有趣。因为您可以使用最新的主版本`main`而非最新的稳定版本

`main`版本更有利于跟进平台的最新开发进度,例如,在最近一次官方发布之后和最新的官方发布之前所修复的某个错误

但是,这意味着`main`版本可能不总是稳定的。我们会尽力让其正常运行,大多数问题通常会在几小时或一天内解决。如果您遇到问题,请创建一个 Issue ,以便我们可以更快地解决!

```bash

pip install git+https://github.com/huggingface/huggingface_hub # 使用pip从GitHub仓库安装Hugging Face Hub库

```

从源代码安装时,您还可以指定特定的分支。如果您想测试尚未合并的新功能或新错误修复,这很有用

```bash

pip install git+https://github.com/huggingface/huggingface_hub@my-feature-branch # 使用pip从指定的GitHub分支(my-feature-branch)安装Hugging Face Hub库

```

完成安装后,请[检查安装](#check-installation)是否正常工作

### 可编辑安装

从源代码安装允许您设置[可编辑安装](https://pip.pypa.io/en/stable/topics/local-project-installs/#editable-installs).如果您计划为`huggingface_hub`做出贡献并需要测试代码更改,这是一个更高级的安装方式。您需要在本机上克隆一个`huggingface_hub`的本地副本

```bash

# 第一,使用以下命令克隆代码库

git clone https://github.com/huggingface/huggingface_hub.git

# 然后,使用以下命令启动虚拟环境

cd huggingface_hub

pip install -e .

```

这些命令将你克隆存储库的文件夹与你的 Python 库路径链接起来。Python 现在将除了正常的库路径之外,还会在你克隆到的文件夹中查找。例如,如果你的 Python 包通常安装在`./.venv/lib/python3.11/site-packages/`中,Python 还会搜索你克隆的文件夹`./huggingface_hub/`

## 通过 conda 安装

如果你更熟悉它,你可以使用[conda-forge channel](https://anaconda.org/conda-forge/huggingface_hub)渠道来安装 `huggingface_hub`

请运行以下代码:

```bash

conda install -c conda-forge huggingface_hub

```

完成安装后,请[检查安装](#check-installation)是否正常工作

## 验证安装

安装完成后,通过运行以下命令检查`huggingface_hub`是否正常工作:

```bash

python -c "from huggingface_hub import model_info; print(model_info('gpt2'))"

```

这个命令将从 Hub 获取有关 [gpt2](https://huggingface.co/gpt2) 模型的信息。

输出应如下所示:

```text

Model Name: gpt2 模型名称

Tags: ['pytorch', 'tf', 'jax', 'tflite', 'rust', 'safetensors', 'gpt2', 'text-generation', 'en', 'doi:10.57967/hf/0039', 'transformers', 'exbert', 'license:mit', 'has_space'] 标签

Task: text-generation 任务:文本生成

```

## Windows局限性

为了实现让每个人都能使用机器学习的目标,我们构建了 `huggingface_hub`库,使其成为一个跨平台的库,尤其可以在 Unix 和 Windows 系统上正常工作。但是,在某些情况下,`huggingface_hub`在Windows上运行时会有一些限制。以下是一些已知问题的完整列表。如果您遇到任何未记录的问题,请打开 [Github上的issue](https://github.com/huggingface/huggingface_hub/issues/new/choose).让我们知道

- `huggingface_hub`的缓存系统依赖于符号链接来高效地缓存从Hub下载的文件。在Windows上,您必须激活开发者模式或以管理员身份运行您的脚本才能启用符号链接。如果它们没有被激活,缓存系统仍然可以工作,但效率较低。有关更多详细信息,请阅读[缓存限制](./guides/manage-cache#limitations)部分。

- Hub上的文件路径可能包含特殊字符(例如:`path/to?/my/file`)。Windows对[特殊字符](https://learn.microsoft.com/en-us/windows/win32/intl/character-sets-used-in-file-names)更加严格,这使得在Windows上下载这些文件变得不可能。希望这是罕见的情况。如果您认为这是一个错误,请联系存储库所有者或我们,以找出解决方案。

## 后记

一旦您在机器上正确安装了`huggingface_hub`,您可能需要[配置环境变量](package_reference/environment_variables)或者[查看我们的指南之一](guides/overview)以开始使用。 | huggingface/huggingface_hub/blob/main/docs/source/cn/installation.md |

p align="center">

<picture>

<source media="(prefers-color-scheme: dark)" srcset="https://huggingface.co/datasets/safetensors/assets/raw/main/banner-dark.svg">

<source media="(prefers-color-scheme: light)" srcset="https://huggingface.co/datasets/safetensors/assets/raw/main/banner-light.svg">

<img alt="Hugging Face Safetensors Library" src="https://huggingface.co/datasets/safetensors/assets/raw/main/banner-light.svg" style="max-width: 100%;">

</picture>

<br/>

<br/>

</p>

Python

[](https://pypi.org/pypi/safetensors/)

[](https://huggingface.co/docs/safetensors/index)

[](https://codecov.io/gh/huggingface/safetensors)

[](https://pepy.tech/project/safetensors)

Rust

[](https://crates.io/crates/safetensors)

[](https://docs.rs/safetensors/)

[](https://codecov.io/gh/huggingface/safetensors)

[](https://deps.rs/repo/github/huggingface/safetensors?path=safetensors)

# safetensors

## Safetensors

This repository implements a new simple format for storing tensors

safely (as opposed to pickle) and that is still fast (zero-copy).

### Installation

#### Pip

You can install safetensors via the pip manager:

```bash

pip install safetensors

```

#### From source

For the sources, you need Rust

```bash

# Install Rust

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

# Make sure it's up to date and using stable channel

rustup update

git clone https://github.com/huggingface/safetensors

cd safetensors/bindings/python

pip install setuptools_rust

pip install -e .

```

### Getting started

```python

import torch

from safetensors import safe_open

from safetensors.torch import save_file

tensors = {

"weight1": torch.zeros((1024, 1024)),

"weight2": torch.zeros((1024, 1024))

}

save_file(tensors, "model.safetensors")

tensors = {}

with safe_open("model.safetensors", framework="pt", device="cpu") as f:

for key in f.keys():

tensors[key] = f.get_tensor(key)

```

[Python documentation](https://huggingface.co/docs/safetensors/index)

### Format

- 8 bytes: `N`, an unsigned little-endian 64-bit integer, containing the size of the header

- N bytes: a JSON UTF-8 string representing the header.

- The header data MUST begin with a `{` character (0x7B).

- The header data MAY be trailing padded with whitespace (0x20).

- The header is a dict like `{"TENSOR_NAME": {"dtype": "F16", "shape": [1, 16, 256], "data_offsets": [BEGIN, END]}, "NEXT_TENSOR_NAME": {...}, ...}`,

- `data_offsets` point to the tensor data relative to the beginning of the byte buffer (i.e. not an absolute position in the file),

with `BEGIN` as the starting offset and `END` as the one-past offset (so total tensor byte size = `END - BEGIN`).

- A special key `__metadata__` is allowed to contain free form string-to-string map. Arbitrary JSON is not allowed, all values must be strings.

- Rest of the file: byte-buffer.

Notes:

- Duplicate keys are disallowed. Not all parsers may respect this.

- In general the subset of JSON is implicitly decided by `serde_json` for

this library. Anything obscure might be modified at a later time, that odd ways

to represent integer, newlines and escapes in utf-8 strings. This would only

be done for safety concerns

- Tensor values are not checked against, in particular NaN and +/-Inf could

be in the file

- Empty tensors (tensors with 1 dimension being 0) are allowed.

They are not storing any data in the databuffer, yet retaining size in the header.

They don't really bring a lot of values but are accepted since they are valid tensors

from traditional tensor libraries perspective (torch, tensorflow, numpy, ..).

- 0-rank Tensors (tensors with shape `[]`) are allowed, they are merely a scalar.

- The byte buffer needs to be entirely indexed, and cannot contain holes. This prevents

the creation of polyglot files.

- Endianness: Little-endian.

moment.

- Order: 'C' or row-major.

### Yet another format ?

The main rationale for this crate is to remove the need to use

`pickle` on `PyTorch` which is used by default.

There are other formats out there used by machine learning and more general

formats.

Let's take a look at alternatives and why this format is deemed interesting.

This is my very personal and probably biased view:

| Format | Safe | Zero-copy | Lazy loading | No file size limit | Layout control | Flexibility | Bfloat16/Fp8

| ----------------------- | --- | --- | --- | --- | --- | --- | --- |

| pickle (PyTorch) | ✗ | ✗ | ✗ | 🗸 | ✗ | 🗸 | 🗸 |

| H5 (Tensorflow) | 🗸 | ✗ | 🗸 | 🗸 | ~ | ~ | ✗ |

| SavedModel (Tensorflow) | 🗸 | ✗ | ✗ | 🗸 | 🗸 | ✗ | 🗸 |

| MsgPack (flax) | 🗸 | 🗸 | ✗ | 🗸 | ✗ | ✗ | 🗸 |

| Protobuf (ONNX) | 🗸 | ✗ | ✗ | ✗ | ✗ | ✗ | 🗸 |

| Cap'n'Proto | 🗸 | 🗸 | ~ | 🗸 | 🗸 | ~ | ✗ |

| Arrow | ? | ? | ? | ? | ? | ? | ✗ |

| Numpy (npy,npz) | 🗸 | ? | ? | ✗ | 🗸 | ✗ | ✗ |

| pdparams (Paddle) | ✗ | ✗ | ✗ | 🗸 | ✗ | 🗸 | 🗸 |

| SafeTensors | 🗸 | 🗸 | 🗸 | 🗸 | 🗸 | ✗ | 🗸 |

- Safe: Can I use a file randomly downloaded and expect not to run arbitrary code ?

- Zero-copy: Does reading the file require more memory than the original file ?

- Lazy loading: Can I inspect the file without loading everything ? And loading only

some tensors in it without scanning the whole file (distributed setting) ?

- Layout control: Lazy loading, is not necessarily enough since if the information about tensors is spread out in your file, then even if the information is lazily accessible you might have to access most of your file to read the available tensors (incurring many DISK -> RAM copies). Controlling the layout to keep fast access to single tensors is important.

- No file size limit: Is there a limit to the file size ?

- Flexibility: Can I save custom code in the format and be able to use it later with zero extra code ? (~ means we can store more than pure tensors, but no custom code)

- Bfloat16/Fp8: Does the format support native bfloat16/fp8 (meaning no weird workarounds are

necessary)? This is becoming increasingly important in the ML world.

### Main oppositions

- Pickle: Unsafe, runs arbitrary code

- H5: Apparently now discouraged for TF/Keras. Seems like a great fit otherwise actually. Some classic use after free issues: <https://www.cvedetails.com/vulnerability-list/vendor_id-15991/product_id-35054/Hdfgroup-Hdf5.html>. On a very different level than pickle security-wise. Also 210k lines of code vs ~400 lines for this lib currently.

- SavedModel: Tensorflow specific (it contains TF graph information).

- MsgPack: No layout control to enable lazy loading (important for loading specific parts in distributed setting)

- Protobuf: Hard 2Go max file size limit

- Cap'n'proto: Float16 support is not present [link](https://capnproto.org/language.html#built-in-types) so using a manual wrapper over a byte-buffer would be necessary. Layout control seems possible but not trivial as buffers have limitations [link](https://stackoverflow.com/questions/48458839/capnproto-maximum-filesize).

- Numpy (npz): No `bfloat16` support. Vulnerable to zip bombs (DOS). Not zero-copy.

- Arrow: No `bfloat16` support. Seem to require decoding [link](https://arrow.apache.org/docs/python/parquet.html#reading-parquet-and-memory-mapping)

### Notes