| text | source |
|---|---|

Create an Endpoint

After your first login, you will be directed to the [Endpoint creation page](https://ui.endpoints.huggingface.co/new). As an example, this guide will go through the steps to deploy [distilbert-base-uncased-finetuned-sst-2-english](https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english) for text classification.

## 1. Enter the Hugging Face Repository ID and your desired endpoint name:

<img src="https://raw.githubusercontent.com/huggingface/hf-endpoints-documentation/main/assets/1_repository.png" alt="select repository" />

## 2. Select your Cloud Provider and region. Initially, only AWS will be available as a Cloud Provider with the `us-east-1` and `eu-west-1` regions. We will add Azure soon, and if you need to test Endpoints with other Cloud Providers or regions, please let us know.

<img src="https://raw.githubusercontent.com/huggingface/hf-endpoints-documentation/main/assets/1_region.png" alt="select region" />

## 3. Define the [Security Level](security) for the Endpoint:

<img src="https://raw.githubusercontent.com/huggingface/hf-endpoints-documentation/main/assets/1_security.png" alt="define security" />

## 4. Create your Endpoint by clicking **Create Endpoint**. By default, your Endpoint is created with a medium CPU (2 x 4GB vCPUs with Intel Xeon Ice Lake). The cost estimate assumes the Endpoint will be up for an entire month and does not take autoscaling into account.

<img src="https://raw.githubusercontent.com/huggingface/hf-endpoints-documentation/main/assets/1_create_cost.png" alt="create endpoint" />

## 5. Wait for the Endpoint to build, initialize, and run, which can take between 1 and 5 minutes.

<img src="https://raw.githubusercontent.com/huggingface/hf-endpoints-documentation/main/assets/overview.png" alt="overview" />

## 6. Test your Endpoint in the overview with the Inference widget 🏁 🎉!

<img src="https://raw.githubusercontent.com/huggingface/hf-endpoints-documentation/main/assets/1_inference.png" alt="run inference" />
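Besides the Inference widget, a running Endpoint can also be queried over HTTP. The snippet below is only a minimal sketch: the URL and token are hypothetical placeholders (copy the real URL from the Endpoint overview page), and it assumes the Endpoint accepts a JSON body with an `inputs` field and a bearer token. It builds the request without sending it, so it can be run without a live Endpoint.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the URL shown on your Endpoint
# overview page and with a Hugging Face access token of your own.
ENDPOINT_URL = "https://my-endpoint.example.com"
HF_TOKEN = "hf_xxx"


def build_request(text, url=ENDPOINT_URL, token=HF_TOKEN):
    """Build (but do not send) a POST request for a text-classification Endpoint."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("I like you. I love you.")

# To actually query a running Endpoint, uncomment:
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```

Keeping the request construction separate from the network call makes it easy to inspect the payload before pointing it at a real Endpoint.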

| huggingface/hf-endpoints-documentation/blob/main/docs/source/guides/create_endpoint.mdx |

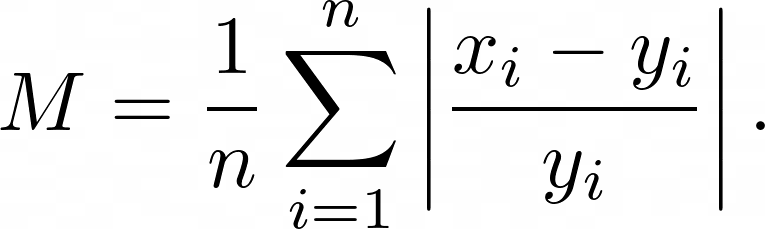

Choosing a metric for your task

**So you've trained your model and want to see how well it’s doing on a dataset of your choice. Where do you start?**

There is no “one size fits all” approach to choosing an evaluation metric, but some good guidelines to keep in mind are:

## Categories of metrics

There are 3 high-level categories of metrics:

1. *Generic metrics*, which can be applied to a variety of situations and datasets, such as precision and accuracy.

2. *Task-specific metrics*, which are limited to a given task, such as Machine Translation (often evaluated using metrics such as [BLEU](https://huggingface.co/metrics/bleu) or [ROUGE](https://huggingface.co/metrics/rouge)) or Named Entity Recognition (often evaluated with [seqeval](https://huggingface.co/metrics/seqeval)).

3. *Dataset-specific metrics*, which aim to measure model performance on specific benchmarks: for instance, the [GLUE benchmark](https://huggingface.co/datasets/glue) has a dedicated [evaluation metric](https://huggingface.co/metrics/glue).

Let's look at each of these three cases:

### Generic metrics

Many of the metrics used in the Machine Learning community are quite generic and can be applied in a variety of tasks and datasets.

This is the case for metrics like [accuracy](https://huggingface.co/metrics/accuracy) and [precision](https://huggingface.co/metrics/precision), which can be used for evaluating labeled (supervised) datasets, as well as [perplexity](https://huggingface.co/metrics/perplexity), which can be used for evaluating different kinds of (unsupervised) generative tasks.

To see the input structure of a given metric, you can look at its metric card. For example, in the case of [precision](https://huggingface.co/metrics/precision), the format is:

```
>>> import evaluate
>>> precision_metric = evaluate.load("precision")
>>> results = precision_metric.compute(references=[0, 1], predictions=[0, 1])
>>> print(results)
{'precision': 1.0}
```
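To make the `references` / `predictions` inputs concrete, here is a hand-rolled sketch of binary precision in plain Python. It is not the implementation used by the metric; the function name and the choice to return `0.0` when nothing is predicted positive are assumptions made for illustration.

```python
def binary_precision(references, predictions, positive_label=1):
    """Precision = true positives / (true positives + false positives)."""
    true_pos = sum(
        1 for r, p in zip(references, predictions)
        if p == positive_label and r == positive_label
    )
    false_pos = sum(
        1 for r, p in zip(references, predictions)
        if p == positive_label and r != positive_label
    )
    predicted_pos = true_pos + false_pos
    # Assumption: report 0.0 when no sample was predicted positive.
    return true_pos / predicted_pos if predicted_pos else 0.0


print(binary_precision([0, 1], [0, 1]))              # 1.0
print(binary_precision([0, 1, 0, 1], [1, 1, 0, 0]))  # 0.5
```

In the second call, two samples are predicted positive and only one of them is truly positive, hence a precision of 0.5.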

### Task-specific metrics

Popular ML tasks like Machine Translation and Named Entity Recognition have specific metrics that can be used to compare models. For example, a series of different metrics have been proposed for text generation, including [BLEU](https://huggingface.co/metrics/bleu) and its derivatives such as [GoogleBLEU](https://huggingface.co/metrics/google_bleu) and [GLEU](https://huggingface.co/metrics/gleu), as well as [ROUGE](https://huggingface.co/metrics/rouge), [MAUVE](https://huggingface.co/metrics/mauve), etc.

You can find the right metric for your task by:

- **Looking at the [Task pages](https://huggingface.co/tasks)** to see what metrics can be used for evaluating models for a given task.

- **Checking out leaderboards** on sites like [Papers With Code](https://paperswithcode.com/) (you can search by task and by dataset).

- **Reading the metric cards** for the relevant metrics and seeing which ones are a good fit for your use case. For example, see the [BLEU metric card](https://github.com/huggingface/evaluate/tree/main/metrics/bleu) or [SQuAD metric card](https://github.com/huggingface/evaluate/tree/main/metrics/squad).

- **Looking at papers and blog posts** published on the topic and seeing what metrics they report. This can change over time, so try to pick papers from the last couple of years!

### Dataset-specific metrics

Some datasets have specific metrics associated with them. This is especially the case for popular benchmarks like [GLUE](https://huggingface.co/metrics/glue) and [SQuAD](https://huggingface.co/metrics/squad).

<Tip warning={true}>

💡

GLUE is actually a collection of subsets covering different tasks, so you first need to choose the subset that corresponds to your task. For an NLI task, for example, you could choose `mnli`, which is described as a “crowdsourced collection of sentence pairs with textual entailment annotations”.

</Tip>

If you are evaluating your model on a benchmark dataset like the ones mentioned above, you can use its dedicated evaluation metric. Make sure you respect the format that it requires. For example, to evaluate your model on the [SQuAD](https://huggingface.co/datasets/squad) dataset, you need to feed the `question` and `context` into your model and return the `prediction_text`, which is then compared with the `references` (matched on the `id` of the question):

```

>>> from evaluate import load

>>> squad_metric = load("squad")

>>> predictions = [{'prediction_text': '1976', 'id': '56e10a3be3433e1400422b22'}]

>>> references = [{'answers': {'answer_start': [97], 'text': ['1976']}, 'id': '56e10a3be3433e1400422b22'}]

>>> results = squad_metric.compute(predictions=predictions, references=references)

>>> results

{'exact_match': 100.0, 'f1': 100.0}

```

You can find examples of dataset structures by consulting the "Dataset Preview" function or the dataset card for a given dataset, and you can see how to use its dedicated evaluation function based on the metric card.
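The id-based matching described above can be sketched in plain Python. This is a simplified illustration, not the `squad` metric itself: the function name is hypothetical, and it compares strings verbatim, whereas the real metric also normalizes answers and reports an F1 score.

```python
def exact_match_by_id(predictions, references):
    """Percentage of predictions whose text equals a reference answer with the same id."""
    answers_by_id = {ref["id"]: ref["answers"]["text"] for ref in references}
    hits = sum(
        1 for pred in predictions
        if pred["prediction_text"] in answers_by_id.get(pred["id"], [])
    )
    return 100.0 * hits / len(predictions)


predictions = [{"prediction_text": "1976", "id": "56e10a3be3433e1400422b22"}]
references = [
    {"answers": {"answer_start": [97], "text": ["1976"]}, "id": "56e10a3be3433e1400422b22"}
]
print(exact_match_by_id(predictions, references))  # 100.0
```

Because predictions and references are joined on `id`, their order in the two lists does not matter.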

| huggingface/evaluate/blob/main/docs/source/choosing_a_metric.mdx |

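Key Features

Let's go through some of Gradio's most popular features! Here are Gradio's key features:

1. [Adding example inputs](#example-inputs)

2. [Passing custom error messages](#errors)

3. [Adding descriptive content](#descriptive-content)

4. [Setting up flagging](#flagging)

5. [Preprocessing and postprocessing](#preprocessing-and-postprocessing)

6. [Styling demos](#styling)

7. [Queuing users](#queuing)

8. [Iterative outputs](#iterative-outputs)

9. [Progress bars](#progress-bars)

10. [Batch functions](#batch-functions)

11. [Running on collaborative notebooks](#colab-notebooks)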

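## Example Inputs

You can provide example data that a user can easily load into an `Interface`. This is helpful for demonstrating the types of inputs the model expects, and for exploring your dataset together with your model. To load example data, provide a nested list to the `examples=` keyword argument of the Interface constructor. Each sublist within the outer list represents one data sample, and each element within a sublist represents the input for one input component. The format of example data for each component is specified in the [Docs](https://gradio.app/docs#components).

$code_calculator

$demo_calculator

You can load a large dataset into the examples to browse and interact with it through Gradio. The examples are paginated automatically (you can configure this through the `examples_per_page` argument of Interface).

To continue learning about examples, see the [More on Examples](https://gradio.app/more-on-examples) guide.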

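## Errors

You may wish to pass custom error messages to the user. To do so, raise a `gr.Error("custom message")` to display the error message. If you try to divide by zero in the calculator example above, a popup modal will display the custom error message. To learn more about errors, see the [docs](https://gradio.app/docs#error).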

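## Descriptive Content

In the previous example, you may have noticed the `title=` and `description=` keyword arguments in the Interface constructor, which help users understand your app.

There are three arguments in the Interface constructor that specify where this content should go:

- `title`: accepts text and displays it at the very top of the interface; it also becomes the page title.

- `description`: accepts text, Markdown, or HTML and places it right under the title.

- `article`: also accepts text, Markdown, or HTML and places it below the interface.

If you are using the `Blocks` API instead, you can insert text, Markdown, or HTML anywhere using the `gr.Markdown(...)` or `gr.HTML(...)` components, with the descriptive content inside the `Component` constructor.

Another useful keyword argument is `label=`, which is present in every `Component`. It modifies the label text at the top of each `Component`. You can also add the `info=` keyword argument to form elements such as `Textbox` or `Radio` to provide further information on their usage.

```python
gr.Number(label='Age', info='In years, must be greater than 0')
```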

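## Flagging

By default, an `Interface` has a "Flag" button. When a user testing your `Interface` sees an input with interesting output, such as erroneous or unexpected model behavior, they can flag the input for you to review. The flagged inputs are logged to a CSV file within the directory provided by the `flagging_dir=` argument of the `Interface` constructor. If the interface involves file data, such as Image and Audio components, folders are created to store the flagged data as well.

For example, with the calculator interface shown above, the flagged data would be stored in the flagged directory shown below:

```directory
+-- calculator.py
+-- flagged/
|   +-- logs.csv
```

_flagged/logs.csv_

```csv
num1,operation,num2,Output
5,add,7,12
6,subtract,1.5,4.5
```

With the sepia interface shown earlier, the flagged data would be stored in the flagged directory shown below:

```directory
+-- sepia.py
+-- flagged/
|   +-- logs.csv
|   +-- im/
|   |   +-- 0.png
|   |   +-- 1.png
|   +-- Output/
|   |   +-- 0.png
|   |   +-- 1.png
```

_flagged/logs.csv_

```csv
im,Output
im/0.png,Output/0.png
im/1.png,Output/1.png
```

If you want the user to provide a reason for flagging, you can pass a list of strings to the `flagging_options` argument of Interface. Users will have to select one of the strings when flagging, and it will be saved as an additional column in the CSV.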
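Since the flagged samples are stored as a plain CSV file, they can be reviewed with Python's standard `csv` module. The sketch below parses the calculator log shown above from an in-memory string; the helper name is hypothetical, and in practice you would open `logs.csv` inside your own flagging directory instead.

```python
import csv
import io

# Contents of flagged/logs.csv from the calculator example; in practice,
# read the file from your own flagging directory.
LOGS_CSV = """num1,operation,num2,Output
5,add,7,12
6,subtract,1.5,4.5
"""


def load_flagged_rows(csv_text):
    """Parse flagged samples into a list of dicts keyed by column name."""
    return list(csv.DictReader(io.StringIO(csv_text)))


rows = load_flagged_rows(LOGS_CSV)
for row in rows:
    print(row["num1"], row["operation"], row["num2"], "->", row["Output"])
```

All values come back as strings, so convert them (for example with `float`) before doing arithmetic on them.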

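## Preprocessing and Postprocessing

As you have seen, Gradio includes components that can handle a variety of data types, such as images, audio, and video. Most components can be used as either inputs or outputs.

When a component is used as an input, Gradio automatically handles the *preprocessing* needed to convert the data from the type sent by the user's browser (such as a base64 representation of a webcam snapshot) into a form that your function can accept (such as a `numpy` array).

Similarly, when a component is used as an output, Gradio automatically handles the *postprocessing* needed to convert the data from what your function returns (such as a list of image paths) into a form that can be displayed in the user's browser (such as a `Gallery` of images in base64 format).

You can control the *preprocessing* using the parameters passed when constructing the image component. For example, if you instantiate the `Image` component with the following parameters, it will convert the image to the `PIL` type and reshape it to `(100, 100)`, regardless of the original size it was submitted with:

```py
img = gr.Image(shape=(100, 100), type="pil")
```

In contrast, here we keep the original size of the image but invert the colors before converting it to a numpy array:

```py
img = gr.Image(invert_colors=True, type="numpy")
```

Postprocessing is a lot easier! Gradio automatically recognizes the format of the returned data (e.g. is the `Image` a `numpy` array or a `str` file path?) and postprocesses it into a format that the browser can display.

Take a look at the [Docs](https://gradio.app/docs) to see all the preprocessing-related parameters for each component.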

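## Styling

Gradio themes are the easiest way to customize the look and feel of your app. You can choose from a variety of themes or create your own. To do so, pass the `theme=` argument to the `Interface` constructor. For example:

```python
demo = gr.Interface(..., theme=gr.themes.Monochrome())
```

Gradio comes with a set of prebuilt themes, which you can load from `gr.themes.*`. You can extend these themes or create your own from scratch. See the [Theming guide](https://gradio.app/theming-guide) for more details.

For additional styling ability, you can pass any CSS to your app using the `css=` keyword argument.

The base class for the Gradio app is `gradio-container`, so here is an example that changes the background color of the Gradio app:

```python
with gr.Interface(css=".gradio-container {background-color: red}") as demo:
    ...
```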

## Queuing

If your app expects heavy traffic, use the `queue()` method to control the processing rate. This queues up calls so that only a certain number of requests are processed at a time. Queuing uses websockets, which also prevent network timeouts, so you should use the queue if the inference time of your function is long (> 1 minute).

With `Interface`:

```python
demo = gr.Interface(...).queue()
demo.launch()
```

With `Blocks`:

```python
with gr.Blocks() as demo:
    #...
demo.queue()
demo.launch()
```

You can control the number of requests processed at a time like this:

```python
demo.queue(concurrency_count=3)
```

See the [docs on queuing](/docs/#queue) for configuring other queuing parameters.

To specify that only certain functions should be queued in Blocks:

```python
with gr.Blocks() as demo2:
    num1 = gr.Number()
    num2 = gr.Number()
    output = gr.Number()
    gr.Button("Add").click(
        lambda a, b: a + b, [num1, num2], output)
    gr.Button("Multiply").click(
        lambda a, b: a * b, [num1, num2], output, queue=True)
demo2.launch()
```

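## Iterative Outputs

In some cases, you may want to stream a sequence of outputs rather than show a single output at once. For example, you might have an image generation model and want to show the image generated at each step, leading up to the final image. Or you might have a chatbot that streams its response one word at a time instead of returning it all at once.

In such cases, you can supply a **generator** function to Gradio instead of a regular function. Creating generators in Python is very simple: instead of a single `return` value, the function should `yield` a series of values. Usually the `yield` statement is placed inside some kind of loop. Here is a simple example of a generator that just counts up to a given number:

```python
def my_generator(x):
    for i in range(x):
        yield i
```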
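As a small illustration of how a generator behaves outside Gradio, the sketch below consumes the same counting generator by hand. Nothing here is Gradio-specific; it only shows that values are produced one at a time, which is what lets each yielded value be displayed as it arrives.

```python
def my_generator(x):
    for i in range(x):
        yield i


# Calling the function only creates a generator object; the body has not run yet.
gen = my_generator(3)
first = next(gen)        # runs the body up to the first `yield`
remaining = list(gen)    # consumes the values that are left
print(first, remaining)  # 0 [1, 2]
```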

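You supply a generator to Gradio the same way you would a regular function. For example, here is a (fake) image generation model that generates noise for several steps before outputting an image:

$code_fake_diffusion

$demo_fake_diffusion

Note that we added a `time.sleep(1)` in the iterator to create an artificial pause between steps so that you can observe the steps of the iterator (in a real image generation model, this probably would not be necessary).

Supplying a generator to Gradio **requires** queuing to be enabled in the underlying Interface or Blocks (see the Queuing section above).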

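## Progress Bars

Gradio supports custom progress bars, so that you can customize and control the progress updates shown to the user. To enable this, simply add an argument to your method whose default value is a `gr.Progress` instance. You can then update the progress level by calling this instance directly with a float between 0 and 1, or by using the `tqdm()` method of the `Progress` instance to track progress over an iterable, as shown below. Queuing must be enabled for progress updates.

$code_progress_simple

$demo_progress_simple

If you use the `tqdm` library and want progress updates to be reported automatically from any `tqdm.tqdm` inside your function, set the default argument to `gr.Progress(track_tqdm=True)`!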

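## Batch Functions

Gradio supports passing *batch* functions. Batch functions are simply functions that take in a list of inputs and return a list of predictions.

For example, here is a batch function that takes in two lists of inputs (a list of words and a list of integers) and returns a list of trimmed words as output:

```python
import time

def trim_words(words, lens):
    trimmed_words = []
    time.sleep(5)  # simulate a slow model call
    for w, l in zip(words, lens):
        trimmed_words.append(w[:int(l)])
    # One inner list per output component
    return [trimmed_words]
```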

The advantage of using batch functions is that, if queuing is enabled, the Gradio server can automatically *batch* incoming requests and process them in parallel, potentially speeding up your demo. Here is what the Gradio code looks like (note `batch=True` and `max_batch_size=16`; both parameters can be passed either to event triggers or to the `Interface` class).

With `Interface`:

```python
demo = gr.Interface(trim_words, ["textbox", "number"], ["textbox"],
                    batch=True, max_batch_size=16)
demo.queue()
demo.launch()
```

With `Blocks`:

```python
import gradio as gr

with gr.Blocks() as demo:
    with gr.Row():
        word = gr.Textbox(label="word")
        leng = gr.Number(label="leng")
        output = gr.Textbox(label="Output")
    with gr.Row():
        run = gr.Button()

    event = run.click(trim_words, [word, leng], output, batch=True, max_batch_size=16)

demo.queue()
demo.launch()
```

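In the example above, 16 requests can be processed in parallel (for a total inference time of 5 seconds) instead of each request being processed separately (for a total inference time of 80 seconds). Many Hugging Face `transformers` and `diffusers` models work very naturally with Gradio's batch mode: here is [an example demo that generates images in batches](https://github.com/gradio-app/gradio/blob/main/demo/diffusers_with_batching/run.py).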
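The input and output shape of a batch function, and the arithmetic behind the 5-second versus 80-second figures, can be checked with a short sketch. `trim_words_batch` is a hypothetical variant of `trim_words` without the artificial sleep, so that it runs instantly; it is called directly here rather than through Gradio.

```python
def trim_words_batch(words, lens):
    """Same trimming logic as `trim_words`, without the artificial 5-second sleep."""
    trimmed = [w[: int(l)] for w, l in zip(words, lens)]
    # One inner list per output component; there is a single output here.
    return [trimmed]


# A batch of three queued requests, passed to the function in one call.
print(trim_words_batch(["hello", "gradio", "batch"], [2, 4, 1]))
# [['he', 'grad', 'b']]

SECONDS_PER_CALL = 5
REQUESTS = 16
sequential_seconds = REQUESTS * SECONDS_PER_CALL  # one call per request
batched_seconds = SECONDS_PER_CALL                # one call for the whole batch
print(sequential_seconds, batched_seconds)        # 80 5
```

The saving only materializes when the function's cost is roughly constant per call, as it is with the fixed sleep in the example.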

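Note: using batch functions with Gradio **requires** queuing to be enabled in the underlying Interface or Blocks (see the Queuing section above).

## Colab Notebooks

Gradio can run anywhere you run Python, including local Jupyter notebooks and collaborative notebooks such as [Google Colab](https://colab.research.google.com/). For local Jupyter notebooks and Google Colab notebooks, Gradio runs on a local server that you can interact with in your browser. (Note: for Google Colab, this is accomplished through [service worker tunneling](https://github.com/tensorflow/tensorboard/blob/master/docs/design/colab_integration.md), which requires cookies to be enabled in your browser.) For other remote notebooks, Gradio will also run on the server, but you will need to use [SSH tunneling](https://coderwall.com/p/ohk6cg/remote-access-to-ipython-notebooks-via-ssh) to view the app in your local browser. Often, a simpler option is to use Gradio's built-in public links, [discussed in the next guide](/sharing-your-app/#sharing-demos).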

| gradio-app/gradio/blob/main/guides/cn/01_getting-started/02_key-features.md |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Training on TPU with TensorFlow

<Tip>

If you don't need long explanations and just want TPU code samples to get started with, check out [our TPU example notebook!](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/tpu_training-tf.ipynb)

</Tip>

### What is a TPU?

A TPU is a **Tensor Processing Unit.** They are hardware designed by Google, which are used to greatly speed up the tensor computations within neural networks, much like GPUs. They can be used for both network training and inference. They are generally accessed through Google’s cloud services, but small TPUs can also be accessed directly for free through Google Colab and Kaggle Kernels.

Because [all TensorFlow models in 🤗 Transformers are Keras models](https://huggingface.co/blog/tensorflow-philosophy), most of the methods in this document are generally applicable to TPU training for any Keras model! However, there are a few points that are specific to the HuggingFace ecosystem (hug-o-system?) of Transformers and Datasets, and we’ll make sure to flag them up when we get to them.

### What kinds of TPU are available?

New users are often very confused by the range of TPUs, and the different ways to access them. The first key distinction to understand is the difference between **TPU Nodes** and **TPU VMs.**

When you use a **TPU Node**, you are effectively indirectly accessing a remote TPU. You will need a separate VM, which will initialize your network and data pipeline and then forward them to the remote node. When you use a TPU on Google Colab, you are accessing it in the **TPU Node** style.

Using TPU Nodes can have some quite unexpected behaviour for people who aren’t used to them! In particular, because the TPU is located on a physically different system to the machine you’re running your Python code on, your data cannot be local to your machine - any data pipeline that loads from your machine’s internal storage will totally fail! Instead, data must be stored in Google Cloud Storage where your data pipeline can still access it, even when the pipeline is running on the remote TPU node.

<Tip>

If you can fit all your data in memory as `np.ndarray` or `tf.Tensor`, then you can `fit()` on that data even when using Colab or a TPU Node, without needing to upload it to Google Cloud Storage.

</Tip>

<Tip>

**🤗Specific Hugging Face Tip🤗:** The methods `Dataset.to_tf_dataset()` and its higher-level wrapper `model.prepare_tf_dataset()`, which you will see throughout our TF code examples, will both fail on a TPU Node. The reason for this is that even though they create a `tf.data.Dataset`, it is not a “pure” `tf.data` pipeline and uses `tf.numpy_function` or `Dataset.from_generator()` to stream data from the underlying HuggingFace `Dataset`. This HuggingFace `Dataset` is backed by data that is on a local disk and which the remote TPU Node will not be able to read.

</Tip>

The second way to access a TPU is via a **TPU VM.** When using a TPU VM, you connect directly to the machine that the TPU is attached to, much like training on a GPU VM. TPU VMs are generally easier to work with, particularly when it comes to your data pipeline. All of the above warnings do not apply to TPU VMs!

This is an opinionated document, so here’s our opinion: **Avoid using TPU Node if possible.** It is more confusing and more difficult to debug than TPU VMs. It is also likely to be unsupported in future - Google’s latest TPU, TPUv4, can only be accessed as a TPU VM, which suggests that TPU Nodes are increasingly going to become a “legacy” access method. However, we understand that the only free TPU access is on Colab and Kaggle Kernels, which use TPU Node - so we’ll try to explain how to handle it if you have to! Check the [TPU example notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/tpu_training-tf.ipynb) for code samples that explain this in more detail.

### What sizes of TPU are available?

A single TPU (a v2-8/v3-8/v4-8) runs 8 replicas. TPUs exist in **pods** that can run hundreds or thousands of replicas simultaneously. When you use more than a single TPU but less than a whole pod (for example, a v3-32), your TPU fleet is referred to as a **pod slice.**

When you access a free TPU via Colab, you generally get a single v2-8 TPU.

### I keep hearing about this XLA thing. What’s XLA, and how does it relate to TPUs?

XLA is an optimizing compiler, used by both TensorFlow and JAX. In JAX it is the only compiler, whereas in TensorFlow it is optional (but mandatory on TPU!). The easiest way to enable it when training a Keras model is to pass the argument `jit_compile=True` to `model.compile()`. If you don’t get any errors and performance is good, that’s a great sign that you’re ready to move to TPU!

Debugging on TPU is generally a bit harder than on CPU/GPU, so we recommend getting your code running on CPU/GPU with XLA first before trying it on TPU. You don’t have to train for long, of course - just for a few steps to make sure that your model and data pipeline are working like you expect them to.

<Tip>

XLA compiled code is usually faster - so even if you’re not planning to run on TPU, adding `jit_compile=True` can improve your performance. Be sure to note the caveats below about XLA compatibility, though!

</Tip>

<Tip warning={true}>

**Tip born of painful experience:** Although using `jit_compile=True` is a good way to get a speed boost and test if your CPU/GPU code is XLA-compatible, it can actually cause a lot of problems if you leave it in when actually training on TPU. XLA compilation will happen implicitly on TPU, so remember to remove that line before actually running your code on a TPU!

</Tip>

### How do I make my model XLA compatible?

In many cases, your code is probably XLA-compatible already! However, there are a few things that work in normal TensorFlow that don’t work in XLA. We’ve distilled them into three core rules below:

<Tip>

**🤗Specific HuggingFace Tip🤗:** We’ve put a lot of effort into rewriting our TensorFlow models and loss functions to be XLA-compatible. Our models and loss functions generally obey rule #1 and #2 by default, so you can skip over them if you’re using `transformers` models. Don’t forget about these rules when writing your own models and loss functions, though!

</Tip>

#### XLA Rule #1: Your code cannot have “data-dependent conditionals”

What that means is that any `if` statement cannot depend on values inside a `tf.Tensor`. For example, this code block cannot be compiled with XLA!

```python
if tf.reduce_sum(tensor) > 10:
    tensor = tensor / 2.0
```

This might seem very restrictive at first, but most neural net code doesn’t need to do this. You can often get around this restriction by using `tf.cond` (see the documentation [here](https://www.tensorflow.org/api_docs/python/tf/cond)) or by removing the conditional and finding a clever math trick with indicator variables instead, like so:

```python

sum_over_10 = tf.cast(tf.reduce_sum(tensor) > 10, tf.float32)

tensor = tensor / (1.0 + sum_over_10)

```

This code has exactly the same effect as the code above, but by avoiding a conditional, we ensure it will compile with XLA without problems!
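The equivalence of the two versions can be checked without TensorFlow. The following is a plain-Python sketch (lists stand in for tensors, and the function names are hypothetical) that compares the conditional against the indicator-variable rewrite on one input below the threshold and one above it.

```python
def halve_if_large_conditional(values):
    """Reference behavior: a data-dependent `if`, the pattern XLA cannot compile."""
    if sum(values) > 10:
        return [v / 2.0 for v in values]
    return list(values)


def halve_if_large_indicator(values):
    """Branch-free version: the comparison becomes a 0.0 / 1.0 indicator."""
    # float(...) plays the role of tf.cast(..., tf.float32) in the TensorFlow code.
    sum_over_10 = float(sum(values) > 10)
    return [v / (1.0 + sum_over_10) for v in values]


for values in ([1.0, 2.0, 3.0], [4.0, 8.0, 16.0]):
    assert halve_if_large_conditional(values) == halve_if_large_indicator(values)
    print(values, "->", halve_if_large_indicator(values))
```

When the sum is at most 10 the divisor is 1.0 and the values pass through unchanged; otherwise the divisor is 2.0, which matches the `if` branch.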

#### XLA Rule #2: Your code cannot have “data-dependent shapes”

What this means is that the shape of all of the `tf.Tensor` objects in your code cannot depend on their values. For example, the function `tf.unique` cannot be compiled with XLA, because it returns a `tensor` containing one instance of each unique value in the input. The shape of this output will obviously be different depending on how repetitive the input `Tensor` was, and so XLA refuses to handle it!

In general, most neural network code obeys rule #2 by default. However, there are a few common cases where it becomes a problem. One very common one is when you use **label masking**, setting your labels to a negative value to indicate that those positions should be ignored when computing the loss. If you look at NumPy or PyTorch loss functions that support label masking, you will often see code like this that uses [boolean indexing](https://numpy.org/doc/stable/user/basics.indexing.html#boolean-array-indexing):

```python

label_mask = labels >= 0

masked_outputs = outputs[label_mask]

masked_labels = labels[label_mask]

loss = compute_loss(masked_outputs, masked_labels)

mean_loss = torch.mean(loss)

```

This code is totally fine in NumPy or PyTorch, but it breaks in XLA! Why? Because the shape of `masked_outputs` and `masked_labels` depends on how many positions are masked - that makes it a **data-dependent shape.** However, just like for rule #1, we can often rewrite this code to yield exactly the same output without any data-dependent shapes.

```python

label_mask = tf.cast(labels >= 0, tf.float32)

loss = compute_loss(outputs, labels)

loss = loss * label_mask # Set negative label positions to 0

mean_loss = tf.reduce_sum(loss) / tf.reduce_sum(label_mask)

```

Here, we avoid data-dependent shapes by computing the loss for every position, but zeroing out the masked positions in both the numerator and denominator when we calculate the mean, which yields exactly the same result as the first block while maintaining XLA compatibility. Note that we use the same trick as in rule #1 - converting a `tf.bool` to `tf.float32` and using it as an indicator variable. This is a really useful trick, so remember it if you need to convert your own code to XLA!
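To see that the two ways of averaging agree, here is a plain-Python sketch with made-up per-position losses and labels (the function names and the values are assumptions for illustration; `-100` is used only as an example of a negative "ignore" label).

```python
def mean_loss_boolean_indexing(losses, labels):
    """Reference: keep only positions with label >= 0 (a data-dependent shape)."""
    kept = [loss for loss, label in zip(losses, labels) if label >= 0]
    return sum(kept) / len(kept)


def mean_loss_static_shape(losses, labels):
    """XLA-style: every position is kept, masked ones are multiplied by 0.0."""
    mask = [float(label >= 0) for label in labels]
    masked = [loss * m for loss, m in zip(losses, mask)]
    return sum(masked) / sum(mask)


per_position_loss = [0.5, 2.0, 1.5, 4.0]  # made-up values
labels = [3, -100, 7, -100]               # negative labels mark ignored positions

print(mean_loss_boolean_indexing(per_position_loss, labels))  # 1.0
print(mean_loss_static_shape(per_position_loss, labels))      # 1.0
```

In the static-shape version the list lengths never change; only the mask values depend on the data.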

#### XLA Rule #3: XLA will need to recompile your model for every different input shape it sees

This is the big one. What this means is that if your input shapes are very variable, XLA will have to recompile your model over and over, which will create huge performance problems. This commonly arises in NLP models, where input texts have variable lengths after tokenization. In other modalities, static shapes are more common and this rule is much less of a problem.

How can you get around rule #3? The key is **padding** - if you pad all your inputs to the same length, and then use an `attention_mask`, you can get the same results as you’d get from variable shapes, but without any XLA issues. However, excessive padding can cause severe slowdown too - if you pad all your samples to the maximum length in the whole dataset, you might end up with batches consisting of endless padding tokens, which will waste a lot of compute and memory!

There isn’t a perfect solution to this problem. However, you can try some tricks. One very useful trick is to **pad batches of samples up to a multiple of a number like 32 or 64 tokens.** This often only increases the number of tokens by a small amount, but it hugely reduces the number of unique input shapes, because every input shape now has to be a multiple of 32 or 64. Fewer unique input shapes means fewer XLA compilations!
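The effect of padding to a multiple can be illustrated with a few lines of plain Python. The batch lengths below are made up, and `pad_length` is a hypothetical helper rather than a library function; the sketch just counts how many distinct shapes remain after rounding up to a multiple of 32.

```python
def pad_length(length, multiple):
    """Round `length` up to the nearest multiple of `multiple`."""
    return ((length + multiple - 1) // multiple) * multiple


# Made-up longest-sequence lengths for ten batches.
batch_lengths = [17, 23, 31, 40, 58, 64, 65, 90, 101, 127]
padded_lengths = [pad_length(n, 32) for n in batch_lengths]

print(padded_lengths)            # [32, 32, 32, 64, 64, 64, 96, 96, 128, 128]
print(len(set(batch_lengths)))   # 10 distinct shapes without rounding
print(len(set(padded_lengths)))  # 4 distinct shapes after rounding
```

Each distinct shape would trigger one XLA compilation, so in this toy example rounding cuts the number of compilations from ten to four.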

<Tip>

**🤗Specific HuggingFace Tip🤗:** Our tokenizers and data collators have methods that can help you here. You can use `padding="max_length"` or `padding="longest"` when calling tokenizers to get them to output padded data. Our tokenizers and data collators also have a `pad_to_multiple_of` argument that you can use to reduce the number of unique input shapes you see!

</Tip>

### How do I actually train my model on TPU?

Once your training is XLA-compatible and (if you’re using TPU Node / Colab) your dataset has been prepared appropriately, running on TPU is surprisingly easy! All you really need to change in your code is to add a few lines to initialize your TPU, and to ensure that your model and dataset are created inside a `TPUStrategy` scope. Take a look at [our TPU example notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/tpu_training-tf.ipynb) to see this in action!

### Summary

There was a lot in here, so let’s summarize with a quick checklist you can follow when you want to get your model ready for TPU training:

- Make sure your code follows the three rules of XLA

- Compile your model with `jit_compile=True` on CPU/GPU and confirm that you can train it with XLA

- Either load your dataset into memory or use a TPU-compatible dataset loading approach (see [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/tpu_training-tf.ipynb))

- Migrate your code either to Colab (with accelerator set to “TPU”) or a TPU VM on Google Cloud

- Add TPU initializer code (see [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/tpu_training-tf.ipynb))

- Create your `TPUStrategy` and make sure dataset loading and model creation are inside the `strategy.scope()` (see [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/tpu_training-tf.ipynb))

- Don’t forget to take `jit_compile=True` out again when you move to TPU!

- 🙏🙏🙏🥺🥺🥺

- Call model.fit()

- You did it!

| huggingface/transformers/blob/main/docs/source/en/perf_train_tpu_tf.md |

Gradio Demo: blocks_random_slider

```

!pip install -q gradio

```

```
import gradio as gr


def func(slider_1, slider_2):
    return slider_1 * 5 + slider_2


with gr.Blocks() as demo:
    slider = gr.Slider(minimum=-10.2, maximum=15, label="Random Slider (Static)", randomize=True)
    slider_1 = gr.Slider(minimum=100, maximum=200, label="Random Slider (Input 1)", randomize=True)
    slider_2 = gr.Slider(minimum=10, maximum=23.2, label="Random Slider (Input 2)", randomize=True)
    slider_3 = gr.Slider(value=3, label="Non random slider")
    btn = gr.Button("Run")
    btn.click(func, inputs=[slider_1, slider_2], outputs=gr.Number())

if __name__ == "__main__":
    demo.launch()
```

| gradio-app/gradio/blob/main/demo/blocks_random_slider/run.ipynb |

Git over SSH

You can access and write data in repositories on huggingface.co using SSH (Secure Shell Protocol). When you connect via SSH, you authenticate using a private key file on your local machine.

Some actions, such as pushing changes, or cloning private repositories, will require you to upload your SSH public key to your account on huggingface.co.

You can use a pre-existing SSH key, or generate a new one specifically for huggingface.co.

## Checking for existing SSH keys

If you have an existing SSH key, you can use that key to authenticate Git operations over SSH.

SSH keys are usually located under `~/.ssh` on Mac & Linux, and under `C:\Users\<username>\.ssh` on Windows. List files under that directory and look for files of the form:

- id_rsa.pub

- id_ecdsa.pub

- id_ed25519.pub

Those files contain your SSH public key.

If you don't have such a file under `~/.ssh`, you will have to [generate a new key](#generating-a-new-ssh-keypair). Otherwise, you can [add your existing SSH public key(s) to your huggingface.co account](#add-a-ssh-key-to-your-account).
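If you prefer to check from a script rather than listing the directory by hand, the lookup can be sketched with Python's `pathlib`. The helper name is hypothetical, and the sketch assumes keys live in the default `.ssh` folder of your home directory unless another path is passed in.

```python
from pathlib import Path


def find_public_keys(ssh_dir=None):
    """Return the `*.pub` files found in the SSH directory, sorted by name."""
    ssh_dir = Path(ssh_dir) if ssh_dir is not None else Path.home() / ".ssh"
    if not ssh_dir.is_dir():
        return []  # no SSH directory means no existing keys
    return sorted(ssh_dir.glob("*.pub"))


for key_path in find_public_keys():
    print(key_path)
```

An empty result means there is no existing public key in that directory to reuse.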

## Generating a new SSH keypair

If you don't have any SSH keys on your machine, you can use `ssh-keygen` to generate a new SSH key pair (public + private keys):

```

$ ssh-keygen -t ed25519 -C "[email protected]"

```

We recommend entering a passphrase when you are prompted to. A passphrase is an extra layer of security: it is a password that will be prompted whenever you use your SSH key.

Once your new key is generated, add it to your SSH agent with `ssh-add`:

```

$ ssh-add ~/.ssh/id_ed25519

```

If you chose a location other than the default to store your SSH key, replace `~/.ssh/id_ed25519` with the file location you used.

## Add a SSH key to your account

To access private repositories with SSH, or to push changes via SSH, you will need to add your SSH public key to your huggingface.co account. You can manage your SSH keys [in your user settings](https://huggingface.co/settings/keys).

To add a SSH key to your account, click on the "Add SSH key" button.

Then, enter a name for this key (for example, "Personal computer"), and copy and paste the content of your **public** SSH key in the area below. The public key is located in the `~/.ssh/id_XXXX.pub` file you found or generated in the previous steps.

Click on "Add key", and voilà! You have added a SSH key to your huggingface.co account.

## Testing your SSH authentication

Once you have added your SSH key to your huggingface.co account, you can test that the connection works as expected.

In a terminal, run:

```

$ ssh -T [email protected]

```

If you see a message with your username, congrats! Everything went well, you are ready to use git over SSH.

Otherwise, if the message states something like the following, make sure your SSH key is actually used by your SSH agent.

```

Hi anonymous, welcome to Hugging Face.

```

| huggingface/hub-docs/blob/main/docs/hub/security-git-ssh.md |

<!---

Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

# Token classification with LayoutLMv3 (PyTorch version)

This directory contains a script, `run_funsd_cord.py`, that can be used to fine-tune (or evaluate) LayoutLMv3 on form understanding datasets, such as [FUNSD](https://guillaumejaume.github.io/FUNSD/) and [CORD](https://github.com/clovaai/cord).

The script `run_funsd_cord.py` leverages the 🤗 Datasets library and the Trainer API. You can easily customize it to your needs.

## Fine-tuning on FUNSD

Fine-tuning LayoutLMv3 for token classification on [FUNSD](https://guillaumejaume.github.io/FUNSD/) can be done as follows:

```bash
python run_funsd_cord.py \
  --model_name_or_path microsoft/layoutlmv3-base \
  --dataset_name funsd \
  --output_dir layoutlmv3-test \
  --do_train \
  --do_eval \
  --max_steps 1000 \
  --evaluation_strategy steps \
  --eval_steps 100 \
  --learning_rate 1e-5 \
  --load_best_model_at_end \
  --metric_for_best_model "eval_f1" \
  --push_to_hub \
  --push_to_hub_model_id layoutlmv3-finetuned-funsd
```

👀 The resulting model can be found here: https://huggingface.co/nielsr/layoutlmv3-finetuned-funsd. By specifying the `push_to_hub` flag, the model gets uploaded automatically to the hub (regularly), together with a model card, which includes metrics such as precision, recall and F1. Note that you can easily update the model card, as it's just a README file of the respective repo on the hub.

There's also the "Training metrics" [tab](https://huggingface.co/nielsr/layoutlmv3-finetuned-funsd/tensorboard), which shows Tensorboard logs over the course of training. Pretty neat, huh?
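For readers unfamiliar with the metrics reported in the model card, the sketch below shows how precision, recall and F1 relate to each other. It is an illustration only: the function name and the counts are hypothetical, and the script's own evaluation code (not this snippet) produces the numbers on the Hub.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Compute precision, recall and F1 from raw counts (0.0 when undefined)."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


# Hypothetical counts: 6 correct predictions, 2 spurious ones, 4 missed ones.
p, r, f1 = precision_recall_f1(6, 2, 4)
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.75 0.6 0.667
```

Because F1 is the harmonic mean of precision and recall, it sits closer to the lower of the two, which is why `eval_f1` is a reasonable single number for `--metric_for_best_model`.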

## Fine-tuning on CORD

Fine-tuning LayoutLMv3 for token classification on [CORD](https://github.com/clovaai/cord) can be done as follows:

```bash
python run_funsd_cord.py \
  --model_name_or_path microsoft/layoutlmv3-base \
  --dataset_name cord \
  --output_dir layoutlmv3-test \
  --do_train \
  --do_eval \
  --max_steps 1000 \
  --evaluation_strategy steps \
  --eval_steps 100 \
  --learning_rate 5e-5 \
  --load_best_model_at_end \
  --metric_for_best_model "eval_f1" \
  --push_to_hub \
  --push_to_hub_model_id layoutlmv3-finetuned-cord
```

👀 The resulting model can be found here: https://huggingface.co/nielsr/layoutlmv3-finetuned-cord. Note that a model card gets generated automatically in case you specify the `push_to_hub` flag. | huggingface/transformers/blob/main/examples/research_projects/layoutlmv3/README.md |

State in Blocks

We covered [State in Interfaces](https://gradio.app/interface-state) in an earlier guide. This guide takes a look at state in Blocks, which works mostly the same.

## Global State

Global state in Blocks works the same as in Interface. Any variable created outside a function call is a reference shared between all users.
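As a rough illustration of what "shared between all users" means, the plain-Python sketch below keeps a list at module level and updates it from a function, the way an event handler would. The names `scores` and `track_score` are chosen for this example only.

```python
# Module-level variable: created once when the app starts, shared by everyone.
scores = []


def track_score(score):
    """Append a score to the shared list and return the three highest so far."""
    scores.append(score)
    return sorted(scores, reverse=True)[:3]


# Two different "users" calling the same function see each other's data.
first_user_view = track_score(10)
second_user_view = track_score(25)
print(first_user_view)   # [10]
print(second_user_view)  # [25, 10]
```

This is convenient for things like a global leaderboard, but it is the wrong tool for per-user data, which is what session state is for.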

## Session State

Gradio supports session **state**, where data persists across multiple submits within a page session, in Blocks apps as well. To reiterate, session data is _not_ shared between different users of your model. To store data in a session state, you need to do three things:

1. Create a `gr.State()` object. If there is a default value to this stateful object, pass that into the constructor.

2. In the event listener, put the `State` object as an input and output.

3. In the event listener function, add the variable to the input parameters and the return value.

Let's take a look at a game of hangman.

$code_hangman

$demo_hangman

Let's see how we do each of the 3 steps listed above in this game:

1. We store the used letters in `used_letters_var`. In the constructor of `State`, we set the initial value of this to `[]`, an empty list.

2. In `btn.click()`, we have a reference to `used_letters_var` in both the inputs and outputs.

3. In `guess_letter`, we pass the value of this `State` to `used_letters`, and then return an updated value of this `State` in the return statement.
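The three steps can also be seen in a much smaller app than hangman. The sketch below is a simplified stand-in, not the hangman demo itself: `add_guess` and `build_demo` are hypothetical names, and Gradio is imported inside `build_demo` only so that the handler can be tried without launching anything.

```python
def add_guess(letter, used_letters):
    """Event handler: receives the session's list and returns the updated list."""
    # Step 3: the state value arrives as a parameter and is returned as an output.
    used_letters = used_letters + [letter]  # build a new list instead of mutating
    return ", ".join(used_letters), used_letters


def build_demo():
    import gradio as gr

    with gr.Blocks() as demo:
        used_letters_var = gr.State([])  # step 1: State with a default value
        letter = gr.Textbox(label="Guess a letter")
        history = gr.Textbox(label="Letters used so far")
        btn = gr.Button("Guess")
        btn.click(  # step 2: the State object is both an input and an output
            add_guess,
            inputs=[letter, used_letters_var],
            outputs=[history, used_letters_var],
        )
    return demo


# To run the app locally: build_demo().launch()
```

Each browser session gets its own copy of the list, so two users guessing at the same time do not see each other's letters.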

With more complex apps, you will likely have many State variables storing session state in a single Blocks app.

Learn more about `State` in the [docs](https://gradio.app/docs#state).

| gradio-app/gradio/blob/main/guides/03_building-with-blocks/03_state-in-blocks.md |

How to Use the Plot Component for Maps

Related spaces:

Tags: PLOTS, MAPS

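## Introduction

This guide explains how to use Gradio's `Plot` component to plot geographical data on a map. The `Plot` component works with Matplotlib, Bokeh, and Plotly. In this guide, we will use Plotly, which lets developers easily create all sorts of maps from their geographical data. See [here](https://plotly.com/python/maps/) for some examples.

## Overview

We will use the New York City Airbnb dataset, which is hosted on Kaggle [here](https://www.kaggle.com/datasets/dgomonov/new-york-city-airbnb-open-data). I have also uploaded it to the Hugging Face Hub as a dataset [here](https://huggingface.co/datasets/gradio/NYC-Airbnb-Open-Data) for easier use and download. Using this data, we will plot Airbnb locations on a map and allow filtering by price and location. Below is the demo we will build. ⚡️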

$demo_map_airbnb

## Step 1 - Loading CSV data 💾

Let's start by loading the New York City Airbnb data from the Hugging Face Hub.

```python
from datasets import load_dataset

dataset = load_dataset("gradio/NYC-Airbnb-Open-Data", split="train")
df = dataset.to_pandas()

def filter_map(min_price, max_price, boroughs):
    new_df = df[(df['neighbourhood_group'].isin(boroughs)) &
                (df['price'] > min_price) & (df['price'] < max_price)]
    names = new_df["name"].tolist()
    prices = new_df["price"].tolist()
    text_list = [(names[i], prices[i]) for i in range(0, len(names))]
```

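In the code above, we first load the CSV data into a pandas dataframe. We then define a function that will serve as the prediction function for the Gradio app. It takes the minimum price, the maximum price, and a list of boroughs to filter on. We use the passed-in values (`min_price`, `max_price`, and the list of boroughs) to filter the dataframe and create `new_df`. Next, we build `text_list`, which holds the name and price of each Airbnb, to use as labels on the map.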
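To make the filter semantics concrete, here is the same logic in plain Python on a handful of made-up rows. This is a sketch for illustration: the listings and the `filter_listings` helper are hypothetical, and it uses list comprehensions rather than pandas so it can be run without the dataset.

```python
# Hypothetical rows with the same column names as the dataset.
listings = [
    {"name": "Loft", "price": 300, "neighbourhood_group": "Brooklyn"},
    {"name": "Studio", "price": 250, "neighbourhood_group": "Queens"},
    {"name": "Penthouse", "price": 900, "neighbourhood_group": "Manhattan"},
    {"name": "Townhouse", "price": 600, "neighbourhood_group": "Queens"},
]


def filter_listings(rows, min_price, max_price, boroughs):
    """Keep rows in the chosen boroughs whose price is strictly inside the range."""
    return [
        (row["name"], row["price"])
        for row in rows
        if row["neighbourhood_group"] in boroughs
        and min_price < row["price"] < max_price
    ]


text_list = filter_listings(listings, 250, 1000, ["Queens", "Brooklyn"])
print(text_list)  # [('Loft', 300), ('Townhouse', 600)]
```

Note that both comparisons are strict, so a listing priced exactly at the minimum (the "Studio" row here) is excluded, just as it would be by `df['price'] > min_price`.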

## Step 2 - Map figure 🌐

Plotly makes it easy to work with maps. Let's look at the code below to see how to create a map figure.

```python
import plotly.graph_objects as go

# In the full demo, this code continues the body of `filter_map`,
# where `new_df` and `text_list` are defined.
fig = go.Figure(go.Scattermapbox(
    customdata=text_list,
    lat=new_df['latitude'].tolist(),
    lon=new_df['longitude'].tolist(),
    mode='markers',
    marker=go.scattermapbox.Marker(
        size=6
    ),
    hoverinfo="text",
    hovertemplate='<b>Name</b>: %{customdata[0]}<br><b>Price</b>: $%{customdata[1]}'
))

fig.update_layout(
    mapbox_style="open-street-map",
    hovermode='closest',
    mapbox=dict(
        bearing=0,
        center=go.layout.mapbox.Center(
            lat=40.67,
            lon=-73.90
        ),
        pitch=0,
        zoom=9
    ),
)
```

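In the code above, we create a scatter plot by passing in lists of latitudes and longitudes. We also pass in the names and prices as custom data, so that extra information is shown when hovering over each marker. Next, we use `update_layout` to specify other map settings, such as zoom and centering.

More information on scatter plots with Mapbox and Plotly is available [here](https://plotly.com/python/scattermapbox/).

## Step 3 - Gradio app ⚡️

We will use two `gr.Number` components and a `gr.CheckboxGroup` component to let users specify the price range and boroughs. We will then use the `gr.Plot` component as the output for the Plotly + Mapbox map we created earlier.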

```python
import gradio as gr

with gr.Blocks() as demo:
    with gr.Column():
        with gr.Row():
            min_price = gr.Number(value=250, label="Minimum Price")
            max_price = gr.Number(value=1000, label="Maximum Price")
        boroughs = gr.CheckboxGroup(choices=["Queens", "Brooklyn", "Manhattan", "Bronx", "Staten Island"], value=["Queens", "Brooklyn"], label="Select Boroughs:")
        btn = gr.Button(value="Update Filter")
        map = gr.Plot()
    # Update the map when the demo first loads and whenever the button is clicked.
    demo.load(filter_map, [min_price, max_price, boroughs], map)
    btn.click(filter_map, [min_price, max_price, boroughs], map)
```

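We lay out these components with `gr.Column` and `gr.Row`, and add event triggers for when the demo first loads and when the "Update Filter" button is clicked, so that the map is refreshed with the new filters.

This is what the full demo code looks like: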

$code_map_airbnb

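## Step 4 - Deployment 🤗

If you run the code above, your app will start running locally.

To get a temporary shareable link, you can pass the `share=True` parameter to `launch`.

But what if you want a permanent deployment solution?

Let's deploy our Gradio app to the free Hugging Face Spaces platform.

If you haven't used Spaces before, follow the previous guide [here](/using_hugging_face_integrations).

## Conclusion 🎉

And you're all done! That's all the code you need to build a map demo.

Here's a link to the demo: [Map demo](https://huggingface.co/spaces/gradio/map_airbnb) and the [complete code](https://huggingface.co/spaces/gradio/map_airbnb/blob/main/run.py) (on Hugging Face Spaces)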

| gradio-app/gradio/blob/main/guides/cn/05_tabular-data-science-and-plots/plot-component-for-maps.md |

SE-ResNet

**SE ResNet** is a variant of a [ResNet](https://www.paperswithcode.com/method/resnet) that employs [squeeze-and-excitation blocks](https://paperswithcode.com/method/squeeze-and-excitation-block) to enable the network to perform dynamic channel-wise feature recalibration.
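To give a feel for what "channel-wise feature recalibration" means, here is a minimal NumPy sketch of a single squeeze-and-excitation step. It is not timm's implementation: the function name, the random weights, and the reduction ratio of 4 are assumptions for illustration, and biases are omitted for brevity.

```python
import numpy as np


def squeeze_excite(features, w_reduce, w_expand):
    """Apply squeeze-and-excitation to a (channels, height, width) feature map."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = features.mean(axis=(1, 2))               # shape (C,)
    # Excitation: a bottleneck (ReLU, then sigmoid) produces per-channel gates.
    hidden = np.maximum(w_reduce @ squeezed, 0.0)       # shape (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w_expand @ hidden)))  # shape (C,), values in [0, 1]
    # Recalibration: scale every channel by its gate.
    return features * gates[:, None, None]


rng = np.random.default_rng(0)
channels, reduction = 8, 4
x = rng.normal(size=(channels, 5, 5))
w1 = rng.normal(size=(channels // reduction, channels))  # hypothetical weights
w2 = rng.normal(size=(channels, channels // reduction))
y = squeeze_excite(x, w1, w2)
print(y.shape)  # (8, 5, 5)
```

The output has the same shape as the input; only the relative strength of each channel changes, and the gates depend on the input itself, which is what makes the recalibration dynamic.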

## How do I use this model on an image?

To load a pretrained model:

```py
>>> import timm
>>> model = timm.create_model('seresnet152d', pretrained=True)
>>> model.eval()
```

To load and preprocess the image:

```py
>>> import urllib.request
>>> from PIL import Image
>>> from timm.data import resolve_data_config
>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)
>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")
>>> urllib.request.urlretrieve(url, filename)
>>> img = Image.open(filename).convert('RGB')
>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension
```

To get the model predictions:

```py
>>> import torch
>>> with torch.no_grad():
...     out = model(tensor)
>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)
>>> print(probabilities.shape)
>>> # prints: torch.Size([1000])
```
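The softmax call above turns the raw model outputs (logits) into probabilities that sum to 1. As a rough sketch of what it computes, here is a plain-Python version on three made-up logits; it mirrors the standard formula and is not PyTorch's implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


probs = softmax([2.0, 1.0, 0.1])  # hypothetical logits for three classes
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The ordering of the classes is unchanged by softmax, so the class with the largest logit is also the class with the highest probability.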

To get the top-5 predictions class names:

```py
>>> # Get imagenet class mappings
>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")
>>> urllib.request.urlretrieve(url, filename)
>>> with open("imagenet_classes.txt", "r") as f:
...     categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image
>>> top5_prob, top5_catid = torch.topk(probabilities, 5)
>>> for i in range(top5_prob.size(0)):
...     print(categories[top5_catid[i]], top5_prob[i].item())
>>> # prints class names and probabilities like:
>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]
```

Replace the model name with the variant you want to use, e.g. `seresnet152d`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('seresnet152d', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX
@misc{hu2019squeezeandexcitation,
      title={Squeeze-and-Excitation Networks},
      author={Jie Hu and Li Shen and Samuel Albanie and Gang Sun and Enhua Wu},
      year={2019},
      eprint={1709.01507},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

<!--
Type: model-index
Collections:
- Name: SE ResNet
  Paper:
    Title: Squeeze-and-Excitation Networks
    URL: https://paperswithcode.com/paper/squeeze-and-excitation-networks
Models:
- Name: seresnet152d
  In Collection: SE ResNet
  Metadata:
    FLOPs: 20161904304
    Parameters: 66840000
    File Size: 268144497
    Architecture:
    - 1x1 Convolution
    - Batch Normalization
    - Bottleneck Residual Block
    - Convolution
    - Global Average Pooling
    - Max Pooling
    - ReLU
    - Residual Block
    - Residual Connection
    - Softmax
    - Squeeze-and-Excitation Block
    Tasks:
    - Image Classification
    Training Techniques:
    - Label Smoothing
    - SGD with Momentum
    - Weight Decay
    Training Data:
    - ImageNet
    Training Resources: 8x NVIDIA Titan X GPUs
    ID: seresnet152d
    LR: 0.6
    Epochs: 100
    Layers: 152
    Dropout: 0.2
    Crop Pct: '0.94'
    Momentum: 0.9
    Batch Size: 1024
    Image Size: '256'
    Interpolation: bicubic
  Code: https://github.com/rwightman/pytorch-image-models/blob/a7f95818e44b281137503bcf4b3e3e94d8ffa52f/timm/models/resnet.py#L1206
  Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/seresnet152d_ra2-04464dd2.pth
  Results:
  - Task: Image Classification
    Dataset: ImageNet
    Metrics:
      Top 1 Accuracy: 83.74%
      Top 5 Accuracy: 96.77%
- Name: seresnet50
  In Collection: SE ResNet
  Metadata:
    FLOPs: 5285062320
    Parameters: 28090000
    File Size: 112621903
    Architecture:
    - 1x1 Convolution
    - Batch Normalization
    - Bottleneck Residual Block
    - Convolution
    - Global Average Pooling
    - Max Pooling
    - ReLU
    - Residual Block
    - Residual Connection
    - Softmax
    - Squeeze-and-Excitation Block
    Tasks:
    - Image Classification
    Training Techniques:
    - Label Smoothing
    - SGD with Momentum
    - Weight Decay
    Training Data:
    - ImageNet
    Training Resources: 8x NVIDIA Titan X GPUs
    ID: seresnet50
    LR: 0.6
    Epochs: 100
    Layers: 50
    Dropout: 0.2
    Crop Pct: '0.875'
    Momentum: 0.9
    Batch Size: 1024
    Image Size: '224'
    Interpolation: bicubic
  Code: https://github.com/rwightman/pytorch-image-models/blob/a7f95818e44b281137503bcf4b3e3e94d8ffa52f/timm/models/resnet.py#L1180
  Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/seresnet50_ra_224-8efdb4bb.pth
  Results:
  - Task: Image Classification
    Dataset: ImageNet
    Metrics:
      Top 1 Accuracy: 80.26%
      Top 5 Accuracy: 95.07%
--> | huggingface/pytorch-image-models/blob/main/hfdocs/source/models/se-resnet.mdx |

---
title: poseval
emoji: 🤗
colorFrom: blue
colorTo: red
sdk: gradio
sdk_version: 3.19.1
app_file: app.py
pinned: false
tags:
- evaluate
- metric
description: >-
  The poseval metric can be used to evaluate POS taggers. Since seqeval does not work well with POS data
  that is not in IOB format, poseval is an alternative. It treats each token in the dataset as an independent
  observation and computes the precision, recall and F1-score irrespective of sentences. It uses scikit-learn's
  classification report to compute the scores.
---

# Metric Card for poseval

## Metric description

The poseval metric can be used to evaluate POS taggers. Since seqeval does not work well with POS data that is not in IOB format (see e.g. [here](https://stackoverflow.com/questions/71327693/how-to-disable-seqeval-label-formatting-for-pos-tagging)), poseval is an alternative. It treats each token in the dataset as an independent observation and computes the precision, recall and F1-score irrespective of sentences. It uses scikit-learn's [classification report](https://scikit-learn.org/stable/modules/generated/sklearn.metrics.classification_report.html) to compute the scores.

## How to use

Poseval produces labelling scores along with its sufficient statistics from a source against references.

It takes two mandatory arguments:

`predictions`: a list of lists of predicted labels, i.e. estimated targets as returned by a tagger.

`references`: a list of lists of reference labels, i.e. the ground truth/target values.

It can also take several optional arguments:

`zero_division`: Which value to substitute as a metric value when encountering zero division. Should be one of [`0`,`1`,`"warn"`]. `"warn"` acts as `0`, but the warning is raised.

```python
>>> import evaluate
>>> predictions = [['INTJ', 'ADP', 'PROPN', 'NOUN', 'PUNCT', 'INTJ', 'ADP', 'PROPN', 'VERB', 'SYM']]
>>> references = [['INTJ', 'ADP', 'PROPN', 'PROPN', 'PUNCT', 'INTJ', 'ADP', 'PROPN', 'PROPN', 'SYM']]
>>> poseval = evaluate.load("poseval")
>>> results = poseval.compute(predictions=predictions, references=references)
>>> print(list(results.keys()))
['ADP', 'INTJ', 'NOUN', 'PROPN', 'PUNCT', 'SYM', 'VERB', 'accuracy', 'macro avg', 'weighted avg']
>>> print(results["accuracy"])
0.8
>>> print(results["PROPN"]["recall"])
0.5
```
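The `zero_division` argument matters when a score's denominator is zero, for example the precision of a tag that was never predicted. The sketch below illustrates that case in plain Python. It is a simplified model of the behaviour, not the library's code: `precision_for_label` is a hypothetical helper, and it only handles the numeric values `0` and `1`, not `"warn"`.

```python
def precision_for_label(predictions, references, label, zero_division=0):
    """Precision of one tag over flat token lists, with a fallback for 0/0."""
    predicted_positions = [i for i, tag in enumerate(predictions) if tag == label]
    if not predicted_positions:
        # The tag was never predicted, so precision is undefined (0 / 0).
        return float(zero_division)
    correct = sum(1 for i in predicted_positions if references[i] == label)
    return correct / len(predicted_positions)


references = ["NOUN", "VERB", "NOUN"]
predictions = ["NOUN", "NOUN", "NOUN"]  # "VERB" is never predicted
print(precision_for_label(predictions, references, "VERB"))                   # 0.0
print(precision_for_label(predictions, references, "VERB", zero_division=1))  # 1.0
```

Whichever value you choose also feeds into the macro and weighted averages, so it can noticeably shift the summary scores on small evaluation sets.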

## Output values

This metric returns a classification report as a dictionary with a summary of scores, both overall and per type:

Overall (weighted and macro avg):

`accuracy`: the average [accuracy](https://huggingface.co/metrics/accuracy), on a scale between 0.0 and 1.0.

`precision`: the average [precision](https://huggingface.co/metrics/precision), on a scale between 0.0 and 1.0.

`recall`: the average [recall](https://huggingface.co/metrics/recall), on a scale between 0.0 and 1.0.

`f1`: the average [F1 score](https://huggingface.co/metrics/f1), which is the harmonic mean of the precision and recall. It also has a scale of 0.0 to 1.0.

Per type (e.g. `NOUN`, `VERB`, `PROPN`, ...):

`precision`: the average [precision](https://huggingface.co/metrics/precision), on a scale between 0.0 and 1.0.

`recall`: the average [recall](https://huggingface.co/metrics/recall), on a scale between 0.0 and 1.0.

`f1`: the average [F1 score](https://huggingface.co/metrics/f1), on a scale between 0.0 and 1.0.

## Examples

```python
>>> import evaluate
>>> predictions = [['INTJ', 'ADP', 'PROPN', 'NOUN', 'PUNCT', 'INTJ', 'ADP', 'PROPN', 'VERB', 'SYM']]
>>> references = [['INTJ', 'ADP', 'PROPN', 'PROPN', 'PUNCT', 'INTJ', 'ADP', 'PROPN', 'PROPN', 'SYM']]
>>> poseval = evaluate.load("poseval")
>>> results = poseval.compute(predictions=predictions, references=references)
>>> print(list(results.keys()))
['ADP', 'INTJ', 'NOUN', 'PROPN', 'PUNCT', 'SYM', 'VERB', 'accuracy', 'macro avg', 'weighted avg']
>>> print(results["accuracy"])
0.8
>>> print(results["PROPN"]["recall"])
0.5
```

## Limitations and bias

In contrast to [seqeval](https://github.com/chakki-works/seqeval), the poseval metric treats each token independently and computes the classification report over all concatenated sequences.
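To illustrate what "over all concatenated sequences" implies, the sketch below flattens two sentences into one token stream before scoring. It is a plain-Python approximation, not the metric's actual code (which relies on scikit-learn): `token_level_report` is a hypothetical helper, and the data is the example from this card split into two sentences.

```python
from collections import Counter
from itertools import chain


def token_level_report(predictions, references):
    """Per-tag precision/recall and overall accuracy over the flattened tokens."""
    flat_pred = list(chain.from_iterable(predictions))
    flat_ref = list(chain.from_iterable(references))
    true_pos = Counter(p for p, r in zip(flat_pred, flat_ref) if p == r)
    pred_count = Counter(flat_pred)
    ref_count = Counter(flat_ref)
    report = {}
    for tag in sorted(set(flat_pred) | set(flat_ref)):
        precision = true_pos[tag] / pred_count[tag] if pred_count[tag] else 0.0
        recall = true_pos[tag] / ref_count[tag] if ref_count[tag] else 0.0
        report[tag] = {"precision": precision, "recall": recall}
    report["accuracy"] = sum(true_pos.values()) / len(flat_ref)
    return report


predictions = [["INTJ", "ADP", "PROPN", "NOUN", "PUNCT"],
               ["INTJ", "ADP", "PROPN", "VERB", "SYM"]]
references = [["INTJ", "ADP", "PROPN", "PROPN", "PUNCT"],
              ["INTJ", "ADP", "PROPN", "PROPN", "SYM"]]
report = token_level_report(predictions, references)
print(report["accuracy"])         # 0.8
print(report["PROPN"]["recall"])  # 0.5
```

Sentence boundaries play no role in the result: splitting the ten tokens into two sentences gives the same scores as the single-sentence example, which is the behaviour to keep in mind when sentence-level quality matters.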

## Citation

```bibtex
@article{scikit-learn,
  title={Scikit-learn: Machine Learning in {P}ython},
  author={Pedregosa, F. and Varoquaux, G. and Gramfort, A. and Michel, V.
          and Thirion, B. and Grisel, O. and Blondel, M. and Prettenhofer, P.
          and Weiss, R. and Dubourg, V. and Vanderplas, J. and Passos, A. and
          Cournapeau, D. and Brucher, M. and Perrot, M. and Duchesnay, E.},
  journal={Journal of Machine Learning Research},
  volume={12},
  pages={2825--2830},
  year={2011}
}
```

## Further References

- [README for seqeval at GitHub](https://github.com/chakki-works/seqeval)

- [Classification report](https://scikit-learn.org/stable/modules/generated/sklearn.metrics.classification_report.html)

- [Issues with seqeval](https://stackoverflow.com/questions/71327693/how-to-disable-seqeval-label-formatting-for-pos-tagging) | huggingface/evaluate/blob/main/metrics/poseval/README.md |

---
title: "Large Language Models: A New Moore's Law?"
thumbnail: /blog/assets/33_large_language_models/01_model_size.jpg
authors:
- user: juliensimon
---

# Large Language Models: A New Moore's Law?

A few days ago, Microsoft and NVIDIA [introduced](https://www.microsoft.com/en-us/research/blog/using-deepspeed-and-megatron-to-train-megatron-turing-nlg-530b-the-worlds-largest-and-most-powerful-generative-language-model/) Megatron-Turing NLG 530B, a Transformer-based model hailed as "*the world’s largest and most powerful generative language model*."

This is an impressive show of Machine Learning engineering, no doubt about it. Yet, should we be excited about this mega-model trend? I, for one, am not. Here's why.

<kbd>

<img src="assets/33_large_language_models/01_model_size.jpg">

</kbd>

### This is your Brain on Deep Learning

Researchers estimate that the human brain contains an average of [86 billion neurons](https://pubmed.ncbi.nlm.nih.gov/19226510/) and 100 trillion synapses. It's safe to assume that not all of them are dedicated to language either. Interestingly, GPT-4 is [expected](https://www.wired.com/story/cerebras-chip-cluster-neural-networks-ai/) to have about 100 trillion parameters... As crude as this analogy is, shouldn't we wonder whether building language models that are about the size of the human brain is the best long-term approach?

Of course, our brain is a marvelous device, produced by millions of years of evolution, while Deep Learning models are only a few decades old. Still, our intuition should tell us that something doesn't compute (pun intended).

### Deep Learning, Deep Pockets?

As you would expect, training a 530-billion parameter model on humongous text datasets requires a fair bit of infrastructure. In fact, Microsoft and NVIDIA used hundreds of DGX A100 multi-GPU servers. At $199,000 apiece, and factoring in networking equipment, hosting costs, etc., anyone looking to replicate this experiment would have to spend close to $100 million. Want fries with that?

Seriously, which organizations have business use cases that would justify spending $100 million on Deep Learning infrastructure? Or even $10 million? Very few. So who are these models for, really?

### That Warm Feeling is your GPU Cluster

For all its engineering brilliance, training Deep Learning models on GPUs is a brute force technique. According to the spec sheet, each DGX server can consume up to 6.5 kilowatts. Of course, you'll need at least as much cooling power in your datacenter (or your server closet). Unless you're the Starks and need to keep Winterfell warm in winter, that's another problem you'll have to deal with.

In addition, as public awareness grows on climate and social responsibility issues, organizations need to account for their carbon footprint. According to this 2019 [study](https://arxiv.org/pdf/1906.02243.pdf) from the University of Massachusetts, "*training BERT on GPU is roughly equivalent to a trans-American flight*".

BERT-Large has 340 million parameters. One can only extrapolate what the footprint of Megatron-Turing could be... People who know me wouldn't call me a bleeding-heart environmentalist. Still, some numbers are hard to ignore.

### So?

Am I excited by Megatron-Turing NLG 530B and whatever beast is coming next? No. Do I think that the (relatively small) benchmark improvement is worth the added cost, complexity and carbon footprint? No. Do I think that building and promoting these huge models is helping organizations understand and adopt Machine Learning? No.

I'm left wondering what's the point of it all. Science for the sake of science? Good old marketing? Technological supremacy? Probably a bit of each. I'll leave them to it, then.

Instead, let me focus on pragmatic and actionable techniques that you can all use to build high quality Machine Learning solutions.

### Use Pretrained Models

In the vast majority of cases, you won't need a custom model architecture. Maybe you'll *want* a custom one (which is a different thing), but there be dragons. Experts only!

A good starting point is to look for [models](https://huggingface.co/models) that have been pretrained for the task you're trying to solve (say, [summarizing English text](https://huggingface.co/models?language=en&pipeline_tag=summarization&sort=downloads)).

Then, you should quickly try out a few models to predict your own data. If metrics tell you that one works well enough, you're done! If you need a little more accuracy, you should consider fine-tuning the model (more on this in a minute).

### Use Smaller Models

When evaluating models, you should pick the smallest one that can deliver the accuracy you need. It will predict faster and require fewer hardware resources for training and inference. Frugality goes a long way.

It's nothing new either. Computer Vision practitioners will remember when [SqueezeNet](https://arxiv.org/abs/1602.07360) came out in 2016, achieving a 50x reduction in model size compared to [AlexNet](https://papers.nips.cc/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html), while meeting or exceeding its accuracy. How clever that was!

Downsizing efforts are also under way in the Natural Language Processing community, using transfer learning techniques such as [knowledge distillation](https://en.wikipedia.org/wiki/Knowledge_distillation). [DistilBERT](https://arxiv.org/abs/1910.01108) is perhaps its most widely known achievement. Compared to the original BERT model, it retains 97% of language understanding while being 40% smaller and 60% faster. You can try it [here](https://huggingface.co/distilbert-base-uncased). The same approach has been applied to other models, such as Facebook's [BART](https://arxiv.org/abs/1910.13461), and you can try DistilBART [here](https://huggingface.co/models?search=distilbart).

Recent models from the [Big Science](https://bigscience.huggingface.co/) project are also very impressive. As visible in this graph included in the [research paper](https://arxiv.org/abs/2110.08207), their T0 model outperforms GPT-3 on many tasks while being 16x smaller.

<kbd>

<img src="assets/33_large_language_models/02_t0.png">

</kbd>

You can try T0 [here](https://huggingface.co/bigscience/T0pp). This is the kind of research we need more of!

### Fine-Tune Models

If you need to specialize a model, there should be very few reasons to train it from scratch. Instead, you should fine-tune it, that is to say train it only for a few epochs on your own data. If you're short on data, maybe one of these [datasets](https://huggingface.co/datasets) can get you started.

You guessed it, that's another way to do transfer learning, and it'll help you save on everything!

* Less data to collect, store, clean and annotate,

* Faster experiments and iterations,

* Fewer resources required in production.

In other words: save time, save money, save hardware resources, save the world!

If you need a tutorial, the Hugging Face [course](https://huggingface.co/course) will get you started in no time.

### Use Cloud-Based Infrastructure

Like them or not, cloud companies know how to build efficient infrastructure. Sustainability studies show that cloud-based infrastructure is more energy and carbon efficient than the alternative: see [AWS](https://sustainability.aboutamazon.com/environment/the-cloud), [Azure](https://azure.microsoft.com/en-us/global-infrastructure/sustainability), and [Google](https://cloud.google.com/sustainability). Earth.org [says](https://earth.org/environmental-impact-of-cloud-computing/) that while cloud infrastructure is not perfect, "[*it's] more energy efficient than the alternative and facilitates environmentally beneficial services and economic growth.*"

Cloud certainly has a lot going for it when it comes to ease of use, flexibility and pay as you go. It's also a little greener than you probably thought. If you're short on GPUs, why not try fine-tuning your Hugging Face models on [Amazon SageMaker](https://aws.amazon.com/sagemaker/), AWS' managed service for Machine Learning? We've got [plenty of examples](https://huggingface.co/docs/sagemaker/train) for you.

### Optimize Your Models

From compilers to virtual machines, software engineers have long used tools that automatically optimize their code for whatever hardware they're running on.

However, the Machine Learning community is still struggling with this topic, and for good reason. Optimizing models for size and speed is a devilishly complex task, which involves techniques such as:

* Specialized hardware that speeds up training ([Graphcore](https://www.graphcore.ai/), [Habana](https://habana.ai/)) and inference ([Google TPU](https://cloud.google.com/tpu), [AWS Inferentia](https://aws.amazon.com/machine-learning/inferentia/)).

* Pruning: remove model parameters that have little or no impact on the predicted outcome.

* Fusion: merge model layers (say, convolution and activation).

* Quantization: store model parameters in smaller values (say, 8 bits instead of 32 bits).
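To make the last item less abstract, here is a toy sketch of 8-bit quantization in plain Python. It assumes the simplest possible scheme (one symmetric scale shared by all weights); the function names and the weights are made up, and real toolchains use more refined variants.

```python
def quantize_int8(weights):
    """Map floats to signed 8-bit integers using one shared, symmetric scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [0.82, -0.31, 0.05, -1.27, 0.0]  # hypothetical 32-bit parameters
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [82, -31, 5, -127, 0]
```

Each parameter now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per value; whether that error is acceptable is exactly what you need to measure on your own task.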

Fortunately, automated tools are starting to appear, such as the [Optimum](https://huggingface.co/hardware) open source library, and [Infinity](https://huggingface.co/infinity), a containerized solution that delivers Transformers accuracy at 1-millisecond latency.

### Conclusion

Large language model size has been increasing 10x every year for the last few years. This is starting to look like another [Moore's Law](https://en.wikipedia.org/wiki/Moore%27s_law).

We've been there before, and we should know that this road leads to diminishing returns, higher cost, more complexity, and new risks. Exponentials tend not to end well. Remember [Meltdown and Spectre](https://meltdownattack.com/)? Do we want to find out what that looks like for AI?

Instead of chasing trillion-parameter models (place your bets), wouldn't we all be better off if we built practical and efficient solutions that all developers can use to solve real-world problems?

*Interested in how Hugging Face can help your organization build and deploy production-grade Machine Learning solutions? Get in touch at [[email protected]](mailto:[email protected]) (no recruiters, no sales pitches, please).*

| huggingface/blog/blob/main/large-language-models.md |

<!--Copyright 2021 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be rendered properly in your Markdown viewer.

-->

# VisionTextDualEncoder

## Overview

The [`VisionTextDualEncoderModel`] can be used to initialize a vision-text dual encoder model with any pretrained vision autoencoding model as the vision encoder (*e.g.* [ViT](vit), [BEiT](beit), [DeiT](deit)) and any pretrained text autoencoding model as the text encoder (*e.g.* [RoBERTa](roberta), [BERT](bert)). Two projection layers are added on top of both the vision and text encoder to project the output embeddings to a shared latent space. The projection layers are randomly initialized, so the model should be fine-tuned on a downstream task. This model can be used to align the vision-text embeddings using CLIP-like contrastive image-text training and can then be used for zero-shot vision tasks such as image classification or retrieval.

In [LiT: Zero-Shot Transfer with Locked-image Text Tuning](https://arxiv.org/abs/2111.07991) it is shown how leveraging pre-trained (locked/frozen) image and text models for contrastive learning yields significant improvements on new zero-shot vision tasks such as image classification or retrieval.
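The idea of projecting both encoders into a shared space and training with a CLIP-like contrastive objective can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the model's implementation: the function name, the random features and projection matrices, and the fixed temperature of 0.07 are all hypothetical.

```python
import numpy as np


def clip_style_loss(image_features, text_features, w_image, w_text, temperature=0.07):
    """Symmetric contrastive loss for a batch of matching image/text pairs."""
    # Project each modality into the shared space, then L2-normalize.
    img = image_features @ w_image
    txt = text_features @ w_text
    img = img / np.linalg.norm(img, axis=1, keepdims=True)
    txt = txt / np.linalg.norm(txt, axis=1, keepdims=True)
    logits = img @ txt.T / temperature  # (batch, batch) similarity matrix

    def cross_entropy_diagonal(scores):
        # Matching pairs sit on the diagonal, so the target class of row i is i.
        scores = scores - scores.max(axis=1, keepdims=True)
        log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
        return -np.mean(np.diag(log_probs))

    # Average the image-to-text and text-to-image directions.
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits.T))


rng = np.random.default_rng(0)
batch, vision_dim, text_dim, shared_dim = 4, 6, 5, 3
loss = clip_style_loss(
    rng.normal(size=(batch, vision_dim)),       # stand-in for vision encoder outputs
    rng.normal(size=(batch, text_dim)),         # stand-in for text encoder outputs
    rng.normal(size=(vision_dim, shared_dim)),  # randomly initialized projections
    rng.normal(size=(text_dim, shared_dim)),
)
print(float(loss) > 0.0)  # True
```

With random projections the loss is high, which matches the point above: the projection layers start untrained, so the model only becomes useful for zero-shot tasks after this kind of contrastive fine-tuning.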

## VisionTextDualEncoderConfig

[[autodoc]] VisionTextDualEncoderConfig

## VisionTextDualEncoderProcessor

[[autodoc]] VisionTextDualEncoderProcessor

<frameworkcontent>

<pt>

## VisionTextDualEncoderModel

[[autodoc]] VisionTextDualEncoderModel

- forward

</pt>

<tf>

## TFVisionTextDualEncoderModel

[[autodoc]] TFVisionTextDualEncoderModel

- call

</tf>

<jax>

## FlaxVisionTextDualEncoderModel

[[autodoc]] FlaxVisionTextDualEncoderModel

- __call__

</jax>

</frameworkcontent>

| huggingface/transformers/blob/main/docs/source/en/model_doc/vision-text-dual-encoder.md |

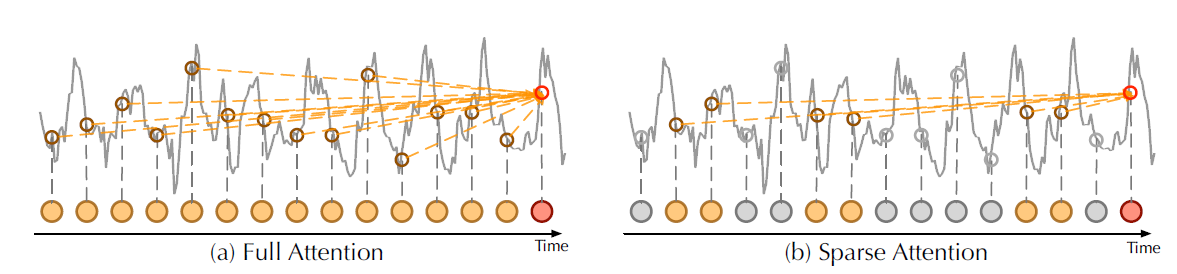

What is dynamic padding? In the "Batching Inputs together" video, we have seen that to be able to group inputs of different lengths in the same batch, we need to add padding tokens to all the short inputs until they are all of the same length. Here for instance, the longest sentence is the third one, and we need to add 5, 2 and 7 pad tokens to the others to have four sentences of the same length.

When dealing with a whole dataset, there are various padding strategies we can apply. The most obvious one is to pad all the elements of the dataset to the same length: the length of the longest sample. This will then give us batches that all have the same shape determined by the maximum sequence length. The downside is that batches composed of short sentences will have a lot of padding tokens, which introduce more computations in the model that we ultimately don't need.

To avoid this, another strategy is to pad the elements when we batch them together, to the longest sentence inside the batch. This way batches composed of short inputs will be smaller than the batch containing the longest sentence in the dataset. This will yield some nice speedup on CPU and GPU. The downside is that all batches will then have different shapes, which slows down training on other accelerators like TPUs.

Let's see how to apply both strategies in practice. We have actually seen how to apply fixed padding in the Datasets Overview video, when we preprocessed the MRPC dataset: after loading the dataset and tokenizer, we applied the tokenization to all the dataset with padding and truncation to make all samples of length 128. As a result, if we pass this dataset to a PyTorch DataLoader, we get batches of shape batch size (here 16) by 128. To apply dynamic padding, we must defer the padding to the batch preparation, so we remove that part from our tokenize function.
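The difference between the two strategies can be sketched in plain Python on a toy dataset of token ids. This is an illustration only: the helper names, the pad id of 0, and the tiny sequences are assumptions, and it does not use the Transformers or PyTorch classes mentioned in the video.

```python
PAD_ID = 0  # hypothetical pad token id


def pad_to(sequences, length, pad_id=PAD_ID):
    """Right-pad every sequence with pad_id up to the given length."""
    return [seq + [pad_id] * (length - len(seq)) for seq in sequences]


def fixed_padding_batches(dataset, batch_size, max_length):
    """Pad the whole dataset to max_length first, then cut it into batches."""
    padded = pad_to(dataset, max_length)
    return [padded[i:i + batch_size] for i in range(0, len(padded), batch_size)]


def dynamic_padding_batches(dataset, batch_size):
    """Cut the dataset into batches first, then pad each batch to its own longest item."""
    batches = []
    for i in range(0, len(dataset), batch_size):
        batch = dataset[i:i + batch_size]
        batches.append(pad_to(batch, max(len(seq) for seq in batch)))
    return batches


dataset = [[5, 6], [7, 8, 9], [1], [2, 3, 4, 5, 6, 7, 8]]
fixed = fixed_padding_batches(dataset, batch_size=2, max_length=7)
dynamic = dynamic_padding_batches(dataset, batch_size=2)
print([len(batch[0]) for batch in fixed])    # [7, 7]
print([len(batch[0]) for batch in dynamic])  # [3, 7]
```

With fixed padding every batch has width 7, while with dynamic padding the first batch only has width 3, which is where the saved computation comes from.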
We still leave the truncation part so that inputs that are longer than the maximum length accepted by the model (usually 512) get truncated to that length. Then we pad our samples dynamically by using a data collator. Those classes in the Transformers library are responsible for applying all the final processing needed before forming a batch; here, DataCollatorWithPadding will pad the samples to the maximum length inside the batch of sentences. We pass it to the PyTorch DataLoader as a collate function, then observe that the batches generated have various lengths, all way below the 128 from before. Dynamic padding will almost always be faster on CPUs and GPUs, so you should apply it if you can. Remember to switch back to fixed padding, however, if you run your training script on TPU or need batches of fixed shapes. | huggingface/course/blob/main/subtitles/en/raw/chapter3/02d_dynamic-padding.md |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Kandinsky 3

Kandinsky 3 was created by [Vladimir Arkhipkin](https://github.com/oriBetelgeuse), [Anastasia Maltseva](https://github.com/NastyaMittseva), [Igor Pavlov](https://github.com/boomb0om), [Andrei Filatov](https://github.com/anvilarth), [Arseniy Shakhmatov](https://github.com/cene555), [Andrey Kuznetsov](https://github.com/kuznetsoffandrey), [Denis Dimitrov](https://github.com/denndimitrov), and [Zein Shaheen](https://github.com/zeinsh).

The description from its GitHub page:

*Kandinsky 3.0 is an open-source text-to-image diffusion model built upon the Kandinsky2-x model family. In comparison to its predecessors, enhancements have been made to the text understanding and visual quality of the model, achieved by increasing the size of the text encoder and Diffusion U-Net models, respectively.*

Its architecture includes 3 main components:

1. [FLAN-UL2](https://huggingface.co/google/flan-ul2), an encoder-decoder model based on the T5 architecture.

2. A new U-Net architecture featuring BigGAN-deep blocks, which doubles the depth while maintaining the same number of parameters.

3. Sber-MoVQGAN, a decoder proven to have superior results in image restoration.

The original codebase can be found at [ai-forever/Kandinsky-3](https://github.com/ai-forever/Kandinsky-3).

<Tip>

Check out the [Kandinsky Community](https://huggingface.co/kandinsky-community) organization on the Hub for the official model checkpoints for tasks like text-to-image, image-to-image, and inpainting.

</Tip>

<Tip>

Make sure to check out the schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-components-across-pipelines) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

## Kandinsky3Pipeline

[[autodoc]] Kandinsky3Pipeline

- all

- __call__

## Kandinsky3Img2ImgPipeline

[[autodoc]] Kandinsky3Img2ImgPipeline

- all

- __call__

| huggingface/diffusers/blob/main/docs/source/en/api/pipelines/kandinsky3.md |

# Datasets server - worker

> Workers that pre-compute and cache the response to /splits, /first-rows, /parquet, /info and /size.

## Configuration

Use environment variables to configure the workers. The prefix of each environment variable gives its scope.

### Uvicorn

The following environment variables configure the Uvicorn server (`WORKER_UVICORN_` prefix), which serves the /healthcheck and /metrics endpoints:

- `WORKER_UVICORN_HOSTNAME`: the hostname. Defaults to `"localhost"`.

- `WORKER_UVICORN_NUM_WORKERS`: the number of uvicorn workers. Defaults to `2`.

- `WORKER_UVICORN_PORT`: the port. Defaults to `8000`.
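
As a minimal sketch of how these three variables and their documented defaults could be read, assuming a hypothetical `UvicornConfig` dataclass (this is not the worker's actual configuration code):

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class UvicornConfig:
    """Hypothetical container for the WORKER_UVICORN_* settings."""

    hostname: str = "localhost"
    num_workers: int = 2
    port: int = 8000

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "UvicornConfig":
        """Read the settings from `env`, falling back to the documented defaults."""
        prefix = "WORKER_UVICORN_"
        return cls(
            hostname=env.get(prefix + "HOSTNAME", "localhost"),
            num_workers=int(env.get(prefix + "NUM_WORKERS", "2")),
            port=int(env.get(prefix + "PORT", "8000")),
        )


# Only the port is overridden here; the other fields keep their defaults.
config = UvicornConfig.from_env({"WORKER_UVICORN_PORT": "9000"})
```

Passing the environment as a mapping keeps the sketch easy to test without touching the real process environment.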

### Prometheus

- `PROMETHEUS_MULTIPROC_DIR`: the directory where the uvicorn workers share their prometheus metrics. See https://github.com/prometheus/client_python#multiprocess-mode-eg-gunicorn. Defaults to empty, in which case every uvicorn worker manages its own metrics, and the /metrics endpoint returns the metrics of a random worker.

## Worker configuration

Set environment variables to configure the worker.

- `WORKER_CONTENT_MAX_BYTES`: the maximum size in bytes of the response content computed by a worker (to prevent returning big responses in the REST API). Defaults to `10_000_000`.

- `WORKER_DIFFICULTY_MAX`: the maximum difficulty of the jobs to process. Defaults to None.

- `WORKER_DIFFICULTY_MIN`: the minimum difficulty of the jobs to process. Defaults to None.

- `WORKER_HEARTBEAT_INTERVAL_SECONDS`: the time interval between two heartbeats. Each heartbeat updates the job "last_heartbeat" field in the queue. Defaults to `60` (1 minute).

- `WORKER_JOB_TYPES_BLOCKED`: comma-separated list of job types that will not be processed, e.g. "dataset-config-names,dataset-split-names". If empty, no job type is blocked. Defaults to empty.

- `WORKER_JOB_TYPES_ONLY`: comma-separated list of the non-blocked job types to process, e.g. "dataset-config-names,dataset-split-names". If empty, the worker processes all the non-blocked jobs. Defaults to empty.

- `WORKER_KILL_LONG_JOB_INTERVAL_SECONDS`: the time interval at which the worker looks for long jobs to kill them. Defaults to `60` (1 minute).

- `WORKER_KILL_ZOMBIES_INTERVAL_SECONDS`: the time interval at which the worker looks for zombie jobs to kill them. Defaults to `600` (10 minutes).

- `WORKER_MAX_DISK_USAGE_PCT`: maximum disk usage of every storage disk in the list (in percentage) to allow a job to start. Set to `0` to disable the test. Defaults to `90`.

- `WORKER_MAX_JOB_DURATION_SECONDS`: the maximum duration allowed for a job to run. If the job runs longer, it is killed (see `WORKER_KILL_LONG_JOB_INTERVAL_SECONDS`). Defaults to `1200` (20 minutes).

- `WORKER_MAX_LOAD_PCT`: maximum load of the machine allowed to start a job, in percentage: the maximum of the 1-minute and 5-minute load averages, divided by the number of CPUs, multiplied by 100. Set to `0` to disable the test. Defaults to `70`.

- `WORKER_MAX_MEMORY_PCT`: maximum memory (RAM + SWAP) usage of the machine (in percentage) allowed to start a job. Set to `0` to disable the test. Defaults to `80`.

- `WORKER_MAX_MISSING_HEARTBEATS`: the number of heartbeats a job must have missed to be considered a zombie job. Defaults to `5`.

- `WORKER_SLEEP_SECONDS`: wait duration in seconds at each loop iteration before checking if resources are available and processing a job if any is available. Note that the loop doesn't wait just after finishing a job: the next job is immediately processed. Defaults to `15`.

- `WORKER_STORAGE_PATHS`: comma-separated list of paths to check for disk usage. Defaults to empty.
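
Two of the rules above can be sketched in a few lines: the load percentage behind `WORKER_MAX_LOAD_PCT`, and the zombie threshold implied by `WORKER_HEARTBEAT_INTERVAL_SECONDS` and `WORKER_MAX_MISSING_HEARTBEATS`. The function names are hypothetical and the exact comparisons (strict or not) are assumptions, so treat this as an illustration rather than the worker's implementation:

```python
import os
from datetime import datetime, timedelta


def load_pct(load_1m: float, load_5m: float, num_cpus: int) -> float:
    """Max of the 1m and 5m load averages, divided by the number of CPUs, times 100."""
    return max(load_1m, load_5m) / num_cpus * 100


def load_allows_job(max_load_pct: int = 70) -> bool:
    """Return True if the machine load allows starting a job (0 disables the test)."""
    if max_load_pct <= 0:
        return True
    load_1m, load_5m, _ = os.getloadavg()  # available on Unix only
    # assumption: a job may start while the load is strictly below the limit
    return load_pct(load_1m, load_5m, os.cpu_count() or 1) < max_load_pct


def is_zombie(
    last_heartbeat: datetime,
    now: datetime,
    heartbeat_interval_seconds: int = 60,
    max_missing_heartbeats: int = 5,
) -> bool:
    """A job is treated as a zombie once it has missed enough consecutive heartbeats."""
    limit = timedelta(seconds=heartbeat_interval_seconds * max_missing_heartbeats)
    # assumption: the job becomes a zombie once the silence exceeds the limit
    return now - last_heartbeat > limit
```

With the default values, a job whose last heartbeat is more than 5 x 60 = 300 seconds old would be considered a zombie in this sketch.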

Also, it's possible to force the parent directory in which the temporary files (such as the current job state file and its associated lock file) will be created by setting `TMPDIR` to a writable directory. If not set, the worker will use the default temporary directory of the system, as described in https://docs.python.org/3/library/tempfile.html#tempfile.gettempdir.
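
The effect of `TMPDIR` on Python's `tempfile` module can be checked with a short sketch. The helper name is hypothetical, and `TMPDIR` is normally set before the worker process starts; setting it from inside a running process, as done here for demonstration, additionally requires resetting the cached value in `tempfile.tempdir`:

```python
import os
import tempfile


def temp_dir_after_setting_tmpdir(path: str) -> str:
    """Set TMPDIR to `path` and return the directory `tempfile` now reports."""
    os.environ["TMPDIR"] = path
    # tempfile caches its choice in `tempfile.tempdir`; reset it so TMPDIR is read again.
    tempfile.tempdir = None
    return tempfile.gettempdir()


# Create a writable directory first, then point TMPDIR at it.
writable_dir = tempfile.mkdtemp()
reported_dir = temp_dir_after_setting_tmpdir(writable_dir)
```

If the directory is not writable, `tempfile` skips it and falls back to its other candidates, which is why the variable must point to a writable location.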

### Datasets based worker

Set environment variables to configure the datasets-based worker (`DATASETS_BASED_` prefix):

- `DATASETS_BASED_HF_DATASETS_CACHE`: directory where the `datasets` library will store the cached datasets' data. If not set, the datasets library will choose the default location. Defaults to None.

Also, set the modules cache configuration for the datasets-based worker. See [../../libs/libcommon/README.md](../../libs/libcommon/README.md). Note that this variable has no `DATASETS_BASED_` prefix:

- `HF_MODULES_CACHE`: directory where the `datasets` library will store the cached dataset scripts. If not set, the datasets library will choose the default location. Defaults to None.

Note that both directories will be appended to `WORKER_STORAGE_PATHS` (see [../../libs/libcommon/README.md](../../libs/libcommon/README.md)) to hold the workers when the disk is full.
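
The disk check that `WORKER_MAX_DISK_USAGE_PCT` applies to every path of `WORKER_STORAGE_PATHS` could look roughly like the following sketch. The function names are hypothetical, and the choice to block a job when usage is at or above the limit is an assumption:

```python
import shutil
from typing import Iterable


def disk_usage_pct(used: int, total: int) -> float:
    """Disk usage as a percentage of the total capacity."""
    return 100 * used / total


def disk_allows_job(storage_paths: Iterable[str], max_disk_usage_pct: int = 90) -> bool:
    """Return True if every storage path is below the limit (0 disables the test)."""
    if max_disk_usage_pct <= 0:
        return True
    for path in storage_paths:
        usage = shutil.disk_usage(path)
        # assumption: a single disk at or above the limit is enough to hold the worker
        if disk_usage_pct(usage.used, usage.total) >= max_disk_usage_pct:
            return False
    return True
```

Because the cache directories are appended to the list of paths, a full cache disk holds the worker in the same way as any other storage path.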

### Numba library

Numba requires setting the `NUMBA_CACHE_DIR` environment variable to a writable directory to cache the compiled functions. This is required on cloud infrastructure (see https://stackoverflow.com/a/63367171/7351594):

- `NUMBA_CACHE_DIR`: directory where the `numba` decorators (used by `librosa`) can write cache.

Note that this directory will be appended to `WORKER_STORAGE_PATHS` (see [../../libs/libcommon/README.md](../../libs/libcommon/README.md)) to hold the workers when the disk is full.

### Huggingface_hub library

If the Hub is not https://huggingface.co (i.e., if you set the `COMMON_HF_ENDPOINT` environment variable), you must set the `HF_ENDPOINT` environment variable to the same value. See https://github.com/huggingface/datasets/pull/5196#issuecomment-1322191411 for more details:

- `HF_ENDPOINT`: the URL of the Hub. Defaults to `https://huggingface.co`.

### First rows worker

Set environment variables to configure the `first-rows` worker (`FIRST_ROWS_` prefix):

- `FIRST_ROWS_MAX_BYTES`: the max size of the /first-rows response in bytes. Defaults to `1_000_000` (1 MB).

- `FIRST_ROWS_MAX_NUMBER`: the max number of rows fetched by the worker for the split and provided in the /first-rows response. Defaults to `100`.

- `FIRST_ROWS_MIN_CELL_BYTES`: the minimum size in bytes of a cell when truncating the content of a row (see `FIRST_ROWS_ROWS_MAX_BYTES`). Below this limit, the cell content will not be truncated. Defaults to `100`.

- `FIRST_ROWS_MIN_NUMBER`: the min number of rows fetched by the worker for the split and provided in the /first-rows response. Defaults to `10`.