text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

Wide ResNet

**Wide Residual Networks** are a variant on [ResNets](https://paperswithcode.com/method/resnet) where we decrease depth and increase the width of residual networks. This is achieved through the use of [wide residual blocks](https://paperswithcode.com/method/wide-residual-block).

## How do I use this model on an image?

To load a pretrained model:

```python

import timm

model = timm.create_model('wide_resnet101_2', pretrained=True)

model.eval()

```

To load and preprocess the image:

```python

import urllib

from PIL import Image

from timm.data import resolve_data_config

from timm.data.transforms_factory import create_transform

config = resolve_data_config({}, model=model)

transform = create_transform(**config)

url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

urllib.request.urlretrieve(url, filename)

img = Image.open(filename).convert('RGB')

tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```python

import torch

with torch.no_grad():

out = model(tensor)

probabilities = torch.nn.functional.softmax(out[0], dim=0)

print(probabilities.shape)

# prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```python

# Get imagenet class mappings

url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

urllib.request.urlretrieve(url, filename)

with open("imagenet_classes.txt", "r") as f:

categories = [s.strip() for s in f.readlines()]

# Print top categories per image

top5_prob, top5_catid = torch.topk(probabilities, 5)

for i in range(top5_prob.size(0)):

print(categories[top5_catid[i]], top5_prob[i].item())

# prints class names and probabilities like:

# [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `wide_resnet101_2`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](https://rwightman.github.io/pytorch-image-models/feature_extraction/), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```python

model = timm.create_model('wide_resnet101_2', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training

script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](https://rwightman.github.io/pytorch-image-models/scripts/) for training a new model afresh.

## Citation

```BibTeX

@article{DBLP:journals/corr/ZagoruykoK16,

author = {Sergey Zagoruyko and

Nikos Komodakis},

title = {Wide Residual Networks},

journal = {CoRR},

volume = {abs/1605.07146},

year = {2016},

url = {http://arxiv.org/abs/1605.07146},

archivePrefix = {arXiv},

eprint = {1605.07146},

timestamp = {Mon, 13 Aug 2018 16:46:42 +0200},

biburl = {https://dblp.org/rec/journals/corr/ZagoruykoK16.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

<!--

Type: model-index

Collections:

- Name: Wide ResNet

Paper:

Title: Wide Residual Networks

URL: https://paperswithcode.com/paper/wide-residual-networks

Models:

- Name: wide_resnet101_2

In Collection: Wide ResNet

Metadata:

FLOPs: 29304929280

Parameters: 126890000

File Size: 254695146

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Connection

- Softmax

- Wide Residual Block

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: wide_resnet101_2

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/5f9aff395c224492e9e44248b15f44b5cc095d9c/timm/models/resnet.py#L802

Weights: https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 78.85%

Top 5 Accuracy: 94.28%

- Name: wide_resnet50_2

In Collection: Wide ResNet

Metadata:

FLOPs: 14688058368

Parameters: 68880000

File Size: 275853271

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Connection

- Softmax

- Wide Residual Block

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: wide_resnet50_2

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/5f9aff395c224492e9e44248b15f44b5cc095d9c/timm/models/resnet.py#L790

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/wide_resnet50_racm-8234f177.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 81.45%

Top 5 Accuracy: 95.52%

--> | huggingface/pytorch-image-models/blob/main/docs/models/wide-resnet.md |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Persimmon

## Overview

The Persimmon model was created by [ADEPT](https://www.adept.ai/blog/persimmon-8b), and authored by Erich Elsen, Augustus Odena, Maxwell Nye, Sağnak Taşırlar, Tri Dao, Curtis Hawthorne, Deepak Moparthi, Arushi Somani.

The authors introduced Persimmon-8B, a decoder model based on the classic transformers architecture, with query and key normalization. Persimmon-8B is a fully permissively-licensed model with approximately 8 billion parameters, released under the Apache license. Some of the key attributes of Persimmon-8B are long context size (16K), performance, and capabilities for multimodal extensions.

The authors showcase their approach to model evaluation, focusing on practical text generation, mirroring how users interact with language models. The work also includes a comparative analysis, pitting Persimmon-8B against other prominent models (MPT 7B Instruct and Llama 2 Base 7B 1-Shot), across various evaluation tasks. The results demonstrate Persimmon-8B's competitive performance, even with limited training data.

In terms of model details, the work outlines the architecture and training methodology of Persimmon-8B, providing insights into its design choices, sequence length, and dataset composition. The authors present a fast inference code that outperforms traditional implementations through operator fusion and CUDA graph utilization while maintaining code coherence. They express their anticipation of how the community will leverage this contribution to drive innovation, hinting at further upcoming releases as part of an ongoing series of developments.

This model was contributed by [ArthurZ](https://huggingface.co/ArthurZ).

The original code can be found [here](https://github.com/persimmon-ai-labs/adept-inference).

## Usage tips

<Tip warning={true}>

The `Persimmon` models were trained using `bfloat16`, but the original inference uses `float16` The checkpoints uploaded on the hub use `torch_dtype = 'float16'` which will be

used by the `AutoModel` API to cast the checkpoints from `torch.float32` to `torch.float16`.

The `dtype` of the online weights is mostly irrelevant, unless you are using `torch_dtype="auto"` when initializing a model using `model = AutoModelForCausalLM.from_pretrained("path", torch_dtype = "auto")`. The reason is that the model will first be downloaded ( using the `dtype` of the checkpoints online) then it will be cast to the default `dtype` of `torch` (becomes `torch.float32`). Users should specify the `torch_dtype` they want, and if they don't it will be `torch.float32`.

Finetuning the model in `float16` is not recommended and known to produce `nan`, as such the model should be fine-tuned in `bfloat16`.

</Tip>

Tips:

- To convert the model, you need to clone the original repository using `git clone https://github.com/persimmon-ai-labs/adept-inference`, then get the checkpoints:

```bash

git clone https://github.com/persimmon-ai-labs/adept-inference

wget https://axtkn4xl5cip.objectstorage.us-phoenix-1.oci.customer-oci.com/n/axtkn4xl5cip/b/adept-public-data/o/8b_base_model_release.tar

tar -xvf 8b_base_model_release.tar

python src/transformers/models/persimmon/convert_persimmon_weights_to_hf.py --input_dir /path/to/downloaded/persimmon/weights/ --output_dir /output/path \

--pt_model_path /path/to/8b_chat_model_release/iter_0001251/mp_rank_00/model_optim_rng.pt

--ada_lib_path /path/to/adept-inference

```

For the chat model:

```bash

wget https://axtkn4xl5cip.objectstorage.us-phoenix-1.oci.customer-oci.com/n/axtkn4xl5cip/b/adept-public-data/o/8b_chat_model_release.tar

tar -xvf 8b_base_model_release.tar

```

Thereafter, models can be loaded via:

```py

from transformers import PersimmonForCausalLM, PersimmonTokenizer

model = PersimmonForCausalLM.from_pretrained("/output/path")

tokenizer = PersimmonTokenizer.from_pretrained("/output/path")

```

- Perismmon uses a `sentencepiece` based tokenizer, with a `Unigram` model. It supports bytefallback, which is only available in `tokenizers==0.14.0` for the fast tokenizer.

The `LlamaTokenizer` is used as it is a standard wrapper around sentencepiece. The `chat` template will be updated with the templating functions in a follow up PR!

- The authors suggest to use the following prompt format for the chat mode: `f"human: {prompt}\n\nadept:"`

## PersimmonConfig

[[autodoc]] PersimmonConfig

## PersimmonModel

[[autodoc]] PersimmonModel

- forward

## PersimmonForCausalLM

[[autodoc]] PersimmonForCausalLM

- forward

## PersimmonForSequenceClassification

[[autodoc]] PersimmonForSequenceClassification

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/persimmon.md |

Gradio Demo: blocks_webcam

```

!pip install -q gradio

```

```

import numpy as np

import gradio as gr

def snap(image):

return np.flipud(image)

demo = gr.Interface(snap, gr.Image(sources=["webcam"]), "image")

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/blocks_webcam/run.ipynb |

Gradio Demo: on_listener_live

```

!pip install -q gradio

```

```

import gradio as gr

with gr.Blocks() as demo:

with gr.Row():

num1 = gr.Slider(1, 10)

num2 = gr.Slider(1, 10)

num3 = gr.Slider(1, 10)

output = gr.Number(label="Sum")

@gr.on(inputs=[num1, num2, num3], outputs=output)

def sum(a, b, c):

return a + b + c

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/on_listener_live/run.ipynb |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# ScoreSdeVeScheduler

`ScoreSdeVeScheduler` is a variance exploding stochastic differential equation (SDE) scheduler. It was introduced in the [Score-Based Generative Modeling through Stochastic Differential Equations](https://huggingface.co/papers/2011.13456) paper by Yang Song, Jascha Sohl-Dickstein, Diederik P. Kingma, Abhishek Kumar, Stefano Ermon, Ben Poole.

The abstract from the paper is:

*Creating noise from data is easy; creating data from noise is generative modeling. We present a stochastic differential equation (SDE) that smoothly transforms a complex data distribution to a known prior distribution by slowly injecting noise, and a corresponding reverse-time SDE that transforms the prior distribution back into the data distribution by slowly removing the noise. Crucially, the reverse-time SDE depends only on the time-dependent gradient field (\aka, score) of the perturbed data distribution. By leveraging advances in score-based generative modeling, we can accurately estimate these scores with neural networks, and use numerical SDE solvers to generate samples. We show that this framework encapsulates previous approaches in score-based generative modeling and diffusion probabilistic modeling, allowing for new sampling procedures and new modeling capabilities. In particular, we introduce a predictor-corrector framework to correct errors in the evolution of the discretized reverse-time SDE. We also derive an equivalent neural ODE that samples from the same distribution as the SDE, but additionally enables exact likelihood computation, and improved sampling efficiency. In addition, we provide a new way to solve inverse problems with score-based models, as demonstrated with experiments on class-conditional generation, image inpainting, and colorization. Combined with multiple architectural improvements, we achieve record-breaking performance for unconditional image generation on CIFAR-10 with an Inception score of 9.89 and FID of 2.20, a competitive likelihood of 2.99 bits/dim, and demonstrate high fidelity generation of 1024 x 1024 images for the first time from a score-based generative model.*

## ScoreSdeVeScheduler

[[autodoc]] ScoreSdeVeScheduler

## SdeVeOutput

[[autodoc]] schedulers.scheduling_sde_ve.SdeVeOutput

| huggingface/diffusers/blob/main/docs/source/en/api/schedulers/score_sde_ve.md |

What to do when you get an error[[what-to-do-when-you-get-an-error]]

<CourseFloatingBanner chapter={8}

classNames="absolute z-10 right-0 top-0"

notebooks={[

{label: "Google Colab", value: "https://colab.research.google.com/github/huggingface/notebooks/blob/master/course/en/chapter8/section2.ipynb"},

{label: "Aws Studio", value: "https://studiolab.sagemaker.aws/import/github/huggingface/notebooks/blob/master/course/en/chapter8/section2.ipynb"},

]} />

In this section we'll look at some common errors that can occur when you're trying to generate predictions from your freshly tuned Transformer model. This will prepare you for [section 4](/course/chapter8/section4), where we'll explore how to debug the training phase itself.

<Youtube id="DQ-CpJn6Rc4"/>

We've prepared a [template model repository](https://huggingface.co/lewtun/distilbert-base-uncased-finetuned-squad-d5716d28) for this section, and if you want to run the code in this chapter you'll first need to copy the model to your account on the [Hugging Face Hub](https://huggingface.co). To do so, first log in by running either the following in a Jupyter notebook:

```python

from huggingface_hub import notebook_login

notebook_login()

```

or the following in your favorite terminal:

```bash

huggingface-cli login

```

This will prompt you to enter your username and password, and will save a token under *~/.cache/huggingface/*. Once you've logged in, you can copy the template repository with the following function:

```python

from distutils.dir_util import copy_tree

from huggingface_hub import Repository, snapshot_download, create_repo, get_full_repo_name

def copy_repository_template():

# Clone the repo and extract the local path

template_repo_id = "lewtun/distilbert-base-uncased-finetuned-squad-d5716d28"

commit_hash = "be3eaffc28669d7932492681cd5f3e8905e358b4"

template_repo_dir = snapshot_download(template_repo_id, revision=commit_hash)

# Create an empty repo on the Hub

model_name = template_repo_id.split("/")[1]

create_repo(model_name, exist_ok=True)

# Clone the empty repo

new_repo_id = get_full_repo_name(model_name)

new_repo_dir = model_name

repo = Repository(local_dir=new_repo_dir, clone_from=new_repo_id)

# Copy files

copy_tree(template_repo_dir, new_repo_dir)

# Push to Hub

repo.push_to_hub()

```

Now when you call `copy_repository_template()`, it will create a copy of the template repository under your account.

## Debugging the pipeline from 🤗 Transformers[[debugging-the-pipeline-from-transformers]]

To kick off our journey into the wonderful world of debugging Transformer models, consider the following scenario: you're working with a colleague on a question answering project to help the customers of an e-commerce website find answers about consumer products. Your colleague shoots you a message like:

> G'day! I just ran an experiment using the techniques in [Chapter 7](/course/chapter7/7) of the Hugging Face course and got some great results on SQuAD! I think we can use this model as a starting point for our project. The model ID on the Hub is "lewtun/distillbert-base-uncased-finetuned-squad-d5716d28". Feel free to test it out :)

and the first thing you think of is to load the model using the `pipeline` from 🤗 Transformers:

```python

from transformers import pipeline

model_checkpoint = get_full_repo_name("distillbert-base-uncased-finetuned-squad-d5716d28")

reader = pipeline("question-answering", model=model_checkpoint)

```

```python out

"""

OSError: Can't load config for 'lewtun/distillbert-base-uncased-finetuned-squad-d5716d28'. Make sure that:

- 'lewtun/distillbert-base-uncased-finetuned-squad-d5716d28' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'lewtun/distillbert-base-uncased-finetuned-squad-d5716d28' is the correct path to a directory containing a config.json file

"""

```

Oh no, something seems to have gone wrong! If you're new to programming, these kind of errors can seem a bit cryptic at first (what even is an `OSError`?!). The error displayed here is just the last part of a much larger error report called a _Python traceback_ (aka stack trace). For example, if you're running this code on Google Colab, you should see something like the following screenshot:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/documentation-images/resolve/main/en/chapter8/traceback.png" alt="A Python traceback." width="100%"/>

</div>

There's a lot of information contained in these reports, so let's walk through the key parts together. The first thing to note is that tracebacks should be read _from bottom to top_. This might sound weird if you're used to reading English text from top to bottom, but it reflects the fact that the traceback shows the sequence of function calls that the `pipeline` makes when downloading the model and tokenizer. (Check out [Chapter 2](/course/chapter2) for more details on how the `pipeline` works under the hood.)

<Tip>

🚨 See that blue box around "6 frames" in the traceback from Google Colab? That's a special feature of Colab, which compresses the traceback into "frames." If you can't seem to find the source of an error, make sure you expand the full traceback by clicking on those two little arrows.

</Tip>

This means that the last line of the traceback indicates the last error message and gives the name of the exception that was raised. In this case, the exception type is `OSError`, which indicates a system-related error. If we read the accompanying error message, we can see that there seems to be a problem with the model's *config.json* file, and we're given two suggestions to fix it:

```python out

"""

Make sure that:

- 'lewtun/distillbert-base-uncased-finetuned-squad-d5716d28' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'lewtun/distillbert-base-uncased-finetuned-squad-d5716d28' is the correct path to a directory containing a config.json file

"""

```

<Tip>

💡 If you encounter an error message that is difficult to understand, just copy and paste the message into the Google or [Stack Overflow](https://stackoverflow.com/) search bar (yes, really!). There's a good chance that you're not the first person to encounter the error, and this is a good way to find solutions that others in the community have posted. For example, searching for `OSError: Can't load config for` on Stack Overflow gives several [hits](https://stackoverflow.com/search?q=OSError%3A+Can%27t+load+config+for+) that could be used as a starting point for solving the problem.

</Tip>

The first suggestion is asking us to check whether the model ID is actually correct, so the first order of business is to copy the identifier and paste it into the Hub's search bar:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/documentation-images/resolve/main/en/chapter8/wrong-model-id.png" alt="The wrong model name." width="100%"/>

</div>

Hmm, it indeed looks like our colleague's model is not on the Hub... aha, but there's a typo in the name of the model! DistilBERT only has one "l" in its name, so let's fix that and look for "lewtun/distilbert-base-uncased-finetuned-squad-d5716d28" instead:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/documentation-images/resolve/main/en/chapter8/true-model-id.png" alt="The right model name." width="100%"/>

</div>

Okay, this got a hit. Now let's try to download the model again with the correct model ID:

```python

model_checkpoint = get_full_repo_name("distilbert-base-uncased-finetuned-squad-d5716d28")

reader = pipeline("question-answering", model=model_checkpoint)

```

```python out

"""

OSError: Can't load config for 'lewtun/distilbert-base-uncased-finetuned-squad-d5716d28'. Make sure that:

- 'lewtun/distilbert-base-uncased-finetuned-squad-d5716d28' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'lewtun/distilbert-base-uncased-finetuned-squad-d5716d28' is the correct path to a directory containing a config.json file

"""

```

Argh, foiled again -- welcome to the daily life of a machine learning engineer! Since we've fixed the model ID, the problem must lie in the repository itself. A quick way to access the contents of a repository on the 🤗 Hub is via the `list_repo_files()` function of the `huggingface_hub` library:

```python

from huggingface_hub import list_repo_files

list_repo_files(repo_id=model_checkpoint)

```

```python out

['.gitattributes', 'README.md', 'pytorch_model.bin', 'special_tokens_map.json', 'tokenizer_config.json', 'training_args.bin', 'vocab.txt']

```

Interesting -- there doesn't seem to be a *config.json* file in the repository! No wonder our `pipeline` couldn't load the model; our colleague must have forgotten to push this file to the Hub after they fine-tuned it. In this case, the problem seems pretty straightforward to fix: we could ask them to add the file, or, since we can see from the model ID that the pretrained model used was [`distilbert-base-uncased`](https://huggingface.co/distilbert-base-uncased), we can download the config for this model and push it to our repo to see if that resolves the problem. Let's try that. Using the techniques we learned in [Chapter 2](/course/chapter2), we can download the model's configuration with the `AutoConfig` class:

```python

from transformers import AutoConfig

pretrained_checkpoint = "distilbert-base-uncased"

config = AutoConfig.from_pretrained(pretrained_checkpoint)

```

<Tip warning={true}>

🚨 The approach we're taking here is not foolproof, since our colleague may have tweaked the configuration of `distilbert-base-uncased` before fine-tuning the model. In real life, we'd want to check with them first, but for the purposes of this section we'll assume they used the default configuration.

</Tip>

We can then push this to our model repository with the configuration's `push_to_hub()` function:

```python

config.push_to_hub(model_checkpoint, commit_message="Add config.json")

```

Now we can test if this worked by loading the model from the latest commit on the `main` branch:

```python

reader = pipeline("question-answering", model=model_checkpoint, revision="main")

context = r"""

Extractive Question Answering is the task of extracting an answer from a text

given a question. An example of a question answering dataset is the SQuAD

dataset, which is entirely based on that task. If you would like to fine-tune a

model on a SQuAD task, you may leverage the

examples/pytorch/question-answering/run_squad.py script.

🤗 Transformers is interoperable with the PyTorch, TensorFlow, and JAX

frameworks, so you can use your favourite tools for a wide variety of tasks!

"""

question = "What is extractive question answering?"

reader(question=question, context=context)

```

```python out

{'score': 0.38669535517692566,

'start': 34,

'end': 95,

'answer': 'the task of extracting an answer from a text given a question'}

```

Woohoo, it worked! Let's recap what you've just learned:

- The error messages in Python are known as _tracebacks_ and are read from bottom to top. The last line of the error message usually contains the information you need to locate the source of the problem.

- If the last line does not contain sufficient information, work your way up the traceback and see if you can identify where in the source code the error occurs.

- If none of the error messages can help you debug the problem, try searching online for a solution to a similar issue.

- The `huggingface_hub`

// 🤗 Hub?

library provides a suite of tools that you can use to interact with and debug repositories on the Hub.

Now that you know how to debug a pipeline, let's take a look at a trickier example in the forward pass of the model itself.

## Debugging the forward pass of your model[[debugging-the-forward-pass-of-your-model]]

Although the `pipeline` is great for most applications where you need to quickly generate predictions, sometimes you'll need to access the model's logits (say, if you have some custom post-processing that you'd like to apply). To see what can go wrong in this case, let's first grab the model and tokenizer from our `pipeline`:

```python

tokenizer = reader.tokenizer

model = reader.model

```

Next we need a question, so let's see if our favorite frameworks are supported:

```python

question = "Which frameworks can I use?"

```

As we saw in [Chapter 7](/course/chapter7), the usual steps we need to take are tokenizing the inputs, extracting the logits of the start and end tokens, and then decoding the answer span:

```python

import torch

inputs = tokenizer(question, context, add_special_tokens=True)

input_ids = inputs["input_ids"][0]

outputs = model(**inputs)

answer_start_scores = outputs.start_logits

answer_end_scores = outputs.end_logits

# Get the most likely beginning of answer with the argmax of the score

answer_start = torch.argmax(answer_start_scores)

# Get the most likely end of answer with the argmax of the score

answer_end = torch.argmax(answer_end_scores) + 1

answer = tokenizer.convert_tokens_to_string(

tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end])

)

print(f"Question: {question}")

print(f"Answer: {answer}")

```

```python out

"""

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

/var/folders/28/k4cy5q7s2hs92xq7_h89_vgm0000gn/T/ipykernel_75743/2725838073.py in <module>

1 inputs = tokenizer(question, text, add_special_tokens=True)

2 input_ids = inputs["input_ids"]

----> 3 outputs = model(**inputs)

4 answer_start_scores = outputs.start_logits

5 answer_end_scores = outputs.end_logits

~/miniconda3/envs/huggingface/lib/python3.8/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

1049 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1050 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1051 return forward_call(*input, **kwargs)

1052 # Do not call functions when jit is used

1053 full_backward_hooks, non_full_backward_hooks = [], []

~/miniconda3/envs/huggingface/lib/python3.8/site-packages/transformers/models/distilbert/modeling_distilbert.py in forward(self, input_ids, attention_mask, head_mask, inputs_embeds, start_positions, end_positions, output_attentions, output_hidden_states, return_dict)

723 return_dict = return_dict if return_dict is not None else self.config.use_return_dict

724

--> 725 distilbert_output = self.distilbert(

726 input_ids=input_ids,

727 attention_mask=attention_mask,

~/miniconda3/envs/huggingface/lib/python3.8/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

1049 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1050 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1051 return forward_call(*input, **kwargs)

1052 # Do not call functions when jit is used

1053 full_backward_hooks, non_full_backward_hooks = [], []

~/miniconda3/envs/huggingface/lib/python3.8/site-packages/transformers/models/distilbert/modeling_distilbert.py in forward(self, input_ids, attention_mask, head_mask, inputs_embeds, output_attentions, output_hidden_states, return_dict)

471 raise ValueError("You cannot specify both input_ids and inputs_embeds at the same time")

472 elif input_ids is not None:

--> 473 input_shape = input_ids.size()

474 elif inputs_embeds is not None:

475 input_shape = inputs_embeds.size()[:-1]

AttributeError: 'list' object has no attribute 'size'

"""

```

Oh dear, it looks like we have a bug in our code! But we're not afraid of a little debugging. You can use the Python debugger in a notebook:

<Youtube id="rSPyvPw0p9k"/>

or in a terminal:

<Youtube id="5PkZ4rbHL6c"/>

Here, reading the error message tells us that `'list' object has no attribute 'size'`, and we can see a `-->` arrow pointing to the line where the problem was raised in `model(**inputs)`.You can debug this interactively using the Python debugger, but for now we'll simply print out a slice of `inputs` to see what we have:

```python

inputs["input_ids"][:5]

```

```python out

[101, 2029, 7705, 2015, 2064]

```

This certainly looks like an ordinary Python `list`, but let's double-check the type:

```python

type(inputs["input_ids"])

```

```python out

list

```

Yep, that's a Python `list` for sure. So what went wrong? Recall from [Chapter 2](/course/chapter2) that the `AutoModelForXxx` classes in 🤗 Transformers operate on _tensors_ (either in PyTorch or TensorFlow), and a common operation is to extract the dimensions of a tensor using `Tensor.size()` in, say, PyTorch. Let's take another look at the traceback, to see which line triggered the exception:

```

~/miniconda3/envs/huggingface/lib/python3.8/site-packages/transformers/models/distilbert/modeling_distilbert.py in forward(self, input_ids, attention_mask, head_mask, inputs_embeds, output_attentions, output_hidden_states, return_dict)

471 raise ValueError("You cannot specify both input_ids and inputs_embeds at the same time")

472 elif input_ids is not None:

--> 473 input_shape = input_ids.size()

474 elif inputs_embeds is not None:

475 input_shape = inputs_embeds.size()[:-1]

AttributeError: 'list' object has no attribute 'size'

```

It looks like our code tried to call `input_ids.size()`, but this clearly won't work for a Python `list`, which is just a container. How can we solve this problem? Searching for the error message on Stack Overflow gives quite a few relevant [hits](https://stackoverflow.com/search?q=AttributeError%3A+%27list%27+object+has+no+attribute+%27size%27&s=c15ec54c-63cb-481d-a749-408920073e8f). Clicking on the first one displays a similar question to ours, with the answer shown in the screenshot below:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/documentation-images/resolve/main/en/chapter8/stack-overflow.png" alt="An answer from Stack Overflow." width="100%"/>

</div>

The answer recommends that we add `return_tensors='pt'` to the tokenizer, so let's see if that works for us:

```python out

inputs = tokenizer(question, context, add_special_tokens=True, return_tensors="pt")

input_ids = inputs["input_ids"][0]

outputs = model(**inputs)

answer_start_scores = outputs.start_logits

answer_end_scores = outputs.end_logits

# Get the most likely beginning of answer with the argmax of the score

answer_start = torch.argmax(answer_start_scores)

# Get the most likely end of answer with the argmax of the score

answer_end = torch.argmax(answer_end_scores) + 1

answer = tokenizer.convert_tokens_to_string(

tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end])

)

print(f"Question: {question}")

print(f"Answer: {answer}")

```

```python out

"""

Question: Which frameworks can I use?

Answer: pytorch, tensorflow, and jax

"""

```

Nice, it worked! This is a great example of how useful Stack Overflow can be: by identifying a similar problem, we were able to benefit from the experience of others in the community. However, a search like this won't always yield a relevant answer, so what can you do in such cases? Fortunately, there is a welcoming community of developers on the [Hugging Face forums](https://discuss.huggingface.co/) that can help you out! In the next section, we'll take a look at how you can craft good forum questions that are likely to get answered. | huggingface/course/blob/main/chapters/en/chapter8/2.mdx |

*TEMPLATE**

=====================================

*search & replace the following keywords, e.g.:*

`:%s/\[name of model\]/brand_new_bert/g`

-[lowercase name of model] # e.g. brand_new_bert

-[camelcase name of model] # e.g. BrandNewBert

-[name of mentor] # e.g. [Peter](https://github.com/peter)

-[link to original repo]

-[start date]

-[end date]

How to add [camelcase name of model] to 🤗 Transformers?

=====================================

Mentor: [name of mentor]

Begin: [start date]

Estimated End: [end date]

Adding a new model is often difficult and requires an in-depth knowledge

of the 🤗 Transformers library and ideally also of the model's original

repository. At Hugging Face, we are trying to empower the community more

and more to add models independently.

The following sections explain in detail how to add [camelcase name of model]

to Transformers. You will work closely with [name of mentor] to

integrate [camelcase name of model] into Transformers. By doing so, you will both gain a

theoretical and deep practical understanding of [camelcase name of model].

But more importantly, you will have made a major

open-source contribution to Transformers. Along the way, you will:

- get insights into open-source best practices

- understand the design principles of one of the most popular NLP

libraries

- learn how to do efficiently test large NLP models

- learn how to integrate Python utilities like `black`, `ruff`,

`make fix-copies` into a library to always ensure clean and readable

code

To start, let's try to get a general overview of the Transformers

library.

General overview of 🤗 Transformers

----------------------------------

First, you should get a general overview of 🤗 Transformers. Transformers

is a very opinionated library, so there is a chance that

you don't agree with some of the library's philosophies or design

choices. From our experience, however, we found that the fundamental

design choices and philosophies of the library are crucial to

efficiently scale Transformers while keeping maintenance costs at a

reasonable level.

A good first starting point to better understand the library is to read

the [documentation of our philosophy](https://huggingface.co/transformers/philosophy.html).

As a result of our way of working, there are some choices that we try to apply to all models:

- Composition is generally favored over abstraction

- Duplicating code is not always bad if it strongly improves the

readability or accessibility of a model

- Model files are as self-contained as possible so that when you read

the code of a specific model, you ideally only have to look into the

respective `modeling_....py` file.

In our opinion, the library's code is not just a means to provide a

product, *e.g.*, the ability to use BERT for inference, but also as the

very product that we want to improve. Hence, when adding a model, the

user is not only the person that will use your model, but also everybody

that will read, try to understand, and possibly tweak your code.

With this in mind, let's go a bit deeper into the general library

design.

### Overview of models

To successfully add a model, it is important to understand the

interaction between your model and its config,

`PreTrainedModel`, and `PretrainedConfig`. For

exemplary purposes, we will call the PyTorch model to be added to 🤗 Transformers

`BrandNewBert`.

Let's take a look:

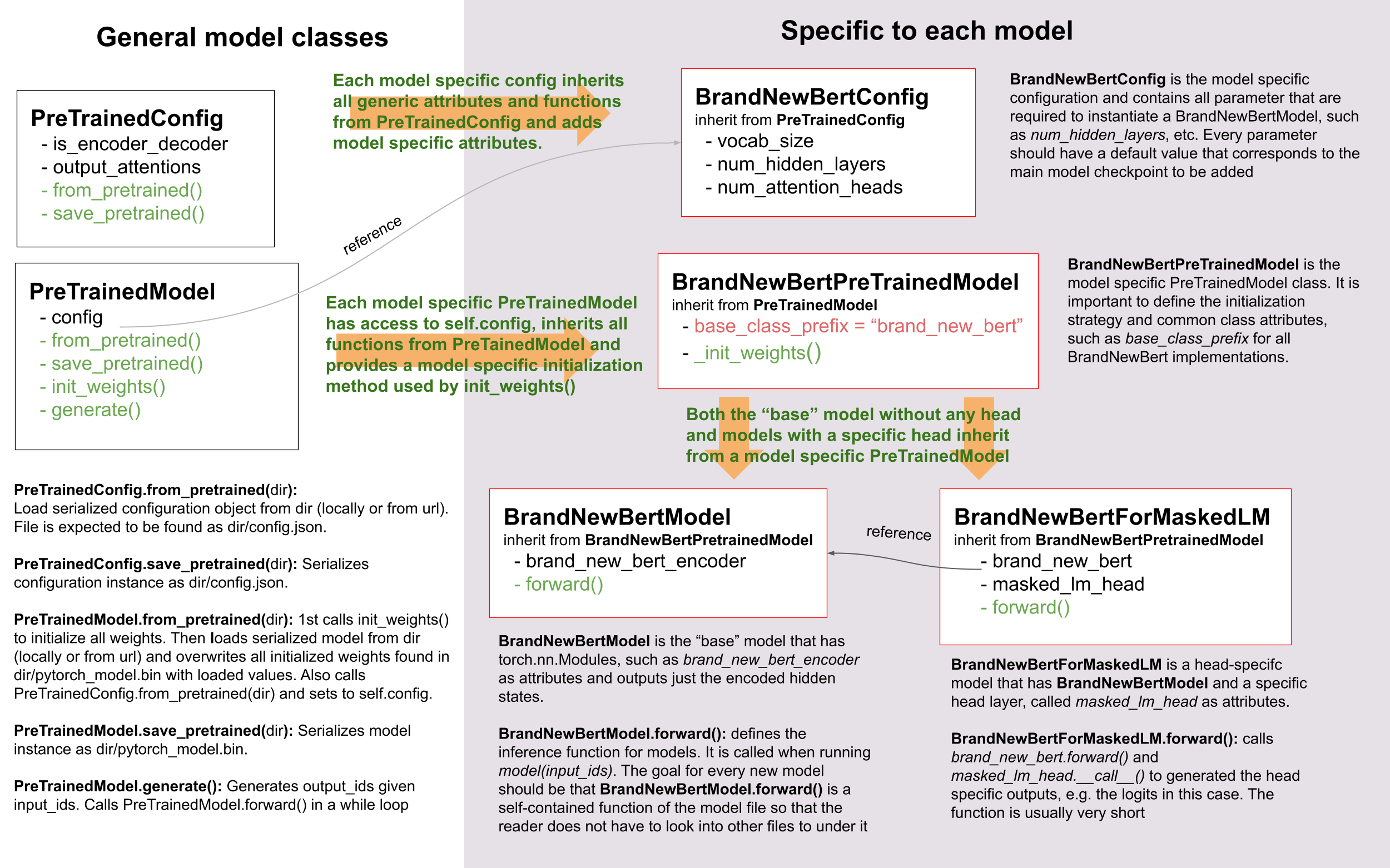

As you can see, we do make use of inheritance in 🤗 Transformers, but we

keep the level of abstraction to an absolute minimum. There are never

more than two levels of abstraction for any model in the library.

`BrandNewBertModel` inherits from

`BrandNewBertPreTrainedModel` which in

turn inherits from `PreTrainedModel` and that's it.

As a general rule, we want to make sure

that a new model only depends on `PreTrainedModel`. The

important functionalities that are automatically provided to every new

model are

`PreTrainedModel.from_pretrained` and `PreTrainedModel.save_pretrained`, which are

used for serialization and deserialization. All

of the other important functionalities, such as

`BrandNewBertModel.forward` should be

completely defined in the new `modeling_brand_new_bert.py` module. Next,

we want to make sure that a model with a specific head layer, such as

`BrandNewBertForMaskedLM` does not inherit

from `BrandNewBertModel`, but rather uses

`BrandNewBertModel` as a component that

can be called in its forward pass to keep the level of abstraction low.

Every new model requires a configuration class, called

`BrandNewBertConfig`. This configuration

is always stored as an attribute in

`PreTrainedModel`, and

thus can be accessed via the `config` attribute for all classes

inheriting from `BrandNewBertPreTrainedModel`

```python

# assuming that `brand_new_bert` belongs to the organization `brandy`

model = BrandNewBertModel.from_pretrained("brandy/brand_new_bert")

model.config # model has access to its config

```

Similar to the model, the configuration inherits basic serialization and

deserialization functionalities from

`PretrainedConfig`. Note

that the configuration and the model are always serialized into two

different formats - the model to a `pytorch_model.bin` file

and the configuration to a `config.json` file. Calling

`PreTrainedModel.save_pretrained` will automatically call

`PretrainedConfig.save_pretrained`, so that both model and configuration are saved.

### Overview of tokenizers

Not quite ready yet :-( This section will be added soon!

Step-by-step recipe to add a model to 🤗 Transformers

----------------------------------------------------

Everyone has different preferences of how to port a model so it can be

very helpful for you to take a look at summaries of how other

contributors ported models to Hugging Face. Here is a list of community

blog posts on how to port a model:

1. [Porting GPT2

Model](https://medium.com/huggingface/from-tensorflow-to-pytorch-265f40ef2a28)

by [Thomas](https://huggingface.co/thomwolf)

2. [Porting WMT19 MT Model](https://huggingface.co/blog/porting-fsmt)

by [Stas](https://huggingface.co/stas)

From experience, we can tell you that the most important things to keep

in mind when adding a model are:

- Don't reinvent the wheel! Most parts of the code you will add for

the new 🤗 Transformers model already exist somewhere in 🤗

Transformers. Take some time to find similar, already existing

models and tokenizers you can copy from.

[grep](https://www.gnu.org/software/grep/) and

[rg](https://github.com/BurntSushi/ripgrep) are your friends. Note

that it might very well happen that your model's tokenizer is based

on one model implementation, and your model's modeling code on

another one. *E.g.*, FSMT's modeling code is based on BART, while

FSMT's tokenizer code is based on XLM.

- It's more of an engineering challenge than a scientific challenge.

You should spend more time on creating an efficient debugging

environment than trying to understand all theoretical aspects of the

model in the paper.

- Ask for help when you're stuck! Models are the core component of 🤗

Transformers so we, at Hugging Face, are more than happy to help

you at every step to add your model. Don't hesitate to ask if you

notice you are not making progress.

In the following, we try to give you a general recipe that we found most

useful when porting a model to 🤗 Transformers.

The following list is a summary of everything that has to be done to add

a model and can be used by you as a To-Do List:

1. [ ] (Optional) Understood theoretical aspects

2. [ ] Prepared transformers dev environment

3. [ ] Set up debugging environment of the original repository

4. [ ] Created script that successfully runs forward pass using

original repository and checkpoint

5. [ ] Successfully opened a PR and added the model skeleton to Transformers

6. [ ] Successfully converted original checkpoint to Transformers

checkpoint

7. [ ] Successfully ran forward pass in Transformers that gives

identical output to original checkpoint

8. [ ] Finished model tests in Transformers

9. [ ] Successfully added Tokenizer in Transformers

10. [ ] Run end-to-end integration tests

11. [ ] Finished docs

12. [ ] Uploaded model weights to the hub

13. [ ] Submitted the pull request for review

14. [ ] (Optional) Added a demo notebook

To begin with, we usually recommend to start by getting a good

theoretical understanding of `[camelcase name of model]`. However, if you prefer to

understand the theoretical aspects of the model *on-the-job*, then it is

totally fine to directly dive into the `[camelcase name of model]`'s code-base. This

option might suit you better, if your engineering skills are better than

your theoretical skill, if you have trouble understanding

`[camelcase name of model]`'s paper, or if you just enjoy programming much more than

reading scientific papers.

### 1. (Optional) Theoretical aspects of [camelcase name of model]

You should take some time to read *[camelcase name of model]'s* paper, if such

descriptive work exists. There might be large sections of the paper that

are difficult to understand. If this is the case, this is fine - don't

worry! The goal is not to get a deep theoretical understanding of the

paper, but to extract the necessary information required to effectively

re-implement the model in 🤗 Transformers. That being said, you don't

have to spend too much time on the theoretical aspects, but rather focus

on the practical ones, namely:

- What type of model is *[camelcase name of model]*? BERT-like encoder-only

model? GPT2-like decoder-only model? BART-like encoder-decoder

model? Look at the `model_summary` if

you're not familiar with the differences between those.

- What are the applications of *[camelcase name of model]*? Text

classification? Text generation? Seq2Seq tasks, *e.g.,*

summarization?

- What is the novel feature of the model making it different from

BERT/GPT-2/BART?

- Which of the already existing [🤗 Transformers

models](https://huggingface.co/transformers/#contents) is most

similar to *[camelcase name of model]*?

- What type of tokenizer is used? A sentencepiece tokenizer? Word

piece tokenizer? Is it the same tokenizer as used for BERT or BART?

After you feel like you have gotten a good overview of the architecture

of the model, you might want to write to [name of mentor] with any

questions you might have. This might include questions regarding the

model's architecture, its attention layer, etc. We will be more than

happy to help you.

#### Additional resources

Before diving into the code, here are some additional resources that might be worth taking a look at:

- [link 1]

- [link 2]

- [link 3]

- ...

#### Make sure you've understood the fundamental aspects of [camelcase name of model]

Alright, now you should be ready to take a closer look into the actual code of [camelcase name of model].

You should have understood the following aspects of [camelcase name of model] by now:

- [characteristic 1 of [camelcase name of model]]

- [characteristic 2 of [camelcase name of model]]

- ...

If any of the mentioned aspects above are **not** clear to you, now is a great time to talk to [name of mentor].

### 2. Next prepare your environment

1. Fork the [repository](https://github.com/huggingface/transformers)

by clicking on the 'Fork' button on the repository's page. This

creates a copy of the code under your GitHub user account.

2. Clone your `transformers` fork to your local disk, and add the base

repository as a remote:

```bash

git clone https://github.com/[your Github handle]/transformers.git

cd transformers

git remote add upstream https://github.com/huggingface/transformers.git

```

3. Set up a development environment, for instance by running the

following command:

```bash

python -m venv .env

source .env/bin/activate

pip install -e ".[dev]"

```

and return to the parent directory

```bash

cd ..

```

4. We recommend adding the PyTorch version of *[camelcase name of model]* to

Transformers. To install PyTorch, please follow the instructions [here](https://pytorch.org/get-started/locally/).

**Note:** You don't need to have CUDA installed. Making the new model

work on CPU is sufficient.

5. To port *[camelcase name of model]*, you will also need access to its

original repository:

```bash

git clone [link to original repo].git

cd [lowercase name of model]

pip install -e .

```

Now you have set up a development environment to port *[camelcase name of model]*

to 🤗 Transformers.

### Run a pretrained checkpoint using the original repository

**3. Set up debugging environment**

At first, you will work on the original *[camelcase name of model]* repository.

Often, the original implementation is very "researchy". Meaning that

documentation might be lacking and the code can be difficult to

understand. But this should be exactly your motivation to reimplement

*[camelcase name of model]*. At Hugging Face, one of our main goals is to *make

people stand on the shoulders of giants* which translates here very well

into taking a working model and rewriting it to make it as **accessible,

user-friendly, and beautiful** as possible. This is the number-one

motivation to re-implement models into 🤗 Transformers - trying to make

complex new NLP technology accessible to **everybody**.

You should start thereby by diving into the [original repository]([link to original repo]).

Successfully running the official pretrained model in the original

repository is often **the most difficult** step. From our experience, it

is very important to spend some time getting familiar with the original

code-base. You need to figure out the following:

- Where to find the pretrained weights?

- How to load the pretrained weights into the corresponding model?

- How to run the tokenizer independently from the model?

- Trace one forward pass so that you know which classes and functions

are required for a simple forward pass. Usually, you only have to

reimplement those functions.

- Be able to locate the important components of the model: Where is

the model's class? Are there model sub-classes, *e.g.*,

EncoderModel, DecoderModel? Where is the self-attention layer? Are

there multiple different attention layers, *e.g.*, *self-attention*,

*cross-attention*...?

- How can you debug the model in the original environment of the repo?

Do you have to add `print` statements, can you work with

an interactive debugger like [ipdb](https://pypi.org/project/ipdb/), or should you use

an efficient IDE to debug the model, like PyCharm?

It is very important that before you start the porting process, that you

can **efficiently** debug code in the original repository! Also,

remember that you are working with an open-source library, so do not

hesitate to open an issue, or even a pull request in the original

repository. The maintainers of this repository are most likely very

happy about someone looking into their code!

At this point, it is really up to you which debugging environment and

strategy you prefer to use to debug the original model. We strongly

advise against setting up a costly GPU environment, but simply work on a

CPU both when starting to dive into the original repository and also

when starting to write the 🤗 Transformers implementation of the model.

Only at the very end, when the model has already been successfully

ported to 🤗 Transformers, one should verify that the model also works as

expected on GPU.

In general, there are two possible debugging environments for running

the original model

- [Jupyter notebooks](https://jupyter.org/) / [google colab](https://colab.research.google.com/notebooks/intro.ipynb)

- Local python scripts.

Jupyter notebooks have the advantage that they allow for cell-by-cell

execution which can be helpful to better split logical components from

one another and to have faster debugging cycles as intermediate results

can be stored. Also, notebooks are often easier to share with other

contributors, which might be very helpful if you want to ask the Hugging

Face team for help. If you are familiar with Jupyter notebooks, we

strongly recommend you to work with them.

The obvious disadvantage of Jupyter notebooks is that if you are not

used to working with them you will have to spend some time adjusting to

the new programming environment and that you might not be able to use

your known debugging tools anymore, like `ipdb`.

**4. Successfully run forward pass**

For each code-base, a good first step is always to load a **small**

pretrained checkpoint and to be able to reproduce a single forward pass

using a dummy integer vector of input IDs as an input. Such a script

could look like this (in pseudocode):

```python

model = [camelcase name of model]Model.load_pretrained_checkpoint("/path/to/checkpoint/")

input_ids = [0, 4, 5, 2, 3, 7, 9] # vector of input ids

original_output = model.predict(input_ids)

```

Next, regarding the debugging strategy, there are generally a few from

which to choose from:

- Decompose the original model into many small testable components and

run a forward pass on each of those for verification

- Decompose the original model only into the original *tokenizer* and

the original *model*, run a forward pass on those, and use

intermediate print statements or breakpoints for verification

Again, it is up to you which strategy to choose. Often, one or the other

is advantageous depending on the original code base.

If the original code-base allows you to decompose the model into smaller

sub-components, *e.g.*, if the original code-base can easily be run in

eager mode, it is usually worth the effort to do so. There are some

important advantages to taking the more difficult road in the beginning:

- at a later stage when comparing the original model to the Hugging

Face implementation, you can verify automatically for each component

individually that the corresponding component of the 🤗 Transformers

implementation matches instead of relying on visual comparison via

print statements

- it can give you some rope to decompose the big problem of porting a

model into smaller problems of just porting individual components

and thus structure your work better

- separating the model into logical meaningful components will help

you to get a better overview of the model's design and thus to

better understand the model

- at a later stage those component-by-component tests help you to

ensure that no regression occurs as you continue changing your code

[Lysandre's](https://gist.github.com/LysandreJik/db4c948f6b4483960de5cbac598ad4ed)

integration checks for ELECTRA gives a nice example of how this can be

done.

However, if the original code-base is very complex or only allows

intermediate components to be run in a compiled mode, it might be too

time-consuming or even impossible to separate the model into smaller

testable sub-components. A good example is [T5's

MeshTensorFlow](https://github.com/tensorflow/mesh/tree/master/mesh_tensorflow)

library which is very complex and does not offer a simple way to

decompose the model into its sub-components. For such libraries, one

often relies on verifying print statements.

No matter which strategy you choose, the recommended procedure is often

the same in that you should start to debug the starting layers first and

the ending layers last.

It is recommended that you retrieve the output, either by print

statements or sub-component functions, of the following layers in the

following order:

1. Retrieve the input IDs passed to the model

2. Retrieve the word embeddings

3. Retrieve the input of the first Transformer layer

4. Retrieve the output of the first Transformer layer

5. Retrieve the output of the following n - 1 Transformer layers

6. Retrieve the output of the whole [camelcase name of model] Model

Input IDs should thereby consists of an array of integers, *e.g.*,

`input_ids = [0, 4, 4, 3, 2, 4, 1, 7, 19]`

The outputs of the following layers often consist of multi-dimensional

float arrays and can look like this:

```bash

[[

[-0.1465, -0.6501, 0.1993, ..., 0.1451, 0.3430, 0.6024],

[-0.4417, -0.5920, 0.3450, ..., -0.3062, 0.6182, 0.7132],

[-0.5009, -0.7122, 0.4548, ..., -0.3662, 0.6091, 0.7648],

...,

[-0.5613, -0.6332, 0.4324, ..., -0.3792, 0.7372, 0.9288],

[-0.5416, -0.6345, 0.4180, ..., -0.3564, 0.6992, 0.9191],

[-0.5334, -0.6403, 0.4271, ..., -0.3339, 0.6533, 0.8694]]],

```

We expect that every model added to 🤗 Transformers passes a couple of

integration tests, meaning that the original model and the reimplemented

version in 🤗 Transformers have to give the exact same output up to a

precision of 0.001! Since it is normal that the exact same model written

in different libraries can give a slightly different output depending on

the library framework, we accept an error tolerance of 1e-3 (0.001). It

is not enough if the model gives nearly the same output, they have to be

the almost identical. Therefore, you will certainly compare the

intermediate outputs of the 🤗 Transformers version multiple times

against the intermediate outputs of the original implementation of

*[camelcase name of model]* in which case an **efficient** debugging environment

of the original repository is absolutely important. Here is some advice

to make your debugging environment as efficient as possible.

- Find the best way of debugging intermediate results. Is the original

repository written in PyTorch? Then you should probably take the

time to write a longer script that decomposes the original model

into smaller sub-components to retrieve intermediate values. Is the

original repository written in Tensorflow 1? Then you might have to

rely on TensorFlow print operations like

[tf.print](https://www.tensorflow.org/api_docs/python/tf/print) to

output intermediate values. Is the original repository written in

Jax? Then make sure that the model is **not jitted** when running

the forward pass, *e.g.*, check-out [this

link](https://github.com/google/jax/issues/196).

- Use the smallest pretrained checkpoint you can find. The smaller the

checkpoint, the faster your debug cycle becomes. It is not efficient

if your pretrained model is so big that your forward pass takes more

than 10 seconds. In case only very large checkpoints are available,

it might make more sense to create a dummy model in the new

environment with randomly initialized weights and save those weights

for comparison with the 🤗 Transformers version of your model

- Make sure you are using the easiest way of calling a forward pass in

the original repository. Ideally, you want to find the function in

the original repository that **only** calls a single forward pass,

*i.e.* that is often called `predict`, `evaluate`, `forward` or

`__call__`. You don't want to debug a function that calls `forward`

multiple times, *e.g.*, to generate text, like

`autoregressive_sample`, `generate`.

- Try to separate the tokenization from the model's

forward pass. If the original repository shows

examples where you have to input a string, then try to find out

where in the forward call the string input is changed to input ids

and start from this point. This might mean that you have to possibly

write a small script yourself or change the original code so that

you can directly input the ids instead of an input string.

- Make sure that the model in your debugging setup is **not** in

training mode, which often causes the model to yield random outputs

due to multiple dropout layers in the model. Make sure that the

forward pass in your debugging environment is **deterministic** so

that the dropout layers are not used. Or use

`transformers.utils.set_seed` if the old and new

implementations are in the same framework.

#### More details on how to create a debugging environment for [camelcase name of model]

[TODO FILL: Here the mentor should add very specific information on what the student should do]

[to set up an efficient environment for the special requirements of this model]

### Port [camelcase name of model] to 🤗 Transformers

Next, you can finally start adding new code to 🤗 Transformers. Go into

the clone of your 🤗 Transformers' fork:

cd transformers

In the special case that you are adding a model whose architecture

exactly matches the model architecture of an existing model you only

have to add a conversion script as described in [this

section](#write-a-conversion-script). In this case, you can just re-use

the whole model architecture of the already existing model.

Otherwise, let's start generating a new model with the amazing

Cookiecutter!

**Use the Cookiecutter to automatically generate the model's code**

To begin with head over to the [🤗 Transformers

templates](https://github.com/huggingface/transformers/tree/main/templates/adding_a_new_model)

to make use of our `cookiecutter` implementation to automatically

generate all the relevant files for your model. Again, we recommend only

adding the PyTorch version of the model at first. Make sure you follow

the instructions of the `README.md` on the [🤗 Transformers

templates](https://github.com/huggingface/transformers/tree/main/templates/adding_a_new_model)

carefully.

**Open a Pull Request on the main huggingface/transformers repo**

Before starting to adapt the automatically generated code, now is the

time to open a "Work in progress (WIP)" pull request, *e.g.*, "\[WIP\]

Add *[camelcase name of model]*", in 🤗 Transformers so that you and the Hugging

Face team can work side-by-side on integrating the model into 🤗

Transformers.

You should do the following:

1. Create a branch with a descriptive name from your main branch

```

git checkout -b add_[lowercase name of model]

```

2. Commit the automatically generated code:

```

git add .

git commit

```

3. Fetch and rebase to current main

```

git fetch upstream

git rebase upstream/main

```

4. Push the changes to your account using:

```

git push -u origin a-descriptive-name-for-my-changes

```

5. Once you are satisfied, go to the webpage of your fork on GitHub.

Click on "Pull request". Make sure to add the GitHub handle of

[name of mentor] as a reviewer, so that the Hugging

Face team gets notified for future changes.

6. Change the PR into a draft by clicking on "Convert to draft" on the

right of the GitHub pull request web page.

In the following, whenever you have done some progress, don't forget to

commit your work and push it to your account so that it shows in the

pull request. Additionally, you should make sure to update your work

with the current main from time to time by doing:

git fetch upstream

git merge upstream/main

In general, all questions you might have regarding the model or your

implementation should be asked in your PR and discussed/solved in the

PR. This way, [name of mentor] will always be notified when you are

committing new code or if you have a question. It is often very helpful

to point [name of mentor] to your added code so that the Hugging

Face team can efficiently understand your problem or question.

To do so, you can go to the "Files changed" tab where you see all of

your changes, go to a line regarding which you want to ask a question,

and click on the "+" symbol to add a comment. Whenever a question or

problem has been solved, you can click on the "Resolve" button of the

created comment.

In the same way, [name of mentor] will open comments when reviewing

your code. We recommend asking most questions on GitHub on your PR. For

some very general questions that are not very useful for the public,

feel free to ping [name of mentor] by Slack or email.

**5. Adapt the generated models code for [camelcase name of model]**

At first, we will focus only on the model itself and not care about the

tokenizer. All the relevant code should be found in the generated files

`src/transformers/models/[lowercase name of model]/modeling_[lowercase name of model].py` and

`src/transformers/models/[lowercase name of model]/configuration_[lowercase name of model].py`.

Now you can finally start coding :). The generated code in

`src/transformers/models/[lowercase name of model]/modeling_[lowercase name of model].py` will

either have the same architecture as BERT if it's an encoder-only model

or BART if it's an encoder-decoder model. At this point, you should

remind yourself what you've learned in the beginning about the

theoretical aspects of the model: *How is the model different from BERT

or BART?*\". Implement those changes which often means to change the

*self-attention* layer, the order of the normalization layer, etc...

Again, it is often useful to look at the similar architecture of already

existing models in Transformers to get a better feeling of how your

model should be implemented.

**Note** that at this point, you don't have to be very sure that your

code is fully correct or clean. Rather, it is advised to add a first

*unclean*, copy-pasted version of the original code to

`src/transformers/models/[lowercase name of model]/modeling_[lowercase name of model].py`

until you feel like all the necessary code is added. From our

experience, it is much more efficient to quickly add a first version of

the required code and improve/correct the code iteratively with the

conversion script as described in the next section. The only thing that

has to work at this point is that you can instantiate the 🤗 Transformers

implementation of *[camelcase name of model]*, *i.e.* the following command

should work:

```python

from transformers import [camelcase name of model]Model, [camelcase name of model]Config

model = [camelcase name of model]Model([camelcase name of model]Config())

```

The above command will create a model according to the default

parameters as defined in `[camelcase name of model]Config()` with random weights,

thus making sure that the `init()` methods of all components works.

[TODO FILL: Here the mentor should add very specific information on what exactly has to be changed for this model]

[...]

[...]

**6. Write a conversion script**

Next, you should write a conversion script that lets you convert the

checkpoint you used to debug *[camelcase name of model]* in the original

repository to a checkpoint compatible with your just created 🤗

Transformers implementation of *[camelcase name of model]*. It is not advised to

write the conversion script from scratch, but rather to look through

already existing conversion scripts in 🤗 Transformers for one that has

been used to convert a similar model that was written in the same

framework as *[camelcase name of model]*. Usually, it is enough to copy an

already existing conversion script and slightly adapt it for your use

case. Don't hesitate to ask [name of mentor] to point you to a

similar already existing conversion script for your model.

- If you are porting a model from TensorFlow to PyTorch, a good

starting point might be BERT's conversion script

[here](https://github.com/huggingface/transformers/blob/7acfa95afb8194f8f9c1f4d2c6028224dbed35a2/src/transformers/models/bert/modeling_bert.py#L91)

- If you are porting a model from PyTorch to PyTorch, a good starting

point might be BART's conversion script

[here](https://github.com/huggingface/transformers/blob/main/src/transformers/models/bart/convert_bart_original_pytorch_checkpoint_to_pytorch.py)

In the following, we'll quickly explain how PyTorch models store layer

weights and define layer names. In PyTorch, the name of a layer is

defined by the name of the class attribute you give the layer. Let's

define a dummy model in PyTorch, called `SimpleModel` as follows:

```python

from torch import nn

class SimpleModel(nn.Module):

def __init__(self):

super().__init__()

self.dense = nn.Linear(10, 10)

self.intermediate = nn.Linear(10, 10)

self.layer_norm = nn.LayerNorm(10)

```

Now we can create an instance of this model definition which will fill

all weights: `dense`, `intermediate`, `layer_norm` with random weights.

We can print the model to see its architecture

```python

model = SimpleModel()

print(model)

```

This will print out the following:

```bash

SimpleModel(

(dense): Linear(in_features=10, out_features=10, bias=True)

(intermediate): Linear(in_features=10, out_features=10, bias=True)

(layer_norm): LayerNorm((10,), eps=1e-05, elementwise_affine=True)

)

```

We can see that the layer names are defined by the name of the class

attribute in PyTorch. You can print out the weight values of a specific

layer:

```python

print(model.dense.weight.data)

```

to see that the weights were randomly initialized

```bash

tensor([[-0.0818, 0.2207, -0.0749, -0.0030, 0.0045, -0.1569, -0.1598, 0.0212,

-0.2077, 0.2157],

[ 0.1044, 0.0201, 0.0990, 0.2482, 0.3116, 0.2509, 0.2866, -0.2190,

0.2166, -0.0212],

[-0.2000, 0.1107, -0.1999, -0.3119, 0.1559, 0.0993, 0.1776, -0.1950,

-0.1023, -0.0447],

[-0.0888, -0.1092, 0.2281, 0.0336, 0.1817, -0.0115, 0.2096, 0.1415,

-0.1876, -0.2467],

[ 0.2208, -0.2352, -0.1426, -0.2636, -0.2889, -0.2061, -0.2849, -0.0465,

0.2577, 0.0402],

[ 0.1502, 0.2465, 0.2566, 0.0693, 0.2352, -0.0530, 0.1859, -0.0604,

0.2132, 0.1680],

[ 0.1733, -0.2407, -0.1721, 0.1484, 0.0358, -0.0633, -0.0721, -0.0090,

0.2707, -0.2509],

[-0.1173, 0.1561, 0.2945, 0.0595, -0.1996, 0.2988, -0.0802, 0.0407,

0.1829, -0.1568],

[-0.1164, -0.2228, -0.0403, 0.0428, 0.1339, 0.0047, 0.1967, 0.2923,

0.0333, -0.0536],

[-0.1492, -0.1616, 0.1057, 0.1950, -0.2807, -0.2710, -0.1586, 0.0739,

0.2220, 0.2358]]).

```

In the conversion script, you should fill those randomly initialized

weights with the exact weights of the corresponding layer in the

checkpoint. *E.g.*,

```python

# retrieve matching layer weights, e.g. by

# recursive algorithm

layer_name = "dense"

pretrained_weight = array_of_dense_layer

model_pointer = getattr(model, "dense")

model_pointer.weight.data = torch.from_numpy(pretrained_weight)

```

While doing so, you must verify that each randomly initialized weight of

your PyTorch model and its corresponding pretrained checkpoint weight

exactly match in both **shape and name**. To do so, it is **necessary**

to add assert statements for the shape and print out the names of the

checkpoints weights. *E.g.*, you should add statements like:

```python

assert (

model_pointer.weight.shape == pretrained_weight.shape

), f"Pointer shape of random weight {model_pointer.shape} and array shape of checkpoint weight {pretrained_weight.shape} mismatched"

```

Besides, you should also print out the names of both weights to make

sure they match, *e.g.*,

```python

logger.info(f"Initialize PyTorch weight {layer_name} from {pretrained_weight.name}")

```

If either the shape or the name doesn't match, you probably assigned

the wrong checkpoint weight to a randomly initialized layer of the 🤗

Transformers implementation.

An incorrect shape is most likely due to an incorrect setting of the

config parameters in `[camelcase name of model]Config()` that do not exactly match

those that were used for the checkpoint you want to convert. However, it

could also be that PyTorch's implementation of a layer requires the

weight to be transposed beforehand.

Finally, you should also check that **all** required weights are

initialized and print out all checkpoint weights that were not used for

initialization to make sure the model is correctly converted. It is

completely normal, that the conversion trials fail with either a wrong

shape statement or wrong name assignment. This is most likely because

either you used incorrect parameters in `[camelcase name of model]Config()`, have a

wrong architecture in the 🤗 Transformers implementation, you have a bug

in the `init()` functions of one of the components of the 🤗 Transformers

implementation or you need to transpose one of the checkpoint weights.

This step should be iterated with the previous step until all weights of

the checkpoint are correctly loaded in the Transformers model. Having

correctly loaded the checkpoint into the 🤗 Transformers implementation,

you can then save the model under a folder of your choice

`/path/to/converted/checkpoint/folder` that should then contain both a

`pytorch_model.bin` file and a `config.json` file:

```python

model.save_pretrained("/path/to/converted/checkpoint/folder")

```

[TODO FILL: Here the mentor should add very specific information on what exactly has to be done for the conversion of this model]

[...]

[...]

**7. Implement the forward pass**

Having managed to correctly load the pretrained weights into the 🤗

Transformers implementation, you should now make sure that the forward

pass is correctly implemented. In [Get familiar with the original

repository](#34-run-a-pretrained-checkpoint-using-the-original-repository),

you have already created a script that runs a forward pass of the model

using the original repository. Now you should write an analogous script

using the 🤗 Transformers implementation instead of the original one. It

should look as follows:

[TODO FILL: Here the model name might have to be adapted, *e.g.*, maybe [camelcase name of model]ForConditionalGeneration instead of [camelcase name of model]Model]

```python

model = [camelcase name of model]Model.from_pretrained("/path/to/converted/checkpoint/folder")

input_ids = [0, 4, 4, 3, 2, 4, 1, 7, 19]

output = model(input_ids).last_hidden_states

```

It is very likely that the 🤗 Transformers implementation and the

original model implementation don't give the exact same output the very

first time or that the forward pass throws an error. Don't be

disappointed - it's expected! First, you should make sure that the

forward pass doesn't throw any errors. It often happens that the wrong

dimensions are used leading to a `"Dimensionality mismatch"`

error or that the wrong data type object is used, *e.g.*, `torch.long`

instead of `torch.float32`. Don't hesitate to ask [name of mentor]

for help, if you don't manage to solve certain errors.

The final part to make sure the 🤗 Transformers implementation works

correctly is to ensure that the outputs are equivalent to a precision of

`1e-3`. First, you should ensure that the output shapes are identical,

*i.e.* `outputs.shape` should yield the same value for the script of the

🤗 Transformers implementation and the original implementation. Next, you

should make sure that the output values are identical as well. This one

of the most difficult parts of adding a new model. Common mistakes why

the outputs are not identical are:

- Some layers were not added, *i.e.* an activation layer

was not added, or the residual connection was forgotten

- The word embedding matrix was not tied

- The wrong positional embeddings are used because the original

implementation uses on offset

- Dropout is applied during the forward pass. To fix this make sure

`model.training is False` and that no dropout layer is

falsely activated during the forward pass, *i.e.* pass

`self.training` to [PyTorch's functional

dropout](https://pytorch.org/docs/stable/nn.functional.html?highlight=dropout#torch.nn.functional.dropout)

The best way to fix the problem is usually to look at the forward pass