| text | source |
|---|---|

# Data

[[autodoc]] timm.data.create_dataset

[[autodoc]] timm.data.create_loader

[[autodoc]] timm.data.create_transform

[[autodoc]] timm.data.resolve_data_config | huggingface/pytorch-image-models/blob/main/hfdocs/source/reference/data.mdx |

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# ViTMSN

## Overview

The ViTMSN model was proposed in [Masked Siamese Networks for Label-Efficient Learning](https://arxiv.org/abs/2204.07141) by Mahmoud Assran, Mathilde Caron, Ishan Misra, Piotr Bojanowski, Florian Bordes,

Pascal Vincent, Armand Joulin, Michael Rabbat, Nicolas Ballas. The paper presents a joint-embedding architecture to match the prototypes

of masked patches with those of the unmasked patches. With this setup, their method yields excellent performance in the low-shot and extreme low-shot

regimes.

The abstract from the paper is the following:

*We propose Masked Siamese Networks (MSN), a self-supervised learning framework for learning image representations. Our

approach matches the representation of an image view containing randomly masked patches to the representation of the original

unmasked image. This self-supervised pre-training strategy is particularly scalable when applied to Vision Transformers since only the

unmasked patches are processed by the network. As a result, MSNs improve the scalability of joint-embedding architectures,

while producing representations of a high semantic level that perform competitively on low-shot image classification. For instance,

on ImageNet-1K, with only 5,000 annotated images, our base MSN model achieves 72.4% top-1 accuracy,

and with 1% of ImageNet-1K labels, we achieve 75.7% top-1 accuracy, setting a new state-of-the-art for self-supervised learning on this benchmark.*

<img src="https://i.ibb.co/W6PQMdC/Screenshot-2022-09-13-at-9-08-40-AM.png" alt="drawing" width="600"/>

<small> MSN architecture. Taken from the <a href="https://arxiv.org/abs/2204.07141">original paper.</a> </small>

This model was contributed by [sayakpaul](https://huggingface.co/sayakpaul). The original code can be found [here](https://github.com/facebookresearch/msn).

## Usage tips

- MSN (masked siamese networks) is a method for self-supervised pre-training of Vision Transformers (ViTs). The pre-training objective is to match the prototypes assigned to the unmasked views of the images to those of the masked views of the same images.
- The authors have released only the pre-trained weights of the backbone (ImageNet-1k pre-training). To use them on your own image classification dataset, use the [`ViTMSNForImageClassification`] class, which is initialized from [`ViTMSNModel`]. Follow [this notebook](https://github.com/huggingface/notebooks/blob/main/examples/image_classification.ipynb) for a detailed tutorial on fine-tuning.
- MSN is particularly useful in the low-shot and extreme low-shot regimes. Notably, it achieves 75.7% top-1 accuracy with only 1% of ImageNet-1K labels when fine-tuned.
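The second tip above can be sketched as follows. This is a minimal sketch, not the authors' recipe: it assumes the `facebook/vit-msn-small` checkpoint on the Hub, and `load_msn_classifier` is a hypothetical helper name. Because only the backbone weights are released, the classification head created here is newly initialized and needs fine-tuning before its predictions are meaningful.

```python
def load_msn_classifier(num_labels, checkpoint="facebook/vit-msn-small"):
    """Load the pre-trained MSN backbone and attach a fresh classification head.

    `checkpoint` is an assumed Hub model id; swap in the one you actually use.
    """
    # Imported lazily so that defining this helper does not require the library.
    from transformers import AutoImageProcessor, ViTMSNForImageClassification

    processor = AutoImageProcessor.from_pretrained(checkpoint)
    # `num_labels` sizes the (randomly initialized) classifier on top of the backbone.
    model = ViTMSNForImageClassification.from_pretrained(checkpoint, num_labels=num_labels)
    return processor, model
```

Calling `load_msn_classifier(num_labels=10)` downloads the checkpoint and returns an image processor and a model ready for fine-tuning.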

## Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with ViT MSN.

<PipelineTag pipeline="image-classification"/>

- [`ViTMSNForImageClassification`] is supported by this [example script](https://github.com/huggingface/transformers/tree/main/examples/pytorch/image-classification) and [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/image_classification.ipynb).

- See also: [Image classification task guide](../tasks/image_classification)

If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

## ViTMSNConfig

[[autodoc]] ViTMSNConfig

## ViTMSNModel

[[autodoc]] ViTMSNModel

- forward

## ViTMSNForImageClassification

[[autodoc]] ViTMSNForImageClassification

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/vit_msn.md |

# Overview

Welcome to the 🤗 Datasets tutorials! These beginner-friendly tutorials will guide you through the fundamentals of working with 🤗 Datasets. You'll load and prepare a dataset for training with your machine learning framework of choice. Along the way, you'll learn how to load different dataset configurations and splits, interact with and see what's inside your dataset, preprocess, and share a dataset to the [Hub](https://huggingface.co/datasets).

The tutorials assume some basic knowledge of Python and a machine learning framework like PyTorch or TensorFlow. If you're already familiar with these, feel free to check out the [quickstart](./quickstart) to see what you can do with 🤗 Datasets.

<Tip>

The tutorials only cover the basic skills you need to use 🤗 Datasets. There are many other useful functionalities and applications that aren't discussed here. If you're interested in learning more, take a look at [Chapter 5](https://huggingface.co/course/chapter5/1?fw=pt) of the Hugging Face course.

</Tip>

If you have any questions about 🤗 Datasets, feel free to join and ask the community on our [forum](https://discuss.huggingface.co/c/datasets/10).

Let's get started! 🏁

| huggingface/datasets/blob/main/docs/source/tutorial.md |

---

title: MSE

emoji: 🤗

colorFrom: blue

colorTo: red

sdk: gradio

sdk_version: 3.19.1

app_file: app.py

pinned: false

tags:

- evaluate

- metric

description: >-

  Mean Squared Error (MSE) is the average of the squared differences between the predicted
  and actual values.

---

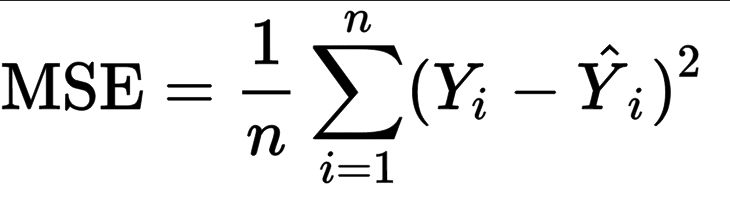

# Metric Card for MSE

## Metric Description

Mean Squared Error (MSE) is the average of the squared errors, i.e. the average squared difference between the estimated values and the actual values.

## How to Use

At minimum, this metric requires predictions and references as inputs.

```python
>>> import evaluate
>>> mse_metric = evaluate.load("mse")
>>> predictions = [2.5, 0.0, 2, 8]
>>> references = [3, -0.5, 2, 7]
>>> results = mse_metric.compute(predictions=predictions, references=references)
```

### Inputs

Mandatory inputs:

- `predictions`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the estimated target values.

- `references`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the ground truth (correct) target values.

Optional arguments:

- `sample_weight`: numeric array-like of shape (`n_samples,`) representing sample weights. The default is `None`.

- `multioutput`: `raw_values`, `uniform_average` or numeric array-like of shape (`n_outputs,`), which defines the aggregation of multiple output values. The default value is `uniform_average`.

- `raw_values` returns a full set of errors in case of multioutput input.

- `uniform_average` means that the errors of all outputs are averaged with uniform weight.

- the array-like value defines weights used to average errors.

- `squared` (`bool`): If `True` returns MSE value, if `False` returns RMSE (Root Mean Squared Error). The default value is `True`.
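To make the roles of `sample_weight` and `squared` concrete, here is a minimal pure-Python sketch for 1-D inputs. The function name `mse` is hypothetical and this is not the metric's actual implementation; it only mirrors the definitions given above.

```python
import math


def mse(predictions, references, sample_weight=None, squared=True):
    """Weighted mean of squared errors for 1-D inputs; RMSE when squared=False."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    # Without explicit weights, every sample counts equally.
    weights = sample_weight if sample_weight is not None else [1.0] * len(predictions)
    squared_errors = [(p - r) ** 2 for p, r in zip(predictions, references)]
    value = sum(w * e for w, e in zip(weights, squared_errors)) / sum(weights)
    return value if squared else math.sqrt(value)


predictions = [2.5, 0.0, 2, 8]
references = [3, -0.5, 2, 7]
print(mse(predictions, references))                 # 0.375
print(mse(predictions, references, squared=False))  # square root of 0.375
```

The squared errors are 0.25, 0.25, 0 and 1, so their mean is 1.5 / 4 = 0.375.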

### Output Values

This metric outputs a dictionary, containing the mean squared error score, which is of type:

- `float`: if multioutput is `uniform_average` or an ndarray of weights, then the weighted average of all output errors is returned.

- numeric array-like of shape (`n_outputs,`): if multioutput is `raw_values`, then the score is returned for each output separately.

Each MSE `float` value is non-negative and has no upper bound (it ranges from `0.0` to infinity), with the best value being `0.0`.

Output Example(s):

```python

{'mse': 0.5}

```

If `multioutput="raw_values"`:

```python

{'mse': array([0.41666667, 1. ])}

```
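As an illustration of how `raw_values` relates to `uniform_average`, the sketch below computes one MSE per output column in plain Python and then averages them with equal weight. The helper name `mse_per_output` is hypothetical; the metric itself may compute this differently internally.

```python
def mse_per_output(predictions, references):
    """Return one MSE per output column, in the spirit of multioutput='raw_values'."""
    # zip(*rows) transposes a list of rows into a sequence of columns.
    columns = zip(zip(*predictions), zip(*references))
    return [
        sum((p - r) ** 2 for p, r in zip(pred_col, ref_col)) / len(pred_col)
        for pred_col, ref_col in columns
    ]


predictions = [[0.5, 1], [-1, 1], [7, -6]]
references = [[0, 2], [-1, 2], [8, -5]]

raw = mse_per_output(predictions, references)
uniform = sum(raw) / len(raw)  # equal-weight average over the outputs
print(raw, uniform)
```

The first column has squared errors 0.25, 0 and 1 (mean about 0.4167), and the second has 1, 1 and 1 (mean 1.0), matching the array shown above.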

#### Values from Popular Papers

### Examples

Example with the `uniform_average` config:

```python

>>> mse_metric = evaluate.load("mse")

>>> predictions = [2.5, 0.0, 2, 8]

>>> references = [3, -0.5, 2, 7]

>>> results = mse_metric.compute(predictions=predictions, references=references)

>>> print(results)

{'mse': 0.375}

```

Example with `squared=False`, which returns the RMSE:

```python

>>> mse_metric = evaluate.load("mse")

>>> predictions = [2.5, 0.0, 2, 8]

>>> references = [3, -0.5, 2, 7]

>>> rmse_result = mse_metric.compute(predictions=predictions, references=references, squared=False)

>>> print(rmse_result)

{'mse': 0.6123724356957945}

```

Example with multi-dimensional lists, and the `raw_values` config:

```python

>>> mse_metric = evaluate.load("mse", "multilist")

>>> predictions = [[0.5, 1], [-1, 1], [7, -6]]

>>> references = [[0, 2], [-1, 2], [8, -5]]

>>> results = mse_metric.compute(predictions=predictions, references=references, multioutput='raw_values')

>>> print(results)

{'mse': array([0.41666667, 1. ])}

```

## Limitations and Bias

MSE has the disadvantage of weighting outliers heavily: because each error is squared, large errors count for much more than small ones. It can be used alongside [MAE](https://huggingface.co/metrics/mae), which is complementary given that it does not square the errors.
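A small numeric sketch of this effect, using made-up error values and two hypothetical helper functions: replacing one error of 1 with an error of 10 raises the MAE moderately but raises the MSE far more.

```python
def mean_absolute_error(errors):
    return sum(abs(e) for e in errors) / len(errors)


def mean_squared_error(errors):
    return sum(e ** 2 for e in errors) / len(errors)


typical = [1, 1, 1, 1]        # illustrative errors, all the same size
with_outlier = [1, 1, 1, 10]  # same errors, but one is an outlier

print(mean_absolute_error(typical), mean_squared_error(typical))            # 1.0 1.0
print(mean_absolute_error(with_outlier), mean_squared_error(with_outlier))  # 3.25 25.75
```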

## Citation(s)

```bibtex

@article{scikit-learn,

title={Scikit-learn: Machine Learning in {P}ython},

author={Pedregosa, F. and Varoquaux, G. and Gramfort, A. and Michel, V.

and Thirion, B. and Grisel, O. and Blondel, M. and Prettenhofer, P.

and Weiss, R. and Dubourg, V. and Vanderplas, J. and Passos, A. and

Cournapeau, D. and Brucher, M. and Perrot, M. and Duchesnay, E.},

journal={Journal of Machine Learning Research},

volume={12},

pages={2825--2830},

year={2011}

}

```

```bibtex

@article{willmott2005advantages,

title={Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance},

author={Willmott, Cort J and Matsuura, Kenji},

journal={Climate research},

volume={30},

number={1},

pages={79--82},

year={2005}

}

```

## Further References

- [Mean Squared Error - Wikipedia](https://en.wikipedia.org/wiki/Mean_squared_error)

| huggingface/evaluate/blob/main/metrics/mse/README.md |

<!-- DISABLE-FRONTMATTER-SECTIONS -->

# Tokenizers

Fast, state-of-the-art tokenizers, optimized for both research and production.

[🤗 Tokenizers](https://github.com/huggingface/tokenizers) provides an

implementation of today's most used tokenizers, with a focus on

performance and versatility. These tokenizers are also used in [🤗 Transformers](https://github.com/huggingface/transformers).

# Main features:

- Train new vocabularies and tokenize, using today's most used tokenizers.

- Extremely fast (both training and tokenization), thanks to the Rust implementation. Takes less than 20 seconds to tokenize a GB of text on a server's CPU.

- Easy to use, but also extremely versatile.

- Designed for both research and production.

- Full alignment tracking. Even with destructive normalization, it's always possible to get the part of the original sentence that corresponds to any token.

- Handles all the pre-processing: truncation, padding, and adding the special tokens your model needs.
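To illustrate what "alignment tracking" means in the list above, here is a toy sketch in plain Python. It is not the 🤗 Tokenizers API: `tokenize_with_offsets` is a hypothetical whitespace tokenizer that lowercases each token (a lossy normalization) while keeping the character span of that token in the original text.

```python
import re


def tokenize_with_offsets(text):
    """Lowercase each whitespace-separated token and remember its span in the original text."""
    return [(m.group().lower(), m.span()) for m in re.finditer(r"\S+", text)]


sentence = "Hello   WORLD, how ARE you?"
for token, (start, end) in tokenize_with_offsets(sentence):
    # The offsets index into the original string, so the untouched text is recoverable.
    print(token, "->", repr(sentence[start:end]))
```

Even though the tokens are normalized, slicing the original sentence with the stored offsets returns the exact original substring for each token.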

| huggingface/tokenizers/blob/main/docs/source-doc-builder/index.mdx |

# Gradio Demo: hello_login

```

!pip install -q gradio

```

```
import gradio as gr
import argparse
import sys

# Read an optional --name argument; unknown arguments are ignored rather than rejected.
parser = argparse.ArgumentParser()
parser.add_argument("--name", type=str, default="User")
args, unknown = parser.parse_known_args()
print(sys.argv)

with gr.Blocks() as demo:
    gr.Markdown(f"# Greetings {args.name}!")
    inp = gr.Textbox()
    out = gr.Textbox()

    # Echo the input textbox into the output textbox whenever it changes.
    inp.change(fn=lambda x: x, inputs=inp, outputs=out)

if __name__ == "__main__":
    demo.launch()
```

| gradio-app/gradio/blob/main/demo/hello_login/run.ipynb |

# Uploading datasets

The [Hub](https://huggingface.co/datasets) is home to an extensive collection of community-curated and research datasets. We encourage you to share your dataset to the Hub to help grow the ML community and accelerate progress for everyone. All contributions are welcome; adding a dataset is just a drag and drop away!

Start by [creating a Hugging Face Hub account](https://huggingface.co/join) if you don't have one yet.

## Upload using the Hub UI

The Hub's web-based interface allows users without any developer experience to upload a dataset.

### Create a repository

A repository hosts all your dataset files, including the revision history, which makes it possible to store more than one version of a dataset.

1. Click on your profile and select **New Dataset** to create a [new dataset repository](https://huggingface.co/new-dataset).

2. Pick a name for your dataset, and choose whether it is a public or private dataset. A public dataset is visible to anyone, whereas a private dataset can only be viewed by you or members of your organization.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/create_repo.png"/>

</div>

### Upload dataset

1. Once you've created a repository, navigate to the **Files and versions** tab to add a file. Select **Add file** to upload your dataset files. We support many text, audio, image and other data extensions such as `.csv`, `.mp3`, and `.jpg` (see the full list of [File formats](#file-formats)).

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/upload_files.png"/>

</div>

2. Drag and drop your dataset files.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/commit_files.png"/>

</div>

3. After uploading your dataset files, they are stored in your dataset repository.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/files_stored.png"/>

</div>

### Create a Dataset card

Adding a Dataset card is super valuable for helping users find your dataset and understand how to use it responsibly.

1. Click on **Create Dataset Card** to create a [Dataset card](./datasets-cards). This button creates a `README.md` file in your repository.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/dataset_card.png"/>

</div>

2. At the top, you'll see the **Metadata UI** with several fields to select from such as license, language, and task categories. These are the most important tags for helping users discover your dataset on the Hub (when applicable). When you select an option for a field, it will be automatically added to the top of the dataset card.

You can also look at the [Dataset Card specifications](https://github.com/huggingface/hub-docs/blob/main/datasetcard.md?plain=1), which has a complete set of allowed tags, including optional ones like `annotations_creators`, to help you choose the ones that are useful for your dataset.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/metadata_ui.png"/>

</div>

3. Write your dataset documentation in the Dataset Card to introduce your dataset to the community and help users understand what is inside: what are the use cases and limitations, where the data comes from, what are important ethical considerations, and any other relevant details.

You can click on the **Import dataset card template** link at the top of the editor to automatically create a dataset card template. For a detailed example of what a good Dataset card should look like, take a look at the [CNN DailyMail Dataset card](https://huggingface.co/datasets/cnn_dailymail).

### Dataset Viewer

The [Dataset Viewer](./datasets-viewer) lets you see what the data actually looks like before you download it.

It is enabled by default for all public datasets.

Make sure the Dataset Viewer correctly shows your data, or [Configure the Dataset Viewer](./datasets-viewer-configure).

## Using the `huggingface_hub` client library

The rich feature set of the `huggingface_hub` library allows you to manage repositories, including creating repos and uploading datasets to the Hub. Visit [the client library's documentation](https://huggingface.co/docs/huggingface_hub/index) to learn more.
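As a rough sketch of what this looks like in practice, the helper below creates a dataset repository if it does not exist and uploads a single file to it with `HfApi`. The helper name `upload_dataset_file` and its arguments are hypothetical; it assumes `huggingface_hub` is installed and that you are already authenticated (for example via a saved access token).

```python
import os


def upload_dataset_file(local_path, repo_id, path_in_repo=None):
    """Create the dataset repo if needed, then upload one local file to it."""
    # Imported lazily so that defining this helper does not require the library.
    from huggingface_hub import HfApi

    api = HfApi()
    # repo_type="dataset" targets the Datasets side of the Hub rather than models.
    api.create_repo(repo_id=repo_id, repo_type="dataset", exist_ok=True)
    return api.upload_file(
        path_or_fileobj=local_path,
        path_in_repo=path_in_repo or os.path.basename(local_path),
        repo_id=repo_id,
        repo_type="dataset",
    )
```

For example, `upload_dataset_file("data/train.csv", "your-username/your-dataset")` would place `train.csv` at the root of that repository; both names here are placeholders.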

## Using other libraries

Some libraries like [🤗 Datasets](https://huggingface.co/docs/datasets/index), [Pandas](https://pandas.pydata.org/), [Dask](https://www.dask.org/) or [DuckDB](https://duckdb.org/) can upload files to the Hub.

See the list of [Libraries supported by the Datasets Hub](./datasets-libraries) for more information.

## Using Git

Since dataset repos are Git repositories, you can use Git to push your data files to the Hub. Follow the guide on [Getting Started with Repositories](repositories-getting-started) to learn about using the `git` CLI to commit and push your datasets.

## File formats

The Hub natively supports multiple file formats:

- CSV (.csv, .tsv)

- JSON Lines, JSON (.jsonl, .json)

- Parquet (.parquet)

- Text (.txt)

- Images (.png, .jpg, etc.)

- Audio (.wav, .mp3, etc.)

It also supports files compressed using ZIP (.zip), GZIP (.gz), ZSTD (.zst), BZ2 (.bz2), LZ4 (.lz4) and LZMA (.xz).

Image and audio resources can also have additional metadata files; see the [Data files Configuration](./datasets-data-files-configuration#image-and-audio-datasets) section on image and audio datasets.

You may want to convert your files to these formats to benefit from all the Hub features.

Other formats and structures may not be recognized by the Hub.
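Before uploading, you could check local file names against the list above. The sketch below is only an illustration: `is_listed_format` is a hypothetical helper, the extension sets cover just the extensions named explicitly in this section (the "etc." entries are not expanded), and it says nothing about how the Hub itself detects formats.

```python
from pathlib import PurePosixPath

# Only the extensions spelled out in the list above.
DATA_EXTENSIONS = {".csv", ".tsv", ".jsonl", ".json", ".parquet", ".txt",
                   ".png", ".jpg", ".wav", ".mp3"}
COMPRESSION_EXTENSIONS = {".zip", ".gz", ".zst", ".bz2", ".lz4", ".xz"}


def is_listed_format(filename):
    """Return True if the file name ends in a listed data or compression extension."""
    suffixes = [s.lower() for s in PurePosixPath(filename).suffixes]
    if suffixes and suffixes[-1] in COMPRESSION_EXTENSIONS:
        suffixes = suffixes[:-1]  # drop the compression suffix, e.g. ".gz"
        if not suffixes:
            return True  # e.g. "archive.zip": compressed, inner format unknown
    return bool(suffixes) and suffixes[-1] in DATA_EXTENSIONS


for name in ["train.csv", "train.csv.gz", "archive.zip", "notes.docx"]:
    print(name, is_listed_format(name))
```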

| huggingface/hub-docs/blob/main/docs/hub/datasets-adding.md |

# Transformers, what can they do?[[transformers-what-can-they-do]]

<CourseFloatingBanner chapter={1}

classNames="absolute z-10 right-0 top-0"

notebooks={[

{label: "Google Colab", value: "https://colab.research.google.com/github/huggingface/notebooks/blob/master/course/en/chapter1/section3.ipynb"},

{label: "Aws Studio", value: "https://studiolab.sagemaker.aws/import/github/huggingface/notebooks/blob/master/course/en/chapter1/section3.ipynb"},

]} />

In this section, we will look at what Transformer models can do and use our first tool from the 🤗 Transformers library: the `pipeline()` function.

<Tip>

👀 See that <em>Open in Colab</em> button on the top right? Click on it to open a Google Colab notebook with all the code samples of this section. This button will be present in any section containing code examples.

If you want to run the examples locally, we recommend taking a look at the <a href="/course/chapter0">setup</a>.

</Tip>

## Transformers are everywhere![[transformers-are-everywhere]]

Transformer models are used to solve all kinds of NLP tasks, like the ones mentioned in the previous section. Here are some of the companies and organizations using Hugging Face and Transformer models, who also contribute back to the community by sharing their models:

<img src="https://huggingface.co/datasets/huggingface-course/documentation-images/resolve/main/en/chapter1/companies.PNG" alt="Companies using Hugging Face" width="100%">

The [🤗 Transformers library](https://github.com/huggingface/transformers) provides the functionality to create and use those shared models. The [Model Hub](https://huggingface.co/models) contains thousands of pretrained models that anyone can download and use. You can also upload your own models to the Hub!

<Tip>

⚠️ The Hugging Face Hub is not limited to Transformer models. Anyone can share any kind of models or datasets they want! <a href="https://huggingface.co/join">Create a huggingface.co</a> account to benefit from all available features!

</Tip>

Before diving into how Transformer models work under the hood, let's look at a few examples of how they can be used to solve some interesting NLP problems.

## Working with pipelines[[working-with-pipelines]]

<Youtube id="tiZFewofSLM" />

The most basic object in the 🤗 Transformers library is the `pipeline()` function. It connects a model with its necessary preprocessing and postprocessing steps, allowing us to directly input any text and get an intelligible answer:

```python

from transformers import pipeline

classifier = pipeline("sentiment-analysis")

classifier("I've been waiting for a HuggingFace course my whole life.")

```

```python out

[{'label': 'POSITIVE', 'score': 0.9598047137260437}]

```

We can even pass several sentences!

```python
classifier(
    ["I've been waiting for a HuggingFace course my whole life.", "I hate this so much!"]
)
```

```python out

[{'label': 'POSITIVE', 'score': 0.9598047137260437},

{'label': 'NEGATIVE', 'score': 0.9994558095932007}]

```

By default, this pipeline selects a particular pretrained model that has been fine-tuned for sentiment analysis in English. The model is downloaded and cached when you create the `classifier` object. If you rerun the command, the cached model will be used instead and there is no need to download the model again.

There are three main steps involved when you pass some text to a pipeline:

1. The text is preprocessed into a format the model can understand.

2. The preprocessed inputs are passed to the model.

3. The predictions of the model are post-processed, so you can make sense of them.
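The three steps above can be sketched with a toy, dependency-free pipeline. Everything here is a stand-in chosen for illustration: the word lists, the function names, and the scoring rule are assumptions, and a real pipeline uses a tokenizer and a neural network instead.

```python
import math

# Tiny hand-picked vocabularies standing in for what a real model has learned.
POSITIVE_WORDS = {"love", "great", "waiting"}
NEGATIVE_WORDS = {"hate", "awful"}


def preprocess(text):
    """Step 1: turn raw text into a format the 'model' understands (lowercase tokens)."""
    return [word.strip(".,!?").lower() for word in text.split()]


def toy_model(tokens):
    """Step 2: produce one raw score (logit) per label."""
    positive = sum(token in POSITIVE_WORDS for token in tokens)
    negative = sum(token in NEGATIVE_WORDS for token in tokens)
    return [float(negative), float(positive)]


def postprocess(logits, labels=("NEGATIVE", "POSITIVE")):
    """Step 3: convert logits to probabilities with softmax and report the best label."""
    exps = [math.exp(x) for x in logits]
    probs = [e / sum(exps) for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": labels[best], "score": probs[best]}


def toy_pipeline(text):
    return postprocess(toy_model(preprocess(text)))


print(toy_pipeline("I hate this so much!"))
```

The output has the same shape as the real pipeline's result (a `label` and a `score`), which is the point of the post-processing step.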

Some of the currently [available pipelines](https://huggingface.co/transformers/main_classes/pipelines.html) are:

- `feature-extraction` (get the vector representation of a text)

- `fill-mask`

- `ner` (named entity recognition)

- `question-answering`

- `sentiment-analysis`

- `summarization`

- `text-generation`

- `translation`

- `zero-shot-classification`

Let's have a look at a few of these!

## Zero-shot classification[[zero-shot-classification]]

We'll start by tackling a more challenging task where we need to classify texts that haven't been labelled. This is a common scenario in real-world projects because annotating text is usually time-consuming and requires domain expertise. For this use case, the `zero-shot-classification` pipeline is very powerful: it allows you to specify which labels to use for the classification, so you don't have to rely on the labels of the pretrained model. You've already seen how the model can classify a sentence as positive or negative using those two labels — but it can also classify the text using any other set of labels you like.

```python
from transformers import pipeline

classifier = pipeline("zero-shot-classification")
classifier(
    "This is a course about the Transformers library",
    candidate_labels=["education", "politics", "business"],
)
```

```python out

{'sequence': 'This is a course about the Transformers library',

'labels': ['education', 'business', 'politics'],

'scores': [0.8445963859558105, 0.111976258456707, 0.043427448719739914]}

```

This pipeline is called _zero-shot_ because you don't need to fine-tune the model on your data to use it. It can directly return probability scores for any list of labels you want!
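A short sketch of how the returned dictionary can be consumed, using the output shown above copied in as a literal. In that output the labels appear in descending order of score; the `0.5` threshold is an arbitrary value chosen for illustration.

```python
# The result printed above, copied as a plain dictionary.
result = {
    "sequence": "This is a course about the Transformers library",
    "labels": ["education", "business", "politics"],
    "scores": [0.8445963859558105, 0.111976258456707, 0.043427448719739914],
}

# The labels are listed from highest to lowest score, so the first entry is the top prediction.
top_label, top_score = result["labels"][0], result["scores"][0]

# Pair every label with its score, e.g. to keep only confident predictions.
confident = [
    label for label, score in zip(result["labels"], result["scores"]) if score >= 0.5
]

print(top_label, round(top_score, 3))
print(confident)
```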

<Tip>

✏️ **Try it out!** Play around with your own sequences and labels and see how the model behaves.

</Tip>

## Text generation[[text-generation]]

Now let's see how to use a pipeline to generate some text. The main idea here is that you provide a prompt and the model will auto-complete it by generating the remaining text. This is similar to the predictive text feature that is found on many phones. Text generation involves randomness, so it's normal if you don't get the same results as shown below.

```python

from transformers import pipeline

generator = pipeline("text-generation")

generator("In this course, we will teach you how to")

```

```python out

[{'generated_text': 'In this course, we will teach you how to understand and use '

'data flow and data interchange when handling user data. We '

'will be working with one or more of the most commonly used '

'data flows — data flows of various types, as seen by the '

'HTTP'}]

```

You can control how many different sequences are generated with the argument `num_return_sequences` and the total length of the output text with the argument `max_length`.

<Tip>

✏️ **Try it out!** Use the `num_return_sequences` and `max_length` arguments to generate two sentences of 15 words each.

</Tip>

## Using any model from the Hub in a pipeline[[using-any-model-from-the-hub-in-a-pipeline]]

The previous examples used the default model for the task at hand, but you can also choose a particular model from the Hub to use in a pipeline for a specific task — say, text generation. Go to the [Model Hub](https://huggingface.co/models) and click on the corresponding tag on the left to display only the supported models for that task. You should get to a page like [this one](https://huggingface.co/models?pipeline_tag=text-generation).

Let's try the [`distilgpt2`](https://huggingface.co/distilgpt2) model! Here's how to load it in the same pipeline as before:

```python
from transformers import pipeline

generator = pipeline("text-generation", model="distilgpt2")
generator(
    "In this course, we will teach you how to",
    max_length=30,
    num_return_sequences=2,
)
```

```python out

[{'generated_text': 'In this course, we will teach you how to manipulate the world and '

'move your mental and physical capabilities to your advantage.'},

{'generated_text': 'In this course, we will teach you how to become an expert and '

'practice realtime, and with a hands on experience on both real '

'time and real'}]

```

You can refine your search for a model by clicking on the language tags, and pick a model that will generate text in another language. The Model Hub even contains checkpoints for multilingual models that support several languages.

Once you select a model by clicking on it, you'll see that there is a widget enabling you to try it directly online. This way you can quickly test the model's capabilities before downloading it.

<Tip>

✏️ **Try it out!** Use the filters to find a text generation model for another language. Feel free to play with the widget and use it in a pipeline!

</Tip>

### The Inference API[[the-inference-api]]

All the models can be tested directly through your browser using the Inference API, which is available on the Hugging Face [website](https://huggingface.co/). You can play with the model directly on this page by inputting custom text and watching the model process the input data.

The Inference API that powers the widget is also available as a paid product, which comes in handy if you need it for your workflows. See the [pricing page](https://huggingface.co/pricing) for more details.

## Mask filling[[mask-filling]]

The next pipeline you'll try is `fill-mask`. The idea of this task is to fill in the blanks in a given text:

```python

from transformers import pipeline

unmasker = pipeline("fill-mask")

unmasker("This course will teach you all about <mask> models.", top_k=2)

```

```python out

[{'sequence': 'This course will teach you all about mathematical models.',

'score': 0.19619831442832947,

'token': 30412,

'token_str': ' mathematical'},

{'sequence': 'This course will teach you all about computational models.',

'score': 0.04052725434303284,

'token': 38163,

'token_str': ' computational'}]

```

The `top_k` argument controls how many possibilities you want to be displayed. Note that here the model fills in the special `<mask>` word, which is often referred to as a *mask token*. Other mask-filling models might have different mask tokens, so it's always good to verify the proper mask word when exploring other models. One way to check it is by looking at the mask word used in the widget.
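Since the mask token differs between models, one option is to keep the prompt as a template and fill in the token at run time. The sketch below uses a hypothetical helper, `build_masked_prompt`, and a `{mask}` placeholder of our own choosing; the commented line shows how the token could be read from a loaded pipeline's tokenizer instead of being hard-coded.

```python
def build_masked_prompt(template, mask_token):
    """Fill the {mask} placeholder with the mask token of the model in use."""
    return template.format(mask=mask_token)


template = "This course will teach you all about {mask} models."
print(build_masked_prompt(template, "<mask>"))

# With a loaded fill-mask pipeline named `unmasker`, the token can be looked up:
#   prompt = build_masked_prompt(template, unmasker.tokenizer.mask_token)
#   unmasker(prompt, top_k=2)
```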

<Tip>

✏️ **Try it out!** Search for the `bert-base-cased` model on the Hub and identify its mask word in the Inference API widget. What does this model predict for the sentence in our `pipeline` example above?

</Tip>

## Named entity recognition[[named-entity-recognition]]

Named entity recognition (NER) is a task where the model has to find which parts of the input text correspond to entities such as persons, locations, or organizations. Let's look at an example:

```python

from transformers import pipeline

ner = pipeline("ner", grouped_entities=True)

ner("My name is Sylvain and I work at Hugging Face in Brooklyn.")

```

```python out

[{'entity_group': 'PER', 'score': 0.99816, 'word': 'Sylvain', 'start': 11, 'end': 18},

{'entity_group': 'ORG', 'score': 0.97960, 'word': 'Hugging Face', 'start': 33, 'end': 45},

{'entity_group': 'LOC', 'score': 0.99321, 'word': 'Brooklyn', 'start': 49, 'end': 57}

]

```

Here the model correctly identified that Sylvain is a person (PER), Hugging Face an organization (ORG), and Brooklyn a location (LOC).

We pass the option `grouped_entities=True` in the pipeline creation function to tell the pipeline to regroup together the parts of the sentence that correspond to the same entity: here the model correctly grouped "Hugging" and "Face" as a single organization, even though the name consists of multiple words. In fact, as we will see in the next chapter, the preprocessing even splits some words into smaller parts. For instance, `Sylvain` is split into four pieces: `S`, `##yl`, `##va`, and `##in`. In the post-processing step, the pipeline successfully regrouped those pieces.
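The regrouping of word pieces described above can be sketched in a few lines. This is a simplified illustration with a hypothetical helper name: it only handles the `##` continuation convention shown in the example, whereas the real pipeline also uses the predicted entity tags and character offsets.

```python
def merge_wordpieces(pieces):
    """Join WordPiece-style pieces: a leading '##' marks a continuation of the previous word."""
    words = []
    for piece in pieces:
        if piece.startswith("##") and words:
            words[-1] += piece[2:]  # glue the continuation onto the previous word
        else:
            words.append(piece)     # start a new word
    return words


print(merge_wordpieces(["S", "##yl", "##va", "##in"]))  # ['Sylvain']
```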

<Tip>

✏️ **Try it out!** Search the Model Hub for a model able to do part-of-speech tagging (usually abbreviated as POS) in English. What does this model predict for the sentence in the example above?

</Tip>

## Question answering[[question-answering]]

The `question-answering` pipeline answers questions using information from a given context:

```python
from transformers import pipeline

question_answerer = pipeline("question-answering")
question_answerer(
    question="Where do I work?",
    context="My name is Sylvain and I work at Hugging Face in Brooklyn",
)
```

```python out

{'score': 0.6385916471481323, 'start': 33, 'end': 45, 'answer': 'Hugging Face'}

```

Note that this pipeline works by extracting information from the provided context; it does not generate the answer.
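One way to see that the answer is extracted rather than generated: the `start` and `end` values in the output above are character offsets into the context, so slicing the context with them reproduces the answer exactly. The dictionary below is the output shown above, copied as a literal.

```python
context = "My name is Sylvain and I work at Hugging Face in Brooklyn"
result = {"score": 0.6385916471481323, "start": 33, "end": 45, "answer": "Hugging Face"}

# The answer is a substring of the context, located by its character offsets.
span = context[result["start"]:result["end"]]
print(span)  # Hugging Face
```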

## Summarization[[summarization]]

Summarization is the task of reducing a text into a shorter text while keeping all (or most) of the important aspects referenced in the text. Here's an example:

```python
from transformers import pipeline

summarizer = pipeline("summarization")
summarizer(
    """
    America has changed dramatically during recent years. Not only has the number of
    graduates in traditional engineering disciplines such as mechanical, civil,
    electrical, chemical, and aeronautical engineering declined, but in most of
    the premier American universities engineering curricula now concentrate on
    and encourage largely the study of engineering science. As a result, there
    are declining offerings in engineering subjects dealing with infrastructure,
    the environment, and related issues, and greater concentration on high
    technology subjects, largely supporting increasingly complex scientific
    developments. While the latter is important, it should not be at the expense
    of more traditional engineering.

    Rapidly developing economies such as China and India, as well as other
    industrial countries in Europe and Asia, continue to encourage and advance
    the teaching of engineering. Both China and India, respectively, graduate
    six and eight times as many traditional engineers as does the United States.
    Other industrial countries at minimum maintain their output, while America
    suffers an increasingly serious decline in the number of engineering graduates
    and a lack of well-educated engineers.
"""
)
```

```python out

[{'summary_text': ' America has changed dramatically during recent years . The '

'number of engineering graduates in the U.S. has declined in '

'traditional engineering disciplines such as mechanical, civil '

', electrical, chemical, and aeronautical engineering . Rapidly '

'developing economies such as China and India, as well as other '

'industrial countries in Europe and Asia, continue to encourage '

'and advance engineering .'}]

```

Like with text generation, you can specify a `max_length` or a `min_length` for the result.

## Translation[[translation]]

For translation, you can use a default model if you provide a language pair in the task name (such as `"translation_en_to_fr"`), but the easiest way is to pick the model you want to use on the [Model Hub](https://huggingface.co/models). Here we'll try translating from French to English:

```python

from transformers import pipeline

translator = pipeline("translation", model="Helsinki-NLP/opus-mt-fr-en")

translator("Ce cours est produit par Hugging Face.")

```

```python out

[{'translation_text': 'This course is produced by Hugging Face.'}]

```

Like with text generation and summarization, you can specify a `max_length` or a `min_length` for the result.

<Tip>

✏️ **Try it out!** Search for translation models in other languages and try to translate the previous sentence into a few different languages.

</Tip>

The pipelines shown so far are mostly for demonstrative purposes. They were programmed for specific tasks and cannot perform variations of them. In the next chapter, you'll learn what's inside a `pipeline()` function and how to customize its behavior.

| huggingface/course/blob/main/chapters/en/chapter1/3.mdx |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Pyramid Vision Transformer (PVT)

## Overview

The PVT model was proposed in

[Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions](https://arxiv.org/abs/2102.12122)

by Wenhai Wang, Enze Xie, Xiang Li, Deng-Ping Fan, Kaitao Song, Ding Liang, Tong Lu, Ping Luo, Ling Shao. The PVT is a type of

vision transformer that utilizes a pyramid structure to make it an effective backbone for dense prediction tasks. Specifically,

it allows for more fine-grained inputs (4 x 4 pixels per patch) to be used, while simultaneously shrinking the sequence length

of the Transformer as it deepens, which reduces the computational cost. Additionally, a spatial-reduction attention (SRA) layer

is used to further reduce the resource consumption when learning high-resolution features.
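
The effect of the pyramid and of SRA on sequence length can be sketched with a little arithmetic. The per-stage strides (4, 8, 16, 32) and SRA reduction ratios (8, 4, 2, 1) below are assumptions taken from the paper's default setup, not values read from `PvtConfig`, and `pyramid_token_counts` is a hypothetical helper:

```python
def pyramid_token_counts(image_size, strides, sr_ratios):
    """Return (query_tokens, key_value_tokens) for each pyramid stage."""
    counts = []
    for stride, ratio in zip(strides, sr_ratios):
        side = image_size // stride          # feature-map side length at this stage
        queries = side * side                # one token per spatial position
        keys = (side // ratio) ** 2          # SRA shrinks keys/values by ratio**2
        counts.append((queries, keys))
    return counts


# Assumed defaults: strides and reduction ratios as described in the paper.
for stage, (q, kv) in enumerate(
    pyramid_token_counts(224, strides=[4, 8, 16, 32], sr_ratios=[8, 4, 2, 1]), start=1
):
    print(f"stage {stage}: {q} query tokens, {kv} key/value tokens")
```

Under these assumptions, a 224 x 224 input starts at 3136 tokens and ends at 49, while the number of key/value tokens seen by attention stays at 49 in every stage.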

The abstract from the paper is the following:

*Although convolutional neural networks (CNNs) have achieved great success in computer vision, this work investigates a

simpler, convolution-free backbone network useful for many dense prediction tasks. Unlike the recently proposed Vision

Transformer (ViT) that was designed for image classification specifically, we introduce the Pyramid Vision Transformer

(PVT), which overcomes the difficulties of porting Transformer to various dense prediction tasks. PVT has several

merits compared to current state of the arts. Different from ViT that typically yields low resolution outputs and

incurs high computational and memory costs, PVT not only can be trained on dense partitions of an image to achieve high

output resolution, which is important for dense prediction, but also uses a progressive shrinking pyramid to reduce the

computations of large feature maps. PVT inherits the advantages of both CNN and Transformer, making it a unified

backbone for various vision tasks without convolutions, where it can be used as a direct replacement for CNN backbones.

We validate PVT through extensive experiments, showing that it boosts the performance of many downstream tasks, including

object detection, instance and semantic segmentation. For example, with a comparable number of parameters, PVT+RetinaNet

achieves 40.4 AP on the COCO dataset, surpassing ResNet50+RetinaNet (36.3 AP) by 4.1 absolute AP (see Figure 2). We hope

that PVT could serve as an alternative and useful backbone for pixel-level predictions and facilitate future research.*

This model was contributed by [Xrenya](https://huggingface.co/Xrenya). The original code can be found [here](https://github.com/whai362/PVT).

- PVTv1 on ImageNet-1K

| **Model variant** | **Size** | **Acc@1** | **Params (M)** |
|-------------------|:--------:|:---------:|:--------------:|
| PVT-Tiny          |   224    |   75.1    |      13.2      |
| PVT-Small         |   224    |   79.8    |      24.5      |
| PVT-Medium        |   224    |   81.2    |      44.2      |
| PVT-Large         |   224    |   81.7    |      61.4      |

## PvtConfig

[[autodoc]] PvtConfig

## PvtImageProcessor

[[autodoc]] PvtImageProcessor

- preprocess

## PvtForImageClassification

[[autodoc]] PvtForImageClassification

- forward

## PvtModel

[[autodoc]] PvtModel

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/pvt.md |

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Running the simulation

This section will describe how to run the physics simulation and collect data.

The code in this section is reflected in [examples/basic/simple_physics.py](https://github.com/huggingface/simulate/blob/main/examples/basic/simple_physics.py).

Start by displaying a simple scene with a cube above a plane, viewed by a camera:

```

import simulate as sm

scene = sm.Scene(engine="Unity")
scene += sm.LightSun()

# Static floor: a thin box spanning 20 x 20 units
scene += sm.Box(
    name="floor",
    position=[0, 0, 0],
    bounds=[-10, 10, -0.1, 0, -10, 10],
    material=sm.Material.GRAY75,
)

# Cube placed 3 units above the floor; the rigid body lets gravity act on it
scene += sm.Box(
    name="cube",
    position=[0, 3, 0],
    scaling=[1, 1, 1],
    material=sm.Material.GRAY50,
    with_rigid_body=True,
)

scene += sm.Camera(name="camera", position=[0, 2, -10])
scene.show()

# Prevent auto-closing when running locally
input("Press enter to continue...")

```

Note that we use the Unity engine backend, which supports physics simulation, and specify `with_rigid_body=True` on the cube to enable forces like gravity.

Next, run the simulation for 60 timesteps:

```

for i in range(60):
    event = scene.step()

```

You should see the cube falling onto the plane.

`step()` tells the backend to step the simulation forward. It accepts keyword arguments, which allows a wide variety of customizable behavior.

The backend then returns a dictionary of data as an `event`. By default, this dictionary contains `nodes` and `frames`.

`nodes` is a dictionary containing all assets in the scene and their physical parameters such as position, rotation, and velocity.

Try graphing the height of the cube as it falls:

```

import numpy as np
import matplotlib.pyplot as plt

plt.ion()
_, ax1 = plt.subplots(1, 1)
heights = []

for i in range(60):
    event = scene.step()
    # Index 1 of the position is the height (y-axis)
    height = event["nodes"]["cube"]["position"][1]
    heights.append(height)

    # Redraw the curve after every step
    ax1.clear()
    ax1.set_xlim([0, 60])
    ax1.set_ylim([0, 3])
    ax1.plot(np.arange(len(heights)), heights)
    plt.pause(0.1)

```
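
If you prefer to collect the data first and plot it afterwards, the lookup used above can be wrapped in a small helper. This is only a sketch: `cube_heights` is a hypothetical name, and the events below are hand-written stand-ins that mimic the `event["nodes"]["cube"]["position"]` structure rather than real output from `scene.step()`:

```python
def cube_heights(events, node_name="cube"):
    """Collect the height (index 1 of `position`) of one node across events."""
    return [event["nodes"][node_name]["position"][1] for event in events]


# Hand-written stand-ins for the dictionaries returned by `scene.step()`.
fake_events = [
    {"nodes": {"cube": {"position": [0.0, 3.0, 0.0]}}},
    {"nodes": {"cube": {"position": [0.0, 2.5, 0.0]}}},
    {"nodes": {"cube": {"position": [0.0, 1.5, 0.0]}}},
]

print(cube_heights(fake_events))
```

With a real scene, you would append each `event` returned by `scene.step()` to a list and pass that list to the helper.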

`frames` is a dictionary containing the rendering from each camera.

Try modifying the code to display these frames in matplotlib:

```

plt.ion()
_, ax1 = plt.subplots(1, 1)

for i in range(60):
    event = scene.step()
    # Reorder the axes of the camera frame before displaying it
    im = np.array(event["frames"]["camera"], dtype=np.uint8).transpose(1, 2, 0)
    ax1.clear()
    ax1.imshow(im)
    plt.pause(0.1)

```
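
The `transpose(1, 2, 0)` call suggests that frames arrive channel-first, while `imshow` expects the channel axis last. The sketch below illustrates that reordering on a made-up array; the 3 x 4 x 6 size is an assumption for illustration, not the actual camera resolution:

```python
import numpy as np

# Stand-in for event["frames"]["camera"]: 3 channels, 4 rows, 6 columns.
fake_frame = np.zeros((3, 4, 6), dtype=np.uint8)
fake_frame[0] = 255  # fill the first channel only

# Move the channel axis to the end: (channels, height, width) -> (height, width, channels)
image = fake_frame.transpose(1, 2, 0)
print(image.shape)
```

After the transpose, each pixel `image[row, col]` holds its three channel values together, which is the layout matplotlib draws.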

🤗 Simulate is highly customizable. If you aren't interested in returning this data, you can modify the scene configuration prior to calling `show()` to disable it:

```

scene.config.return_nodes = False

scene.config.return_frames = False

scene.show()

```

For advanced use, you can extend this functionality using [plugins](../howto/plugins).

In this library, we include an extensive plugin for reinforcement learning. If you are using 🤗 Simulate for reinforcement learning, continue with [reinforcement learning how-tos](../howto/rl).

| huggingface/simulate/blob/main/docs/source/tutorials/running_the_simulation.mdx |

# Deprecated Pipelines

This folder contains pipelines that have very low usage as measured by model downloads, issues and PRs. While you can still use the pipelines just as before, we will stop testing the pipelines and will not accept any changes to existing files. | huggingface/diffusers/blob/main/src/diffusers/pipelines/deprecated/README.md |

# Let's train and play with Huggy 🐶 [[train]]

<CourseFloatingBanner classNames="absolute z-10 right-0 top-0"

notebooks={[

{label: "Google Colab", value: "https://colab.research.google.com/github/huggingface/deep-rl-class/blob/master/notebooks/bonus-unit1/bonus-unit1.ipynb"}

]}

askForHelpUrl="http://hf.co/join/discord" />

We strongly **recommend students use Google Colab for the hands-on exercises** instead of running them on their personal computers.

By using Google Colab, **you can focus on learning and experimenting without worrying about the technical aspects** of setting up your environments.

## Let's train Huggy 🐶

**To start training Huggy, click the Open In Colab button** 👇:

[Open In Colab](https://colab.research.google.com/github/huggingface/deep-rl-class/blob/master/notebooks/bonus-unit1/bonus-unit1.ipynb)

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit2/thumbnail.png" alt="Bonus Unit 1 Thumbnail">

In this notebook, we'll reinforce what we learned in the first Unit by **teaching Huggy the Dog to fetch the stick and then play with it directly in your browser**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/huggy.jpg" alt="Huggy"/>

### The environment 🎮

- Huggy the Dog, an environment created by [Thomas Simonini](https://twitter.com/ThomasSimonini) based on [Puppo The Corgi](https://blog.unity.com/technology/puppo-the-corgi-cuteness-overload-with-the-unity-ml-agents-toolkit)

### The library used 📚

- [MLAgents](https://github.com/Unity-Technologies/ml-agents)

We're constantly trying to improve our tutorials, so **if you find any issues in this notebook**, please [open an issue on the GitHub repo](https://github.com/huggingface/deep-rl-class/issues).

## Objectives of this notebook 🏆

At the end of the notebook, you will:

- Understand **the state space, action space, and reward function used to train Huggy**.

- **Train your own Huggy** to fetch the stick.

- Be able to play **with your trained Huggy directly in your browser**.

## Prerequisites 🏗️

Before diving into the notebook, you need to:

🔲 📚 **Develop an understanding of the foundations of Reinforcement learning** (MC, TD, Rewards hypothesis...) by doing Unit 1

🔲 📚 **Read the introduction to Huggy** by doing Bonus Unit 1

## Set the GPU 💪

- To **accelerate the agent's training, we'll use a GPU**. To do that, go to `Runtime > Change Runtime type`

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/gpu-step1.jpg" alt="GPU Step 1">

- `Hardware Accelerator > GPU`

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/gpu-step2.jpg" alt="GPU Step 2">

## Clone the repository and install the dependencies 🔽

- We need to clone the repository that contains ML-Agents.

```bash

# Clone the repository (can take 3min)

git clone --depth 1 https://github.com/Unity-Technologies/ml-agents

```

```bash

# Go inside the repository and install the package (can take 3min)

%cd ml-agents

pip3 install -e ./ml-agents-envs

pip3 install -e ./ml-agents

```

## Download the environment zip file and move it to `./trained-envs-executables/linux/`

- Our environment executable is in a zip file.

- We need to download it and place it in `./trained-envs-executables/linux/`

```bash

mkdir ./trained-envs-executables

mkdir ./trained-envs-executables/linux

```

```bash

wget --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1zv3M95ZJTWHUVOWT6ckq_cm98nft8gdF' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1zv3M95ZJTWHUVOWT6ckq_cm98nft8gdF" -O ./trained-envs-executables/linux/Huggy.zip && rm -rf /tmp/cookies.txt

```

Download the file Huggy.zip from https://drive.google.com/uc?export=download&id=1zv3M95ZJTWHUVOWT6ckq_cm98nft8gdF using `wget`. Check out the full solution to download large files from GDrive [here](https://bcrf.biochem.wisc.edu/2021/02/05/download-google-drive-files-using-wget/)

```bash

%%capture

unzip -d ./trained-envs-executables/linux/ ./trained-envs-executables/linux/Huggy.zip

```

Make sure your file is accessible

```bash

chmod -R 755 ./trained-envs-executables/linux/Huggy

```

## Let's recap how this environment works

### The State Space: what Huggy perceives.

Huggy doesn't "see" his environment. Instead, we provide him information about the environment:

- The target (stick) position

- The relative position between himself and the target

- The orientation of his legs.

Given all this information, Huggy **can decide which action to take next to fulfill his goal**.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/huggy.jpg" alt="Huggy" width="100%">

### The Action Space: what moves Huggy can do

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/huggy-action.jpg" alt="Huggy action" width="100%">

**Joint motors drive Huggy's legs**. This means that to get to the target, Huggy needs to **learn to rotate the joint motors of each of his legs correctly so he can move**.

### The Reward Function

The reward function is designed so that **Huggy will fulfill his goal** : fetch the stick.

Remember that one of the foundations of Reinforcement Learning is the *reward hypothesis*: a goal can be described as the **maximization of the expected cumulative reward**.

Here, our goal is that Huggy **goes towards the stick but without spinning too much**. Hence, our reward function must translate this goal.

Our reward function:

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/reward.jpg" alt="Huggy reward function" width="100%">

- *Orientation bonus*: we **reward him for getting close to the target**.

- *Time penalty*: a fixed-time penalty given at every action to **force him to get to the stick as fast as possible**.

- *Rotation penalty*: we penalize Huggy if **he spins too much and turns too quickly**.

- *Getting to the target reward*: we reward Huggy for **reaching the target**.
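
The reward hypothesis mentioned above can be made concrete with a short sketch of the discounted cumulative reward. The default `gamma=0.995` mirrors the `gamma` value in the Huggy config file on this page; the per-step rewards are made-up numbers for illustration, not values produced by the environment:

```python
def discounted_return(rewards, gamma=0.995):
    """Sum of rewards where the reward at step t is weighted by gamma**t."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future return.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Made-up episode: small time penalties, then a bonus for reaching the stick.
episode = [-0.01, -0.01, -0.01, 1.0]
print(discounted_return(episode))
```

The time penalty makes longer episodes worth less, so maximizing this quantity pushes Huggy to reach the stick quickly.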

## Check the Huggy config file

- In ML-Agents, you define the **training hyperparameters in config.yaml files.**

- For the scope of this notebook, we're not going to modify the hyperparameters, but if you want to try as an experiment, Unity provides very [good documentation explaining each of them here](https://github.com/Unity-Technologies/ml-agents/blob/main/docs/Training-Configuration-File.md).

- We need to create a config file for Huggy.

- Go to `/content/ml-agents/config/ppo`

- Create a new file called `Huggy.yaml`

- Copy and paste the content below 🔽

```

behaviors:
  Huggy:
    trainer_type: ppo
    hyperparameters:
      batch_size: 2048
      buffer_size: 20480
      learning_rate: 0.0003
      beta: 0.005
      epsilon: 0.2
      lambd: 0.95
      num_epoch: 3
      learning_rate_schedule: linear
    network_settings:
      normalize: true
      hidden_units: 512
      num_layers: 3
      vis_encode_type: simple
    reward_signals:
      extrinsic:
        gamma: 0.995
        strength: 1.0
    checkpoint_interval: 200000
    keep_checkpoints: 15
    max_steps: 2e6
    time_horizon: 1000
    summary_freq: 50000

```

- Don't forget to save the file!

- **In the case you want to modify the hyperparameters**, in Google Colab notebook, you can click here to open the config.yaml: `/content/ml-agents/config/ppo/Huggy.yaml`
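
A few of these values are easier to reason about together. The sketch below only does arithmetic on the numbers from the config above. It assumes `checkpoint_interval` and `max_steps` are both counted in environment steps and that each PPO update splits the buffer into batches of `batch_size`; see the ML-Agents configuration documentation for the exact definitions:

```python
# Values copied from Huggy.yaml above.
max_steps = int(2e6)
checkpoint_interval = 200_000
keep_checkpoints = 15
buffer_size = 20_480
batch_size = 2_048

# How many checkpoints a full run writes, and whether all of them are kept.
checkpoints_written = max_steps // checkpoint_interval
all_kept = checkpoints_written <= keep_checkpoints

# How many batches one pass over the buffer yields.
batches_per_pass = buffer_size // batch_size

print(checkpoints_written, all_kept, batches_per_pass)
```

Under these assumptions a full run writes 10 checkpoints, all of which fit within `keep_checkpoints`, and each pass over the buffer uses 10 batches.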

We’re now ready to train our agent 🔥.

## Train our agent

To train our agent, we just need to **launch mlagents-learn and select the executable containing the environment.**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/mllearn.png" alt="ml learn function" width="100%">

With ML Agents, we run a training script. We define four parameters:

1. `mlagents-learn <config>`: the path where the hyperparameter config file is.

2. `--env`: where the environment executable is.

3. `--run-id`: the name you want to give to your training run.

4. `--no-graphics`: to not launch the visualization during the training.

Train the model and use the `--resume` flag to continue training in case of interruption.

> It will fail the first time you use `--resume`; run the block again to bypass the error.

The training will take 30 to 45 minutes depending on your machine (don't forget to **set up a GPU**). Go take a ☕️, you deserve it 🤗.

```bash

mlagents-learn ./config/ppo/Huggy.yaml --env=./trained-envs-executables/linux/Huggy/Huggy --run-id="Huggy" --no-graphics

```
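
If you launch training from a Python script rather than a notebook cell, the same command can be assembled programmatically. The sketch below only builds and prints the command; `build_train_command` is a hypothetical helper, and the flags are the ones described above:

```python
import shlex


def build_train_command(config_path, env_path, run_id, resume=False, no_graphics=True):
    """Assemble the mlagents-learn command line as a list of arguments."""
    command = ["mlagents-learn", config_path, f"--env={env_path}", f"--run-id={run_id}"]
    if no_graphics:
        command.append("--no-graphics")
    if resume:
        # Continue an interrupted run that used the same run id.
        command.append("--resume")
    return command


command = build_train_command(
    "./config/ppo/Huggy.yaml",
    "./trained-envs-executables/linux/Huggy/Huggy",
    "Huggy",
    resume=True,
)
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` from inside the `ml-agents` directory.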

## Push the agent to the 🤗 Hub

- Now that we've trained our agent, we’re **ready to push it to the Hub to be able to play with Huggy in your browser🔥.**

To be able to share your model with the community there are three more steps to follow:

1️⃣ (If it's not already done) create an account on Hugging Face ➡ https://huggingface.co/join

2️⃣ Sign in and then get your token from the Hugging Face website.

- Create a new token (https://huggingface.co/settings/tokens) **with write role**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/create-token.jpg" alt="Create HF Token">

- Copy the token

- Run the cell below and paste the token

```python

from huggingface_hub import notebook_login

notebook_login()

```

If you don't want to use Google Colab or a Jupyter Notebook, you need to use this command instead: `huggingface-cli login`

Then, we simply need to run `mlagents-push-to-hf`.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/mlpush.png" alt="ml push function" width="100%">

And we define 4 parameters:

1. `--run-id`: the name of the training run id.

2. `--local-dir`: where the agent was saved. It’s `results/<run-id>`, so in this example `./results/Huggy`.

3. `--repo-id`: the name of the Hugging Face repo you want to create or update. It’s always `<your huggingface username>/<the repo name>`.

If the repo does not exist **it will be created automatically**.

4. `--commit-message`: since HF repos are git repositories you need to give a commit message.

```bash

mlagents-push-to-hf --run-id="Huggy" --local-dir="./results/Huggy" --repo-id="ThomasSimonini/ppo-Huggy" --commit-message="Huggy"

```

If everything worked you should see this at the end of the process (but with a different url 😆) :

```

Your model is pushed to the hub. You can view your model here: https://huggingface.co/ThomasSimonini/ppo-Huggy

```

It’s the link to your model repository. The repository contains a model card that explains how to use the model, your Tensorboard logs and your config file. **What’s awesome is that it’s a git repository, which means you can have different commits, update your repository with a new push, open Pull Requests, etc.**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/modelcard.png" alt="model card" width="100%">

But now comes the best part: **being able to play with Huggy online 👀.**

## Play with your Huggy 🐕

This step is the simplest:

- Open the Huggy game in your browser: https://huggingface.co/spaces/ThomasSimonini/Huggy

- Click on Play with my Huggy model

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/load-huggy.jpg" alt="load-huggy" width="100%">

1. In step 1, choose your model repository, which is the model id (in my case ThomasSimonini/ppo-Huggy).

2. In step 2, **choose which model you want to replay**:

- I have multiple ones, since we saved a model every 200000 timesteps (the `checkpoint_interval` set in `Huggy.yaml`).

- But since I want the most recent one, I choose `Huggy.onnx`

👉 It's good **to try different model checkpoints to see how the agent improves.**

Congrats on finishing this bonus unit!

You can now sit and enjoy playing with your Huggy 🐶. And don't **forget to spread the love by sharing Huggy with your friends 🤗**. And if you share about it on social media, **please tag us @huggingface and me @simoninithomas**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/unit-bonus1/huggy-cover.jpeg" alt="Huggy cover" width="100%">

## Keep Learning, Stay awesome 🤗

| huggingface/deep-rl-class/blob/main/units/en/unitbonus1/train.mdx |

# CSP-ResNet

**CSPResNet** is a convolutional neural network where we apply the Cross Stage Partial Network (CSPNet) approach to [ResNet](https://paperswithcode.com/method/resnet). The CSPNet partitions the feature map of the base layer into two parts and then merges them through a cross-stage hierarchy. The use of a split and merge strategy allows for more gradient flow through the network.
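
The split-and-merge idea can be sketched in a few lines of NumPy. This is an illustration under simplifying assumptions, not the timm implementation: `cross_stage_partial` is a hypothetical helper, the split is an even channel split, and `block` stands in for the stack of residual blocks (the real model also uses transition convolutions around the merge):

```python
import numpy as np


def cross_stage_partial(features, block):
    """Split channels in two, run `block` on one half, then concatenate both halves."""
    channels = features.shape[0]
    shortcut = features[: channels // 2]    # bypasses the block entirely
    processed = block(features[channels // 2 :])
    return np.concatenate([shortcut, processed], axis=0)


# Toy feature map: 8 channels of 4 x 4 ones; the "block" simply doubles its input.
features = np.ones((8, 4, 4))
merged = cross_stage_partial(features, block=lambda x: 2.0 * x)
print(merged.shape)
```

The first half of the channels reaches the merge untouched, which is the shortcut path that carries extra gradient flow through the network.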

## How do I use this model on an image?

To load a pretrained model:

```py

>>> import timm

>>> model = timm.create_model('cspresnet50', pretrained=True)

>>> model.eval()

```

To load and preprocess the image:

```py

>>> import urllib.request

>>> from PIL import Image

>>> from timm.data import resolve_data_config

>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)

>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

>>> urllib.request.urlretrieve(url, filename)

>>> img = Image.open(filename).convert('RGB')

>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```py

>>> import torch

>>> with torch.no_grad():
...     out = model(tensor)

>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)

>>> print(probabilities.shape)

>>> # prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```py

>>> # Get imagenet class mappings

>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

>>> urllib.request.urlretrieve(url, filename)

>>> with open("imagenet_classes.txt", "r") as f:
...     categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image

>>> top5_prob, top5_catid = torch.topk(probabilities, 5)

>>> for i in range(top5_prob.size(0)):
...     print(categories[top5_catid[i]], top5_prob[i].item())

>>> # prints class names and probabilities like:

>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `cspresnet50`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('cspresnet50', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX

@misc{wang2019cspnet,

title={CSPNet: A New Backbone that can Enhance Learning Capability of CNN},

author={Chien-Yao Wang and Hong-Yuan Mark Liao and I-Hau Yeh and Yueh-Hua Wu and Ping-Yang Chen and Jun-Wei Hsieh},

year={2019},

eprint={1911.11929},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

<!--

Type: model-index

Collections:

- Name: CSP ResNet

Paper:

Title: 'CSPNet: A New Backbone that can Enhance Learning Capability of CNN'

URL: https://paperswithcode.com/paper/cspnet-a-new-backbone-that-can-enhance

Models:

- Name: cspresnet50

In Collection: CSP ResNet

Metadata:

FLOPs: 5924992000

Parameters: 21620000

File Size: 86679303

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- Label Smoothing

- Polynomial Learning Rate Decay

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

ID: cspresnet50

LR: 0.1

Layers: 50

Crop Pct: '0.887'

Momentum: 0.9

Batch Size: 128

Image Size: '256'

Weight Decay: 0.005

Interpolation: bilinear

Training Steps: 8000000

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/cspnet.py#L415

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/cspresnet50_ra-d3e8d487.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.57%

Top 5 Accuracy: 94.71%

--> | huggingface/pytorch-image-models/blob/main/hfdocs/source/models/csp-resnet.mdx |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Causal language modeling

[[open-in-colab]]

There are two types of language modeling, causal and masked. This guide illustrates causal language modeling.

Causal language models are frequently used for text generation. You can use these models for creative applications like

choosing your own text adventure or an intelligent coding assistant like Copilot or CodeParrot.

<Youtube id="Vpjb1lu0MDk"/>

Causal language modeling predicts the next token in a sequence of tokens, and the model can only attend to tokens on

the left. This means the model cannot see future tokens. GPT-2 is an example of a causal language model.
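
The "can only attend to tokens on the left" rule is usually drawn as a lower-triangular mask. The pure-Python sketch below builds one for a four-token sequence; `causal_mask` is a hypothetical helper for illustration, not a function from 🤗 Transformers:

```python
def causal_mask(length):
    """Row i marks which positions token i may attend to (1 = visible, 0 = hidden)."""
    return [
        [1 if key <= query else 0 for key in range(length)]
        for query in range(length)
    ]


for row in causal_mask(4):
    print(row)
```

Each row has ones up to and including its own position and zeros afterwards, so no token can see the tokens that follow it.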

This guide will show you how to:

1. Finetune [DistilGPT2](https://huggingface.co/distilgpt2) on the [r/askscience](https://www.reddit.com/r/askscience/) subset of the [ELI5](https://huggingface.co/datasets/eli5) dataset.

2. Use your finetuned model for inference.

<Tip>

You can finetune other architectures for causal language modeling following the same steps in this guide.

Choose one of the following architectures:

<!--This tip is automatically generated by `make fix-copies`, do not fill manually!-->

[BART](../model_doc/bart), [BERT](../model_doc/bert), [Bert Generation](../model_doc/bert-generation), [BigBird](../model_doc/big_bird), [BigBird-Pegasus](../model_doc/bigbird_pegasus), [BioGpt](../model_doc/biogpt), [Blenderbot](../model_doc/blenderbot), [BlenderbotSmall](../model_doc/blenderbot-small), [BLOOM](../model_doc/bloom), [CamemBERT](../model_doc/camembert), [CodeLlama](../model_doc/code_llama), [CodeGen](../model_doc/codegen), [CPM-Ant](../model_doc/cpmant), [CTRL](../model_doc/ctrl), [Data2VecText](../model_doc/data2vec-text), [ELECTRA](../model_doc/electra), [ERNIE](../model_doc/ernie), [Falcon](../model_doc/falcon), [Fuyu](../model_doc/fuyu), [GIT](../model_doc/git), [GPT-Sw3](../model_doc/gpt-sw3), [OpenAI GPT-2](../model_doc/gpt2), [GPTBigCode](../model_doc/gpt_bigcode), [GPT Neo](../model_doc/gpt_neo), [GPT NeoX](../model_doc/gpt_neox), [GPT NeoX Japanese](../model_doc/gpt_neox_japanese), [GPT-J](../model_doc/gptj), [LLaMA](../model_doc/llama), [Marian](../model_doc/marian), [mBART](../model_doc/mbart), [MEGA](../model_doc/mega), [Megatron-BERT](../model_doc/megatron-bert), [Mistral](../model_doc/mistral), [Mixtral](../model_doc/mixtral), [MPT](../model_doc/mpt), [MusicGen](../model_doc/musicgen), [MVP](../model_doc/mvp), [OpenLlama](../model_doc/open-llama), [OpenAI GPT](../model_doc/openai-gpt), [OPT](../model_doc/opt), [Pegasus](../model_doc/pegasus), [Persimmon](../model_doc/persimmon), [Phi](../model_doc/phi), [PLBart](../model_doc/plbart), [ProphetNet](../model_doc/prophetnet), [QDQBert](../model_doc/qdqbert), [Reformer](../model_doc/reformer), [RemBERT](../model_doc/rembert), [RoBERTa](../model_doc/roberta), [RoBERTa-PreLayerNorm](../model_doc/roberta-prelayernorm), [RoCBert](../model_doc/roc_bert), [RoFormer](../model_doc/roformer), [RWKV](../model_doc/rwkv), [Speech2Text2](../model_doc/speech_to_text_2), [Transformer-XL](../model_doc/transfo-xl), [TrOCR](../model_doc/trocr), [Whisper](../model_doc/whisper), [XGLM](../model_doc/xglm), 
[XLM](../model_doc/xlm), [XLM-ProphetNet](../model_doc/xlm-prophetnet), [XLM-RoBERTa](../model_doc/xlm-roberta), [XLM-RoBERTa-XL](../model_doc/xlm-roberta-xl), [XLNet](../model_doc/xlnet), [X-MOD](../model_doc/xmod)

<!--End of the generated tip-->

</Tip>

Before you begin, make sure you have all the necessary libraries installed:

```bash

pip install transformers datasets evaluate

```

We encourage you to log in to your Hugging Face account so you can upload and share your model with the community. When prompted, enter your token to log in:

```py

>>> from huggingface_hub import notebook_login

>>> notebook_login()

```

## Load ELI5 dataset

Start by loading a smaller subset of the r/askscience subset of the ELI5 dataset from the 🤗 Datasets library.

This'll give you a chance to experiment and make sure everything works before spending more time training on the full dataset.

```py

>>> from datasets import load_dataset

>>> eli5 = load_dataset("eli5", split="train_asks[:5000]")

```

Split the dataset's `train_asks` split into a train and test set with the [`~datasets.Dataset.train_test_split`] method:

```py

>>> eli5 = eli5.train_test_split(test_size=0.2)

```

Then take a look at an example:

```py

>>> eli5["train"][0]

{'answers': {'a_id': ['c3d1aib', 'c3d4lya'],

'score': [6, 3],

'text': ["The velocity needed to remain in orbit is equal to the square root of Newton's constant times the mass of earth divided by the distance from the center of the earth. I don't know the altitude of that specific mission, but they're usually around 300 km. That means he's going 7-8 km/s.\n\nIn space there are no other forces acting on either the shuttle or the guy, so they stay in the same position relative to each other. If he were to become unable to return to the ship, he would presumably run out of oxygen, or slowly fall into the atmosphere and burn up.",

"Hope you don't mind me asking another question, but why aren't there any stars visible in this photo?"]},

'answers_urls': {'url': []},

'document': '',

'q_id': 'nyxfp',

'selftext': '_URL_0_\n\nThis was on the front page earlier and I have a few questions about it. Is it possible to calculate how fast the astronaut would be orbiting the earth? Also how does he stay close to the shuttle so that he can return safely, i.e is he orbiting at the same speed and can therefore stay next to it? And finally if his propulsion system failed, would he eventually re-enter the atmosphere and presumably die?',

'selftext_urls': {'url': ['http://apod.nasa.gov/apod/image/1201/freeflyer_nasa_3000.jpg']},

'subreddit': 'askscience',

'title': 'Few questions about this space walk photograph.',

'title_urls': {'url': []}}

```

While this may look like a lot, you're only really interested in the `text` field. What's cool about language modeling tasks is that you don't need labels (which is why they are also described as unsupervised): the next word *is* the label.
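
To make the "next word is the label" idea concrete, here is a minimal sketch in plain Python. The word-level `tokens` list is a made-up example (a real tokenizer produces subword IDs instead), and the variable names are chosen for illustration only:

```py
# Toy illustration: in causal language modeling, the target at each position
# is simply the next token of the same sequence.
tokens = ["The", "cat", "sat", "on", "the", "mat"]  # hypothetical word-level tokens

inputs = tokens[:-1]   # every token except the last one
targets = tokens[1:]   # the same sequence shifted by one position

# Print each growing context next to the token the model should predict.
for context_end, target in enumerate(targets, start=1):
    print(f"{' '.join(tokens[:context_end])!r} -> {target!r}")
```

Every position in the text supplies its own training target, so no manual annotation is required.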

## Preprocess

<Youtube id="ma1TrR7gE7I"/>

The next step is to load a DistilGPT2 tokenizer to process the `text` subfield:

```py

>>> from transformers import AutoTokenizer

>>> tokenizer = AutoTokenizer.from_pretrained("distilgpt2")

```

As the example above shows, the `text` field is nested inside `answers`. This means you'll need to extract the `text` subfield from its nested structure with the [`flatten`](https://huggingface.co/docs/datasets/process#flatten) method:

```py

>>> eli5 = eli5.flatten()

>>> eli5["train"][0]

{'answers.a_id': ['c3d1aib', 'c3d4lya'],

'answers.score': [6, 3],

'answers.text': ["The velocity needed to remain in orbit is equal to the square root of Newton's constant times the mass of earth divided by the distance from the center of the earth. I don't know the altitude of that specific mission, but they're usually around 300 km. That means he's going 7-8 km/s.\n\nIn space there are no other forces acting on either the shuttle or the guy, so they stay in the same position relative to each other. If he were to become unable to return to the ship, he would presumably run out of oxygen, or slowly fall into the atmosphere and burn up.",

"Hope you don't mind me asking another question, but why aren't there any stars visible in this photo?"],

'answers_urls.url': [],

'document': '',

'q_id': 'nyxfp',

'selftext': '_URL_0_\n\nThis was on the front page earlier and I have a few questions about it. Is it possible to calculate how fast the astronaut would be orbiting the earth? Also how does he stay close to the shuttle so that he can return safely, i.e is he orbiting at the same speed and can therefore stay next to it? And finally if his propulsion system failed, would he eventually re-enter the atmosphere and presumably die?',

'selftext_urls.url': ['http://apod.nasa.gov/apod/image/1201/freeflyer_nasa_3000.jpg'],

'subreddit': 'askscience',

'title': 'Few questions about this space walk photograph.',

'title_urls.url': []}

```
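
The effect of flattening can be sketched in plain Python. This is only an illustration of the dotted-key naming shown above, not how 🤗 Datasets implements `flatten`; `flatten_record` is a hypothetical helper and `example` is a trimmed-down copy of the record:

```py
def flatten_record(record, parent_key=""):
    """Flatten nested dicts into one level with dot-separated keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}.{key}" if parent_key else key
        if isinstance(value, dict):
            # Recurse into nested dicts and merge their flattened keys.
            flat.update(flatten_record(value, full_key))
        else:
            flat[full_key] = value
    return flat


# Trimmed-down version of the example record above.
example = {"answers": {"a_id": ["c3d1aib", "c3d4lya"], "score": [6, 3]}, "q_id": "nyxfp"}
flat_example = flatten_record(example)
print(flat_example)
```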

Each subfield is now a separate column, as indicated by the `answers` prefix, and the `answers.text` column holds a list of strings. Instead of tokenizing each answer separately, convert the list to a single string so you can tokenize the answers jointly.

Here is a first preprocessing function to join the list of strings for each example and tokenize the result:

```py

>>> def preprocess_function(examples):
...     return tokenizer([" ".join(x) for x in examples["answers.text"]])

```
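
To see what the join step produces before tokenization, here is a small sketch on a made-up batch. The `batch` dictionary is hypothetical, but it follows the column-to-list layout that a batched `map` call passes to the function:

```py
# Hypothetical batch: each column maps to a list with one entry per example,
# and each "answers.text" entry is itself a list of answer strings.
batch = {"answers.text": [["First answer.", "Second answer."], ["Only answer."]]}

# Join the answers of each example into a single string, as the
# preprocessing function does before calling the tokenizer.
joined = [" ".join(answers) for answers in batch["answers.text"]]
print(joined)
```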

To apply this preprocessing function over the entire dataset, use the 🤗 Datasets [`~datasets.Dataset.map`] method. You can speed up the `map` function by setting `batched=True` to process multiple elements of the dataset at once, and increasing the number of processes with `num_proc`. Remove any columns you don't need:

```py

>>> tokenized_eli5 = eli5.map(
...     preprocess_function,
...     batched=True,
...     num_proc=4,
...     remove_columns=eli5["train"].column_names,
... )

```

This dataset contains the token sequences, but some of these are longer than the maximum input length for the model.

You can now use a second preprocessing function to:

- concatenate all the sequences
- split the concatenated sequences into shorter chunks defined by `block_size`, which should be both shorter than the maximum input length and short enough for your GPU RAM.

```py

>>> block_size = 128


>>> def group_texts(examples):
...     # Concatenate all texts.
...     concatenated_examples = {k: sum(examples[k], []) for k in examples.keys()}
...     total_length = len(concatenated_examples[list(examples.keys())[0]])
...     # Drop the small remainder. You could pad instead if the model supported it;
...     # customize this part to your needs.
...     if total_length >= block_size:
...         total_length = (total_length // block_size) * block_size
...     # Split by chunks of block_size.
...     result = {
...         k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
...         for k, t in concatenated_examples.items()
...     }
...     result["labels"] = result["input_ids"].copy()
...     return result

```
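
To check the chunking logic by hand, the following sketch runs the same steps on a tiny made-up batch with a block size of 4. `group_texts_demo` is a hypothetical variant that takes `block_size` as an argument so it is self-contained, and the token IDs are invented:

```py
def group_texts_demo(examples, block_size):
    # Concatenate every column across the batch into one long list.
    concatenated = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated[list(examples.keys())[0]])
    # Drop the remainder that does not fill a whole block.
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size
    # Split each column into chunks of block_size.
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated.items()
    }
    result["labels"] = result["input_ids"].copy()
    return result


# Three tokenized examples with 10 tokens in total (made-up IDs).
toy_batch = {
    "input_ids": [[1, 2, 3], [4, 5, 6, 7], [8, 9, 10]],
    "attention_mask": [[1, 1, 1], [1, 1, 1, 1], [1, 1, 1]],
}
grouped = group_texts_demo(toy_batch, block_size=4)
print(grouped["input_ids"])
```

The 10 tokens yield two full blocks of 4, and the last two tokens are dropped. If a batch holds fewer tokens than `block_size`, nothing is dropped and the result is a single, shorter chunk.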

Apply the `group_texts` function over the entire dataset:

```py

>>> lm_dataset = tokenized_eli5.map(group_texts, batched=True, num_proc=4)

```

Now create a batch of examples using [`DataCollatorForLanguageModeling`]. It's more efficient to *dynamically pad* the sentences to the longest length in a batch during collation, instead of padding the whole dataset to the maximum length.

<frameworkcontent>

<pt>

Use the end-of-sequence token as the padding token and set `mlm=False`. With this setting, the collator uses the inputs themselves as labels; the shift by one position that turns them into next-token targets happens inside the model:

```py

>>> from transformers import DataCollatorForLanguageModeling

>>> tokenizer.pad_token = tokenizer.eos_token

>>> data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

```

</pt>

<tf>

Use the end-of-sequence token as the padding token and set `mlm=False`. With this setting, the collator uses the inputs themselves as labels; the shift by one position that turns them into next-token targets happens inside the model:

```py

>>> from transformers import DataCollatorForLanguageModeling

>>> tokenizer.pad_token = tokenizer.eos_token

>>> data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False, return_tensors="tf")

```

</tf>

</frameworkcontent>
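
The idea behind dynamic padding can be sketched without any library. This is an illustration of the concept only, not the collator's implementation; `pad_batch` is a hypothetical helper and `pad_id=0` is an arbitrary placeholder for the padding token ID:

```py
def pad_batch(sequences, pad_id):
    """Pad every sequence to the length of the longest one in this batch."""
    longest = max(len(seq) for seq in sequences)
    input_ids = [seq + [pad_id] * (longest - len(seq)) for seq in sequences]
    # 1 marks a real token, 0 marks padding the model should ignore.
    attention_mask = [[1] * len(seq) + [0] * (longest - len(seq)) for seq in sequences]
    return {"input_ids": input_ids, "attention_mask": attention_mask}


# Two made-up sequences of different lengths.
batch = pad_batch([[5, 6, 7], [8, 9]], pad_id=0)
print(batch)
```

Because the target length is computed per batch, a batch of short sequences is padded only up to its own longest member rather than to a global maximum.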

## Train

<frameworkcontent>

<pt>

<Tip>

If you aren't familiar with finetuning a model with the [`Trainer`], take a look at the [basic tutorial](../training#train-with-pytorch-trainer)!

</Tip>

You're ready to start training your model now! Load DistilGPT2 with [`AutoModelForCausalLM`]:

```py

>>> from transformers import AutoModelForCausalLM, TrainingArguments, Trainer

>>> model = AutoModelForCausalLM.from_pretrained("distilgpt2")

```

At this point, only three steps remain:

1. Define your training hyperparameters in [`TrainingArguments`]. The only required parameter is `output_dir` which specifies where to save your model. You'll push this model to the Hub by setting `push_to_hub=True` (you need to be signed in to Hugging Face to upload your model).

2. Pass the training arguments to [`Trainer`] along with the model, datasets, and data collator.

3. Call [`~Trainer.train`] to finetune your model.

```py

>>> training_args = TrainingArguments(
...     output_dir="my_awesome_eli5_clm-model",
...     evaluation_strategy="epoch",
...     learning_rate=2e-5,
...     weight_decay=0.01,
...     push_to_hub=True,
... )

>>> trainer = Trainer(
...     model=model,
...     args=training_args,
...     train_dataset=lm_dataset["train"],
...     eval_dataset=lm_dataset["test"],
...     data_collator=data_collator,
... )

>>> trainer.train()

```

Once training is completed, use the [`~transformers.Trainer.evaluate`] method to evaluate your model and get its perplexity:

```py

>>> import math

>>> eval_results = trainer.evaluate()

>>> print(f"Perplexity: {math.exp(eval_results['eval_loss']):.2f}")

Perplexity: 49.61

```
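
Perplexity is the exponential of the mean cross-entropy loss, which is why `math.exp` is applied to `eval_loss` above. The sketch below works through that relationship on made-up numbers; `token_probs` is a hypothetical list of the probabilities a model assigned to each correct next token:

```py
import math

# Hypothetical probabilities a model assigned to each correct next token.
token_probs = [0.25, 0.5, 0.125, 0.5]

# Cross-entropy loss is the mean negative log-likelihood of the correct tokens.
mean_loss = -sum(math.log(p) for p in token_probs) / len(token_probs)

# Perplexity is the exponential of that mean loss.
perplexity = math.exp(mean_loss)
print(f"loss={mean_loss:.4f} perplexity={perplexity:.4f}")
```

A lower perplexity means the model assigns higher probability, on average, to the tokens that actually occur.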

Then share your model to the Hub with the [`~transformers.Trainer.push_to_hub`] method so everyone can use your model:

```py

>>> trainer.push_to_hub()

```

</pt>

<tf>

<Tip>

If you aren't familiar with finetuning a model with Keras, take a look at the [basic tutorial](../training#train-a-tensorflow-model-with-keras)!

</Tip>

To finetune a model in TensorFlow, start by setting up an optimizer function, learning rate schedule, and some training hyperparameters:

```py

>>> from transformers import create_optimizer, AdamWeightDecay

>>> optimizer = AdamWeightDecay(learning_rate=2e-5, weight_decay_rate=0.01)

```

Then you can load DistilGPT2 with [`TFAutoModelForCausalLM`]:

```py

>>> from transformers import TFAutoModelForCausalLM

>>> model = TFAutoModelForCausalLM.from_pretrained("distilgpt2")

```

Convert your datasets to the `tf.data.Dataset` format with [`~transformers.TFPreTrainedModel.prepare_tf_dataset`]:

```py

>>> tf_train_set = model.prepare_tf_dataset(
...     lm_dataset["train"],
...     shuffle=True,
...     batch_size=16,
...     collate_fn=data_collator,
... )

>>> tf_test_set = model.prepare_tf_dataset(
...     lm_dataset["test"],
...     shuffle=False,
...     batch_size=16,
...     collate_fn=data_collator,
... )

```