text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

---

title: "Chat Templates: An End to the Silent Performance Killer"

thumbnail: /blog/assets/chat-templates/thumbnail.png

authors:

- user: rocketknight1

---

# Chat Templates

> *A spectre is haunting chat models - the spectre of incorrect formatting!*

## tl;dr

Chat models have been trained with very different formats for converting conversations into a single tokenizable string. Using a format different from the format a model was trained with will usually cause severe, silent performance degradation, so matching the format used during training is extremely important! Hugging Face tokenizers now have a `chat_template` attribute that can be used to save the chat format the model was trained with. This attribute contains a Jinja template that converts conversation histories into a correctly formatted string. Please see the [technical documentation](https://huggingface.co/docs/transformers/main/en/chat_templating) for information on how to write and apply chat templates in your code.

## Introduction

If you're familiar with the 🤗 Transformers library, you've probably written code like this:

```python

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

model = AutoModel.from_pretrained(checkpoint)

```

By loading the tokenizer and model from the same checkpoint, you ensure that inputs are tokenized in the way the model expects. If you pick a tokenizer from a different model, the input tokenization might be completely different, and your model's performance will be seriously damaged. The term for this is a **distribution shift** - the model has been learning data from one distribution (the tokenization it was trained with), and suddenly it has shifted to a completely different one.

Whether you're fine-tuning a model or using it directly for inference, it's always a good idea to minimize these distribution shifts and keep the input you give it as similar as possible to the input it was trained on. With regular language models, it's relatively easy to do that - simply load your tokenizer and model from the same checkpoint, and you're good to go.
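To make the tokenizer mismatch concrete, here is a minimal sketch with two toy whitespace tokenizers. The vocabularies and the `encode` helper are hypothetical and only for illustration; real tokenizers are far more complex, but the failure mode is the same: identical text, different token IDs.

```python
# Two hypothetical vocabularies that assign different IDs to the same words.
vocab_a = {"<unk>": 0, "hello": 1, "world": 2}
vocab_b = {"<unk>": 0, "world": 1, "hello": 2}


def encode(text, vocab):
    """Toy whitespace tokenizer: lowercase, split, and look up each token."""
    return [vocab.get(token, vocab["<unk>"]) for token in text.lower().split()]


ids_a = encode("Hello world", vocab_a)
ids_b = encode("Hello world", vocab_b)

# A model trained on vocab_a IDs would see ids_b as a different sentence.
print(ids_a, ids_b)
```

A model trained with `vocab_a` has never seen the ID sequence produced by `vocab_b` for this sentence, which is exactly the kind of shift that loading both objects from one checkpoint avoids.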

With chat models, however, it's a bit different. This is because "chat" is not just a single string of text that can be straightforwardly tokenized - it's a sequence of messages, each of which contains a `role` as well as `content`, which is the actual text of the message. Most commonly, the roles are "user" for messages sent by the user, "assistant" for responses written by the model, and optionally "system" for high-level directives given at the start of the conversation.

If that all seems a bit abstract, here's an example chat to make it more concrete:

```python

[

{"role": "user", "content": "Hey there!"},

{"role": "assistant", "content": "Nice to meet you!"}

]

```

This sequence of messages needs to be converted into a text string before it can be tokenized and used as input to a model. The problem, though, is that there are many ways to do this conversion! You could, for example, convert the list of messages into an "instant messenger" format:

```

User: Hey there!

Bot: Nice to meet you!

```

Or you could add special tokens to indicate the roles:

```

[USER] Hey there! [/USER]

[ASST] Nice to meet you! [/ASST]

```

Or you could add tokens to indicate the boundaries between messages, but insert the role information as a string:

```

<|im_start|>user

Hey there!<|im_end|>

<|im_start|>assistant

Nice to meet you!<|im_end|>

```

There are lots of ways to do this, and none of them is obviously the best or correct way to do it. As a result, different models have been trained with wildly different formatting. I didn't make these examples up; they're all real and being used by at least one active model! But once a model has been trained with a certain format, you really want to ensure that future inputs use the same format, or else you could get a performance-destroying distribution shift.

## Templates: A way to save format information

Right now, if you're lucky, the format you need is correctly documented somewhere in the model card. If you're unlucky, it isn't, so good luck if you want to use that model. In extreme cases, we've even put the whole prompt format in [a blog post](https://huggingface.co/blog/llama2#how-to-prompt-llama-2) to ensure that users don't miss it! Even in the best-case scenario, though, you have to locate the template information and manually code it up in your fine-tuning or inference pipeline. We think this is an especially dangerous issue because using the wrong chat format is a **silent error** - you won't get a loud failure or a Python exception to tell you something is wrong. The model will just perform much worse than it would have with the right format, and it'll be very difficult to debug the cause!

This is the problem that **chat templates** aim to solve. Chat templates are [Jinja template strings](https://jinja.palletsprojects.com/en/3.1.x/) that are saved and loaded with your tokenizer, and that contain all the information needed to turn a list of chat messages into a correctly formatted input for your model. Here are three chat template strings, corresponding to the three message formats above:

```jinja

{% for message in messages %}

{% if message['role'] == 'user' %}

{{ "User: " }}

{% else %}

{{ "Bot: " }}

{% endif %}

{{ message['content'] + '\n' }}

{% endfor %}

```

```jinja

{% for message in messages %}

{% if message['role'] == 'user' %}

{{ "[USER] " + message['content'] + " [/USER]" }}

{% else %}

{{ "[ASST] " + message['content'] + " [/ASST]" }}

{% endif %}

{{ '\n' }}

{% endfor %}

```

```jinja

{% for message in messages %}

{{'<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n'}}

{% endfor %}

```

If you're unfamiliar with Jinja, I strongly recommend that you take a moment to look at these template strings, and their corresponding template outputs, and see if you can convince yourself that you understand how the template turns a list of messages into a formatted string! The syntax is very similar to Python in a lot of ways.
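To check your understanding, you can render a template yourself. The sketch below uses the plain `jinja2` package directly, which is an assumption made for illustration; `transformers` renders chat templates through its own Jinja environment, so treat this as a way to explore the syntax rather than as the library's implementation.

```python
from jinja2 import Template

messages = [
    {"role": "user", "content": "Hey there!"},
    {"role": "assistant", "content": "Nice to meet you!"},
]

# The ChatML-style template, written as one string so that no
# whitespace from the template source leaks into the output.
chatml_template = (
    "{% for message in messages %}"
    "{{ '<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n' }}"
    "{% endfor %}"
)

rendered = Template(chatml_template).render(messages=messages)
print(rendered)
```

Writing the template as a single string matters: any newlines or indentation outside the `{% ... %}` and `{{ ... }}` tags would be copied into the rendered chat.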

## Why templates?

Although Jinja can be confusing at first if you're unfamiliar with it, in practice we find that Python programmers can pick it up quickly. During development of this feature, we considered other approaches, such as a limited system to allow users to specify per-role prefixes and suffixes for messages. We found that this could become confusing and unwieldy, and was so inflexible that hacky workarounds were needed for several models. Templating, on the other hand, is powerful enough to cleanly support all of the message formats that we're aware of.

## Why bother doing this? Why not just pick a standard format?

This is an excellent idea! Unfortunately, it's too late, because multiple important models have already been trained with very different chat formats.

However, we can still mitigate this problem a bit. We think the closest thing to a 'standard' for formatting is the [ChatML format](https://github.com/openai/openai-python/blob/main/chatml.md) created by OpenAI. If you're training a new model for chat, and this format is suitable for you, we recommend using it and adding special `<|im_start|>` and `<|im_end|>` tokens to your tokenizer. It has the advantage of being very flexible with roles, as the role is just inserted as a string rather than having specific role tokens. If you'd like to use this one, it's the third of the templates above, and you can set it with this simple one-liner:

```py

tokenizer.chat_template = "{% for message in messages %}{{'<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n'}}{% endfor %}"

```

There's also a second reason not to hardcode a standard format, though, beyond the proliferation of existing formats - we expect that templates will be broadly useful in preprocessing for many types of models, including those that might be doing very different things from standard chat. Hardcoding a standard format limits the ability of model developers to use this feature to do things we haven't even thought of yet, whereas templating gives users and developers maximum freedom. It's even possible to encode checks and logic in templates, which is a feature we don't use extensively in any of the default templates, but which we expect to have enormous power in the hands of adventurous users. We strongly believe that the open-source ecosystem should enable you to do what you want, not dictate to you what you're permitted to do.
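As a rough sketch of what "checks and logic" in a template could look like, the example below rejects unknown roles while rendering. It uses the plain `jinja2` package, and the `raise_exception` helper is a hypothetical function registered by hand for this illustration, not something the template language provides on its own.

```python
from jinja2 import Environment


def raise_exception(message):
    """Hypothetical helper exposed to the template so it can abort rendering."""
    raise ValueError(message)


env = Environment()
env.globals["raise_exception"] = raise_exception

checked_template = env.from_string(
    "{% for message in messages %}"
    "{% if message['role'] not in ['system', 'user', 'assistant'] %}"
    "{{ raise_exception('Unknown role: ' + message['role']) }}"
    "{% endif %}"
    "{{ '<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n' }}"
    "{% endfor %}"
)

# A valid conversation renders normally.
ok = checked_template.render(messages=[{"role": "user", "content": "Hey there!"}])

# An unexpected role fails loudly instead of silently producing a bad prompt.
try:
    checked_template.render(messages=[{"role": "narrator", "content": "Meanwhile..."}])
    error = None
except ValueError as exc:
    error = str(exc)

print(ok)
print(error)
```

A check like this turns one class of silent formatting mistakes into an explicit error at render time.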

## How do templates work?

Chat templates are part of the **tokenizer**, because they fulfill the same role as tokenizers do: They store information about how data is preprocessed, to ensure that you feed data to the model in the same format that it saw during training. We have designed it to be very easy to add template information to an existing tokenizer and save it or upload it to the Hub.

Before chat templates, chat formatting information was stored at the **class level** - this meant that, for example, all LLaMA checkpoints would get the same chat formatting, using code that was hardcoded in `transformers` for the LLaMA model class. For backward compatibility, model classes that had custom chat format methods have been given **default chat templates** instead.

Default chat templates are also set at the class level, and tell classes like `ConversationalPipeline` how to format inputs when the model does not have a chat template. We're doing this **purely for backwards compatibility** - we highly recommend that you explicitly set a chat template on any chat model, even when the default chat template is appropriate. This ensures that any future changes or deprecations in the default chat template don't break your model. Although we will be keeping default chat templates for the foreseeable future, we hope to transition all models to explicit chat templates over time, at which point the default chat templates may be removed entirely.

For information about how to set and apply chat templates, please see the [technical documentation](https://huggingface.co/docs/transformers/main/en/chat_templating).

## How do I get started with templates?

Easy! If a tokenizer has the `chat_template` attribute set, it's ready to go. You can use that model and tokenizer in `ConversationalPipeline`, or you can call `tokenizer.apply_chat_template()` to format chats for inference or training. Please see our [developer guide](https://huggingface.co/docs/transformers/main/en/chat_templating) or the [apply_chat_template documentation](https://huggingface.co/docs/transformers/main/en/internal/tokenization_utils#transformers.PreTrainedTokenizerBase.apply_chat_template) for more!

If a tokenizer doesn't have a `chat_template` attribute, it might still work, but it will use the default chat template set for that model class. This is fragile, as we mentioned above, and it's also a source of silent bugs when the class template doesn't match what the model was actually trained with. If you want to use a checkpoint that doesn't have a `chat_template`, we recommend checking docs like the model card to verify what the right format is, and then adding a correct `chat_template` for that format. We recommend doing this even if the default chat template is correct - it future-proofs the model, and also makes it clear that the template is present and suitable.

You can add a `chat_template` even for checkpoints that you're not the owner of, by opening a [pull request](https://huggingface.co/docs/hub/repositories-pull-requests-discussions). The only change you need to make is to set the `tokenizer.chat_template` attribute to a Jinja template string. Once that's done, push your changes and you're ready to go!

If you'd like to use a checkpoint for chat but you can't find any documentation on the chat format it used, you should probably open an issue on the checkpoint or ping the owner! Once you figure out the format the model is using, please open a pull request to add a suitable `chat_template`. Other users will really appreciate it!

## Conclusion: Template philosophy

We think templates are a very exciting change. In addition to resolving a huge source of silent, performance-killing bugs, we think they open up completely new approaches and data modalities. Perhaps most importantly, they also represent a philosophical shift: They take a big function out of the core `transformers` codebase and move it into individual model repos, where users have the freedom to do weird and wild and wonderful things. We're excited to see what uses you find for them!

| huggingface/blog/blob/main/chat-templates.md |

---

language:

- en

license:

- bsd-3-clause

annotations_creators:

- crowdsourced

- expert-generated

language_creators:

- found

multilinguality:

- monolingual

size_categories:

- n<1K

task_categories:

- image-segmentation

task_ids:

- semantic-segmentation

pretty_name: Sample Segmentation

---

# Dataset Card for Sample Segmentation

This is a sample dataset card for a semantic segmentation dataset. | huggingface/huggingface_hub/blob/main/tests/fixtures/cards/sample_datasetcard_simple.md |

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Nyströmformer

## Overview

The Nyströmformer model was proposed in [*Nyströmformer: A Nyström-Based Algorithm for Approximating Self-Attention*](https://arxiv.org/abs/2102.03902) by Yunyang Xiong, Zhanpeng Zeng, Rudrasis Chakraborty, Mingxing Tan, Glenn

Fung, Yin Li, and Vikas Singh.

The abstract from the paper is the following:

*Transformers have emerged as a powerful tool for a broad range of natural language processing tasks. A key component

that drives the impressive performance of Transformers is the self-attention mechanism that encodes the influence or

dependence of other tokens on each specific token. While beneficial, the quadratic complexity of self-attention on the

input sequence length has limited its application to longer sequences -- a topic being actively studied in the

community. To address this limitation, we propose Nyströmformer -- a model that exhibits favorable scalability as a

function of sequence length. Our idea is based on adapting the Nyström method to approximate standard self-attention

with O(n) complexity. The scalability of Nyströmformer enables application to longer sequences with thousands of

tokens. We perform evaluations on multiple downstream tasks on the GLUE benchmark and IMDB reviews with standard

sequence length, and find that our Nyströmformer performs comparably, or in a few cases, even slightly better, than

standard self-attention. On longer sequence tasks in the Long Range Arena (LRA) benchmark, Nyströmformer performs

favorably relative to other efficient self-attention methods. Our code is available at this https URL.*

This model was contributed by [novice03](https://huggingface.co/novice03). The original code can be found [here](https://github.com/mlpen/Nystromformer).
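The Nyström approximation described in the abstract can be sketched in a few lines of NumPy. This is a simplified, single-head illustration under stated assumptions, not the model's implementation: landmarks are taken as segment means of the queries and keys, and an exact pseudo-inverse (`np.linalg.pinv`) stands in for any cheaper approximation. The function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def exact_attention(q, k, v):
    """Standard softmax attention: builds the full (n, n) matrix."""
    scale = 1.0 / np.sqrt(q.shape[-1])
    return softmax(q @ k.T * scale) @ v


def nystrom_attention(q, k, v, num_landmarks):
    """Nystrom-style approximation using segment-mean landmarks."""
    n, d = q.shape
    assert n % num_landmarks == 0, "sequence length must divide evenly"
    scale = 1.0 / np.sqrt(d)
    # Landmarks: average contiguous segments of queries and keys.
    q_land = q.reshape(num_landmarks, n // num_landmarks, d).mean(axis=1)
    k_land = k.reshape(num_landmarks, n // num_landmarks, d).mean(axis=1)
    kernel_1 = softmax(q @ k_land.T * scale)       # (n, m)
    kernel_2 = softmax(q_land @ k_land.T * scale)  # (m, m)
    kernel_3 = softmax(q_land @ k.T * scale)       # (m, n)
    # No (n, n) matrix is ever formed; cost grows linearly in n for fixed m.
    return kernel_1 @ np.linalg.pinv(kernel_2) @ (kernel_3 @ v)


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((8, 4)) for _ in range(3))

approx = nystrom_attention(q, k, v, num_landmarks=4)
full = nystrom_attention(q, k, v, num_landmarks=8)
exact = exact_attention(q, k, v)
```

With as many landmarks as tokens, the approximation collapses to exact attention, which is a handy sanity check; fewer landmarks trade accuracy for cost.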

## Resources

- [Text classification task guide](../tasks/sequence_classification)

- [Token classification task guide](../tasks/token_classification)

- [Question answering task guide](../tasks/question_answering)

- [Masked language modeling task guide](../tasks/masked_language_modeling)

- [Multiple choice task guide](../tasks/multiple_choice)

## NystromformerConfig

[[autodoc]] NystromformerConfig

## NystromformerModel

[[autodoc]] NystromformerModel

- forward

## NystromformerForMaskedLM

[[autodoc]] NystromformerForMaskedLM

- forward

## NystromformerForSequenceClassification

[[autodoc]] NystromformerForSequenceClassification

- forward

## NystromformerForMultipleChoice

[[autodoc]] NystromformerForMultipleChoice

- forward

## NystromformerForTokenClassification

[[autodoc]] NystromformerForTokenClassification

- forward

## NystromformerForQuestionAnswering

[[autodoc]] NystromformerForQuestionAnswering

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/nystromformer.md |

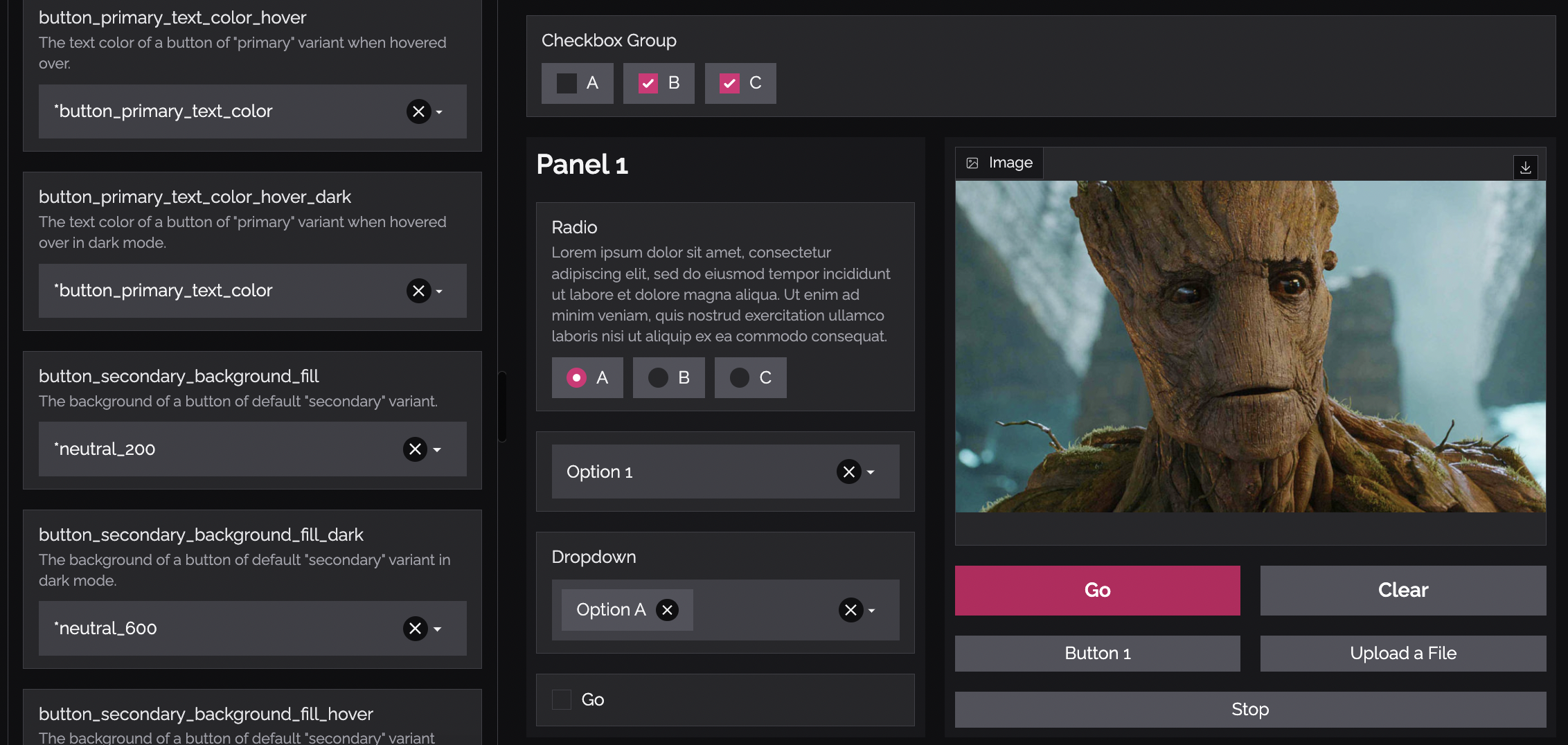

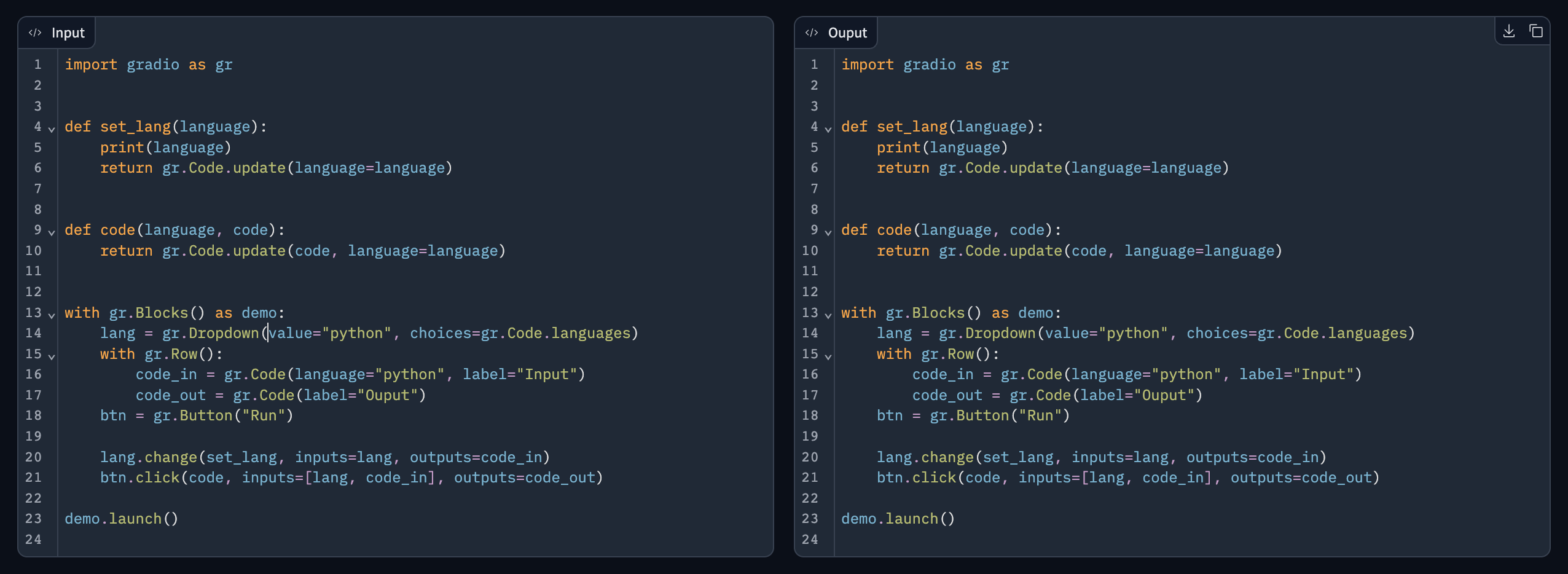

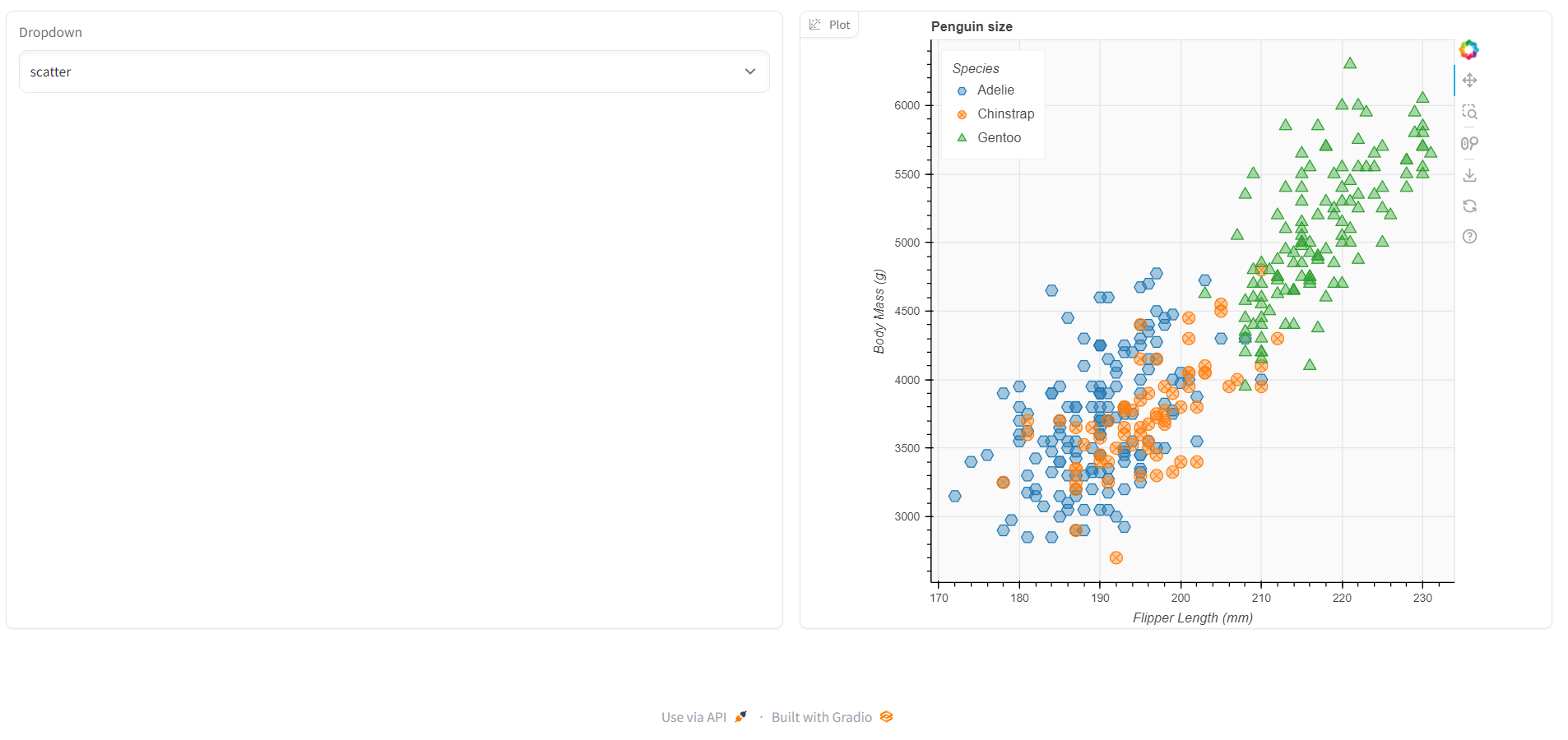

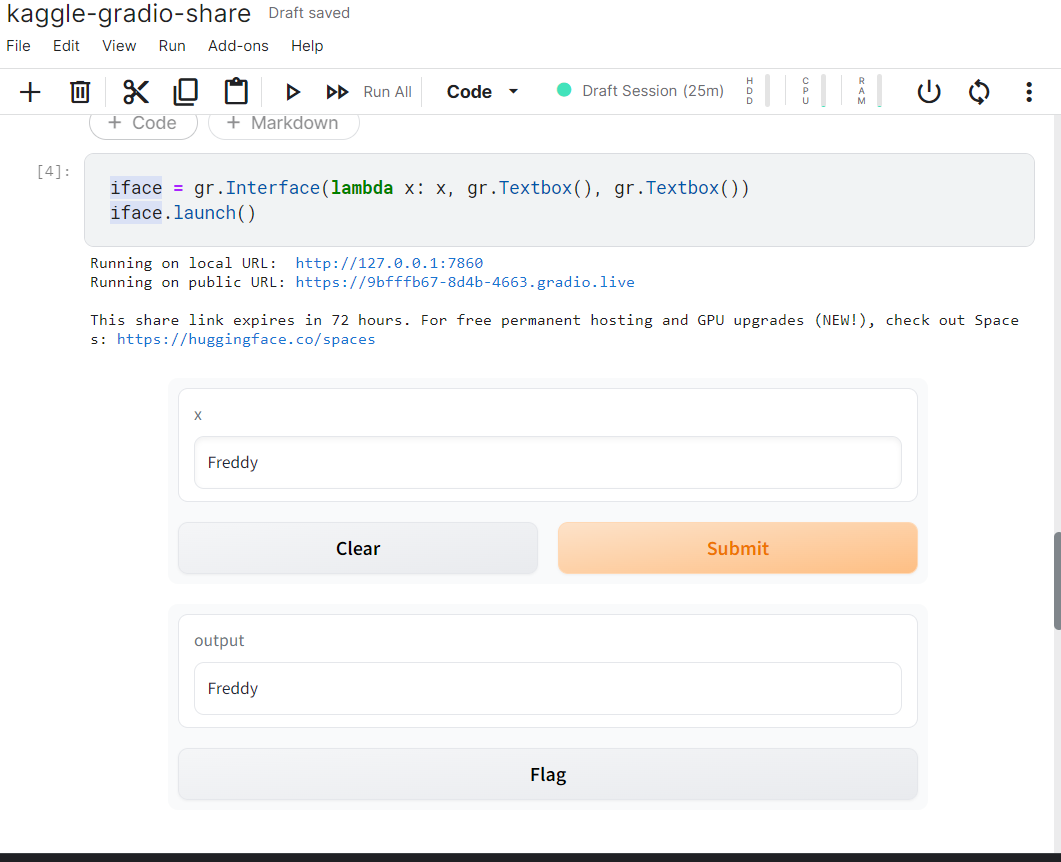

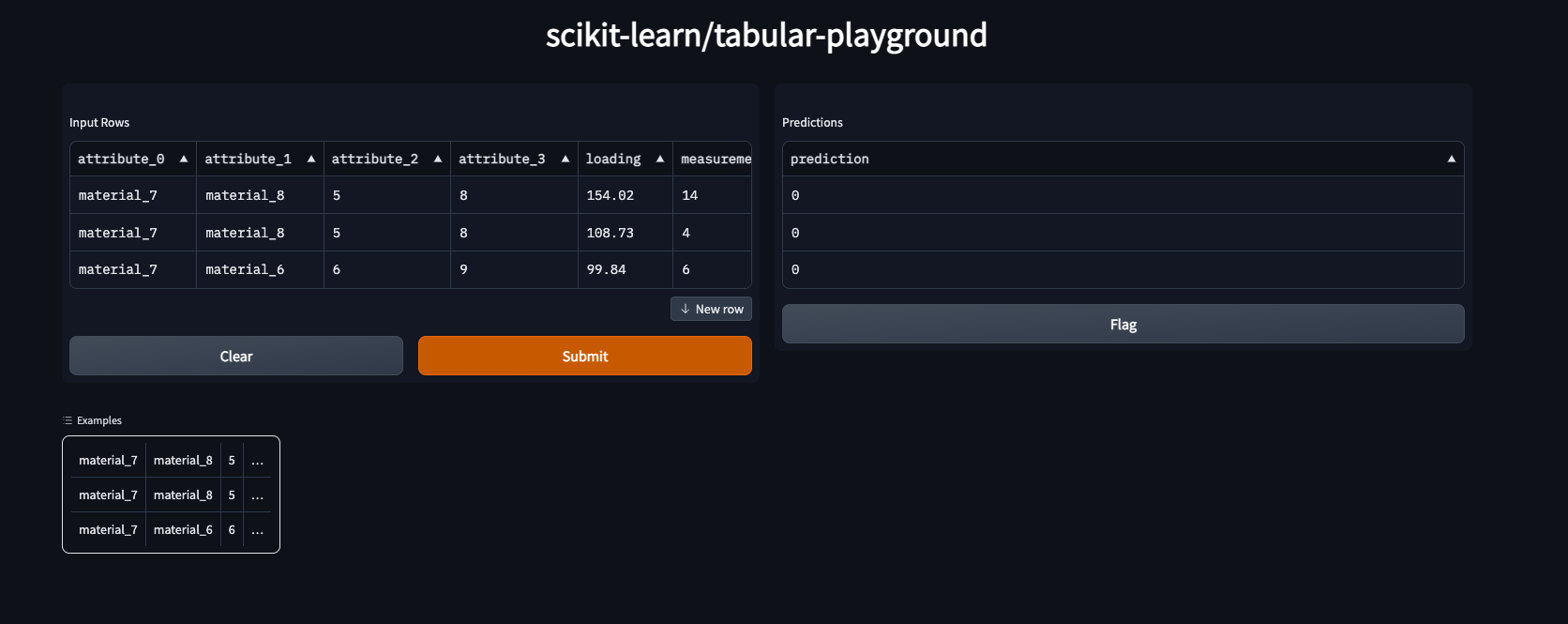

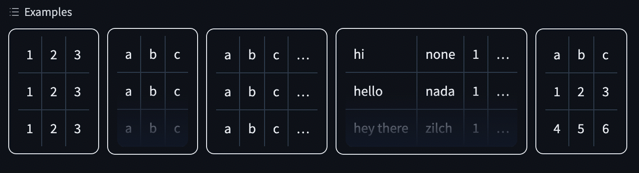

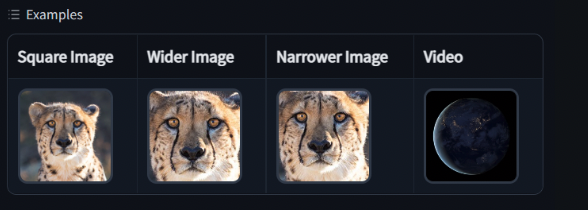

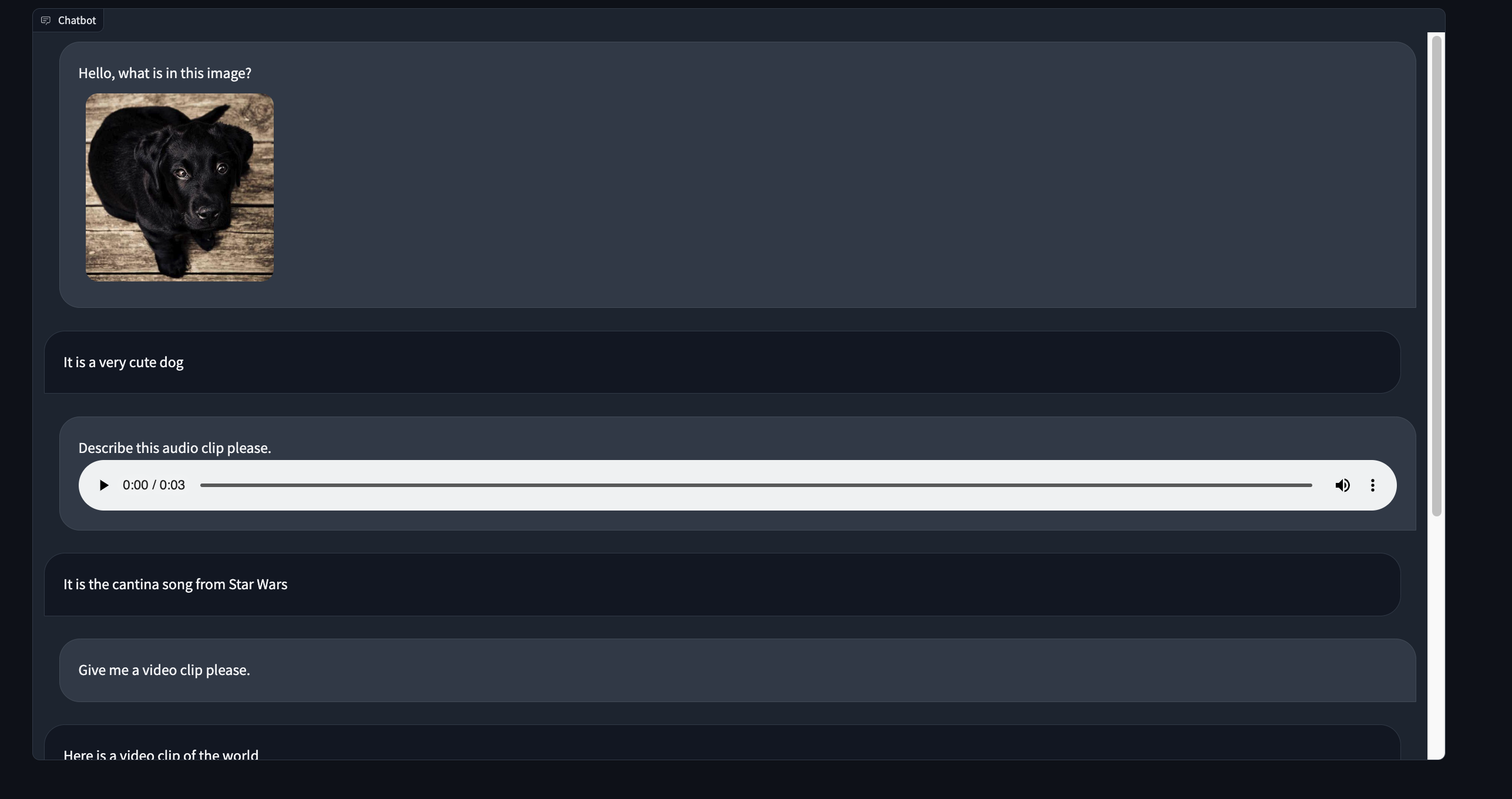

# Gradio Demo: kitchen_sink

```

!pip install -q gradio

```

```

# Downloading files from the demo repo

import os

os.mkdir('files')

!wget -q -O files/cantina.wav https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/cantina.wav

!wget -q -O files/cheetah1.jpg https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/cheetah1.jpg

!wget -q -O files/lion.jpg https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/lion.jpg

!wget -q -O files/logo.png https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/logo.png

!wget -q -O files/time.csv https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/time.csv

!wget -q -O files/titanic.csv https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/titanic.csv

!wget -q -O files/tower.jpg https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/tower.jpg

!wget -q -O files/world.mp4 https://github.com/gradio-app/gradio/raw/main/demo/kitchen_sink/files/world.mp4

```

```

import os

import json

import numpy as np

import gradio as gr

CHOICES = ["foo", "bar", "baz"]

JSONOBJ = """{"items":{"item":[{"id": "0001","type": null,"is_good": false,"ppu": 0.55,"batters":{"batter":[{ "id": "1001", "type": "Regular" },{ "id": "1002", "type": "Chocolate" },{ "id": "1003", "type": "Blueberry" },{ "id": "1004", "type": "Devil's Food" }]},"topping":[{ "id": "5001", "type": "None" },{ "id": "5002", "type": "Glazed" },{ "id": "5005", "type": "Sugar" },{ "id": "5007", "type": "Powdered Sugar" },{ "id": "5006", "type": "Chocolate with Sprinkles" },{ "id": "5003", "type": "Chocolate" },{ "id": "5004", "type": "Maple" }]}]}}"""

def fn(

text1,

text2,

num,

slider1,

slider2,

single_checkbox,

checkboxes,

radio,

dropdown,

multi_dropdown,

im1,

# im2,

# im3,

im4,

video,

audio1,

audio2,

file,

df1,

):

    return (

(text1 if single_checkbox else text2)

+ ", selected:"

+ ", ".join(checkboxes), # Text

{

"positive": num / (num + slider1 + slider2),

"negative": slider1 / (num + slider1 + slider2),

"neutral": slider2 / (num + slider1 + slider2),

}, # Label

(audio1[0], np.flipud(audio1[1]))

if audio1 is not None

else os.path.join(os.path.abspath(''), "files/cantina.wav"), # Audio

np.flipud(im1)

if im1 is not None

else os.path.join(os.path.abspath(''), "files/cheetah1.jpg"), # Image

video

if video is not None

else os.path.join(os.path.abspath(''), "files/world.mp4"), # Video

[

("The", "art"),

("quick brown", "adj"),

("fox", "nn"),

("jumped", "vrb"),

("testing testing testing", None),

("over", "prp"),

("the", "art"),

("testing", None),

("lazy", "adj"),

("dogs", "nn"),

(".", "punc"),

]

+ [(f"test {x}", f"test {x}") for x in range(10)], # HighlightedText

# [("The testing testing testing", None), ("quick brown", 0.2), ("fox", 1), ("jumped", -1), ("testing testing testing", 0), ("over", 0), ("the", 0), ("testing", 0), ("lazy", 1), ("dogs", 0), (".", 1)] + [(f"test {x}", x/10) for x in range(-10, 10)], # HighlightedText

[

("The testing testing testing", None),

("over", 0.6),

("the", 0.2),

("testing", None),

("lazy", -0.1),

("dogs", 0.4),

(".", 0),

]

+ [(f"test", x / 10) for x in range(-10, 10)], # HighlightedText

json.loads(JSONOBJ), # JSON

"<button style='background-color: red'>Click Me: "

+ radio

+ "</button>", # HTML

os.path.join(os.path.abspath(''), "files/titanic.csv"),

df1, # Dataframe

np.random.randint(0, 10, (4, 4)), # Dataframe

)

demo = gr.Interface(

fn,

inputs=[

gr.Textbox(value="Lorem ipsum", label="Textbox"),

gr.Textbox(lines=3, placeholder="Type here..", label="Textbox 2"),

gr.Number(label="Number", value=42),

gr.Slider(10, 20, value=15, label="Slider: 10 - 20"),

gr.Slider(maximum=20, step=0.04, label="Slider: step @ 0.04"),

gr.Checkbox(label="Checkbox"),

gr.CheckboxGroup(label="CheckboxGroup", choices=CHOICES, value=CHOICES[0:2]),

gr.Radio(label="Radio", choices=CHOICES, value=CHOICES[2]),

gr.Dropdown(label="Dropdown", choices=CHOICES),

gr.Dropdown(

label="Multiselect Dropdown (Max choice: 2)",

choices=CHOICES,

multiselect=True,

max_choices=2,

),

gr.Image(label="Image"),

# gr.Image(label="Image w/ Cropper", tool="select"),

# gr.Image(label="Sketchpad", source="canvas"),

gr.Image(label="Webcam", sources=["webcam"]),

gr.Video(label="Video"),

gr.Audio(label="Audio"),

gr.Audio(label="Microphone", sources=["microphone"]),

gr.File(label="File"),

gr.Dataframe(label="Dataframe", headers=["Name", "Age", "Gender"]),

],

outputs=[

gr.Textbox(label="Textbox"),

gr.Label(label="Label"),

gr.Audio(label="Audio"),

gr.Image(label="Image"),

gr.Video(label="Video"),

gr.HighlightedText(

label="HighlightedText", color_map={"punc": "pink", "test 0": "blue"}

),

gr.HighlightedText(label="HighlightedText", show_legend=True),

gr.JSON(label="JSON"),

gr.HTML(label="HTML"),

gr.File(label="File"),

gr.Dataframe(label="Dataframe"),

gr.Dataframe(label="Numpy"),

],

examples=[

[

"the quick brown fox",

"jumps over the lazy dog",

10,

12,

4,

True,

["foo", "baz"],

"baz",

"bar",

["foo", "bar"],

os.path.join(os.path.abspath(''), "files/cheetah1.jpg"),

# os.path.join(os.path.abspath(''), "files/cheetah1.jpg"),

# os.path.join(os.path.abspath(''), "files/cheetah1.jpg"),

os.path.join(os.path.abspath(''), "files/cheetah1.jpg"),

os.path.join(os.path.abspath(''), "files/world.mp4"),

os.path.join(os.path.abspath(''), "files/cantina.wav"),

os.path.join(os.path.abspath(''), "files/cantina.wav"),

os.path.join(os.path.abspath(''), "files/titanic.csv"),

[[1, 2, 3, 4], [4, 5, 6, 7], [8, 9, 1, 2], [3, 4, 5, 6]],

]

]

* 3,

title="Kitchen Sink",

description="Try out all the components!",

article="Learn more about [Gradio](http://gradio.app)",

cache_examples=True,

)

if __name__ == "__main__":

    demo.launch()

```

| gradio-app/gradio/blob/main/demo/kitchen_sink/run.ipynb |

`@gradio/utils`

General functions for handling events in Gradio Svelte components

```javascript

export async function uploadToHuggingFace(

data: string,

type: "base64" | "url"

): Promise<string>

export function copy(node: HTMLDivElement): ActionReturn

``` | gradio-app/gradio/blob/main/js/utils/README.md |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# SAM

## Overview

SAM (Segment Anything Model) was proposed in [Segment Anything](https://arxiv.org/pdf/2304.02643v1.pdf) by Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.

The model can be used to predict segmentation masks of any object of interest given an input image.

The abstract from the paper is the following:

*We introduce the Segment Anything (SA) project: a new task, model, and dataset for image segmentation. Using our efficient model in a data collection loop, we built the largest segmentation dataset to date (by far), with over 1 billion masks on 11M licensed and privacy respecting images. The model is designed and trained to be promptable, so it can transfer zero-shot to new image distributions and tasks. We evaluate its capabilities on numerous tasks and find that its zero-shot performance is impressive -- often competitive with or even superior to prior fully supervised results. We are releasing the Segment Anything Model (SAM) and corresponding dataset (SA-1B) of 1B masks and 11M images at [https://segment-anything.com](https://segment-anything.com) to foster research into foundation models for computer vision.*

Tips:

- The model predicts binary masks that indicate the presence or absence of the object of interest in a given image.

- The model predicts much better results if input 2D points and/or input bounding boxes are provided.

- You can prompt multiple points for the same image and predict a single mask.

- Fine-tuning the model is not supported yet.

- According to the paper, textual input should also be supported. However, at the time of writing, this does not seem to be supported according to [the official repository](https://github.com/facebookresearch/segment-anything/issues/4#issuecomment-1497626844).

This model was contributed by [ybelkada](https://huggingface.co/ybelkada) and [ArthurZ](https://huggingface.co/ArthurZ).

The original code can be found [here](https://github.com/facebookresearch/segment-anything).

Below is an example on how to run mask generation given an image and a 2D point:

```python

import torch

from PIL import Image

import requests

from transformers import SamModel, SamProcessor

device = "cuda" if torch.cuda.is_available() else "cpu"

model = SamModel.from_pretrained("facebook/sam-vit-huge").to(device)

processor = SamProcessor.from_pretrained("facebook/sam-vit-huge")

img_url = "https://huggingface.co/ybelkada/segment-anything/resolve/main/assets/car.png"

raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

input_points = [[[450, 600]]] # 2D location of a window in the image

inputs = processor(raw_image, input_points=input_points, return_tensors="pt").to(device)

with torch.no_grad():

    outputs = model(**inputs)

masks = processor.image_processor.post_process_masks(

outputs.pred_masks.cpu(), inputs["original_sizes"].cpu(), inputs["reshaped_input_sizes"].cpu()

)

scores = outputs.iou_scores

```
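Once you have candidate masks and their predicted IoU scores, a common next step is to keep the highest-scoring mask. The sketch below uses synthetic NumPy arrays as stand-ins, so every shape here is an assumption: it supposes three candidate masks for one image and one point prompt. With the real outputs above, the corresponding slices would likely be `masks[0][0]` and `scores[0, 0]`, but check the shapes you actually get.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumed shapes): 3 candidate boolean masks of size 4x6,
# and one predicted IoU score per candidate.
candidate_masks = rng.random((3, 4, 6)) > 0.5
candidate_scores = np.array([0.71, 0.93, 0.55])

# Keep the candidate the model is most confident in.
best_index = int(candidate_scores.argmax())
best_mask = candidate_masks[best_index]

# Fraction of pixels covered by the selected mask.
coverage = float(best_mask.mean())
print(best_index, best_mask.shape, coverage)
```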

Resources:

- [Demo notebook](https://github.com/huggingface/notebooks/blob/main/examples/segment_anything.ipynb) for using the model.

- [Demo notebook](https://github.com/huggingface/notebooks/blob/main/examples/automatic_mask_generation.ipynb) for using the automatic mask generation pipeline.

- [Demo notebook](https://github.com/NielsRogge/Transformers-Tutorials/blob/master/SAM/Run_inference_with_MedSAM_using_HuggingFace_Transformers.ipynb) for inference with MedSAM, a fine-tuned version of SAM on the medical domain.

- [Demo notebook](https://github.com/NielsRogge/Transformers-Tutorials/blob/master/SAM/Fine_tune_SAM_(segment_anything)_on_a_custom_dataset.ipynb) for fine-tuning the model on custom data.

## SamConfig

[[autodoc]] SamConfig

## SamVisionConfig

[[autodoc]] SamVisionConfig

## SamMaskDecoderConfig

[[autodoc]] SamMaskDecoderConfig

## SamPromptEncoderConfig

[[autodoc]] SamPromptEncoderConfig

## SamProcessor

[[autodoc]] SamProcessor

## SamImageProcessor

[[autodoc]] SamImageProcessor

## SamModel

[[autodoc]] SamModel

- forward

## TFSamModel

[[autodoc]] TFSamModel

- call

| huggingface/transformers/blob/main/docs/source/en/model_doc/sam.md |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Contribute a community pipeline

<Tip>

💡 Take a look at GitHub Issue [#841](https://github.com/huggingface/diffusers/issues/841) for more context about why we're adding community pipelines to help everyone easily share their work without being slowed down.

</Tip>

Community pipelines allow you to add any additional features you'd like on top of the [`DiffusionPipeline`]. The main benefit of building on top of the `DiffusionPipeline` is anyone can load and use your pipeline by only adding one more argument, making it super easy for the community to access.

This guide will show you how to create a community pipeline and explain how they work. To keep things simple, you'll create a "one-step" pipeline where the `UNet` does a single forward pass and calls the scheduler once.

## Initialize the pipeline

You should start by creating a `one_step_unet.py` file for your community pipeline. In this file, create a pipeline class that inherits from the [`DiffusionPipeline`] to be able to load model weights and the scheduler configuration from the Hub. The one-step pipeline needs a `UNet` and a scheduler, so you'll need to add these as arguments to the `__init__` function:

```python

from diffusers import DiffusionPipeline
import torch

class UnetSchedulerOneForwardPipeline(DiffusionPipeline):
    def __init__(self, unet, scheduler):
        super().__init__()

```

To ensure your pipeline and its components (`unet` and `scheduler`) can be saved with [`~DiffusionPipeline.save_pretrained`], add them to the `register_modules` function:

```diff
  from diffusers import DiffusionPipeline
  import torch

  class UnetSchedulerOneForwardPipeline(DiffusionPipeline):
      def __init__(self, unet, scheduler):
          super().__init__()

+         self.register_modules(unet=unet, scheduler=scheduler)

```

Cool, the `__init__` step is done and you can move to the forward pass now! 🔥

## Define the forward pass

In the forward pass, which we recommend defining as `__call__`, you have complete creative freedom to add whatever feature you'd like. For our amazing one-step pipeline, create a random image and only call the `unet` and `scheduler` once by setting `timestep=1`:

```diff
  from diffusers import DiffusionPipeline
  import torch

  class UnetSchedulerOneForwardPipeline(DiffusionPipeline):
      def __init__(self, unet, scheduler):
          super().__init__()

          self.register_modules(unet=unet, scheduler=scheduler)

+     def __call__(self):
+         image = torch.randn(
+             (1, self.unet.config.in_channels, self.unet.config.sample_size, self.unet.config.sample_size),
+         )
+         timestep = 1

+         model_output = self.unet(image, timestep).sample
+         scheduler_output = self.scheduler.step(model_output, timestep, image).prev_sample

+         return scheduler_output

```

That's it! 🚀 You can now run this pipeline by passing a `unet` and `scheduler` to it:

```python

from diffusers import DDPMScheduler, UNet2DModel

scheduler = DDPMScheduler()

unet = UNet2DModel()

pipeline = UnetSchedulerOneForwardPipeline(unet=unet, scheduler=scheduler)

output = pipeline()

```

But what's even better is you can load pre-existing weights into the pipeline if the pipeline structure is identical. For example, you can load the [`google/ddpm-cifar10-32`](https://huggingface.co/google/ddpm-cifar10-32) weights into the one-step pipeline:

```python

pipeline = UnetSchedulerOneForwardPipeline.from_pretrained("google/ddpm-cifar10-32", use_safetensors=True)

output = pipeline()

```

## Share your pipeline

Open a Pull Request on the 🧨 Diffusers [repository](https://github.com/huggingface/diffusers) to add your awesome pipeline in `one_step_unet.py` to the [examples/community](https://github.com/huggingface/diffusers/tree/main/examples/community) subfolder.

Once it is merged, anyone with `diffusers >= 0.4.0` installed can use this pipeline magically 🪄 by specifying it in the `custom_pipeline` argument:

```python
from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained(
    "google/ddpm-cifar10-32", custom_pipeline="one_step_unet", use_safetensors=True
)
pipe()
```

Another way to share your community pipeline is to upload the `one_step_unet.py` file directly to your preferred [model repository](https://huggingface.co/docs/hub/models-uploading) on the Hub. Instead of specifying the `one_step_unet.py` file, pass the model repository id to the `custom_pipeline` argument:

```python
from diffusers import DiffusionPipeline

pipeline = DiffusionPipeline.from_pretrained(
    "google/ddpm-cifar10-32", custom_pipeline="stevhliu/one_step_unet", use_safetensors=True
)
```

The following table compares the two sharing workflows to help you decide which one suits you best:

| | GitHub community pipeline | HF Hub community pipeline |
|----------------|------------------------------------------------------------------------------------------------------------------|-------------------------------------------------------------------------------------------|
| usage | same | same |
| review process | open a Pull Request on GitHub and undergo a review process from the Diffusers team before merging; may be slower | upload directly to a Hub repository without any review; this is the fastest workflow |
| visibility | included in the official Diffusers repository and documentation | included on your HF Hub profile and relies on your own usage/promotion to gain visibility |

<Tip>

💡 You can use whatever package you want in your community pipeline file - as long as the user has it installed, everything will work fine. Make sure you have one and only one pipeline class that inherits from `DiffusionPipeline` because this is automatically detected.

</Tip>

## How do community pipelines work?

A community pipeline is a class that inherits from [`DiffusionPipeline`] which means:

- It can be loaded with the [`custom_pipeline`] argument.

- The model weights and scheduler configuration are loaded from [`pretrained_model_name_or_path`].

- The code that implements a feature in the community pipeline is defined in a `pipeline.py` file.

Sometimes you can't load all the pipeline component weights from an official repository. In this case, the other components should be passed directly to the pipeline:

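```python
import torch
from diffusers import DiffusionPipeline, PNDMScheduler
from transformers import CLIPImageProcessor, CLIPModel

model_id = "CompVis/stable-diffusion-v1-4"
clip_model_id = "laion/CLIP-ViT-B-32-laion2B-s34B-b79K"

# Components that are not part of the base checkpoint are loaded separately.
feature_extractor = CLIPImageProcessor.from_pretrained(clip_model_id)
clip_model = CLIPModel.from_pretrained(clip_model_id, torch_dtype=torch.float16)
# `scheduler` was left undefined in the original snippet; reusing the checkpoint's
# own scheduler config is one reasonable choice (an assumption, not a requirement).
scheduler = PNDMScheduler.from_pretrained(model_id, subfolder="scheduler")

pipeline = DiffusionPipeline.from_pretrained(
    model_id,
    custom_pipeline="clip_guided_stable_diffusion",
    clip_model=clip_model,
    feature_extractor=feature_extractor,
    scheduler=scheduler,
    torch_dtype=torch.float16,
    use_safetensors=True,
)
```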

The magic behind community pipelines is contained in the following code. It allows the community pipeline to be loaded from GitHub or the Hub, and it'll be available to all 🧨 Diffusers packages.

```python
# 2. Load the pipeline class, if using custom module then load it from the Hub
# if we load from explicit class, let's use it
if custom_pipeline is not None:
    pipeline_class = get_class_from_dynamic_module(
        custom_pipeline, module_file=CUSTOM_PIPELINE_FILE_NAME, cache_dir=custom_pipeline
    )
elif cls != DiffusionPipeline:
    pipeline_class = cls
else:
    diffusers_module = importlib.import_module(cls.__module__.split(".")[0])
    pipeline_class = getattr(diffusers_module, config_dict["_class_name"])
```
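The order of the three branches above can be illustrated with a small self-contained sketch. It does not use the real Diffusers internals: the classes, the two lookup dictionaries, and `resolve_pipeline_class` are hypothetical stand-ins introduced only to show which class wins in each situation.

```python
# Minimal sketch of the class-resolution order. Every name here is a stand-in
# for illustration and is not part of the Diffusers API.


class BasePipeline:
    """Plays the role of DiffusionPipeline."""


class DDPMLikePipeline(BasePipeline):
    """Plays the role of a built-in pipeline named in the saved config."""


class OneStepPipeline(BasePipeline):
    """Plays the role of a community pipeline fetched from GitHub or the Hub."""


# Hypothetical lookup tables standing in for dynamic module loading
# and for attribute lookup on the library module.
CUSTOM_PIPELINES = {"one_step_unet": OneStepPipeline}
BUILTIN_PIPELINES = {"DDPMLikePipeline": DDPMLikePipeline}


def resolve_pipeline_class(cls, config_dict, custom_pipeline=None):
    if custom_pipeline is not None:
        # 1. A custom pipeline was requested: it takes priority.
        return CUSTOM_PIPELINES[custom_pipeline]
    if cls is not BasePipeline:
        # 2. The caller used an explicit subclass, so use it directly.
        return cls
    # 3. Fall back to the class name recorded in the saved configuration.
    return BUILTIN_PIPELINES[config_dict["_class_name"]]


config = {"_class_name": "DDPMLikePipeline"}
custom = resolve_pipeline_class(BasePipeline, config, custom_pipeline="one_step_unet")
explicit = resolve_pipeline_class(OneStepPipeline, config)
fallback = resolve_pipeline_class(BasePipeline, config)
print(custom.__name__, explicit.__name__, fallback.__name__)
```

In this sketch, the `custom_pipeline` argument overrides the class stored in the configuration, which is why a community pipeline can reuse the weights of an existing checkpoint.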

| huggingface/diffusers/blob/main/docs/source/en/using-diffusers/contribute_pipeline.md |

---

title: "Make your llama generation time fly with AWS Inferentia2"

thumbnail: /blog/assets/inferentia-llama2/thumbnail.png

authors:

- user: dacorvo

---

# Make your llama generation time fly with AWS Inferentia2

In a [previous post on the Hugging Face blog](https://huggingface.co/blog/accelerate-transformers-with-inferentia2), we introduced [AWS Inferentia2](https://aws.amazon.com/ec2/instance-types/inf2/), the second-generation AWS Inferentia accelerator, and explained how you could use [optimum-neuron](https://huggingface.co/docs/optimum-neuron/index) to quickly deploy Hugging Face models for standard text and vision tasks on AWS Inferentia2 instances.

In a further step of integration with the [AWS Neuron SDK](https://github.com/aws-neuron/aws-neuron-sdk), it is now possible to use 🤗 [optimum-neuron](https://huggingface.co/docs/optimum-neuron/index) to deploy LLMs for text generation on AWS Inferentia2.

And what better model could we choose for that demonstration than [Llama 2](https://huggingface.co/meta-llama/Llama-2-13b-hf), one of the most popular models on the [Hugging Face hub](https://huggingface.co/models)?

## Set up 🤗 optimum-neuron on your Inferentia2 instance

Our recommendation is to use the [Hugging Face Neuron Deep Learning AMI](https://aws.amazon.com/marketplace/pp/prodview-gr3e6yiscria2) (DLAMI). The DLAMI comes with all required libraries pre-packaged for you, including Optimum Neuron, Neuron Drivers, Transformers, Datasets, and Accelerate.

Alternatively, you can use the [Hugging Face Neuron SDK DLC](https://github.com/aws/deep-learning-containers/releases?q=hf&expanded=true) to deploy on Amazon SageMaker.

*Note: stay tuned for an upcoming post dedicated to SageMaker deployment.*

Finally, these components can also be installed manually on a fresh Inferentia2 instance following the `optimum-neuron` [installation instructions](https://huggingface.co/docs/optimum-neuron/installation).

## Export the Llama 2 model to Neuron

As explained in the [optimum-neuron documentation](https://huggingface.co/docs/optimum-neuron/guides/export_model#why-compile-to-neuron-model), models need to be compiled and exported to a serialized format before running them on Neuron devices.

Fortunately, 🤗 `optimum-neuron` offers a [very simple API](https://huggingface.co/docs/optimum-neuron/guides/models#configuring-the-export-of-a-generative-model) to export standard 🤗 [transformers models](https://huggingface.co/docs/transformers/index) to the Neuron format.

```
>>> from optimum.neuron import NeuronModelForCausalLM

>>> compiler_args = {"num_cores": 24, "auto_cast_type": 'fp16'}
>>> input_shapes = {"batch_size": 1, "sequence_length": 2048}
>>> model = NeuronModelForCausalLM.from_pretrained(
...     "meta-llama/Llama-2-7b-hf",
...     export=True,
...     **compiler_args,
...     **input_shapes)
```

This deserves a little explanation:

- using `compiler_args`, we specify on how many cores we want the model to be deployed (each neuron device has two cores), and with which precision (here `float16`),

- using `input_shapes`, we set the static input and output dimensions of the model. All model compilers require static shapes, and Neuron is no exception. Note that the `sequence_length` not only constrains the length of the input context, but also the length of the KV cache, and thus, the output length.
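To make the `sequence_length` constraint concrete, here is a minimal sketch of the token budget it implies. It assumes, as described above, that the prompt and the generated tokens share one static window; the helper name `max_new_tokens` is introduced only for this illustration and is not an `optimum-neuron` function.

```python
def max_new_tokens(sequence_length: int, prompt_tokens: int) -> int:
    """Tokens that can still be generated inside a static sequence length.

    Assumes the prompt and the generated tokens share the same window.
    """
    if prompt_tokens > sequence_length:
        raise ValueError("the prompt does not fit in the compiled sequence length")
    return sequence_length - prompt_tokens


# With the export above (sequence_length=2048), a 256-token prompt
# leaves room for 2048 - 256 new tokens.
short_prompt_budget = max_new_tokens(2048, 256)
full_prompt_budget = max_new_tokens(2048, 2048)
print(short_prompt_budget, full_prompt_budget)
```

A longer prompt therefore directly reduces how much text the exported model can produce, which is worth keeping in mind when choosing `sequence_length` at export time.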

Depending on your choice of parameters and Inferentia host, this may take from a few minutes to more than an hour.

Fortunately, you will need to do this only once because you can save your model and reload it later.

```

>>> model.save_pretrained("a_local_path_for_compiled_neuron_model")

```

Even better, you can push it to the [Hugging Face hub](https://huggingface.co/models).

```
>>> model.push_to_hub(
...     "a_local_path_for_compiled_neuron_model",
...     repository_id="aws-neuron/Llama-2-7b-hf-neuron-latency")
```

## Generate Text using Llama 2 on AWS Inferentia2

Once your model has been exported, you can generate text using the transformers library, as described in detail in [this previous post](https://huggingface.co/blog/how-to-generate).

```
>>> from optimum.neuron import NeuronModelForCausalLM
>>> from transformers import AutoTokenizer

>>> model = NeuronModelForCausalLM.from_pretrained('aws-neuron/Llama-2-7b-hf-neuron-latency')
>>> tokenizer = AutoTokenizer.from_pretrained("aws-neuron/Llama-2-7b-hf-neuron-latency")
>>> inputs = tokenizer("What is deep-learning ?", return_tensors="pt")
>>> outputs = model.generate(**inputs,
...                          max_new_tokens=128,
...                          do_sample=True,
...                          temperature=0.9,
...                          top_k=50,
...                          top_p=0.9)
>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)
['What is deep-learning ?\nThe term “deep-learning” refers to a type of machine-learning
that aims to model high-level abstractions of the data in the form of a hierarchy of multiple
layers of increasingly complex processing nodes.']
```

*Note: when passing multiple input prompts to a model, the resulting token sequences must be padded to the left with an end-of-stream token. The tokenizers saved with the exported models are configured accordingly.*
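As an illustration of what left padding means for a batch, the following sketch pads plain lists of token ids by hand. The token ids and the pad id `2` are made up for the example, and `pad_left` is a hypothetical helper; in practice the tokenizer saved with the exported model does this for you.

```python
def pad_left(sequences, pad_id):
    """Left-pad lists of token ids to the length of the longest sequence."""
    width = max(len(seq) for seq in sequences)
    input_ids = [[pad_id] * (width - len(seq)) + list(seq) for seq in sequences]
    # The attention mask marks padding with 0 and real tokens with 1.
    attention_mask = [[0] * (width - len(seq)) + [1] * len(seq) for seq in sequences]
    return input_ids, attention_mask


# Made-up token ids; 2 stands in for the end-of-stream token id.
ids, mask = pad_left([[5, 6, 7], [8]], pad_id=2)
print(ids)
print(mask)
```

Padding on the left keeps the last real token of every prompt in the final position, so generation continues from the end of each prompt rather than from padding.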

The following generation strategies are supported:

- greedy search,

- multinomial sampling with top-k and top-p (with temperature).

Most logits pre-processing/filters (such as repetition penalty) are supported.

## All-in-one with optimum-neuron pipelines

For those who like to keep it simple, there is an even simpler way to use an LLM on AWS Inferentia2 using [optimum-neuron pipelines](https://huggingface.co/docs/optimum-neuron/guides/pipelines).

Using them is as simple as:

```
>>> from optimum.neuron import pipeline

>>> p = pipeline('text-generation', 'aws-neuron/Llama-2-7b-hf-neuron-budget')
>>> p("My favorite place on earth is", max_new_tokens=64, do_sample=True, top_k=50)
[{'generated_text': 'My favorite place on earth is the ocean. It is where I feel most
at peace. I love to travel and see new places. I have a'}]
```

## Benchmarks

But how efficient is text generation on Inferentia2? Let's find out!

We have uploaded pre-compiled versions of the Llama 2 7B and 13B models to the Hub, with different configurations:

| Model type | num cores | batch_size | Hugging Face Hub model |
|----------------------------|-----------|------------|-------------------------------------------|
| Llama2 7B - B (budget) | 2 | 1 |[aws-neuron/Llama-2-7b-hf-neuron-budget](https://huggingface.co/aws-neuron/Llama-2-7b-hf-neuron-budget) |
| Llama2 7B - L (latency) | 24 | 1 |[aws-neuron/Llama-2-7b-hf-neuron-latency](https://huggingface.co/aws-neuron/Llama-2-7b-hf-neuron-latency) |
| Llama2 7B - T (throughput) | 24 | 4 |[aws-neuron/Llama-2-7b-hf-neuron-throughput](https://huggingface.co/aws-neuron/Llama-2-7b-hf-neuron-throughput) |
| Llama2 13B - L (latency) | 24 | 1 |[aws-neuron/Llama-2-13b-hf-neuron-latency](https://huggingface.co/aws-neuron/Llama-2-13b-hf-neuron-latency) |
| Llama2 13B - T (throughput)| 24 | 4 |[aws-neuron/Llama-2-13b-hf-neuron-throughput](https://huggingface.co/aws-neuron/Llama-2-13b-hf-neuron-throughput)|

*Note: all models are compiled with a maximum sequence length of 2048.*

The `llama2 7B` "budget" model is meant to be deployed on an `inf2.xlarge` instance, which has only one neuron device and enough `cpu` memory to load the model.

All other models are compiled to use the full extent of cores available on the `inf2.48xlarge` instance.

*Note: please refer to the [inferentia2 product page](https://aws.amazon.com/ec2/instance-types/inf2/) for details on the available instances.*

We created two "latency" oriented configurations for the `llama2 7B` and `llama2 13B` models that can serve only one request at a time, but at full speed.

We also created two "throughput" oriented configurations to serve up to four requests in parallel.

To evaluate the models, we generate tokens up to a total sequence length of 1024, starting from 256 input tokens (i.e. we generate 256, 512 and 768 tokens).

*Note: the "budget" model numbers are reported but not included in the graphs for better readability.*

### Encoding time

The encoding time is the time required to process the input tokens and generate the first output token.

It is a very important metric, as it corresponds to the latency directly perceived by the user when streaming generated tokens.

We test the encoding time for increasing context sizes: 256 input tokens correspond roughly to typical Q/A usage, while 768 is more typical of a Retrieval Augmented Generation (RAG) use case.

The "budget" model (`Llama2 7B-B`) is deployed on an `inf2.xlarge` instance while other models are deployed on an `inf2.48xlarge` instance.

Encoding time is expressed in **seconds**.

| input tokens | Llama2 7B-L | Llama2 7B-T | Llama2 13B-L | Llama2 13B-T | Llama2 7B-B |
|-----------------|----------------|----------------|-----------------|-----------------|----------------|
| 256 | 0.5 | 0.9 | 0.6 | 1.8 | 0.3 |
| 512 | 0.7 | 1.6 | 1.1 | 3.0 | 0.4 |
| 768 | 1.1 | 3.3 | 1.7 | 5.2 | 0.5 |

We can see that all deployed models exhibit excellent response times, even for long contexts.

### End-to-end Latency

The end-to-end latency corresponds to the total time to reach a sequence length of 1024 tokens.

It therefore includes the encoding and generation time.

The "budget" model (`Llama2 7B-B`) is deployed on an `inf2.xlarge` instance while other models are deployed on an `inf2.48xlarge` instance.

Latency is expressed in **seconds**.

| new tokens | Llama2 7B-L | Llama2 7B-T | Llama2 13B-L | Llama2 13B-T | Llama2 7B-B |
|---------------|----------------|----------------|-----------------|-----------------|----------------|
| 256 | 2.3 | 2.7 | 3.5 | 4.1 | 15.9 |
| 512 | 4.4 | 5.3 | 6.9 | 7.8 | 31.7 |
| 768 | 6.2 | 7.7 | 10.2 | 11.1 | 47.3 |

All models deployed on the high-end instance exhibit good latency, even those actually configured to optimize throughput.

The latency of the "budget" model is significantly higher, but still acceptable.
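Since the end-to-end latency includes the encoding time, a rough per-token generation time can be derived from the two tables. The sketch below is only an approximation: it assumes the latency runs start from 256 input tokens, as stated in the benchmark setup, and it uses the rounded table values. The helper name is introduced for this example.

```python
def seconds_per_new_token(end_to_end_s, encoding_s, new_tokens):
    """Approximate time per generated token once the prompt has been encoded."""
    return (end_to_end_s - encoding_s) / new_tokens


# Table values for 256 input tokens and 256 new tokens.
latency_7b_l = seconds_per_new_token(end_to_end_s=2.3, encoding_s=0.5, new_tokens=256)
latency_7b_b = seconds_per_new_token(end_to_end_s=15.9, encoding_s=0.3, new_tokens=256)

print(f"Llama2 7B-L: {latency_7b_l * 1000:.1f} ms per token")
print(f"Llama2 7B-B: {latency_7b_b * 1000:.1f} ms per token")
```

Under these assumptions the latency configuration spends roughly 7 ms per generated token and the budget configuration roughly 61 ms, which shows that most of the gap between the two comes from generation rather than from encoding.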

### Throughput

We adopt the same convention as other benchmarks to evaluate the throughput, by dividing the sum of both input and output tokens by the end-to-end latency.

In other words, we divide `batch_size * sequence_length` by the end-to-end latency to obtain the number of tokens processed per second.

The "budget" model (`Llama2 7B-B`) is deployed on an `inf2.xlarge` instance while other models are deployed on an `inf2.48xlarge` instance.

Throughput is expressed in **tokens/second**.

| new tokens | Llama2 7B-L | Llama2 7B-T | Llama2 13B-L | Llama2 13B-T | Llama2 7B-B |
|---------------|----------------|----------------|-----------------|-----------------|----------------|
| 256 | 227 | 750 | 145 | 504 | 32 |
| 512 | 177 | 579 | 111 | 394 | 24 |
| 768 | 164 | 529 | 101 | 370 | 22 |

Again, the models deployed on the high-end instance have very good throughput, even those optimized for latency.

The "budget" model has a much lower throughput, but it is still acceptable for a streaming use case, considering that an average reader reads around 5 words per second.
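Tokens per second is a token count divided by an elapsed time, so the throughput figures can be approximately recomputed from the latency table. The sketch below assumes 256 input tokens per request, as in the benchmark setup, and uses the rounded latencies, so its results differ slightly from the published numbers. The function name is introduced for this example.

```python
def throughput(batch_size, input_tokens, new_tokens, latency_s):
    """Tokens per second, counting both input and generated tokens."""
    sequence_length = input_tokens + new_tokens
    return batch_size * sequence_length / latency_s


# 256 input tokens + 256 new tokens, latencies taken from the latency table.
tps_7b_l = throughput(batch_size=1, input_tokens=256, new_tokens=256, latency_s=2.3)
tps_7b_t = throughput(batch_size=4, input_tokens=256, new_tokens=256, latency_s=2.7)
tps_7b_b = throughput(batch_size=1, input_tokens=256, new_tokens=256, latency_s=15.9)

print(round(tps_7b_l), round(tps_7b_t), round(tps_7b_b))
```

The recomputed values land within a few percent of the table (227, 750 and 32 tokens/second), and the comparison between the `7B-L` and `7B-T` rows shows how a batch size of 4 multiplies throughput at a small latency cost.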

## Conclusion

We have illustrated how easy it is to deploy `llama2` models from the [Hugging Face hub](https://huggingface.co/models) on [AWS Inferentia2](https://aws.amazon.com/ec2/instance-types/inf2/) using 🤗 [optimum-neuron](https://huggingface.co/docs/optimum-neuron/index).

The deployed models demonstrate very good performance in terms of encoding time, latency and throughput.

Interestingly, the latency of the deployed models is not too sensitive to the batch size, which opens the way for their deployment on inference endpoints serving multiple requests in parallel.

There is still plenty of room for improvement though:

- in the current implementation, the only way to increase the throughput is to increase the batch size, but it is currently limited by the device memory. Alternative options such as pipelining are currently being integrated,

- the static sequence length limits the model's ability to encode long contexts. It would be interesting to see if attention sinks might be a valid option to address this.

| huggingface/blog/blob/main/inferentia-llama2.md |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# DDIM

[Denoising Diffusion Implicit Models](https://huggingface.co/papers/2010.02502) (DDIM) by Jiaming Song, Chenlin Meng and Stefano Ermon.

The abstract from the paper is:

*Denoising diffusion probabilistic models (DDPMs) have achieved high quality image generation without adversarial training, yet they require simulating a Markov chain for many steps to produce a sample. To accelerate sampling, we present denoising diffusion implicit models (DDIMs), a more efficient class of iterative implicit probabilistic models with the same training procedure as DDPMs. In DDPMs, the generative process is defined as the reverse of a Markovian diffusion process. We construct a class of non-Markovian diffusion processes that lead to the same training objective, but whose reverse process can be much faster to sample from. We empirically demonstrate that DDIMs can produce high quality samples 10× to 50× faster in terms of wall-clock time compared to DDPMs, allow us to trade off computation for sample quality, and can perform semantically meaningful image interpolation directly in the latent space.*

The original codebase can be found at [ermongroup/ddim](https://github.com/ermongroup/ddim).

## DDIMPipeline

[[autodoc]] DDIMPipeline

- all

- __call__

## ImagePipelineOutput

[[autodoc]] pipelines.ImagePipelineOutput

| huggingface/diffusers/blob/main/docs/source/en/api/pipelines/ddim.md |

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# UPerNet

## Overview

The UPerNet model was proposed in [Unified Perceptual Parsing for Scene Understanding](https://arxiv.org/abs/1807.10221) by Tete Xiao, Yingcheng Liu, Bolei Zhou, Yuning Jiang, Jian Sun. UPerNet is a general framework to effectively segment a wide range of concepts from images, leveraging any vision backbone like [ConvNeXt](convnext) or [Swin](swin).

The abstract from the paper is the following:

*Humans recognize the visual world at multiple levels: we effortlessly categorize scenes and detect objects inside, while also identifying the textures and surfaces of the objects along with their different compositional parts. In this paper, we study a new task called Unified Perceptual Parsing, which requires the machine vision systems to recognize as many visual concepts as possible from a given image. A multi-task framework called UPerNet and a training strategy are developed to learn from heterogeneous image annotations. We benchmark our framework on Unified Perceptual Parsing and show that it is able to effectively segment a wide range of concepts from images. The trained networks are further applied to discover visual knowledge in natural scenes.*

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/model_doc/upernet_architecture.jpg" alt="drawing" width="600"/>

<small> UPerNet framework. Taken from the <a href="https://arxiv.org/abs/1807.10221">original paper</a>. </small>

This model was contributed by [nielsr](https://huggingface.co/nielsr). The original code is based on OpenMMLab's mmsegmentation [here](https://github.com/open-mmlab/mmsegmentation/blob/master/mmseg/models/decode_heads/uper_head.py).

## Usage examples

UPerNet is a general framework for semantic segmentation. It can be used with any vision backbone, like so:

```py

from transformers import SwinConfig, UperNetConfig, UperNetForSemanticSegmentation

backbone_config = SwinConfig(out_features=["stage1", "stage2", "stage3", "stage4"])

config = UperNetConfig(backbone_config=backbone_config)

model = UperNetForSemanticSegmentation(config)

```

To use another vision backbone, like [ConvNeXt](convnext), simply instantiate the model with the appropriate backbone:

```py

from transformers import ConvNextConfig, UperNetConfig, UperNetForSemanticSegmentation

backbone_config = ConvNextConfig(out_features=["stage1", "stage2", "stage3", "stage4"])

config = UperNetConfig(backbone_config=backbone_config)

model = UperNetForSemanticSegmentation(config)

```

Note that this will randomly initialize all the weights of the model.

## Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with UPerNet.

- Demo notebooks for UPerNet can be found [here](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/UPerNet).

- [`UperNetForSemanticSegmentation`] is supported by this [example script](https://github.com/huggingface/transformers/tree/main/examples/pytorch/semantic-segmentation) and [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/semantic_segmentation.ipynb).

- See also: [Semantic segmentation task guide](../tasks/semantic_segmentation)

If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

## UperNetConfig

[[autodoc]] UperNetConfig

## UperNetForSemanticSegmentation

[[autodoc]] UperNetForSemanticSegmentation

- forward | huggingface/transformers/blob/main/docs/source/en/model_doc/upernet.md |

# Tensorflow API

[[autodoc]] safetensors.tensorflow.load_file

[[autodoc]] safetensors.tensorflow.load

[[autodoc]] safetensors.tensorflow.save_file

[[autodoc]] safetensors.tensorflow.save

| huggingface/safetensors/blob/main/docs/source/api/tensorflow.mdx |

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# PoolFormer

## Overview

The PoolFormer model was proposed in [MetaFormer is Actually What You Need for Vision](https://arxiv.org/abs/2111.11418) by Sea AI Labs. Instead of designing a complicated token mixer to achieve SOTA performance, this work aims to demonstrate that the competence of transformer models largely stems from the general MetaFormer architecture.

The abstract from the paper is the following:

*Transformers have shown great potential in computer vision tasks. A common belief is their attention-based token mixer module contributes most to their competence. However, recent works show the attention-based module in transformers can be replaced by spatial MLPs and the resulted models still perform quite well. Based on this observation, we hypothesize that the general architecture of the transformers, instead of the specific token mixer module, is more essential to the model's performance. To verify this, we deliberately replace the attention module in transformers with an embarrassingly simple spatial pooling operator to conduct only the most basic token mixing. Surprisingly, we observe that the derived model, termed as PoolFormer, achieves competitive performance on multiple computer vision tasks. For example, on ImageNet-1K, PoolFormer achieves 82.1% top-1 accuracy, surpassing well-tuned vision transformer/MLP-like baselines DeiT-B/ResMLP-B24 by 0.3%/1.1% accuracy with 35%/52% fewer parameters and 48%/60% fewer MACs. The effectiveness of PoolFormer verifies our hypothesis and urges us to initiate the concept of "MetaFormer", a general architecture abstracted from transformers without specifying the token mixer. Based on the extensive experiments, we argue that MetaFormer is the key player in achieving superior results for recent transformer and MLP-like models on vision tasks. This work calls for more future research dedicated to improving MetaFormer instead of focusing on the token mixer modules. Additionally, our proposed PoolFormer could serve as a starting baseline for future MetaFormer architecture design.*

The figure below illustrates the architecture of PoolFormer. Taken from the [original paper](https://arxiv.org/abs/2111.11418).

<img width="600" src="https://user-images.githubusercontent.com/15921929/142746124-1ab7635d-2536-4a0e-ad43-b4fe2c5a525d.png"/>

This model was contributed by [heytanay](https://huggingface.co/heytanay). The original code can be found [here](https://github.com/sail-sg/poolformer).

## Usage tips

- PoolFormer has a hierarchical architecture, where instead of Attention, a simple Average Pooling layer is present. All checkpoints of the model can be found on the [hub](https://huggingface.co/models?other=poolformer).

- One can use [`PoolFormerImageProcessor`] to prepare images for the model.

- Like most models, PoolFormer comes in different sizes, the details of which can be found in the table below.

| **Model variant** | **Depths** | **Hidden sizes** | **Params (M)** | **ImageNet-1k Top 1** |
| :---------------: | ------------- | ------------------- | :------------: | :-------------------: |
| s12 | [2, 2, 6, 2] | [64, 128, 320, 512] | 12 | 77.2 |
| s24 | [4, 4, 12, 4] | [64, 128, 320, 512] | 21 | 80.3 |
| s36 | [6, 6, 18, 6] | [64, 128, 320, 512] | 31 | 81.4 |
| m36 | [6, 6, 18, 6] | [96, 192, 384, 768] | 56 | 82.1 |
| m48 | [8, 8, 24, 8] | [96, 192, 384, 768] | 73 | 82.5 |
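One way to read the table is that the number in each variant name matches the total number of blocks, that is, the sum of the per-stage depths. The short check below uses only the values from the table; the interpretation of the naming is an observation from those values, not something stated by the documentation.

```python
# Per-stage depths copied from the table above.
depths_by_variant = {
    "s12": [2, 2, 6, 2],
    "s24": [4, 4, 12, 4],
    "s36": [6, 6, 18, 6],
    "m36": [6, 6, 18, 6],
    "m48": [8, 8, 24, 8],
}

# Total number of blocks for each variant.
total_blocks = {name: sum(depths) for name, depths in depths_by_variant.items()}

for name, total in total_blocks.items():
    print(f"{name}: {total} blocks")
```

Read this way, `s36` and `m36` share the same depths and differ only in their hidden sizes.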

## Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with PoolFormer.

<PipelineTag pipeline="image-classification"/>

- [`PoolFormerForImageClassification`] is supported by this [example script](https://github.com/huggingface/transformers/tree/main/examples/pytorch/image-classification) and [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/image_classification.ipynb).

- See also: [Image classification task guide](../tasks/image_classification)

If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

## PoolFormerConfig

[[autodoc]] PoolFormerConfig

## PoolFormerFeatureExtractor

[[autodoc]] PoolFormerFeatureExtractor

- __call__

## PoolFormerImageProcessor

[[autodoc]] PoolFormerImageProcessor

- preprocess

## PoolFormerModel

[[autodoc]] PoolFormerModel

- forward

## PoolFormerForImageClassification

[[autodoc]] PoolFormerForImageClassification

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/poolformer.md |

<!--⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# TensorBoard logger

TensorBoard is a visualization toolkit for machine learning experimentation. TensorBoard allows tracking and visualizing metrics such as loss and accuracy, visualizing the model graph, viewing histograms, displaying images and much more. TensorBoard is well integrated with the Hugging Face Hub. The Hub automatically detects TensorBoard traces (such as `tfevents`) when they are pushed to the Hub and starts an instance to visualize them. To get more information about TensorBoard integration on the Hub, check out [this guide](https://huggingface.co/docs/hub/tensorboard).

To benefit from this integration, `huggingface_hub` provides a custom logger to push logs to the Hub. It works as a drop-in replacement for [SummaryWriter](https://tensorboardx.readthedocs.io/en/latest/tensorboard.html) with no extra code needed. Traces are still saved locally and a background job pushes them to the Hub at regular intervals.

## HFSummaryWriter

[[autodoc]] HFSummaryWriter | huggingface/huggingface_hub/blob/main/docs/source/en/package_reference/tensorboard.md |

<div align="center">

<h1><code>create-wasm-app</code></h1>

<strong>An <code>npm init</code> template for kick starting a project that uses NPM packages containing Rust-generated WebAssembly and bundles them with Webpack.</strong>

<p>

<a href="https://travis-ci.org/rustwasm/create-wasm-app"><img src="https://img.shields.io/travis/rustwasm/create-wasm-app.svg?style=flat-square" alt="Build Status" /></a>

</p>

<h3>

<a href="#usage">Usage</a>

<span> | </span>

<a href="https://discordapp.com/channels/442252698964721669/443151097398296587">Chat</a>

</h3>

<sub>Built with 🦀🕸 by <a href="https://rustwasm.github.io/">The Rust and WebAssembly Working Group</a></sub>

</div>

## About

This template is designed for depending on NPM packages that contain Rust-generated WebAssembly and using them to create a Website.

* Want to create an NPM package with Rust and WebAssembly? [Check out `wasm-pack-template`.](https://github.com/rustwasm/wasm-pack-template)

* Want to make a monorepo-style Website without publishing to NPM? Check out [`rust-webpack-template`](https://github.com/rustwasm/rust-webpack-template) and/or [`rust-parcel-template`](https://github.com/rustwasm/rust-parcel-template).

## 🚴 Usage

```

npm init wasm-app

```

## 🔋 Batteries Included

- `.gitignore`: ignores `node_modules`

- `LICENSE-APACHE` and `LICENSE-MIT`: most Rust projects are licensed this way, so these are included for you

- `README.md`: the file you are reading now!

- `index.html`: a bare bones html document that includes the webpack bundle

- `index.js`: example js file with a comment showing how to import and use a wasm pkg

- `package.json` and `package-lock.json`:
  - pulls in devDependencies for using webpack:
    - [`webpack`](https://www.npmjs.com/package/webpack)
    - [`webpack-cli`](https://www.npmjs.com/package/webpack-cli)
    - [`webpack-dev-server`](https://www.npmjs.com/package/webpack-dev-server)
  - defines a `start` script to run `webpack-dev-server`

- `webpack.config.js`: configuration file for bundling your js with webpack

## License

Licensed under either of

* Apache License, Version 2.0, ([LICENSE-APACHE](LICENSE-APACHE) or http://www.apache.org/licenses/LICENSE-2.0)

* MIT license ([LICENSE-MIT](LICENSE-MIT) or http://opensource.org/licenses/MIT)

at your option.

### Contribution

Unless you explicitly state otherwise, any contribution intentionally

submitted for inclusion in the work by you, as defined in the Apache-2.0

license, shall be dual licensed as above, without any additional terms or

conditions.

| huggingface/tokenizers/blob/main/tokenizers/examples/unstable_wasm/www/README.md |

Gradio Demo: audio_component

```

!pip install -q gradio

```

```

import gradio as gr

with gr.Blocks() as demo:
    gr.Audio()

demo.launch()

```

| gradio-app/gradio/blob/main/demo/audio_component/run.ipynb |

Gradio Demo: musical_instrument_identification

### This demo identifies musical instruments from an audio file. It uses Gradio's Audio and Label components.

```

!pip install -q gradio torch==1.12.0 torchvision==0.13.0 torchaudio==0.12.0 librosa==0.9.2 gdown

```

```

# Downloading files from the demo repo

import os

!wget -q https://github.com/gradio-app/gradio/raw/main/demo/musical_instrument_identification/data_setups.py

```

```

import gradio as gr

import torch

import torchaudio

from timeit import default_timer as timer

from data_setups import audio_preprocess, resample

import gdown

url = 'https://drive.google.com/uc?id=1X5CR18u0I-ZOi_8P0cNptCe5JGk9Ro0C'

output = 'piano.wav'

gdown.download(url, output, quiet=False)

url = 'https://drive.google.com/uc?id=1W-8HwmGR5SiyDbUcGAZYYDKdCIst07__'

output= 'torch_efficientnet_fold2_CNN.pth'

gdown.download(url, output, quiet=False)

device = "cuda" if torch.cuda.is_available() else "cpu"

SAMPLE_RATE = 44100

AUDIO_LEN = 2.90

model = torch.load("torch_efficientnet_fold2_CNN.pth", map_location=torch.device('cpu'))

LABELS = [

"Cello", "Clarinet", "Flute", "Acoustic Guitar", "Electric Guitar", "Organ", "Piano", "Saxophone", "Trumpet", "Violin", "Voice"

]

example_list = [

["piano.wav"]

]

def predict(audio_path):
    start_time = timer()
    wavform, sample_rate = torchaudio.load(audio_path)
    wav = resample(wavform, sample_rate, SAMPLE_RATE)
    if len(wav) > int(AUDIO_LEN * SAMPLE_RATE):
        wav = wav[:int(AUDIO_LEN * SAMPLE_RATE)]
    else:
        print(f"input length {len(wav)} too small!, need over {int(AUDIO_LEN * SAMPLE_RATE)}")
        return
    img = audio_preprocess(wav, SAMPLE_RATE).unsqueeze(0)
    model.eval()
    with torch.inference_mode():
        pred_probs = torch.softmax(model(img), dim=1)
    pred_labels_and_probs = {LABELS[i]: float(pred_probs[0][i]) for i in range(len(LABELS))}
    pred_time = round(timer() - start_time, 5)
    return pred_labels_and_probs, pred_time

demo = gr.Interface(fn=predict,
                    inputs=gr.Audio(type="filepath"),
                    outputs=[gr.Label(num_top_classes=11, label="Predictions"),
                             gr.Number(label="Prediction time (s)")],
                    examples=example_list,
                    cache_examples=False
                    )

demo.launch(debug=False)

```

| gradio-app/gradio/blob/main/demo/musical_instrument_identification/run.ipynb |

```python

import os

import torch

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer, default_data_collator, get_linear_schedule_with_warmup

from peft import get_peft_model, PromptTuningConfig, TaskType, PromptTuningInit

from torch.utils.data import DataLoader

from tqdm import tqdm

from datasets import load_dataset

os.environ["TOKENIZERS_PARALLELISM"] = "false"

device = "cuda"

model_name_or_path = "t5-large"

tokenizer_name_or_path = "t5-large"

checkpoint_name = "financial_sentiment_analysis_prompt_tuning_v1.pt"

text_column = "sentence"

label_column = "text_label"

max_length = 128

lr = 1

num_epochs = 5

batch_size = 8

```

```python

# creating model

peft_config = PromptTuningConfig(

task_type=TaskType.SEQ_2_SEQ_LM,

prompt_tuning_init=PromptTuningInit.TEXT,

num_virtual_tokens=20,

prompt_tuning_init_text="What is the sentiment of this article?\n",

inference_mode=False,

tokenizer_name_or_path=model_name_or_path,

)

model = AutoModelForSeq2SeqLM.from_pretrained(model_name_or_path)

model = get_peft_model(model, peft_config)

model.print_trainable_parameters()

model

```

```python

# loading dataset

dataset = load_dataset("financial_phrasebank", "sentences_allagree")

dataset = dataset["train"].train_test_split(test_size=0.1)

dataset["validation"] = dataset["test"]

del dataset["test"]

classes = dataset["train"].features["label"].names

dataset = dataset.map(

lambda x: {"text_label": [classes[label] for label in x["label"]]},

batched=True,

num_proc=1,

)

dataset["train"][0]

```

```python

# data preprocessing

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)

target_max_length = max([len(tokenizer(class_label)["input_ids"]) for class_label in classes])

def preprocess_function(examples):
    inputs = examples[text_column]
    targets = examples[label_column]
    model_inputs = tokenizer(inputs, max_length=max_length, padding="max_length", truncation=True, return_tensors="pt")
    labels = tokenizer(
        targets, max_length=target_max_length, padding="max_length", truncation=True, return_tensors="pt"
    )
    labels = labels["input_ids"]
    labels[labels == tokenizer.pad_token_id] = -100
    model_inputs["labels"] = labels
    return model_inputs

processed_datasets = dataset.map(

preprocess_function,

batched=True,

num_proc=1,

remove_columns=dataset["train"].column_names,

load_from_cache_file=False,

desc="Running tokenizer on dataset",

)

train_dataset = processed_datasets["train"]

eval_dataset = processed_datasets["validation"]

train_dataloader = DataLoader(

train_dataset, shuffle=True, collate_fn=default_data_collator, batch_size=batch_size, pin_memory=True

)

eval_dataloader = DataLoader(eval_dataset, collate_fn=default_data_collator, batch_size=batch_size, pin_memory=True)

```

```python

# optimizer and lr scheduler

optimizer = torch.optim.AdamW(model.parameters(), lr=lr)

lr_scheduler = get_linear_schedule_with_warmup(

optimizer=optimizer,

num_warmup_steps=0,

num_training_steps=(len(train_dataloader) * num_epochs),

)

```

```python

# training and evaluation

model = model.to(device)

for epoch in range(num_epochs):
    model.train()
    total_loss = 0
    for step, batch in enumerate(tqdm(train_dataloader)):
        batch = {k: v.to(device) for k, v in batch.items()}
        outputs = model(**batch)
        loss = outputs.loss
        total_loss += loss.detach().float()
        loss.backward()
        optimizer.step()
        lr_scheduler.step()
        optimizer.zero_grad()

    model.eval()
    eval_loss = 0
    eval_preds = []
    for step, batch in enumerate(tqdm(eval_dataloader)):
        batch = {k: v.to(device) for k, v in batch.items()}
        with torch.no_grad():
            outputs = model(**batch)
        loss = outputs.loss
        eval_loss += loss.detach().float()
        eval_preds.extend(
            tokenizer.batch_decode(torch.argmax(outputs.logits, -1).detach().cpu().numpy(), skip_special_tokens=True)
        )

    eval_epoch_loss = eval_loss / len(eval_dataloader)
    eval_ppl = torch.exp(eval_epoch_loss)
    train_epoch_loss = total_loss / len(train_dataloader)
    train_ppl = torch.exp(train_epoch_loss)
    print(f"{epoch=}: {train_ppl=} {train_epoch_loss=} {eval_ppl=} {eval_epoch_loss=}")

```

```python

# print accuracy

correct = 0

total = 0

for pred, true in zip(eval_preds, dataset["validation"]["text_label"]):
    if pred.strip() == true.strip():
        correct += 1
    total += 1

accuracy = correct / total * 100

print(f"{accuracy=} % on the evaluation dataset")

print(f"{eval_preds[:10]=}")

print(f"{dataset['validation']['text_label'][:10]=}")

```

```python

# saving model

peft_model_id = f"{model_name_or_path}_{peft_config.peft_type}_{peft_config.task_type}"

model.save_pretrained(peft_model_id)

```

```python

ckpt = f"{peft_model_id}/adapter_model.bin"

!du -h $ckpt

```

```python

from peft import PeftModel, PeftConfig

peft_model_id = f"{model_name_or_path}_{peft_config.peft_type}_{peft_config.task_type}"

config = PeftConfig.from_pretrained(peft_model_id)

model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path)

model = PeftModel.from_pretrained(model, peft_model_id)

```

```python

model.eval()

i = 107

input_ids = tokenizer(dataset["validation"][text_column][i], return_tensors="pt").input_ids

print(dataset["validation"][text_column][i])

print(input_ids)

with torch.no_grad():
    outputs = model.generate(input_ids=input_ids, max_new_tokens=10)
    print(outputs)
    print(tokenizer.batch_decode(outputs.detach().cpu().numpy(), skip_special_tokens=True))

```

| huggingface/peft/blob/main/examples/conditional_generation/peft_prompt_tuning_seq2seq.ipynb |

---

title: Brier Score

emoji: 🤗

colorFrom: blue

colorTo: red

sdk: gradio

sdk_version: 3.19.1

app_file: app.py

pinned: false

tags:

- evaluate

- metric

description: >-

The Brier score is a measure of the error between two probability distributions.

---

# Metric Card for Brier Score

## Metric Description

Brier score is a type of evaluation metric for classification tasks, where you predict outcomes such as win/lose, spam/ham, click/no-click etc.

`BrierScore = 1/N * sum( (p_i - o_i)^2 )`

Here `p_i` is the predicted probability that the event occurs, and `o_i` is 1 if the event occurred and 0 otherwise. The score ranges from 0 to 1, and lower values indicate better predictions.
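As a worked instance of the formula above, the following minimal sketch computes the score in plain Python. The helper name `brier_score_manual` and the sample values are illustrative assumptions, not part of the `evaluate` API.

```python
def brier_score_manual(probabilities, outcomes):
    """Mean squared difference between predicted probabilities and 0/1 outcomes."""
    if len(probabilities) != len(outcomes):
        raise ValueError("probabilities and outcomes must have the same length")
    squared_errors = [(p - o) ** 2 for p, o in zip(probabilities, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical data: four predicted probabilities and whether each event occurred.
probabilities = [0.1, 0.9, 0.8, 0.3]
outcomes = [0, 0, 1, 1]

score = brier_score_manual(probabilities, outcomes)
print(round(score, 4))  # 0.3375
```

The confident miss (predicting 0.9 for an event that did not occur) contributes 0.81 of the 1.35 total squared error, which shows how heavily the score penalizes overconfident wrong predictions.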

## How to Use

At minimum, this metric requires predictions and references as inputs.

```python

>>> import evaluate
>>> import numpy as np
>>> brier_score = evaluate.load("brier_score")
>>> references = np.array([0, 0, 1, 1])
>>> predictions = np.array([0.1, 0.9, 0.8, 0.3])
>>> results = brier_score.compute(predictions=predictions, references=references)

```

### Inputs

Mandatory inputs:

- `predictions`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the estimated target values.

- `references`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the ground truth (correct) target values.

Optional arguments:

- `sample_weight`: numeric array-like of shape (`n_samples,`) representing sample weights. The default is `None`.

- `pos_label`: the label of the positive class. The default is `1`.
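To illustrate what the optional arguments are generally understood to do, here is a plain-Python sketch: `pos_label` decides which reference label counts as the event, and `sample_weight` turns the mean into a weighted average. The function `weighted_brier_score` and the sample data are hypothetical and assume the conventional weighted-average definition; this is not the metric's actual implementation.

```python
def weighted_brier_score(probabilities, labels, sample_weight=None, pos_label=1):
    """Weighted mean of squared errors between probabilities and binarized labels."""
    # A reference equal to pos_label counts as "event occurred" (1), anything else as 0.
    outcomes = [1.0 if label == pos_label else 0.0 for label in labels]
    if sample_weight is None:
        # Assumption: no weights means every sample counts equally.
        sample_weight = [1.0] * len(outcomes)
    weighted_sum = sum(
        w * (p - o) ** 2 for w, p, o in zip(sample_weight, probabilities, outcomes)
    )
    return weighted_sum / sum(sample_weight)


# Hypothetical spam classifier outputs: predicted probability of "spam".
probabilities = [0.2, 0.7, 0.9]
labels = ["ham", "spam", "spam"]

unweighted = weighted_brier_score(probabilities, labels, pos_label="spam")
weighted = weighted_brier_score(probabilities, labels, sample_weight=[2, 1, 1], pos_label="spam")
print(round(unweighted, 4))  # 0.0467
print(round(weighted, 4))    # 0.045
```

Doubling the weight of the first sample pulls the score toward that sample's squared error (0.04), which is why the weighted value differs from the plain mean.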

### Output Values

This metric returns a dictionary with the following keys:

- `brier_score (float)`: the computed Brier score.

Output Example(s):

```python

{'brier_score': 0.5}

```