text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

--

title: "Efficient Controllable Generation for SDXL with T2I-Adapters"

thumbnail: /blog/assets/t2i-sdxl-adapters/thumbnail.png

authors:

- user: Adapter

guest: true

- user: valhalla

- user: sayakpaul

- user: Xintao

guest: true

- user: hysts

---

# Efficient Controllable Generation for SDXL with T2I-Adapters

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/t2i-adapters-sdxl/hf_tencent.png" height=180/>

</p>

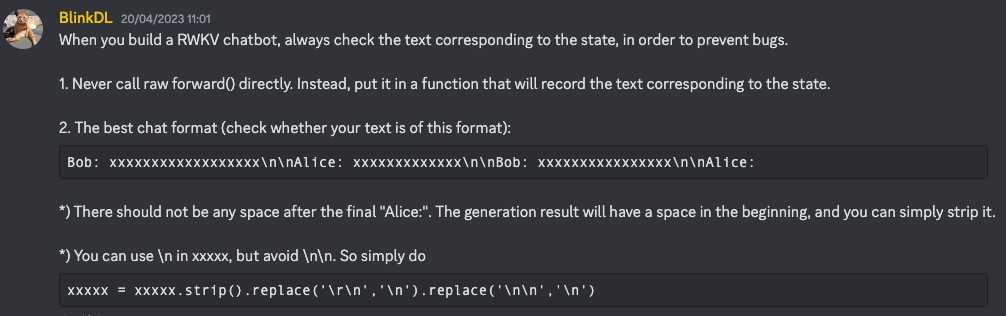

[T2I-Adapter](https://huggingface.co/papers/2302.08453) is an efficient plug-and-play model that provides extra guidance to pre-trained text-to-image models while freezing the original large text-to-image models. T2I-Adapter aligns internal knowledge in T2I models with external control signals. We can train various adapters according to different conditions and achieve rich control and editing effects.

As a contemporaneous work, [ControlNet](https://hf.co/papers/2302.05543) has a similar function and is widely used. However, it can be **computationally expensive** to run. This is because, during each denoising step of the reverse diffusion process, both the ControlNet and UNet need to be run. In addition, ControlNet emphasizes the importance of copying the UNet encoder as a control model, resulting in a larger parameter number. Thus, the generation is bottlenecked by the size of the ControlNet (the larger, the slower the process becomes).

T2I-Adapters provide a competitive advantage to ControlNets in this matter. T2I-Adapters are smaller in size, and unlike ControlNets, T2I-Adapters are run just once for the entire course of the denoising process.

| **Model Type** | **Model Parameters** | **Storage (fp16)** |

| --- | --- | --- |

| [ControlNet-SDXL](https://huggingface.co/diffusers/controlnet-canny-sdxl-1.0) | 1251 M | 2.5 GB |

| [ControlLoRA](https://huggingface.co/stabilityai/control-lora) (with rank 128) | 197.78 M (84.19% reduction) | 396 MB (84.53% reduction) |

| [T2I-Adapter-SDXL](https://huggingface.co/TencentARC/t2i-adapter-canny-sdxl-1.0) | 79 M (**_93.69% reduction_**) | 158 MB (**_94% reduction_**) |

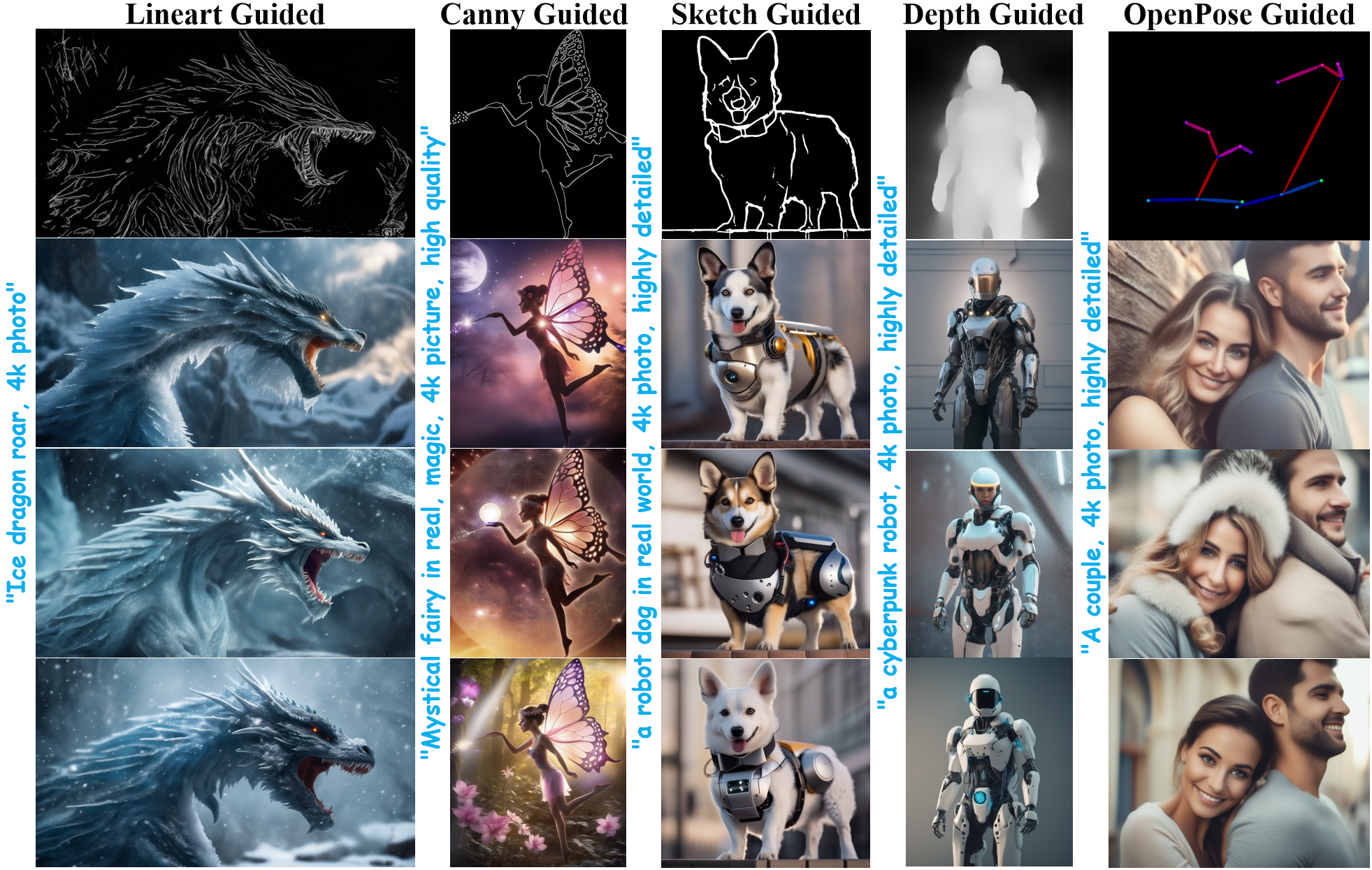

Over the past few weeks, the Diffusers team and the T2I-Adapter authors have been collaborating to bring the support of T2I-Adapters for [Stable Diffusion XL (SDXL)](https://huggingface.co/papers/2307.01952) in [`diffusers`](https://github.com/huggingface/diffusers). In this blog post, we share our findings from training T2I-Adapters on SDXL from scratch, some appealing results, and, of course, the T2I-Adapter checkpoints on various conditionings (sketch, canny, lineart, depth, and openpose)!

Compared to previous versions of T2I-Adapter (SD-1.4/1.5), [T2I-Adapter-SDXL](https://github.com/TencentARC/T2I-Adapter) still uses the original recipe, driving 2.6B SDXL with a 79M Adapter! T2I-Adapter-SDXL maintains powerful control capabilities while inheriting the high-quality generation of SDXL!

## Training T2I-Adapter-SDXL with `diffusers`

We built our training script on [this official example](https://github.com/huggingface/diffusers/blob/main/examples/t2i_adapter/README_sdxl.md) provided by `diffusers`.

Most of the T2I-Adapter models we mention in this blog post were trained on 3M high-resolution image-text pairs from LAION-Aesthetics V2 with the following settings:

- Training steps: 20000-35000

- Batch size: Data parallel with a single GPU batch size of 16 for a total batch size of 128.

- Learning rate: Constant learning rate of 1e-5.

- Mixed precision: fp16

We encourage the community to use our scripts to train custom and powerful T2I-Adapters, striking a competitive trade-off between speed, memory, and quality.

## Using T2I-Adapter-SDXL in `diffusers`

Here, we take the lineart condition as an example to demonstrate the usage of [T2I-Adapter-SDXL](https://github.com/TencentARC/T2I-Adapter/tree/XL). To get started, first install the required dependencies:

```bash

pip install -U git+https://github.com/huggingface/diffusers.git

pip install -U controlnet_aux==0.0.7 # for conditioning models and detectors

pip install transformers accelerate

```

The generation process of the T2I-Adapter-SDXL mainly consists of the following two steps:

1. Condition images are first prepared into the appropriate *control image* format.

2. The *control image* and *prompt* are passed to the [`StableDiffusionXLAdapterPipeline`](https://github.com/huggingface/diffusers/blob/0ec7a02b6a609a31b442cdf18962d7238c5be25d/src/diffusers/pipelines/t2i_adapter/pipeline_stable_diffusion_xl_adapter.py#L126).

Let's have a look at a simple example using the [Lineart Adapter](https://huggingface.co/TencentARC/t2i-adapter-lineart-sdxl-1.0). We start by initializing the T2I-Adapter pipeline for SDXL and the lineart detector.

```python

import torch

from controlnet_aux.lineart import LineartDetector

from diffusers import (AutoencoderKL, EulerAncestralDiscreteScheduler,

StableDiffusionXLAdapterPipeline, T2IAdapter)

from diffusers.utils import load_image, make_image_grid

# load adapter

adapter = T2IAdapter.from_pretrained(

"TencentARC/t2i-adapter-lineart-sdxl-1.0", torch_dtype=torch.float16, varient="fp16"

).to("cuda")

# load pipeline

model_id = "stabilityai/stable-diffusion-xl-base-1.0"

euler_a = EulerAncestralDiscreteScheduler.from_pretrained(

model_id, subfolder="scheduler"

)

vae = AutoencoderKL.from_pretrained(

"madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16

)

pipe = StableDiffusionXLAdapterPipeline.from_pretrained(

model_id,

vae=vae,

adapter=adapter,

scheduler=euler_a,

torch_dtype=torch.float16,

variant="fp16",

).to("cuda")

# load lineart detector

line_detector = LineartDetector.from_pretrained("lllyasviel/Annotators").to("cuda")

```

Then, load an image to detect lineart:

```python

url = "https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/org_lin.jpg"

image = load_image(url)

image = line_detector(image, detect_resolution=384, image_resolution=1024)

```

Then we generate:

```python

prompt = "Ice dragon roar, 4k photo"

negative_prompt = "anime, cartoon, graphic, text, painting, crayon, graphite, abstract, glitch, deformed, mutated, ugly, disfigured"

gen_images = pipe(

prompt=prompt,

negative_prompt=negative_prompt,

image=image,

num_inference_steps=30,

adapter_conditioning_scale=0.8,

guidance_scale=7.5,

).images[0]

gen_images.save("out_lin.png")

```

There are two important arguments to understand that help you control the amount of conditioning.

1. `adapter_conditioning_scale`

This argument controls how much influence the conditioning should have on the input. High values mean a higher conditioning effect and vice-versa.

2. `adapter_conditioning_factor`

This argument controls how many initial generation steps should have the conditioning applied. The value should be set between 0-1 (default is 1). The value of `adapter_conditioning_factor=1` means the adapter should be applied to all timesteps, while the `adapter_conditioning_factor=0.5` means it will only applied for the first 50% of the steps.

For more details, we welcome you to check the [official documentation](https://huggingface.co/docs/diffusers/main/en/api/pipelines/stable_diffusion/adapter).

## Try out the Demo

You can easily try T2I-Adapter-SDXL in [this Space](https://huggingface.co/spaces/TencentARC/T2I-Adapter-SDXL) or in the playground embedded below:

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.43.1/gradio.js"></script>

<gradio-app src="https://tencentarc-t2i-adapter-sdxl.hf.space"></gradio-app>

You can also try out [Doodly](https://huggingface.co/spaces/TencentARC/T2I-Adapter-SDXL-Sketch), built using the sketch model that turns your doodles into realistic images (with language supervision):

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.43.1/gradio.js"></script>

<gradio-app src="https://tencentarc-t2i-adapter-sdxl-sketch.hf.space"></gradio-app>

## More Results

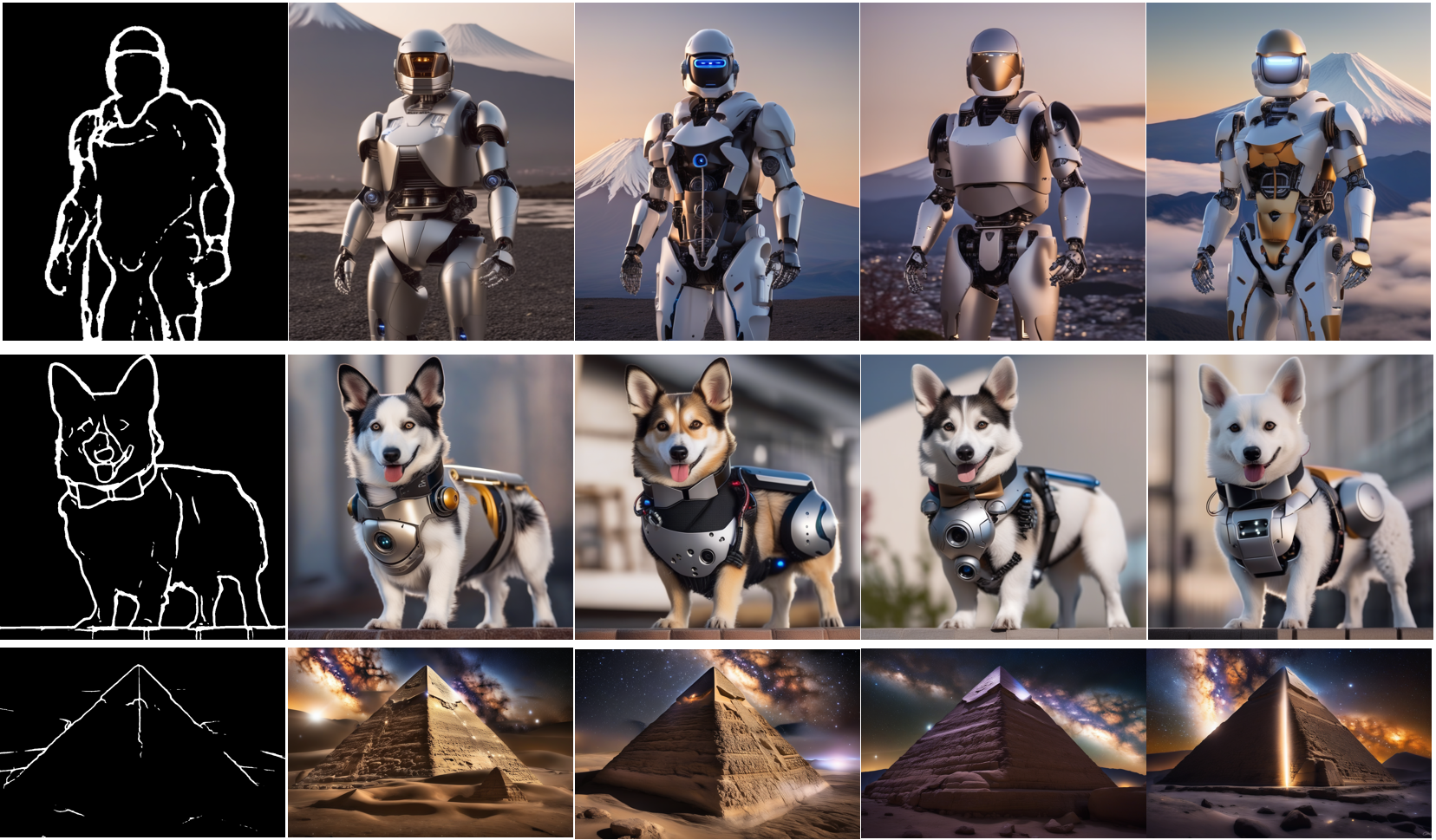

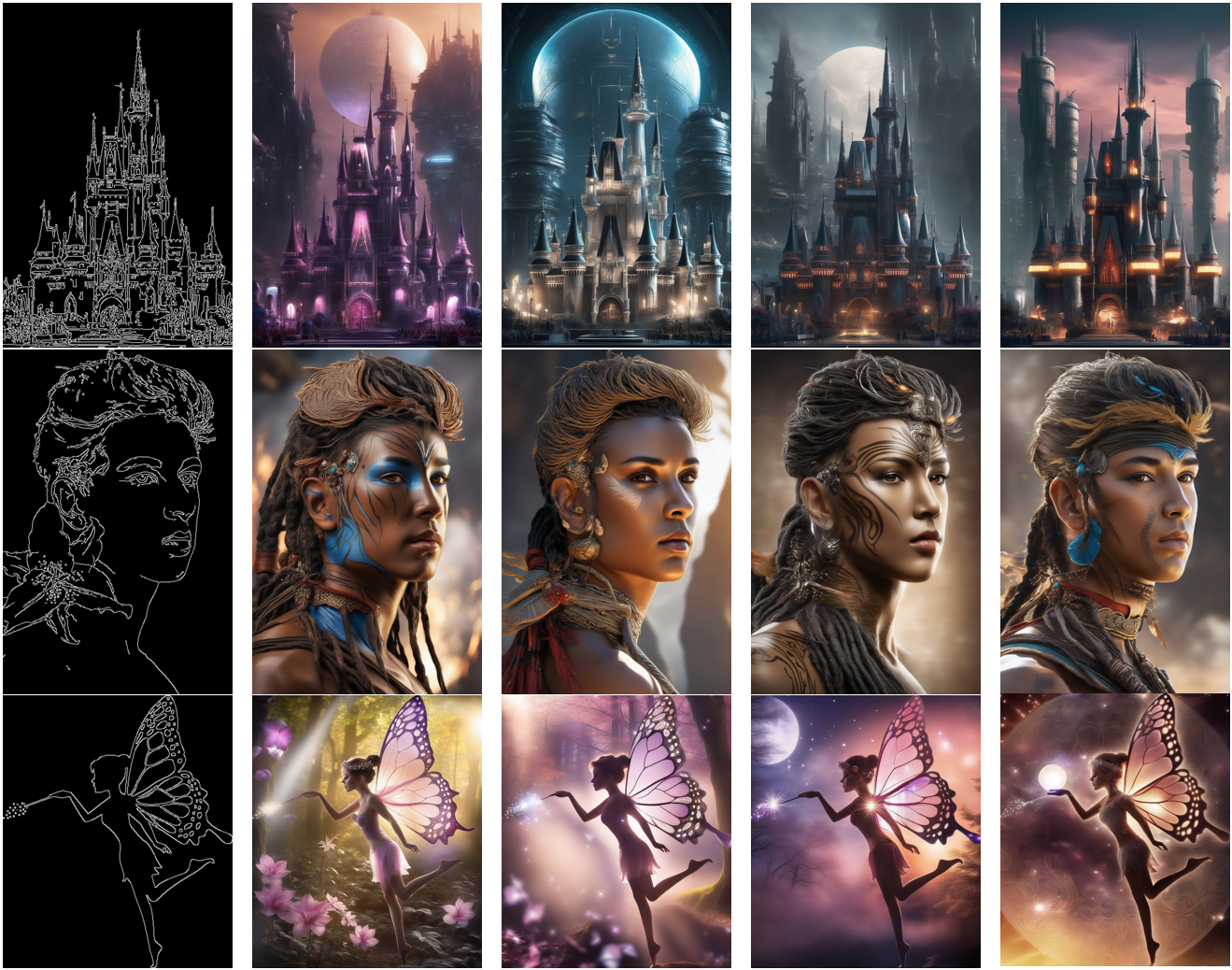

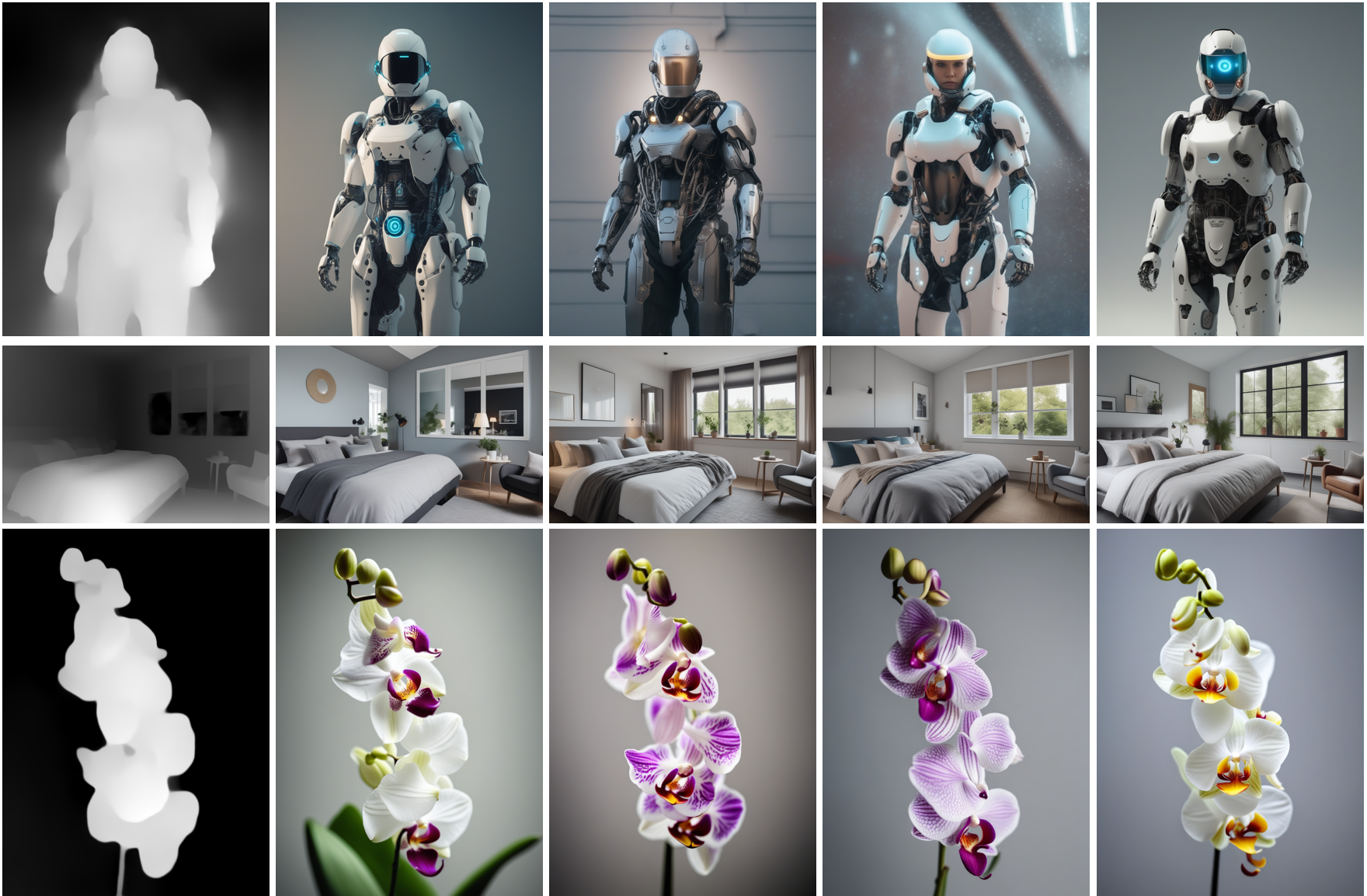

Below, we present results obtained from using different kinds of conditions. We also supplement the results with links to their corresponding pre-trained checkpoints. Their model cards contain more details on how they were trained, along with example usage.

### Lineart Guided

*Model from [`TencentARC/t2i-adapter-lineart-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-lineart-sdxl-1.0)*

### Sketch Guided

*Model from [`TencentARC/t2i-adapter-sketch-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-sketch-sdxl-1.0)*

### Canny Guided

*Model from [`TencentARC/t2i-adapter-canny-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-canny-sdxl-1.0)*

### Depth Guided

*Depth guided models from [`TencentARC/t2i-adapter-depth-midas-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-depth-midas-sdxl-1.0) and [`TencentARC/t2i-adapter-depth-zoe-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-depth-zoe-sdxl-1.0) respectively*

### OpenPose Guided

*Model from [`TencentARC/t2i-adapter-openpose-sdxl-1.0`](https://hf.co/TencentARC/t2i-adapter-openpose-sdxl-1.0)*

---

*Acknowledgements: Immense thanks to [William Berman](https://twitter.com/williamLberman) for helping us train the models and sharing his insights.*

| huggingface/blog/blob/main/t2i-sdxl-adapters.md |

--

title: "Introduction to Graph Machine Learning"

thumbnail: /blog/assets/125_intro-to-graphml/thumbnail.png

authors:

- user: clefourrier

---

# Introduction to Graph Machine Learning

In this blog post, we cover the basics of graph machine learning.

We first study what graphs are, why they are used, and how best to represent them. We then cover briefly how people learn on graphs, from pre-neural methods (exploring graph features at the same time) to what are commonly called Graph Neural Networks. Lastly, we peek into the world of Transformers for graphs.

## Graphs

### What is a graph?

In its essence, a graph is a description of items linked by relations.

Examples of graphs include social networks (Twitter, Mastodon, any citation networks linking papers and authors), molecules, knowledge graphs (such as UML diagrams, encyclopedias, and any website with hyperlinks between its pages), sentences expressed as their syntactic trees, any 3D mesh, and more! It is, therefore, not hyperbolic to say that graphs are everywhere.

The items of a graph (or network) are called its *nodes* (or vertices), and their connections its *edges* (or links). For example, in a social network, nodes are users and edges their connections; in a molecule, nodes are atoms and edges their molecular bond.

* A graph with either typed nodes or typed edges is called **heterogeneous** (example: citation networks with items that can be either papers or authors have typed nodes, and XML diagram where relations are typed have typed edges). It cannot be represented solely through its topology, it needs additional information. This post focuses on homogeneous graphs.

* A graph can also be **directed** (like a follower network, where A follows B does not imply B follows A) or **undirected** (like a molecule, where the relation between atoms goes both ways). Edges can connect different nodes or one node to itself (self-edges), but not all nodes need to be connected.

If you want to use your data, you must first consider its best characterisation (homogeneous/heterogeneous, directed/undirected, and so on).

### What are graphs used for?

Let's look at a panel of possible tasks we can do on graphs.

At the **graph level**, the main tasks are:

- graph generation, used in drug discovery to generate new plausible molecules,

- graph evolution (given a graph, predict how it will evolve over time), used in physics to predict the evolution of systems

- graph level prediction (categorisation or regression tasks from graphs), such as predicting the toxicity of molecules.

At the **node level**, it's usually a node property prediction. For example, [Alphafold](https://www.deepmind.com/blog/alphafold-a-solution-to-a-50-year-old-grand-challenge-in-biology) uses node property prediction to predict the 3D coordinates of atoms given the overall graph of the molecule, and therefore predict how molecules get folded in 3D space, a hard bio-chemistry problem.

At the **edge level**, it's either edge property prediction or missing edge prediction. Edge property prediction helps drug side effect prediction predict adverse side effects given a pair of drugs. Missing edge prediction is used in recommendation systems to predict whether two nodes in a graph are related.

It is also possible to work at the **sub-graph level** on community detection or subgraph property prediction. Social networks use community detection to determine how people are connected. Subgraph property prediction can be found in itinerary systems (such as [Google Maps](https://www.deepmind.com/blog/traffic-prediction-with-advanced-graph-neural-networks)) to predict estimated times of arrival.

Working on these tasks can be done in two ways.

When you want to predict the evolution of a specific graph, you work in a **transductive** setting, where everything (training, validation, and testing) is done on the same single graph. *If this is your setup, be careful! Creating train/eval/test datasets from a single graph is not trivial.* However, a lot of the work is done using different graphs (separate train/eval/test splits), which is called an **inductive** setting.

### How do we represent graphs?

The common ways to represent a graph to process and operate it are either:

* as the set of all its edges (possibly complemented with the set of all its nodes)

* or as the adjacency matrix between all its nodes. An adjacency matrix is a square matrix (of node size * node size) that indicates which nodes are directly connected to which others (where \(A_{ij} = 1\) if \(n_i\) and \(n_j\) are connected, else 0). *Note: most graphs are not densely connected and therefore have sparse adjacency matrices, which can make computations harder.*

However, though these representations seem familiar, do not be fooled!

Graphs are very different from typical objects used in ML because their topology is more complex than just "a sequence" (such as text and audio) or "an ordered grid" (images and videos, for example)): even if they can be represented as lists or matrices, their representation should not be considered an ordered object!

But what does this mean? If you have a sentence and shuffle its words, you create a new sentence. If you have an image and rearrange its columns, you create a new image.

<div align="center">

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/125_intro-to-graphml/assembled_hf.png" width="500" />

<figcaption>On the left, the Hugging Face logo - on the right, a shuffled Hugging Face logo, which is quite a different new image.</figcaption>

</figure>

</div>

This is not the case for a graph: if you shuffle its edge list or the columns of its adjacency matrix, it is still the same graph. (We explain this more formally a bit lower, look for permutation invariance).

<div align="center">

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/125_intro-to-graphml/assembled_graphs.png" width="1000" />

<figcaption>On the left, a small graph (nodes in yellow, edges in orange). In the centre, its adjacency matrix, with columns and rows ordered in the alphabetical node order: on the row for node A (first row), we can read that it is connected to E and C. On the right, a shuffled adjacency matrix (the columns are no longer sorted alphabetically), which is also a valid representation of the graph: A is still connected to E and C.</figcaption>

</figure>

</div>

## Graph representations through ML

The usual process to work on graphs with machine learning is first to generate a meaningful representation for your items of interest (nodes, edges, or full graphs depending on your task), then to use these to train a predictor for your target task. We want (as in other modalities) to constrain the mathematical representations of your objects so that similar objects are mathematically close. However, this similarity is hard to define strictly in graph ML: for example, are two nodes more similar when they have the same labels or the same neighbours?

Note: *In the following sections, we will focus on generating node representations.

Once you have node-level representations, it is possible to obtain edge or graph-level information. For edge-level information, you can concatenate node pair representations or do a dot product. For graph-level information, it is possible to do a global pooling (average, sum, etc.) on the concatenated tensor of all the node-level representations. Still, it will smooth and lose information over the graph -- a recursive hierarchical pooling can make more sense, or add a virtual node, connected to all other nodes in the graph, and use its representation as the overall graph representation.*

### Pre-neural approaches

#### Simply using engineered features

Before neural networks, graphs and their items of interest could be represented as combinations of features, in a task-specific fashion. Now, these features are still used for data augmentation and [semi-supervised learning](https://arxiv.org/abs/2202.08871), though [more complex feature generation methods](https://arxiv.org/abs/2208.11973) exist; it can be essential to find how best to provide them to your network depending on your task.

**Node-level** features can give information about importance (how important is this node for the graph?) and/or structure based (what is the shape of the graph around the node?), and can be combined.

The node **centrality** measures the node importance in the graph. It can be computed recursively by summing the centrality of each node’s neighbours until convergence, or through shortest distance measures between nodes, for example. The node **degree** is the quantity of direct neighbours it has. The **clustering coefficient** measures how connected the node neighbours are. **Graphlets degree vectors** count how many different graphlets are rooted at a given node, where graphlets are all the mini graphs you can create with a given number of connected nodes (with three connected nodes, you can have a line with two edges, or a triangle with three edges).

<div align="center">

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/125_intro-to-graphml/graphlets.png" width="700" />

<figcaption>The 2-to 5-node graphlets (Pržulj, 2007)</figcaption>

</figure>

</div>

**Edge-level** features complement the representation with more detailed information about the connectedness of the nodes, and include the **shortest distance** between two nodes, their **common neighbours**, and their **Katz index** (which is the number of possible walks of up to a certain length between two nodes - it can be computed directly from the adjacency matrix).

**Graph level features** contain high-level information about graph similarity and specificities. Total **graphlet counts**, though computationally expensive, provide information about the shape of sub-graphs. **Kernel methods** measure similarity between graphs through different "bag of nodes" methods (similar to bag of words).

### Walk-based approaches

[**Walk-based approaches**](https://en.wikipedia.org/wiki/Random_walk) use the probability of visiting a node j from a node i on a random walk to define similarity metrics; these approaches combine both local and global information. [**Node2Vec**](https://snap.stanford.edu/node2vec/), for example, simulates random walks between nodes of a graph, then processes these walks with a skip-gram, [much like we would do with words in sentences](https://arxiv.org/abs/1301.3781), to compute embeddings. These approaches can also be used to [accelerate computations](https://arxiv.org/abs/1208.3071) of the [**Page Rank method**](http://infolab.stanford.edu/pub/papers/google.pdf), which assigns an importance score to each node (based on its connectivity to other nodes, evaluated as its frequency of visit by random walk, for example).

However, these methods have limits: they cannot obtain embeddings for new nodes, do not capture structural similarity between nodes finely, and cannot use added features.

## Graph Neural Networks

Neural networks can generalise to unseen data. Given the representation constraints we evoked earlier, what should a good neural network be to work on graphs?

It should:

- be permutation invariant:

- Equation: \\(f(P(G))=f(G)\\) with f the network, P the permutation function, G the graph

- Explanation: the representation of a graph and its permutations should be the same after going through the network

- be permutation equivariant

- Equation: \\(P(f(G))=f(P(G))\\) with f the network, P the permutation function, G the graph

- Explanation: permuting the nodes before passing them to the network should be equivalent to permuting their representations

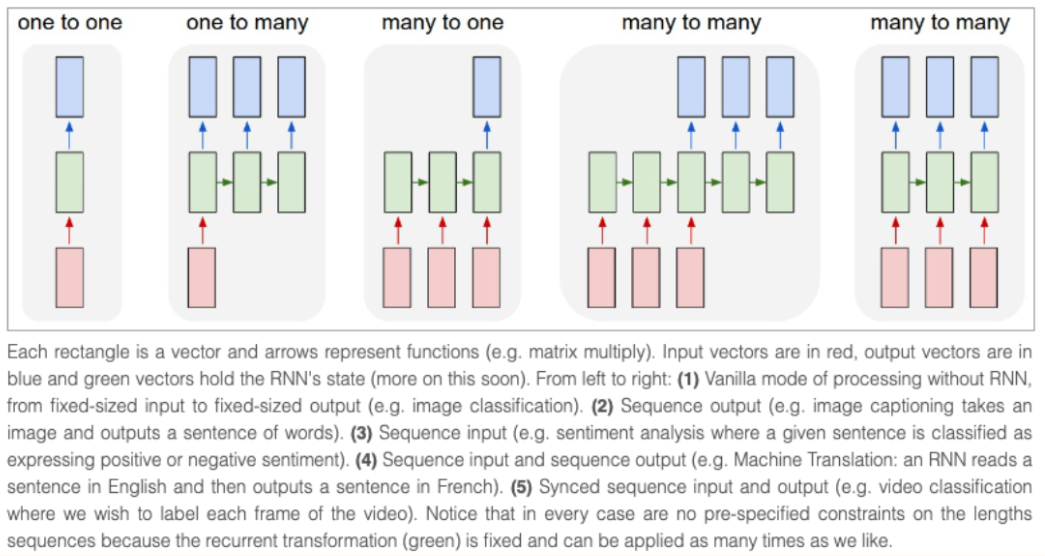

Typical neural networks, such as RNNs or CNNs are not permutation invariant. A new architecture, the [Graph Neural Network](https://ieeexplore.ieee.org/abstract/document/1517930), was therefore introduced (initially as a state-based machine).

A GNN is made of successive layers. A GNN layer represents a node as the combination (**aggregation**) of the representations of its neighbours and itself from the previous layer (**message passing**), plus usually an activation to add some nonlinearity.

**Comparison to other models**: A CNN can be seen as a GNN with fixed neighbour sizes (through the sliding window) and ordering (it is not permutation equivariant). A [Transformer](https://arxiv.org/abs/1706.03762v3) without positional embeddings can be seen as a GNN on a fully-connected input graph.

### Aggregation and message passing

There are many ways to aggregate messages from neighbour nodes, summing, averaging, for example. Some notable works following this idea include:

- [Graph Convolutional Networks](https://tkipf.github.io/graph-convolutional-networks/) averages the normalised representation of the neighbours for a node (most GNNs are actually GCNs);

- [Graph Attention Networks](https://petar-v.com/GAT/) learn to weigh the different neighbours based on their importance (like transformers);

- [GraphSAGE](https://snap.stanford.edu/graphsage/) samples neighbours at different hops before aggregating their information in several steps with max pooling.

- [Graph Isomorphism Networks](https://arxiv.org/pdf/1810.00826v3.pdf) aggregates representation by applying an MLP to the sum of the neighbours' node representations.

**Choosing an aggregation**: Some aggregation techniques (notably mean/max pooling) can encounter failure cases when creating representations which finely differentiate nodes with different neighbourhoods of similar nodes (ex: through mean pooling, a neighbourhood with 4 nodes, represented as 1,1,-1,-1, averaged as 0, is not going to be different from one with only 3 nodes represented as -1, 0, 1).

### GNN shape and the over-smoothing problem

At each new layer, the node representation includes more and more nodes.

A node, through the first layer, is the aggregation of its direct neighbours. Through the second layer, it is still the aggregation of its direct neighbours, but this time, their representations include their own neighbours (from the first layer). After n layers, the representation of all nodes becomes an aggregation of all their neighbours at distance n, therefore, of the full graph if its diameter is smaller than n!

If your network has too many layers, there is a risk that each node becomes an aggregation of the full graph (and that node representations converge to the same one for all nodes). This is called **the oversmoothing problem**

This can be solved by :

- scaling the GNN to have a layer number small enough to not approximate each node as the whole network (by first analysing the graph diameter and shape)

- increasing the complexity of the layers

- adding non message passing layers to process the messages (such as simple MLPs)

- adding skip-connections.

The oversmoothing problem is an important area of study in graph ML, as it prevents GNNs to scale up, like Transformers have been shown to in other modalities.

## Graph Transformers

A Transformer without its positional encoding layer is permutation invariant, and Transformers are known to scale well, so recently, people have started looking at adapting Transformers to graphs ([Survey)](https://github.com/ChandlerBang/awesome-graph-transformer). Most methods focus on the best ways to represent graphs by looking for the best features and best ways to represent positional information and changing the attention to fit this new data.

Here are some interesting methods which got state-of-the-art results or close on one of the hardest available benchmarks as of writing, [Stanford's Open Graph Benchmark](https://ogb.stanford.edu/):

- [*Graph Transformer for Graph-to-Sequence Learning*](https://arxiv.org/abs/1911.07470) (Cai and Lam, 2020) introduced a Graph Encoder, which represents nodes as a concatenation of their embeddings and positional embeddings, node relations as the shortest paths between them, and combine both in a relation-augmented self attention.

- [*Rethinking Graph Transformers with Spectral Attention*](https://arxiv.org/abs/2106.03893) (Kreuzer et al, 2021) introduced Spectral Attention Networks (SANs). These combine node features with learned positional encoding (computed from Laplacian eigenvectors/values), to use as keys and queries in the attention, with attention values being the edge features.

- [*GRPE: Relative Positional Encoding for Graph Transformer*](https://arxiv.org/abs/2201.12787) (Park et al, 2021) introduced the Graph Relative Positional Encoding Transformer. It represents a graph by combining a graph-level positional encoding with node information, edge level positional encoding with node information, and combining both in the attention.

- [*Global Self-Attention as a Replacement for Graph Convolution*](https://arxiv.org/abs/2108.03348) (Hussain et al, 2021) introduced the Edge Augmented Transformer. This architecture embeds nodes and edges separately, and aggregates them in a modified attention.

- [*Do Transformers Really Perform Badly for Graph Representation*](https://arxiv.org/abs/2106.05234) (Ying et al, 2021) introduces Microsoft's [**Graphormer**](https://www.microsoft.com/en-us/research/project/graphormer/), which won first place on the OGB when it came out. This architecture uses node features as query/key/values in the attention, and sums their representation with a combination of centrality, spatial, and edge encodings in the attention mechanism.

The most recent approach is [*Pure Transformers are Powerful Graph Learners*](https://arxiv.org/abs/2207.02505) (Kim et al, 2022), which introduced **TokenGT**. This method represents input graphs as a sequence of node and edge embeddings (augmented with orthonormal node identifiers and trainable type identifiers), with no positional embedding, and provides this sequence to Transformers as input. It is extremely simple, yet smart!

A bit different, [*Recipe for a General, Powerful, Scalable Graph Transformer*](https://arxiv.org/abs/2205.12454) (Rampášek et al, 2022) introduces, not a model, but a framework, called **GraphGPS**. It allows to combine message passing networks with linear (long range) transformers to create hybrid networks easily. This framework also contains several tools to compute positional and structural encodings (node, graph, edge level), feature augmentation, random walks, etc.

Using transformers for graphs is still very much a field in its infancy, but it looks promising, as it could alleviate several limitations of GNNs, such as scaling to larger/denser graphs, or increasing model size without oversmoothing.

# Further resources

If you want to delve deeper, you can look at some of these courses:

- Academic format

- [Stanford's Machine Learning with Graphs](https://web.stanford.edu/class/cs224w/)

- [McGill's Graph Representation Learning](https://cs.mcgill.ca/~wlh/comp766/)

- Video format

- [Geometric Deep Learning course](https://www.youtube.com/playlist?list=PLn2-dEmQeTfSLXW8yXP4q_Ii58wFdxb3C)

- Books

- [Graph Representation Learning*, Hamilton](https://www.cs.mcgill.ca/~wlh/grl_book/)

- Surveys

- [Graph Neural Networks Study Guide](https://github.com/dair-ai/GNNs-Recipe)

- Research directions

- [GraphML in 2023](https://towardsdatascience.com/graph-ml-in-2023-the-state-of-affairs-1ba920cb9232) summarizes plausible interesting directions for GraphML in 2023.

Nice libraries to work on graphs are [PyGeometric](https://pytorch-geometric.readthedocs.io/en/latest/) or the [Deep Graph Library](https://www.dgl.ai/) (for graph ML) and [NetworkX](https://networkx.org/) (to manipulate graphs more generally).

If you need quality benchmarks you can check out:

- [OGB, the Open Graph Benchmark](https://ogb.stanford.edu/): the reference graph benchmark datasets, for different tasks and data scales.

- [Benchmarking GNNs](https://github.com/graphdeeplearning/benchmarking-gnns): Library and datasets to benchmark graph ML networks and their expressivity. The associated paper notably studies which datasets are relevant from a statistical standpoint, what graph properties they allow to evaluate, and which datasets should no longer be used as benchmarks.

- [Long Range Graph Benchmark](https://github.com/vijaydwivedi75/lrgb): recent (Nov2022) benchmark looking at long range graph information

- [Taxonomy of Benchmarks in Graph Representation Learning](https://openreview.net/pdf?id=EM-Z3QFj8n): paper published at the 2022 Learning on Graphs conference, which analyses and sort existing benchmarks datasets

For more datasets, see:

- [Paper with code Graph tasks Leaderboards](https://paperswithcode.com/area/graphs): Leaderboard for public datasets and benchmarks - careful, not all the benchmarks on this leaderboard are still relevant

- [TU datasets](https://chrsmrrs.github.io/datasets/docs/datasets/): Compilation of publicly available datasets, now ordered by categories and features. Most of these datasets can also be loaded with PyG, and a number of them have been ported to Datasets

- [SNAP datasets: Stanford Large Network Dataset Collection](https://snap.stanford.edu/data/):

- [MoleculeNet datasets](https://moleculenet.org/datasets-1)

- [Relational datasets repository](https://relational.fit.cvut.cz/)

### External images attribution

Emojis in the thumbnail come from Openmoji (CC-BY-SA 4.0), the Graphlets figure comes from *Biological network comparison using graphlet degree distribution* (Pržulj, 2007).

| huggingface/blog/blob/main/intro-graphml.md |

--

title: "Transformer-based Encoder-Decoder Models"

thumbnail: /blog/assets/05_encoder_decoder/thumbnail.png

authors:

- user: patrickvonplaten

---

# Transformers-based Encoder-Decoder Models

<a target="_blank" href="https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/Encoder_Decoder_Model.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

# **Transformer-based Encoder-Decoder Models**

```bash

!pip install transformers==4.2.1

!pip install sentencepiece==0.1.95

```

The *transformer-based* encoder-decoder model was introduced by Vaswani

et al. in the famous [Attention is all you need

paper](https://arxiv.org/abs/1706.03762) and is today the *de-facto*

standard encoder-decoder architecture in natural language processing

(NLP).

Recently, there has been a lot of research on different *pre-training*

objectives for transformer-based encoder-decoder models, *e.g.* T5,

Bart, Pegasus, ProphetNet, Marge, *etc*\..., but the model architecture

has stayed largely the same.

The goal of the blog post is to give an **in-detail** explanation of

**how** the transformer-based encoder-decoder architecture models

*sequence-to-sequence* problems. We will focus on the mathematical model

defined by the architecture and how the model can be used in inference.

Along the way, we will give some background on sequence-to-sequence

models in NLP and break down the *transformer-based* encoder-decoder

architecture into its **encoder** and **decoder** parts. We provide many

illustrations and establish the link between the theory of

*transformer-based* encoder-decoder models and their practical usage in

🤗Transformers for inference. Note that this blog post does *not* explain

how such models can be trained - this will be the topic of a future blog

post.

Transformer-based encoder-decoder models are the result of years of

research on _representation learning_ and _model architectures_. This

notebook provides a short summary of the history of neural

encoder-decoder models. For more context, the reader is advised to read

this awesome [blog

post](https://ruder.io/a-review-of-the-recent-history-of-nlp/) by

Sebastion Ruder. Additionally, a basic understanding of the

_self-attention architecture_ is recommended. The following blog post by

Jay Alammar serves as a good refresher on the original Transformer model

[here](http://jalammar.github.io/illustrated-transformer/).

At the time of writing this notebook, 🤗Transformers comprises the

encoder-decoder models *T5*, *Bart*, *MarianMT*, and *Pegasus*, which

are summarized in the docs under [model

summaries](https://huggingface.co/transformers/model_summary.html#sequence-to-sequence-models).

The notebook is divided into four parts:

- **Background** - *A short history of neural encoder-decoder models

is given with a focus on RNN-based models.*

- **Encoder-Decoder** - *The transformer-based encoder-decoder model

is presented and it is explained how the model is used for

inference.*

- **Encoder** - *The encoder part of the model is explained in

detail.*

- **Decoder** - *The decoder part of the model is explained in

detail.*

Each part builds upon the previous part, but can also be read on its

own.

## **Background**

Tasks in natural language generation (NLG), a subfield of NLP, are best

expressed as sequence-to-sequence problems. Such tasks can be defined as

finding a model that maps a sequence of input words to a sequence of

target words. Some classic examples are *summarization* and

*translation*. In the following, we assume that each word is encoded

into a vector representation. \\(n\\) input words can then be represented as

a sequence of \\(n\\) input vectors:

$$\mathbf{X}_{1:n} = \{\mathbf{x}_1, \ldots, \mathbf{x}_n\}.$$

Consequently, sequence-to-sequence problems can be solved by finding a

mapping \\(f\\) from an input sequence of \\(n\\) vectors \\(\mathbf{X}_{1:n}\\) to

a sequence of \\(m\\) target vectors \\(\mathbf{Y}_{1:m}\\), whereas the number

of target vectors \\(m\\) is unknown apriori and depends on the input

sequence:

$$ f: \mathbf{X}_{1:n} \to \mathbf{Y}_{1:m}. $$

[Sutskever et al. (2014)](https://arxiv.org/abs/1409.3215) noted that

deep neural networks (DNN)s, \"*despite their flexibility and power can

only define a mapping whose inputs and targets can be sensibly encoded

with vectors of fixed dimensionality.*\" \\({}^1\\)

Using a DNN model \\({}^2\\) to solve sequence-to-sequence problems would

therefore mean that the number of target vectors \\(m\\) has to be known

*apriori* and would have to be independent of the input

\\(\mathbf{X}_{1:n}\\). This is suboptimal because, for tasks in NLG, the

number of target words usually depends on the input \\(\mathbf{X}_{1:n}\\)

and not just on the input length \\(n\\). *E.g.*, an article of 1000 words

can be summarized to both 200 words and 100 words depending on its

content.

In 2014, [Cho et al.](https://arxiv.org/pdf/1406.1078.pdf) and

[Sutskever et al.](https://arxiv.org/abs/1409.3215) proposed to use an

encoder-decoder model purely based on recurrent neural networks (RNNs)

for *sequence-to-sequence* tasks. In contrast to DNNS, RNNs are capable

of modeling a mapping to a variable number of target vectors. Let\'s

dive a bit deeper into the functioning of RNN-based encoder-decoder

models.

During inference, the encoder RNN encodes an input sequence

\\(\mathbf{X}_{1:n}\\) by successively updating its *hidden state* \\({}^3\\).

After having processed the last input vector \\(\mathbf{x}_n\\), the

encoder\'s hidden state defines the input encoding \\(\mathbf{c}\\). Thus,

the encoder defines the mapping:

$$ f_{\theta_{enc}}: \mathbf{X}_{1:n} \to \mathbf{c}. $$

Then, the decoder\'s hidden state is initialized with the input encoding

and during inference, the decoder RNN is used to auto-regressively

generate the target sequence. Let\'s explain.

Mathematically, the decoder defines the probability distribution of a

target sequence \\(\mathbf{Y}_{1:m}\\) given the hidden state \\(\mathbf{c}\\):

$$ p_{\theta_{dec}}(\mathbf{Y}_{1:m} |\mathbf{c}). $$

By Bayes\' rule the distribution can be decomposed into conditional

distributions of single target vectors as follows:

$$ p_{\theta_{dec}}(\mathbf{Y}_{1:m} |\mathbf{c}) = \prod_{i=1}^{m} p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{c}). $$

Thus, if the architecture can model the conditional distribution of the

next target vector, given all previous target vectors:

$$ p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{c}), \forall i \in \{1, \ldots, m\},$$

then it can model the distribution of any target vector sequence given

the hidden state \\(\mathbf{c}\\) by simply multiplying all conditional

probabilities.

So how does the RNN-based decoder architecture model

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{c})\\)?

In computational terms, the model sequentially maps the previous inner

hidden state \\(\mathbf{c}_{i-1}\\) and the previous target vector

\\(\mathbf{y}_{i-1}\\) to the current inner hidden state \\(\mathbf{c}_i\\) and a

*logit vector* \\(\mathbf{l}_i\\) (shown in dark red below):

$$ f_{\theta_{\text{dec}}}(\mathbf{y}_{i-1}, \mathbf{c}_{i-1}) \to \mathbf{l}_i, \mathbf{c}_i.$$

\\(\mathbf{c}_0\\) is thereby defined as \\(\mathbf{c}\\) being the output

hidden state of the RNN-based encoder. Subsequently, the *softmax*

operation is used to transform the logit vector \\(\mathbf{l}_i\\) to a

conditional probablity distribution of the next target vector:

$$ p(\mathbf{y}_i | \mathbf{l}_i) = \textbf{Softmax}(\mathbf{l}_i), \text{ with } \mathbf{l}_i = f_{\theta_{\text{dec}}}(\mathbf{y}_{i-1}, \mathbf{c}_{\text{prev}}). $$

For more detail on the logit vector and the resulting probability

distribution, please see footnote \\({}^4\\). From the above equation, we

can see that the distribution of the current target vector

\\(\mathbf{y}_i\\) is directly conditioned on the previous target vector

\\(\mathbf{y}_{i-1}\\) and the previous hidden state \\(\mathbf{c}_{i-1}\\).

Because the previous hidden state \\(\mathbf{c}_{i-1}\\) depends on all

previous target vectors \\(\mathbf{y}_0, \ldots, \mathbf{y}_{i-2}\\), it can

be stated that the RNN-based decoder *implicitly* (*e.g.* *indirectly*)

models the conditional distribution

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{c})\\).

The space of possible target vector sequences \\(\mathbf{Y}_{1:m}\\) is

prohibitively large so that at inference, one has to rely on decoding

methods \\({}^5\\) that efficiently sample high probability target vector

sequences from \\(p_{\theta_{dec}}(\mathbf{Y}_{1:m} |\mathbf{c})\\).

Given such a decoding method, during inference, the next input vector

\\(\mathbf{y}_i\\) can then be sampled from

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{c})\\)

and is consequently appended to the input sequence so that the decoder

RNN then models

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_{i+1} | \mathbf{Y}_{0: i}, \mathbf{c})\\)

to sample the next input vector \\(\mathbf{y}_{i+1}\\) and so on in an

*auto-regressive* fashion.

An important feature of RNN-based encoder-decoder models is the

definition of *special* vectors, such as the \\(\text{EOS}\\) and

\\(\text{BOS}\\) vector. The \\(\text{EOS}\\) vector often represents the final

input vector \\(\mathbf{x}_n\\) to \"cue\" the encoder that the input

sequence has ended and also defines the end of the target sequence. As

soon as the \\(\text{EOS}\\) is sampled from a logit vector, the generation

is complete. The \\(\text{BOS}\\) vector represents the input vector

\\(\mathbf{y}_0\\) fed to the decoder RNN at the very first decoding step.

To output the first logit \\(\mathbf{l}_1\\), an input is required and since

no input has been generated at the first step a special \\(\text{BOS}\\)

input vector is fed to the decoder RNN. Ok - quite complicated! Let\'s

illustrate and walk through an example.

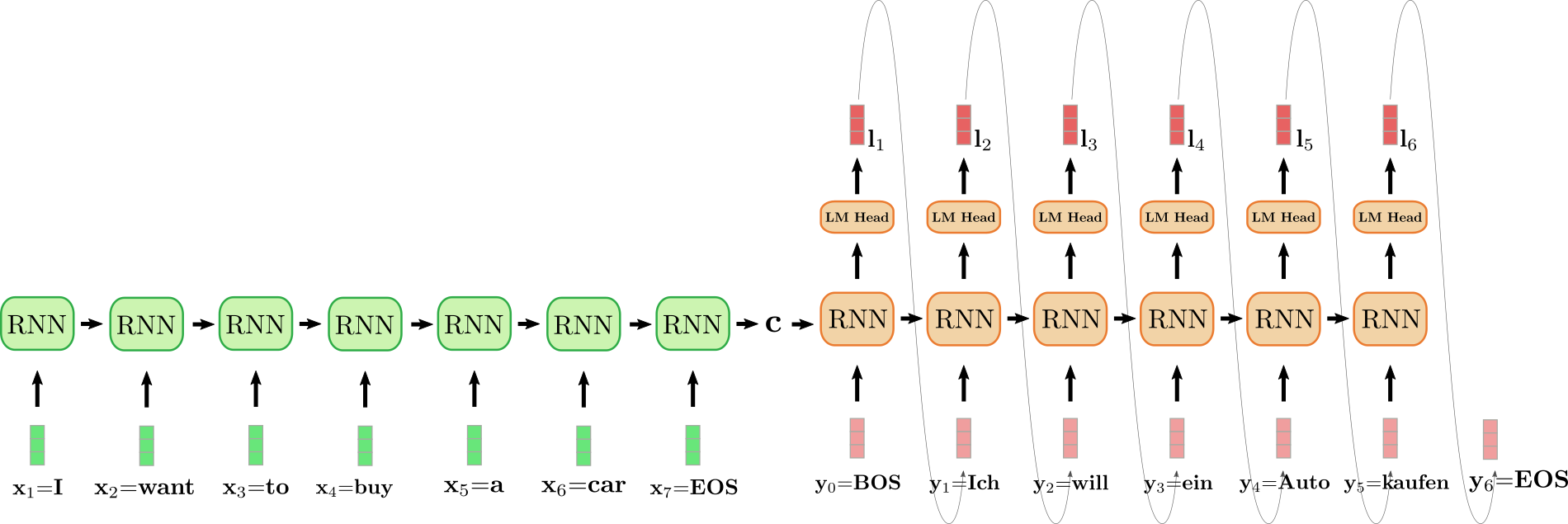

The unfolded RNN encoder is colored in green and the unfolded RNN

decoder is colored in red.

The English sentence \"I want to buy a car\", represented by

\\(\mathbf{x}_1 = \text{I}\\), \\(\mathbf{x}_2 = \text{want}\\),

\\(\mathbf{x}_3 = \text{to}\\), \\(\mathbf{x}_4 = \text{buy}\\),

\\(\mathbf{x}_5 = \text{a}\\), \\(\mathbf{x}_6 = \text{car}\\) and

\\(\mathbf{x}_7 = \text{EOS}\\) is translated into German: \"Ich will ein

Auto kaufen\" defined as \\(\mathbf{y}_0 = \text{BOS}\\),

\\(\mathbf{y}_1 = \text{Ich}\\), \\(\mathbf{y}_2 = \text{will}\\),

\\(\mathbf{y}_3 = \text{ein}\\),

\\(\mathbf{y}_4 = \text{Auto}, \mathbf{y}_5 = \text{kaufen}\\) and

\\(\mathbf{y}_6=\text{EOS}\\). To begin with, the input vector

\\(\mathbf{x}_1 = \text{I}\\) is processed by the encoder RNN and updates

its hidden state. Note that because we are only interested in the final

encoder\'s hidden state \\(\mathbf{c}\\), we can disregard the RNN

encoder\'s target vector. The encoder RNN then processes the rest of the

input sentence \\(\text{want}\\), \\(\text{to}\\), \\(\text{buy}\\), \\(\text{a}\\),

\\(\text{car}\\), \\(\text{EOS}\\) in the same fashion, updating its hidden

state at each step until the vector \\(\mathbf{x}_7={EOS}\\) is reached

\\({}^6\\). In the illustration above the horizontal arrow connecting the

unfolded encoder RNN represents the sequential updates of the hidden

state. The final hidden state of the encoder RNN, represented by

\\(\mathbf{c}\\) then completely defines the *encoding* of the input

sequence and is used as the initial hidden state of the decoder RNN.

This can be seen as *conditioning* the decoder RNN on the encoded input.

To generate the first target vector, the decoder is fed the \\(\text{BOS}\\)

vector, illustrated as \\(\mathbf{y}_0\\) in the design above. The target

vector of the RNN is then further mapped to the logit vector

\\(\mathbf{l}_1\\) by means of the *LM Head* feed-forward layer to define

the conditional distribution of the first target vector as explained

above:

$$ p_{\theta_{dec}}(\mathbf{y} | \text{BOS}, \mathbf{c}). $$

The word \\(\text{Ich}\\) is sampled (shown by the grey arrow, connecting

\\(\mathbf{l}_1\\) and \\(\mathbf{y}_1\\)) and consequently the second target

vector can be sampled:

$$ \text{will} \sim p_{\theta_{dec}}(\mathbf{y} | \text{BOS}, \text{Ich}, \mathbf{c}). $$

And so on until at step \\(i=6\\), the \\(\text{EOS}\\) vector is sampled from

\\(\mathbf{l}_6\\) and the decoding is finished. The resulting target

sequence amounts to

\\(\mathbf{Y}_{1:6} = \{\mathbf{y}_1, \ldots, \mathbf{y}_6\}\\), which is

\"Ich will ein Auto kaufen\" in our example above.

To sum it up, an RNN-based encoder-decoder model, represented by

\\(f_{\theta_{\text{enc}}}\\) and \\( p_{\theta_{\text{dec}}} \\) defines

the distribution \\(p(\mathbf{Y}_{1:m} | \mathbf{X}_{1:n})\\) by

factorization:

$$ p_{\theta_{\text{enc}}, \theta_{\text{dec}}}(\mathbf{Y}_{1:m} | \mathbf{X}_{1:n}) = \prod_{i=1}^{m} p_{\theta_{\text{enc}}, \theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{X}_{1:n}) = \prod_{i=1}^{m} p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{c}), \text{ with } \mathbf{c}=f_{\theta_{enc}}(X). $$

During inference, efficient decoding methods can auto-regressively

generate the target sequence \\(\mathbf{Y}_{1:m}\\).

The RNN-based encoder-decoder model took the NLG community by storm. In

2016, Google announced to fully replace its heavily feature engineered

translation service by a single RNN-based encoder-decoder model (see

[here](https://www.oreilly.com/radar/what-machine-learning-means-for-software-development/#:~:text=Machine%20learning%20is%20already%20making,of%20code%20in%20Google%20Translate.)).

Nevertheless, RNN-based encoder-decoder models have two pitfalls. First,

RNNs suffer from the vanishing gradient problem, making it very

difficult to capture long-range dependencies, *cf.* [Hochreiter et al.

(2001)](https://www.bioinf.jku.at/publications/older/ch7.pdf). Second,

the inherent recurrent architecture of RNNs prevents efficient

parallelization when encoding, *cf.* [Vaswani et al.

(2017)](https://arxiv.org/abs/1706.03762).

------------------------------------------------------------------------

\\({}^1\\) The original quote from the paper is \"*Despite their flexibility

and power, DNNs can only be applied to problems whose inputs and targets

can be sensibly encoded with vectors of fixed dimensionality*\", which

is slightly adapted here.

\\({}^2\\) The same holds essentially true for convolutional neural networks

(CNNs). While an input sequence of variable length can be fed into a

CNN, the dimensionality of the target will always be dependent on the

input dimensionality or fixed to a specific value.

\\({}^3\\) At the first step, the hidden state is initialized as a zero

vector and fed to the RNN together with the first input vector

\\(\mathbf{x}_1\\).

\\({}^4\\) A neural network can define a probability distribution over all

words, *i.e.* \\(p(\mathbf{y} | \mathbf{c}, \mathbf{Y}_{0: i-1})\\) as

follows. First, the network defines a mapping from the inputs

\\(\mathbf{c}, \mathbf{Y}_{0: i-1}\\) to an embedded vector representation

\\(\mathbf{y'}\\), which corresponds to the RNN target vector. The embedded

vector representation \\(\mathbf{y'}\\) is then passed to the \"language

model head\" layer, which means that it is multiplied by the *word

embedding matrix*, *i.e.* \\(\mathbf{Y}^{\text{vocab}}\\), so that a score

between \\(\mathbf{y'}\\) and each encoded vector

\\(\mathbf{y} \in \mathbf{Y}^{\text{vocab}}\\) is computed. The resulting

vector is called the logit vector

\\( \mathbf{l} = \mathbf{Y}^{\text{vocab}} \mathbf{y'} \\) and can be

mapped to a probability distribution over all words by applying a

softmax operation:

\\(p(\mathbf{y} | \mathbf{c}) = \text{Softmax}(\mathbf{Y}^{\text{vocab}} \mathbf{y'}) = \text{Softmax}(\mathbf{l})\\).

\\({}^5\\) Beam-search decoding is an example of such a decoding method.

Different decoding methods are out of scope for this notebook. The

reader is advised to refer to this [interactive

notebook](https://huggingface.co/blog/how-to-generate) on decoding

methods.

\\({}^6\\) [Sutskever et al. (2014)](https://arxiv.org/abs/1409.3215)

reverses the order of the input so that in the above example the input

vectors would correspond to \\(\mathbf{x}_1 = \text{car}\\),

\\(\mathbf{x}_2 = \text{a}\\), \\(\mathbf{x}_3 = \text{buy}\\),

\\(\mathbf{x}_4 = \text{to}\\), \\(\mathbf{x}_5 = \text{want}\\),

\\(\mathbf{x}_6 = \text{I}\\) and \\(\mathbf{x}_7 = \text{EOS}\\). The

motivation is to allow for a shorter connection between corresponding

word pairs such as \\(\mathbf{x}_6 = \text{I}\\) and

\\(\mathbf{y}_1 = \text{Ich}\\). The research group emphasizes that the

reversal of the input sequence was a key reason for their model\'s

improved performance on machine translation.

## **Encoder-Decoder**

In 2017, Vaswani et al. introduced the **Transformer** and thereby gave

birth to *transformer-based* encoder-decoder models.

Analogous to RNN-based encoder-decoder models, transformer-based

encoder-decoder models consist of an encoder and a decoder which are

both stacks of *residual attention blocks*. The key innovation of

transformer-based encoder-decoder models is that such residual attention

blocks can process an input sequence \\(\mathbf{X}_{1:n}\\) of variable

length \\(n\\) without exhibiting a recurrent structure. Not relying on a

recurrent structure allows transformer-based encoder-decoders to be

highly parallelizable, which makes the model orders of magnitude more

computationally efficient than RNN-based encoder-decoder models on

modern hardware.

As a reminder, to solve a *sequence-to-sequence* problem, we need to

find a mapping of an input sequence \\(\mathbf{X}_{1:n}\\) to an output

sequence \\(\mathbf{Y}_{1:m}\\) of variable length \\(m\\). Let\'s see how

transformer-based encoder-decoder models are used to find such a

mapping.

Similar to RNN-based encoder-decoder models, the transformer-based

encoder-decoder models define a conditional distribution of target

vectors \\(\mathbf{Y}_{1:n}\\) given an input sequence \\(\mathbf{X}_{1:n}\\):

$$

p_{\theta_{\text{enc}}, \theta_{\text{dec}}}(\mathbf{Y}_{1:m} | \mathbf{X}_{1:n}).

$$

The transformer-based encoder part encodes the input sequence

\\(\mathbf{X}_{1:n}\\) to a *sequence* of *hidden states*

\\(\mathbf{\overline{X}}_{1:n}\\), thus defining the mapping:

$$ f_{\theta_{\text{enc}}}: \mathbf{X}_{1:n} \to \mathbf{\overline{X}}_{1:n}. $$

The transformer-based decoder part then models the conditional

probability distribution of the target vector sequence

\\(\mathbf{Y}_{1:n}\\) given the sequence of encoded hidden states

\\(\mathbf{\overline{X}}_{1:n}\\):

$$ p_{\theta_{dec}}(\mathbf{Y}_{1:n} | \mathbf{\overline{X}}_{1:n}).$$

By Bayes\' rule, this distribution can be factorized to a product of

conditional probability distribution of the target vector \\(\mathbf{y}_i\\)

given the encoded hidden states \\(\mathbf{\overline{X}}_{1:n}\\) and all

previous target vectors \\(\mathbf{Y}_{0:i-1}\\):

$$

p_{\theta_{dec}}(\mathbf{Y}_{1:n} | \mathbf{\overline{X}}_{1:n}) = \prod_{i=1}^{n} p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n}). $$

The transformer-based decoder hereby maps the sequence of encoded hidden

states \\(\mathbf{\overline{X}}_{1:n}\\) and all previous target vectors

\\(\mathbf{Y}_{0:i-1}\\) to the *logit* vector \\(\mathbf{l}_i\\). The logit

vector \\(\mathbf{l}_i\\) is then processed by the *softmax* operation to

define the conditional distribution

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n})\\),

just as it is done for RNN-based decoders. However, in contrast to

RNN-based decoders, the distribution of the target vector \\(\mathbf{y}_i\\)

is *explicitly* (or directly) conditioned on all previous target vectors

\\(\mathbf{y}_0, \ldots, \mathbf{y}_{i-1}\\) as we will see later in more

detail. The 0th target vector \\(\mathbf{y}_0\\) is hereby represented by a

special \"begin-of-sentence\" \\(\text{BOS}\\) vector.

Having defined the conditional distribution

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n})\\),

we can now *auto-regressively* generate the output and thus define a

mapping of an input sequence \\(\mathbf{X}_{1:n}\\) to an output sequence

\\(\mathbf{Y}_{1:m}\\) at inference.

Let\'s visualize the complete process of *auto-regressive* generation of

*transformer-based* encoder-decoder models.

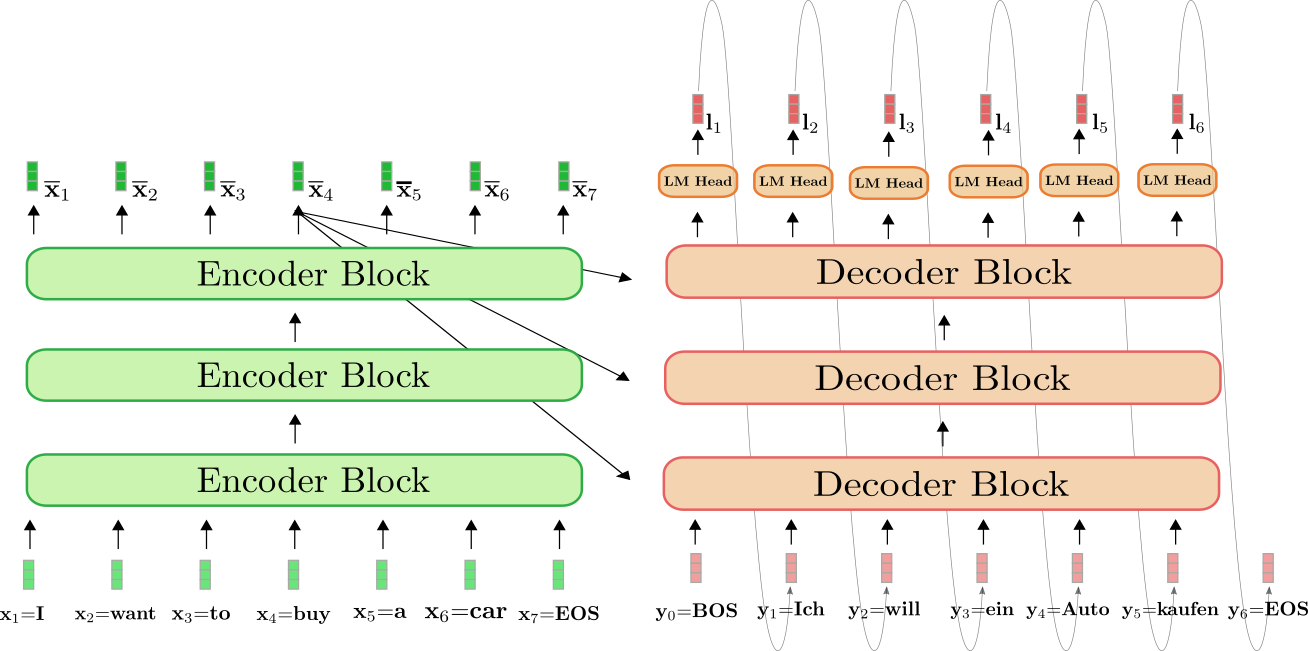

The transformer-based encoder is colored in green and the

transformer-based decoder is colored in red. As in the previous section,

we show how the English sentence \"I want to buy a car\", represented by

\\(\mathbf{x}_1 = \text{I}\\), \\(\mathbf{x}_2 = \text{want}\\),

\\(\mathbf{x}_3 = \text{to}\\), \\(\mathbf{x}_4 = \text{buy}\\),

\\(\mathbf{x}_5 = \text{a}\\), \\(\mathbf{x}_6 = \text{car}\\), and

\\(\mathbf{x}_7 = \text{EOS}\\) is translated into German: \"Ich will ein

Auto kaufen\" defined as \\(\mathbf{y}_0 = \text{BOS}\\),

\\(\mathbf{y}_1 = \text{Ich}\\), \\(\mathbf{y}_2 = \text{will}\\),

\\(\mathbf{y}_3 = \text{ein}\\),

\\(\mathbf{y}_4 = \text{Auto}, \mathbf{y}_5 = \text{kaufen}\\), and

\\(\mathbf{y}_6=\text{EOS}\\).

To begin with, the encoder processes the complete input sequence

\\(\mathbf{X}_{1:7}\\) = \"I want to buy a car\" (represented by the light

green vectors) to a contextualized encoded sequence

\\(\mathbf{\overline{X}}_{1:7}\\). *E.g.* \\(\mathbf{\overline{x}}_4\\) defines

an encoding that depends not only on the input \\(\mathbf{x}_4\\) = \"buy\",

but also on all other words \"I\", \"want\", \"to\", \"a\", \"car\" and

\"EOS\", *i.e.* the context.

Next, the input encoding \\(\mathbf{\overline{X}}_{1:7}\\) together with the

BOS vector, *i.e.* \\(\mathbf{y}_0\\), is fed to the decoder. The decoder

processes the inputs \\(\mathbf{\overline{X}}_{1:7}\\) and \\(\mathbf{y}_0\\) to

the first logit \\(\mathbf{l}_1\\) (shown in darker red) to define the

conditional distribution of the first target vector \\(\mathbf{y}_1\\):

$$ p_{\theta_{enc, dec}}(\mathbf{y} | \mathbf{y}_0, \mathbf{X}_{1:7}) = p_{\theta_{enc, dec}}(\mathbf{y} | \text{BOS}, \text{I want to buy a car EOS}) = p_{\theta_{dec}}(\mathbf{y} | \text{BOS}, \mathbf{\overline{X}}_{1:7}). $$

Next, the first target vector \\(\mathbf{y}_1\\) = \\(\text{Ich}\\) is sampled

from the distribution (represented by the grey arrows) and can now be

fed to the decoder again. The decoder now processes both \\(\mathbf{y}_0\\)

= \"BOS\" and \\(\mathbf{y}_1\\) = \"Ich\" to define the conditional

distribution of the second target vector \\(\mathbf{y}_2\\):

$$ p_{\theta_{dec}}(\mathbf{y} | \text{BOS Ich}, \mathbf{\overline{X}}_{1:7}). $$

We can sample again and produce the target vector \\(\mathbf{y}_2\\) =

\"will\". We continue in auto-regressive fashion until at step 6 the EOS

vector is sampled from the conditional distribution:

$$ \text{EOS} \sim p_{\theta_{dec}}(\mathbf{y} | \text{BOS Ich will ein Auto kaufen}, \mathbf{\overline{X}}_{1:7}). $$

And so on in auto-regressive fashion.

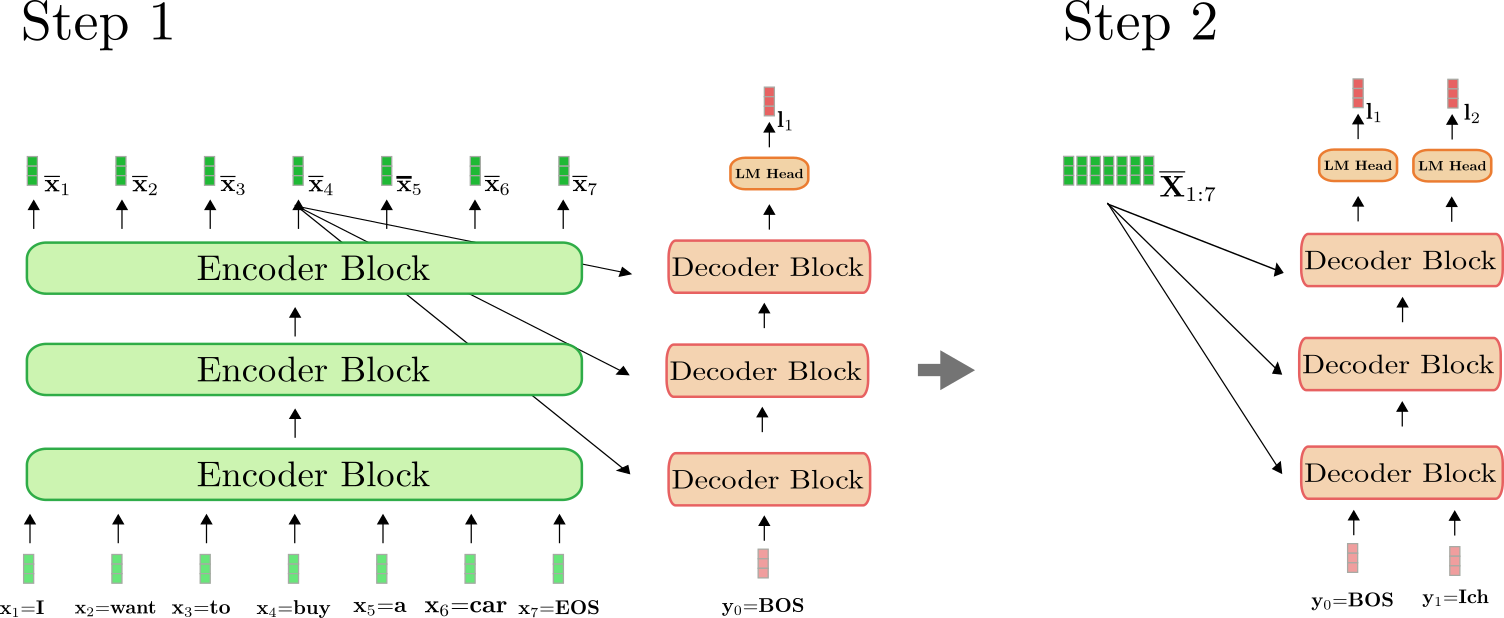

It is important to understand that the encoder is only used in the first

forward pass to map \\(\mathbf{X}_{1:n}\\) to \\(\mathbf{\overline{X}}_{1:n}\\).

As of the second forward pass, the decoder can directly make use of the

previously calculated encoding \\(\mathbf{\overline{X}}_{1:n}\\). For

clarity, let\'s illustrate the first and the second forward pass for our

example above.

As can be seen, only in step \\(i=1\\) do we have to encode \"I want to buy

a car EOS\" to \\(\mathbf{\overline{X}}_{1:7}\\). At step \\(i=2\\), the

contextualized encodings of \"I want to buy a car EOS\" are simply

reused by the decoder.

In 🤗Transformers, this auto-regressive generation is done under-the-hood

when calling the `.generate()` method. Let\'s use one of our translation

models to see this in action.

```python

from transformers import MarianMTModel, MarianTokenizer

tokenizer = MarianTokenizer.from_pretrained("Helsinki-NLP/opus-mt-en-de")

model = MarianMTModel.from_pretrained("Helsinki-NLP/opus-mt-en-de")

# create ids of encoded input vectors

input_ids = tokenizer("I want to buy a car", return_tensors="pt").input_ids

# translate example

output_ids = model.generate(input_ids)[0]

# decode and print

print(tokenizer.decode(output_ids))

```

_Output:_

```

<pad> Ich will ein Auto kaufen

```

Calling `.generate()` does many things under-the-hood. First, it passes

the `input_ids` to the encoder. Second, it passes a pre-defined token, which is the \\(\text{<pad>}\\) symbol in the case of

`MarianMTModel` along with the encoded `input_ids` to the decoder.

Third, it applies the beam search decoding mechanism to

auto-regressively sample the next output word of the *last* decoder

output \\({}^1\\). For more detail on how beam search decoding works, one is

advised to read [this](https://huggingface.co/blog/how-to-generate) blog

post.

In the Appendix, we have included a code snippet that shows how a simple

generation method can be implemented \"from scratch\". To fully

understand how *auto-regressive* generation works under-the-hood, it is

highly recommended to read the Appendix.

To sum it up:

- The transformer-based encoder defines a mapping from the input

sequence \\(\mathbf{X}_{1:n}\\) to a contextualized encoding sequence

\\(\mathbf{\overline{X}}_{1:n}\\).

- The transformer-based decoder defines the conditional distribution

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n})\\).

- Given an appropriate decoding mechanism, the output sequence

\\(\mathbf{Y}_{1:m}\\) can auto-regressively be sampled from

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n}), \forall i \in \{1, \ldots, m\}\\).

Great, now that we have gotten a general overview of how

*transformer-based* encoder-decoder models work, we can dive deeper into

both the encoder and decoder part of the model. More specifically, we

will see exactly how the encoder makes use of the self-attention layer

to yield a sequence of context-dependent vector encodings and how

self-attention layers allow for efficient parallelization. Then, we will

explain in detail how the self-attention layer works in the decoder

model and how the decoder is conditioned on the encoder\'s output with

*cross-attention* layers to define the conditional distribution

\\(p_{\theta_{\text{dec}}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n})\\).

Along, the way it will become obvious how transformer-based

encoder-decoder models solve the long-range dependencies problem of

RNN-based encoder-decoder models.

------------------------------------------------------------------------

\\({}^1\\) In the case of `"Helsinki-NLP/opus-mt-en-de"`, the decoding

parameters can be accessed

[here](https://s3.amazonaws.com/models.huggingface.co/bert/Helsinki-NLP/opus-mt-en-de/config.json),

where we can see that model applies beam search with `num_beams=6`.

## **Encoder**

As mentioned in the previous section, the *transformer-based* encoder

maps the input sequence to a contextualized encoding sequence:

$$ f_{\theta_{\text{enc}}}: \mathbf{X}_{1:n} \to \mathbf{\overline{X}}_{1:n}. $$

Taking a closer look at the architecture, the transformer-based encoder

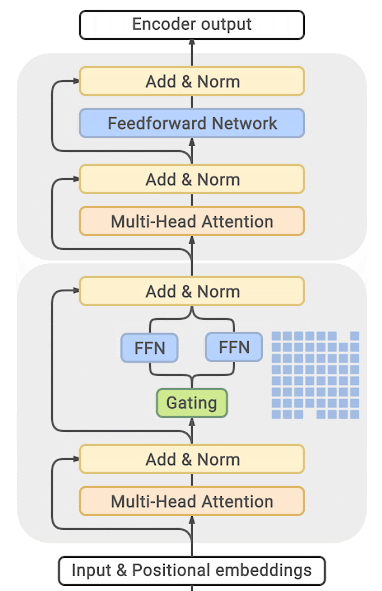

is a stack of residual _encoder blocks_. Each encoder block consists of

a **bi-directional** self-attention layer, followed by two feed-forward

layers. For simplicity, we disregard the normalization layers in this

notebook. Also, we will not further discuss the role of the two

feed-forward layers, but simply see it as a final vector-to-vector

mapping required in each encoder block \\({}^1\\). The bi-directional

self-attention layer puts each input vector

\\(\mathbf{x'}_j, \forall j \in \{1, \ldots, n\}\\) into relation with all

input vectors \\(\mathbf{x'}_1, \ldots, \mathbf{x'}_n\\) and by doing so

transforms the input vector \\(\mathbf{x'}_j\\) to a more \"refined\"

contextual representation of itself, defined as \\(\mathbf{x''}_j\\).

Thereby, the first encoder block transforms each input vector of the

input sequence \\(\mathbf{X}_{1:n}\\) (shown in light green below) from a

*context-independent* vector representation to a *context-dependent*

vector representation, and the following encoder blocks further refine

this contextual representation until the last encoder block outputs the

final contextual encoding \\(\mathbf{\overline{X}}_{1:n}\\) (shown in darker

green below).

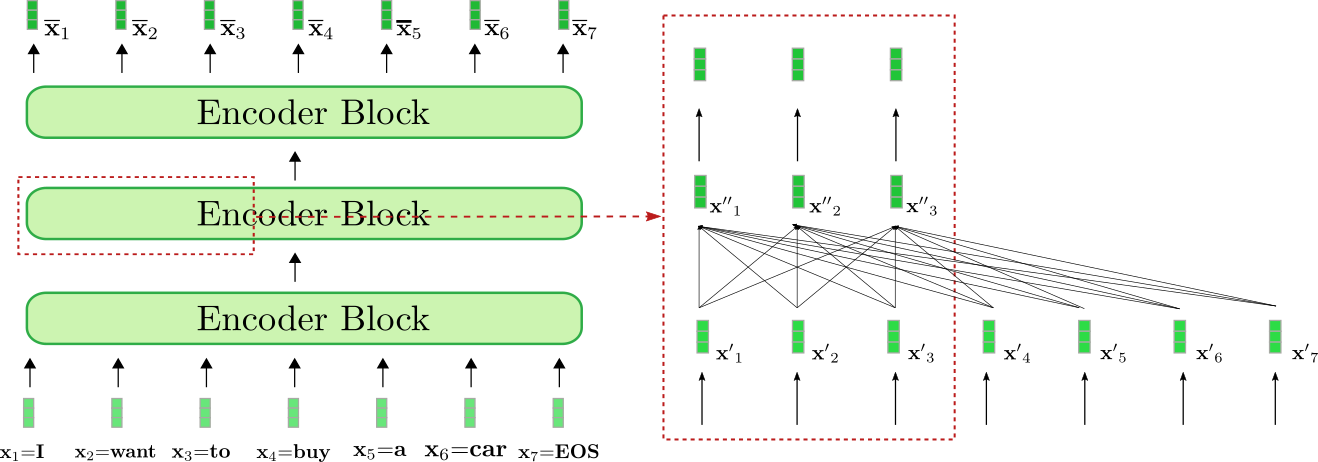

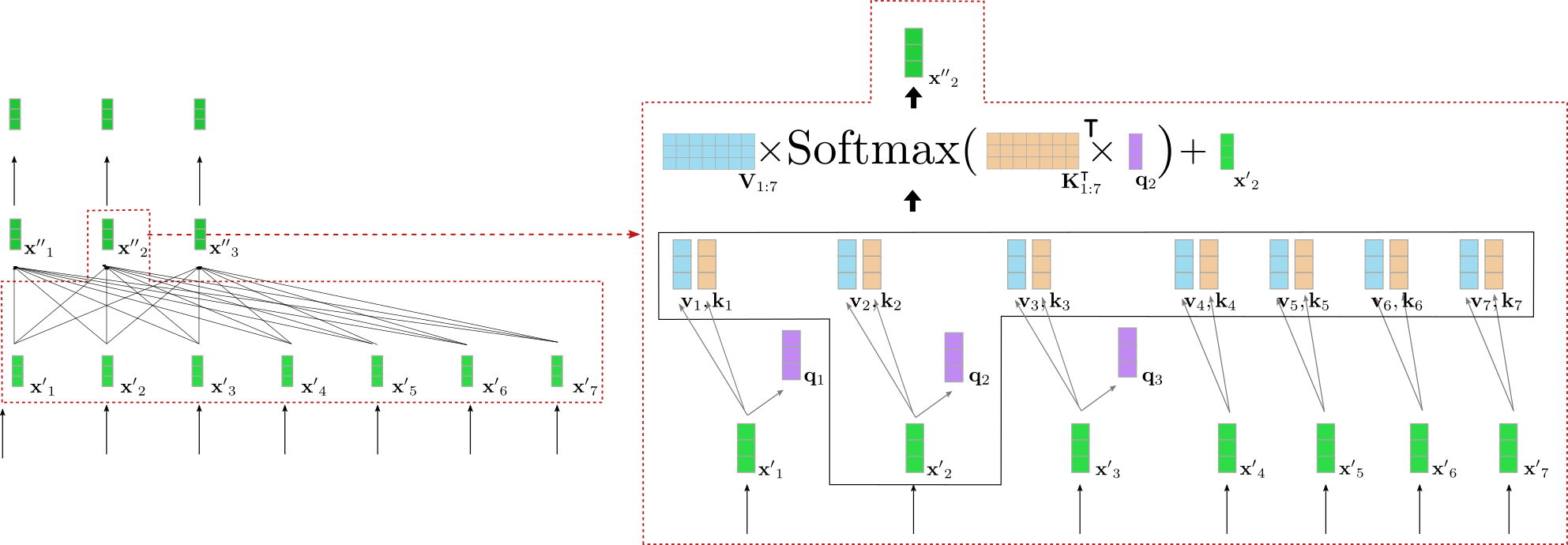

Let\'s visualize how the encoder processes the input sequence \"I want

to buy a car EOS\" to a contextualized encoding sequence. Similar to

RNN-based encoders, transformer-based encoders also add a special

\"end-of-sequence\" input vector to the input sequence to hint to the

model that the input vector sequence is finished \\({}^2\\).

Our exemplary *transformer-based* encoder is composed of three encoder

blocks, whereas the second encoder block is shown in more detail in the

red box on the right for the first three input vectors

\\(\mathbf{x}_1, \mathbf{x}_2 and \mathbf{x}_3\\). The bi-directional

self-attention mechanism is illustrated by the fully-connected graph in

the lower part of the red box and the two feed-forward layers are shown

in the upper part of the red box. As stated before, we will focus only

on the bi-directional self-attention mechanism.

As can be seen each output vector of the self-attention layer

\\(\mathbf{x''}_i, \forall i \in \{1, \ldots, 7\}\\) depends *directly* on

*all* input vectors \\(\mathbf{x'}_1, \ldots, \mathbf{x'}_7\\). This means,

*e.g.* that the input vector representation of the word \"want\", *i.e.*

\\(\mathbf{x'}_2\\), is put into direct relation with the word \"buy\",

*i.e.* \\(\mathbf{x'}_4\\), but also with the word \"I\",*i.e.*

\\(\mathbf{x'}_1\\). The output vector representation of \"want\", *i.e.*

\\(\mathbf{x''}_2\\), thus represents a more refined contextual

representation for the word \"want\".

Let\'s take a deeper look at how bi-directional self-attention works.

Each input vector \\(\mathbf{x'}_i\\) of an input sequence

\\(\mathbf{X'}_{1:n}\\) of an encoder block is projected to a key vector

\\(\mathbf{k}_i\\), value vector \\(\mathbf{v}_i\\) and query vector

\\(\mathbf{q}_i\\) (shown in orange, blue, and purple respectively below)

through three trainable weight matrices

\\(\mathbf{W}_q, \mathbf{W}_v, \mathbf{W}_k\\):

$$ \mathbf{q}_i = \mathbf{W}_q \mathbf{x'}_i,$$

$$ \mathbf{v}_i = \mathbf{W}_v \mathbf{x'}_i,$$

$$ \mathbf{k}_i = \mathbf{W}_k \mathbf{x'}_i, $$

$$ \forall i \in \{1, \ldots n \}.$$

Note, that the **same** weight matrices are applied to each input vector

\\(\mathbf{x}_i, \forall i \in \{i, \ldots, n\}\\). After projecting each

input vector \\(\mathbf{x}_i\\) to a query, key, and value vector, each

query vector \\(\mathbf{q}_j, \forall j \in \{1, \ldots, n\}\\) is compared

to all key vectors \\(\mathbf{k}_1, \ldots, \mathbf{k}_n\\). The more

similar one of the key vectors \\(\mathbf{k}_1, \ldots \mathbf{k}_n\\) is to

a query vector \\(\mathbf{q}_j\\), the more important is the corresponding

value vector \\(\mathbf{v}_j\\) for the output vector \\(\mathbf{x''}_j\\). More

specifically, an output vector \\(\mathbf{x''}_j\\) is defined as the

weighted sum of all value vectors \\(\mathbf{v}_1, \ldots, \mathbf{v}_n\\)

plus the input vector \\(\mathbf{x'}_j\\). Thereby, the weights are

proportional to the cosine similarity between \\(\mathbf{q}_j\\) and the

respective key vectors \\(\mathbf{k}_1, \ldots, \mathbf{k}_n\\), which is

mathematically expressed by

\\(\textbf{Softmax}(\mathbf{K}_{1:n}^\intercal \mathbf{q}_j)\\) as

illustrated in the equation below. For a complete description of the

self-attention layer, the reader is advised to take a look at

[this](http://jalammar.github.io/illustrated-transformer/) blog post or

the original [paper](https://arxiv.org/abs/1706.03762).

Alright, this sounds quite complicated. Let\'s illustrate the

bi-directional self-attention layer for one of the query vectors of our

example above. For simplicity, it is assumed that our exemplary

*transformer-based* decoder uses only a single attention head

`config.num_heads = 1` and that no normalization is applied.

On the left, the previously illustrated second encoder block is shown

again and on the right, an in detail visualization of the bi-directional

self-attention mechanism is given for the second input vector

\\(\mathbf{x'}_2\\) that corresponds to the input word \"want\". At first

all input vectors \\(\mathbf{x'}_1, \ldots, \mathbf{x'}_7\\) are projected

to their respective query vectors \\(\mathbf{q}_1, \ldots, \mathbf{q}_7\\)

(only the first three query vectors are shown in purple above), value

vectors \\(\mathbf{v}_1, \ldots, \mathbf{v}_7\\) (shown in blue), and key

vectors \\(\mathbf{k}_1, \ldots, \mathbf{k}_7\\) (shown in orange). The

query vector \\(\mathbf{q}_2\\) is then multiplied by the transpose of all

key vectors, *i.e.* \\(\mathbf{K}_{1:7}^{\intercal}\\) followed by the

softmax operation to yield the _self-attention weights_. The

self-attention weights are finally multiplied by the respective value

vectors and the input vector \\(\mathbf{x'}_2\\) is added to output the

\"refined\" representation of the word \"want\", *i.e.* \\(\mathbf{x''}_2\\)

(shown in dark green on the right). The whole equation is illustrated in

the upper part of the box on the right. The multiplication of

\\(\mathbf{K}_{1:7}^{\intercal}\\) and \\(\mathbf{q}_2\\) thereby makes it

possible to compare the vector representation of \"want\" to all other

input vector representations \"I\", \"to\", \"buy\", \"a\", \"car\",

\"EOS\" so that the self-attention weights mirror the importance each of

the other input vector representations

\\(\mathbf{x'}_j \text{, with } j \ne 2\\) for the refined representation

\\(\mathbf{x''}_2\\) of the word \"want\".

To further understand the implications of the bi-directional

self-attention layer, let\'s assume the following sentence is processed:

\"*The house is beautiful and well located in the middle of the city

where it is easily accessible by public transport*\". The word \"it\"

refers to \"house\", which is 12 \"positions away\". In

transformer-based encoders, the bi-directional self-attention layer

performs a single mathematical operation to put the input vector of

\"house\" into relation with the input vector of \"it\" (compare to the

first illustration of this section). In contrast, in an RNN-based

encoder, a word that is 12 \"positions away\", would require at least 12

mathematical operations meaning that in an RNN-based encoder a linear

number of mathematical operations are required. This makes it much

harder for an RNN-based encoder to model long-range contextual

representations. Also, it becomes clear that a transformer-based encoder

is much less prone to lose important information than an RNN-based

encoder-decoder model because the sequence length of the encoding is

kept the same, *i.e.*

\\(\textbf{len}(\mathbf{X}_{1:n}) = \textbf{len}(\mathbf{\overline{X}}_{1:n}) = n\\),

while an RNN compresses the length from

\\(*\textbf{len}((\mathbf{X}_{1:n}) = n\\) to just

\\(\textbf{len}(\mathbf{c}) = 1\\), which makes it very difficult for RNNs

to effectively encode long-range dependencies between input words.

In addition to making long-range dependencies more easily learnable, we

can see that the Transformer architecture is able to process text in

parallel.Mathematically, this can easily be shown by writing the

self-attention formula as a product of query, key, and value matrices:

$$\mathbf{X''}_{1:n} = \mathbf{V}_{1:n} \text{Softmax}(\mathbf{Q}_{1:n}^\intercal \mathbf{K}_{1:n}) + \mathbf{X'}_{1:n}. $$

The output \\(\mathbf{X''}_{1:n} = \mathbf{x''}_1, \ldots, \mathbf{x''}_n\\)

is computed via a series of matrix multiplications and a softmax

operation, which can be parallelized effectively. Note, that in an

RNN-based encoder model, the computation of the hidden state

\\(\mathbf{c}\\) has to be done sequentially: Compute hidden state of the

first input vector \\(\mathbf{x}_1\\), then compute the hidden state of the

second input vector that depends on the hidden state of the first hidden

vector, etc. The sequential nature of RNNs prevents effective

parallelization and makes them much more inefficient compared to

transformer-based encoder models on modern GPU hardware.

Great, now we should have a better understanding of a) how

transformer-based encoder models effectively model long-range contextual

representations and b) how they efficiently process long sequences of

input vectors.

Now, let\'s code up a short example of the encoder part of our

`MarianMT` encoder-decoder models to verify that the explained theory

holds in practice.

------------------------------------------------------------------------

\\({}^1\\) An in-detail explanation of the role the feed-forward layers play

in transformer-based models is out-of-scope for this notebook. It is

argued in [Yun et. al, (2017)](https://arxiv.org/pdf/1912.10077.pdf)

that feed-forward layers are crucial to map each contextual vector

\\(\mathbf{x'}_i\\) individually to the desired output space, which the

_self-attention_ layer does not manage to do on its own. It should be

noted here, that each output token \\(\mathbf{x'}\\) is processed by the

same feed-forward layer. For more detail, the reader is advised to read

the paper.

\\({}^2\\) However, the EOS input vector does not have to be appended to the

input sequence, but has been shown to improve performance in many cases.

In contrast to the _0th_ \\(\text{BOS}\\) target vector of the

transformer-based decoder is required as a starting input vector to

predict a first target vector.

```python

from transformers import MarianMTModel, MarianTokenizer

import torch

tokenizer = MarianTokenizer.from_pretrained("Helsinki-NLP/opus-mt-en-de")

model = MarianMTModel.from_pretrained("Helsinki-NLP/opus-mt-en-de")

embeddings = model.get_input_embeddings()

# create ids of encoded input vectors

input_ids = tokenizer("I want to buy a car", return_tensors="pt").input_ids

# pass input_ids to encoder

encoder_hidden_states = model.base_model.encoder(input_ids, return_dict=True).last_hidden_state

# change the input slightly and pass to encoder

input_ids_perturbed = tokenizer("I want to buy a house", return_tensors="pt").input_ids

encoder_hidden_states_perturbed = model.base_model.encoder(input_ids_perturbed, return_dict=True).last_hidden_state

# compare shape and encoding of first vector

print(f"Length of input embeddings {embeddings(input_ids).shape[1]}. Length of encoder_hidden_states {encoder_hidden_states.shape[1]}")

# compare values of word embedding of "I" for input_ids and perturbed input_ids

print("Is encoding for `I` equal to its perturbed version?: ", torch.allclose(encoder_hidden_states[0, 0], encoder_hidden_states_perturbed[0, 0], atol=1e-3))

```

_Outputs:_

```

Length of input embeddings 7. Length of encoder_hidden_states 7

Is encoding for `I` equal to its perturbed version?: False

```

We compare the length of the input word embeddings, *i.e.*

`embeddings(input_ids)` corresponding to \\(\mathbf{X}_{1:n}\\), with the

length of the `encoder_hidden_states`, corresponding to

\\(\mathbf{\overline{X}}_{1:n}\\). Also, we have forwarded the word sequence

\"I want to buy a car\" and a slightly perturbated version \"I want to

buy a house\" through the encoder to check if the first output encoding,

corresponding to \"I\", differs when only the last word is changed in

the input sequence.

As expected the output length of the input word embeddings and encoder

output encodings, *i.e.* \\(\textbf{len}(\mathbf{X}_{1:n})\\) and

\\(\textbf{len}(\mathbf{\overline{X}}_{1:n})\\), is equal. Second, it can be

noted that the values of the encoded output vector of

\\(\mathbf{\overline{x}}_1 = \text{"I"}\\) are different when the last word

is changed from \"car\" to \"house\". This however should not come as a

surprise if one has understood bi-directional self-attention.

On a side-note, _autoencoding_ models, such as BERT, have the exact same

architecture as _transformer-based_ encoder models. _Autoencoding_

models leverage this architecture for massive self-supervised

pre-training on open-domain text data so that they can map any word

sequence to a deep bi-directional representation. In [Devlin et al.

(2018)](https://arxiv.org/abs/1810.04805), the authors show that a

pre-trained BERT model with a single task-specific classification layer

on top can achieve SOTA results on eleven NLP tasks. All *autoencoding*

models of 🤗Transformers can be found

[here](https://huggingface.co/transformers/model_summary.html#autoencoding-models).

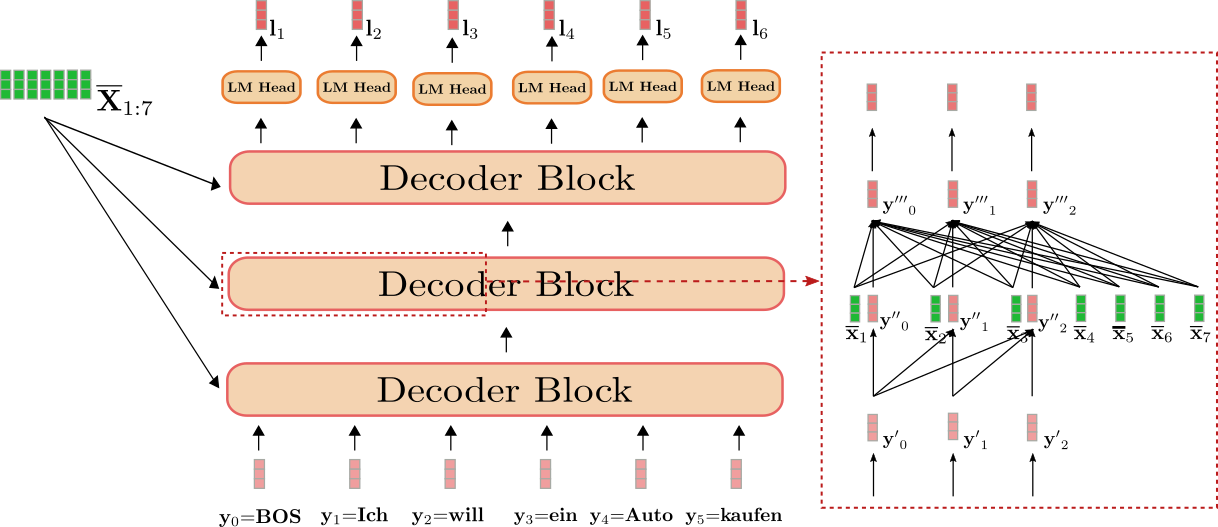

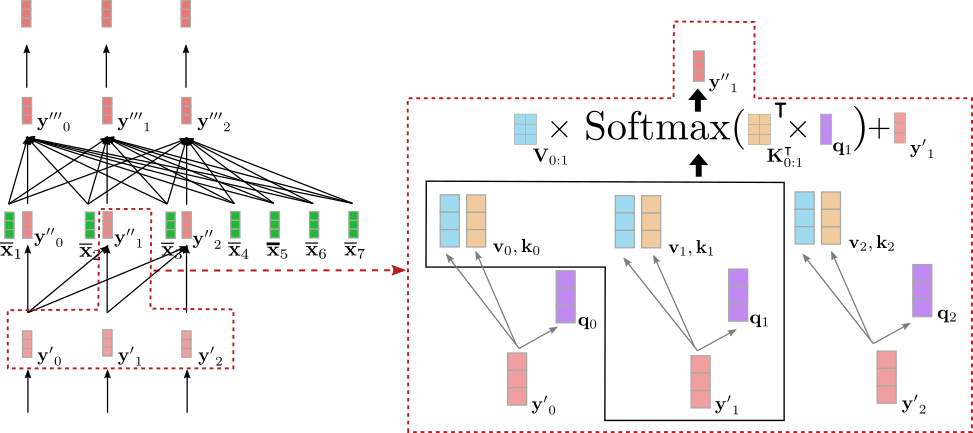

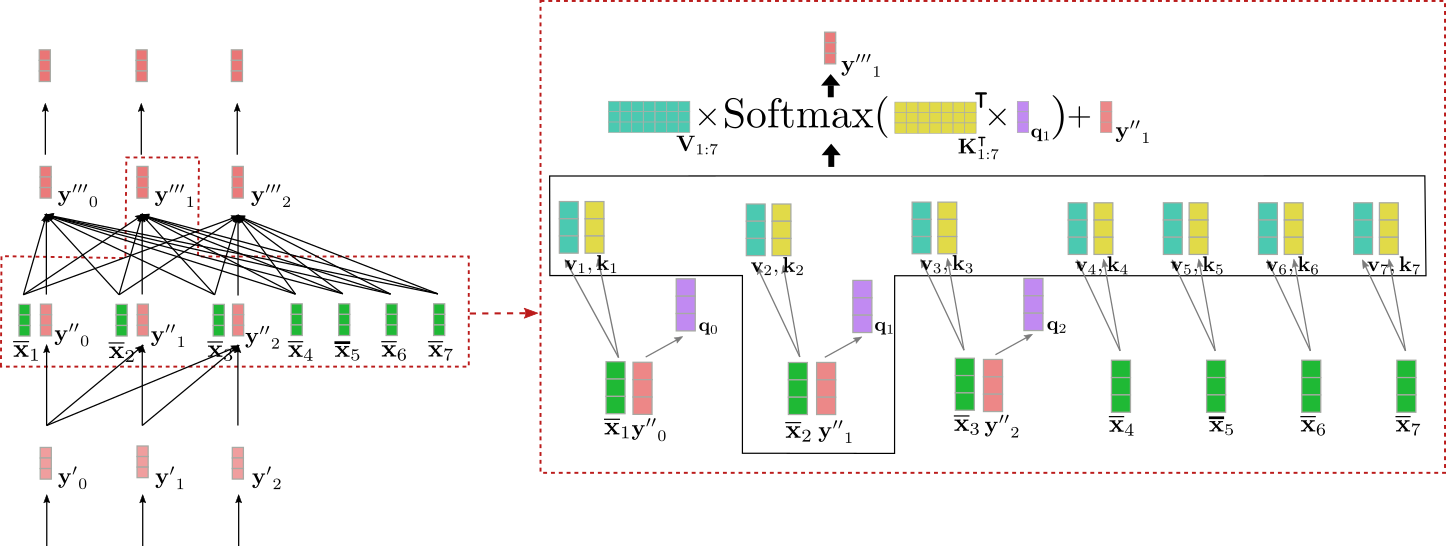

## **Decoder**

As mentioned in the *Encoder-Decoder* section, the *transformer-based*

decoder defines the conditional probability distribution of a target

sequence given the contextualized encoding sequence:

$$ p_{\theta_{dec}}(\mathbf{Y}_{1: m} | \mathbf{\overline{X}}_{1:n}), $$

which by Bayes\' rule can be decomposed into a product of conditional

distributions of the next target vector given the contextualized

encoding sequence and all previous target vectors:

$$ p_{\theta_{dec}}(\mathbf{Y}_{1:m} | \mathbf{\overline{X}}_{1:n}) = \prod_{i=1}^{m} p_{\theta_{dec}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n}). $$

Let\'s first understand how the transformer-based decoder defines a

probability distribution. The transformer-based decoder is a stack of

*decoder blocks* followed by a dense layer, the \"LM head\". The stack

of decoder blocks maps the contextualized encoding sequence

\\(\mathbf{\overline{X}}_{1:n}\\) and a target vector sequence prepended by

the \\(\text{BOS}\\) vector and cut to the last target vector, *i.e.*

\\(\mathbf{Y}_{0:i-1}\\), to an encoded sequence of target vectors

\\(\mathbf{\overline{Y}}_{0: i-1}\\). Then, the \"LM head\" maps the encoded

sequence of target vectors \\(\mathbf{\overline{Y}}_{0: i-1}\\) to a

sequence of logit vectors

\\(\mathbf{L}_{1:n} = \mathbf{l}_1, \ldots, \mathbf{l}_n\\), whereas the

dimensionality of each logit vector \\(\mathbf{l}_i\\) corresponds to the

size of the vocabulary. This way, for each \\(i \in \{1, \ldots, n\}\\) a

probability distribution over the whole vocabulary can be obtained by

applying a softmax operation on \\(\mathbf{l}_i\\). These distributions

define the conditional distribution:

$$p_{\theta_{dec}}(\mathbf{y}_i | \mathbf{Y}_{0: i-1}, \mathbf{\overline{X}}_{1:n}), \forall i \in \{1, \ldots, n\},$$

respectively. The \"LM head\" is often tied to the transpose of the word

embedding matrix, *i.e.*

\\(\mathbf{W}_{\text{emb}}^{\intercal} = \left[\mathbf{y}^1, \ldots, \mathbf{y}^{\text{vocab}}\right]^{\intercal}\\)

\\({}^1\\). Intuitively this means that for all \\(i \in \{0, \ldots, n - 1\}\\)

the \"LM Head\" layer compares the encoded output vector

\\(\mathbf{\overline{y}}_i\\) to all word embeddings in the vocabulary

\\(\mathbf{y}^1, \ldots, \mathbf{y}^{\text{vocab}}\\) so that the logit

vector \\(\mathbf{l}_{i+1}\\) represents the similarity scores between the

encoded output vector and each word embedding. The softmax operation

simply transformers the similarity scores to a probability distribution.

For each \\(i \in \{1, \ldots, n\}\\), the following equations hold:

$$ p_{\theta_{dec}}(\mathbf{y} | \mathbf{\overline{X}}_{1:n}, \mathbf{Y}_{0:i-1})$$

$$ = \text{Softmax}(f_{\theta_{\text{dec}}}(\mathbf{\overline{X}}_{1:n}, \mathbf{Y}_{0:i-1}))$$

$$ = \text{Softmax}(\mathbf{W}_{\text{emb}}^{\intercal} \mathbf{\overline{y}}_{i-1})$$