text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

Vision Transformers 图像分类

相关空间:https://huggingface.co/spaces/abidlabs/vision-transformer

标签:VISION, TRANSFORMERS, HUB

## 简介

图像分类是计算机视觉中的重要任务。构建更好的分类器以确定图像中存在的对象是当前研究的热点领域,因为它在从人脸识别到制造质量控制等方面都有应用。

最先进的图像分类器基于 _transformers_ 架构,该架构最初在自然语言处理任务中很受欢迎。这种架构通常被称为 vision transformers (ViT)。这些模型非常适合与 Gradio 的*图像*输入组件一起使用,因此在本教程中,我们将构建一个使用 Gradio 进行图像分类的 Web 演示。我们只需用**一行 Python 代码**即可构建整个 Web 应用程序,其效果如下(试用一下示例之一!):

<iframe src="https://abidlabs-vision-transformer.hf.space" frameBorder="0" height="660" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

让我们开始吧!

### 先决条件

确保您已经[安装](/getting_started)了 `gradio` Python 包。

## 步骤 1 - 选择 Vision 图像分类模型

首先,我们需要一个图像分类模型。在本教程中,我们将使用[Hugging Face Model Hub](https://huggingface.co/models?pipeline_tag=image-classification)上的一个模型。该 Hub 包含数千个模型,涵盖了多种不同的机器学习任务。

在左侧边栏中展开 Tasks 类别,并选择我们感兴趣的“Image Classification”作为我们的任务。然后,您将看到 Hub 上为图像分类设计的所有模型。

在撰写时,最受欢迎的模型是 `google/vit-base-patch16-224`,该模型在分辨率为 224x224 像素的 ImageNet 图像上进行了训练。我们将在演示中使用此模型。

## 步骤 2 - 使用 Gradio 加载 Vision Transformer 模型

当使用 Hugging Face Hub 上的模型时,我们无需为演示定义输入或输出组件。同样,我们不需要关心预处理或后处理的细节。所有这些都可以从模型标签中自动推断出来。

除了导入语句外,我们只需要一行代码即可加载并启动演示。

我们使用 `gr.Interface.load()` 方法,并传入包含 `huggingface/` 的模型路径,以指定它来自 Hugging Face Hub。

```python

import gradio as gr

gr.Interface.load(

"huggingface/google/vit-base-patch16-224",

examples=["alligator.jpg", "laptop.jpg"]).launch()

```

请注意,我们添加了一个 `examples` 参数,允许我们使用一些预定义的示例预填充我们的界面。

这将生成以下接口,您可以直接在浏览器中尝试。当您输入图像时,它会自动进行预处理并发送到 Hugging Face Hub API,通过模型处理,并以人类可解释的预测结果返回。尝试上传您自己的图像!

<iframe src="https://abidlabs-vision-transformer.hf.space" frameBorder="0" height="660" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

---

完成!只需一行代码,您就建立了一个图像分类器的 Web 演示。如果您想与他人分享,请在 `launch()` 接口时设置 `share=True`。

| gradio-app/gradio/blob/main/guides/cn/04_integrating-other-frameworks/image-classification-with-vision-transformers.md |

ConsistencyDecoderScheduler

This scheduler is a part of the [`ConsistencyDecoderPipeline`] and was introduced in [DALL-E 3](https://openai.com/dall-e-3).

The original codebase can be found at [openai/consistency_models](https://github.com/openai/consistency_models).

## ConsistencyDecoderScheduler

[[autodoc]] schedulers.scheduling_consistency_decoder.ConsistencyDecoderScheduler | huggingface/diffusers/blob/main/docs/source/en/api/schedulers/consistency_decoder.md |

!--⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# How-to guides

In this section, you will find practical guides to help you achieve a specific goal.

Take a look at these guides to learn how to use huggingface_hub to solve real-world problems:

<div class="mt-10">

<div class="w-full flex flex-col space-y-4 md:space-y-0 md:grid md:grid-cols-3 md:gap-y-4 md:gap-x-5">

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./repository">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Repository

</div><p class="text-gray-700">

How to create a repository on the Hub? How to configure it? How to interact with it?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./download">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Download files

</div><p class="text-gray-700">

How do I download a file from the Hub? How do I download a repository?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./upload">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Upload files

</div><p class="text-gray-700">

How to upload a file or a folder? How to make changes to an existing repository on the Hub?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./search">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Search

</div><p class="text-gray-700">

How to efficiently search through the 200k+ public models, datasets and spaces?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./hf_file_system">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

HfFileSystem

</div><p class="text-gray-700">

How to interact with the Hub through a convenient interface that mimics Python's file interface?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./inference">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Inference

</div><p class="text-gray-700">

How to make predictions using the accelerated Inference API?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./community">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Community Tab

</div><p class="text-gray-700">

How to interact with the Community tab (Discussions and Pull Requests)?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./collections">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Collections

</div><p class="text-gray-700">

How to programmatically build collections?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./manage-cache">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Cache

</div><p class="text-gray-700">

How does the cache-system work? How to benefit from it?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./model-cards">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Model Cards

</div><p class="text-gray-700">

How to create and share Model Cards?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./manage-spaces">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Manage your Space

</div><p class="text-gray-700">

How to manage your Space hardware and configuration?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./integrations">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Integrate a library

</div><p class="text-gray-700">

What does it mean to integrate a library with the Hub? And how to do it?

</p>

</a>

<a class="!no-underline border dark:border-gray-700 p-5 rounded-lg shadow hover:shadow-lg"

href="./webhooks_server">

<div class="w-full text-center bg-gradient-to-br from-indigo-400 to-indigo-500 rounded-lg py-1.5 font-semibold mb-5 text-white text-lg leading-relaxed">

Webhooks server

</div><p class="text-gray-700">

How to create a server to receive Webhooks and deploy it as a Space?

</p>

</a>

</div>

</div>

| huggingface/huggingface_hub/blob/main/docs/source/en/guides/overview.md |

--

title: "Sentiment Analysis on Encrypted Data with Homomorphic Encryption"

thumbnail: /blog/assets/sentiment-analysis-fhe/thumbnail.png

authors:

- user: jfrery-zama

guest: true

---

# Sentiment Analysis on Encrypted Data with Homomorphic Encryption

It is well-known that a sentiment analysis model determines whether a text is positive, negative, or neutral. However, this process typically requires access to unencrypted text, which can pose privacy concerns.

Homomorphic encryption is a type of encryption that allows for computation on encrypted data without needing to decrypt it first. This makes it well-suited for applications where user's personal and potentially sensitive data is at risk (e.g. sentiment analysis of private messages).

This blog post uses the [Concrete-ML library](https://github.com/zama-ai/concrete-ml), allowing data scientists to use machine learning models in fully homomorphic encryption (FHE) settings without any prior knowledge of cryptography. We provide a practical tutorial on how to use the library to build a sentiment analysis model on encrypted data.

The post covers:

- transformers

- how to use transformers with XGBoost to perform sentiment analysis

- how to do the training

- how to use Concrete-ML to turn predictions into predictions over encrypted data

- how to [deploy to the cloud](https://docs.zama.ai/concrete-ml/getting-started/cloud) using a client/server protocol

Last but not least, we’ll finish with a complete demo over [Hugging Face Spaces](https://huggingface.co/spaces) to show this functionality in action.

## Setup the environment

First make sure your pip and setuptools are up to date by running:

```

pip install -U pip setuptools

```

Now we can install all the required libraries for the this blog with the following command.

```

pip install concrete-ml transformers datasets

```

## Using a public dataset

The dataset we use in this notebook can be found [here](https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment).

To represent the text for sentiment analysis, we chose to use a transformer hidden representation as it yields high accuracy for the final model in a very efficient way. For a comparison of this representation set against a more common procedure like the TF-IDF approach, please see this [full notebook](https://github.com/zama-ai/concrete-ml/blob/release/0.4.x/use_case_examples/encrypted_sentiment_analysis/SentimentClassification.ipynb).

We can start by opening the dataset and visualize some statistics.

```python

from datasets import load_datasets

train = load_dataset("osanseviero/twitter-airline-sentiment")["train"].to_pandas()

text_X = train['text']

y = train['airline_sentiment']

y = y.replace(['negative', 'neutral', 'positive'], [0, 1, 2])

pos_ratio = y.value_counts()[2] / y.value_counts().sum()

neg_ratio = y.value_counts()[0] / y.value_counts().sum()

neutral_ratio = y.value_counts()[1] / y.value_counts().sum()

print(f'Proportion of positive examples: {round(pos_ratio * 100, 2)}%')

print(f'Proportion of negative examples: {round(neg_ratio * 100, 2)}%')

print(f'Proportion of neutral examples: {round(neutral_ratio * 100, 2)}%')

```

The output, then, looks like this:

```

Proportion of positive examples: 16.14%

Proportion of negative examples: 62.69%

Proportion of neutral examples: 21.17%

```

The ratio of positive and neutral examples is rather similar, though we have significantly more negative examples. Let’s keep this in mind to select the final evaluation metric.

Now we can split our dataset into training and test sets. We will use a seed for this code to ensure it is perfectly reproducible.

```python

from sklearn.model_selection import train_test_split

text_X_train, text_X_test, y_train, y_test = train_test_split(text_X, y,

test_size=0.1, random_state=42)

```

## Text representation using a transformer

[Transformers](https://en.wikipedia.org/wiki/Transformer_(machine_learning_model)) are neural networks often trained to predict the next words to appear in a text (this task is commonly called self-supervised learning). They can also be fine-tuned on some specific subtasks such that they specialize and get better results on a given problem.

They are powerful tools for all kinds of Natural Language Processing tasks. In fact, we can leverage their representation for any text and feed it to a more FHE-friendly machine-learning model for classification. In this notebook, we will use XGBoost.

We start by importing the requirements for transformers. Here, we use the popular library from [Hugging Face](https://huggingface.co) to get a transformer quickly.

The model we have chosen is a BERT transformer, fine-tuned on the Stanford Sentiment Treebank dataset.

```python

import torch

from transformers import AutoModelForSequenceClassification, AutoTokenizer

device = "cuda:0" if torch.cuda.is_available() else "cpu"

# Load the tokenizer (converts text to tokens)

tokenizer = AutoTokenizer.from_pretrained("cardiffnlp/twitter-roberta-base-sentiment-latest")

# Load the pre-trained model

transformer_model = AutoModelForSequenceClassification.from_pretrained(

"cardiffnlp/twitter-roberta-base-sentiment-latest"

)

```

This should download the model, which is now ready to be used.

Using the hidden representation for some text can be tricky at first, mainly because we could tackle this with many different approaches. Below is the approach we chose.

First, we tokenize the text. Tokenizing means splitting the text into tokens (a sequence of specific characters that can also be words) and replacing each with a number. Then, we send the tokenized text to the transformer model, which outputs a hidden representation (output of the self attention layers which are often used as input to the classification layers) for each word. Finally, we average the representations for each word to get a text-level representation.

The result is a matrix of shape (number of examples, hidden size). The hidden size is the number of dimensions in the hidden representation. For BERT, the hidden size is 768. The hidden representation is a vector of numbers that represents the text that can be used for many different tasks. In this case, we will use it for classification with [XGBoost](https://github.com/dmlc/xgboost) afterwards.

```python

import numpy as np

import tqdm

# Function that transforms a list of texts to their representation

# learned by the transformer.

def text_to_tensor(

list_text_X_train: list,

transformer_model: AutoModelForSequenceClassification,

tokenizer: AutoTokenizer,

device: str,

) -> np.ndarray:

# Tokenize each text in the list one by one

tokenized_text_X_train_split = []

tokenized_text_X_train_split = [

tokenizer.encode(text_x_train, return_tensors="pt")

for text_x_train in list_text_X_train

]

# Send the model to the device

transformer_model = transformer_model.to(device)

output_hidden_states_list = [None] * len(tokenized_text_X_train_split)

for i, tokenized_x in enumerate(tqdm.tqdm(tokenized_text_X_train_split)):

# Pass the tokens through the transformer model and get the hidden states

# Only keep the last hidden layer state for now

output_hidden_states = transformer_model(tokenized_x.to(device), output_hidden_states=True)[

1

][-1]

# Average over the tokens axis to get a representation at the text level.

output_hidden_states = output_hidden_states.mean(dim=1)

output_hidden_states = output_hidden_states.detach().cpu().numpy()

output_hidden_states_list[i] = output_hidden_states

return np.concatenate(output_hidden_states_list, axis=0)

```

```python

# Let's vectorize the text using the transformer

list_text_X_train = text_X_train.tolist()

list_text_X_test = text_X_test.tolist()

X_train_transformer = text_to_tensor(list_text_X_train, transformer_model, tokenizer, device)

X_test_transformer = text_to_tensor(list_text_X_test, transformer_model, tokenizer, device)

```

This transformation of the text (text to transformer representation) would need to be executed on the client machine as the encryption is done over the transformer representation.

## Classifying with XGBoost

Now that we have our training and test sets properly built to train a classifier, next comes the training of our FHE model. Here it will be very straightforward, using a hyper-parameter tuning tool such as GridSearch from scikit-learn.

```python

from concrete.ml.sklearn import XGBClassifier

from sklearn.model_selection import GridSearchCV

# Let's build our model

model = XGBClassifier()

# A gridsearch to find the best parameters

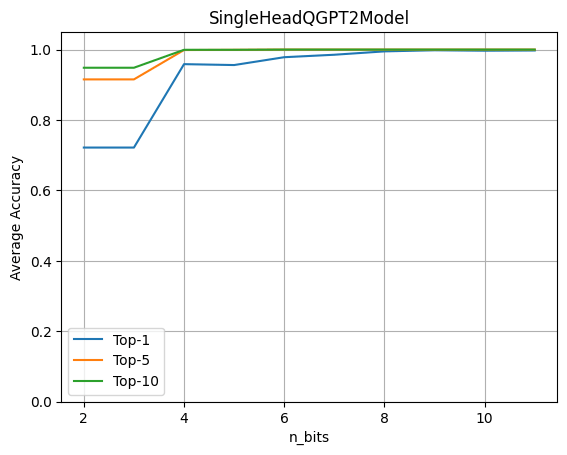

parameters = {

"n_bits": [2, 3],

"max_depth": [1],

"n_estimators": [10, 30, 50],

"n_jobs": [-1],

}

# Now we have a representation for each tweet, we can train a model on these.

grid_search = GridSearchCV(model, parameters, cv=5, n_jobs=1, scoring="accuracy")

grid_search.fit(X_train_transformer, y_train)

# Check the accuracy of the best model

print(f"Best score: {grid_search.best_score_}")

# Check best hyperparameters

print(f"Best parameters: {grid_search.best_params_}")

# Extract best model

best_model = grid_search.best_estimator_

```

The output is as follows:

```

Best score: 0.8378111718275654

Best parameters: {'max_depth': 1, 'n_bits': 3, 'n_estimators': 50, 'n_jobs': -1}

```

Now, let’s see how the model performs on the test set.

```python

from sklearn.metrics import ConfusionMatrixDisplay

# Compute the metrics on the test set

y_pred = best_model.predict(X_test_transformer)

y_proba = best_model.predict_proba(X_test_transformer)

# Compute and plot the confusion matrix

matrix = confusion_matrix(y_test, y_pred)

ConfusionMatrixDisplay(matrix).plot()

# Compute the accuracy

accuracy_transformer_xgboost = np.mean(y_pred == y_test)

print(f"Accuracy: {accuracy_transformer_xgboost:.4f}")

```

With the following output:

```

Accuracy: 0.8504

```

## Predicting over encrypted data

Now let’s predict over encrypted text. The idea here is that we will encrypt the representation given by the transformer rather than the raw text itself. In Concrete-ML, you can do this very quickly by setting the parameter `execute_in_fhe=True` in the predict function. This is just a developer feature (mainly used to check the running time of the FHE model). We will see how we can make this work in a deployment setting a bit further down.

```python

import time

# Compile the model to get the FHE inference engine

# (this may take a few minutes depending on the selected model)

start = time.perf_counter()

best_model.compile(X_train_transformer)

end = time.perf_counter()

print(f"Compilation time: {end - start:.4f} seconds")

# Let's write a custom example and predict in FHE

tested_tweet = ["AirFrance is awesome, almost as much as Zama!"]

X_tested_tweet = text_to_tensor(tested_tweet, transformer_model, tokenizer, device)

clear_proba = best_model.predict_proba(X_tested_tweet)

# Now let's predict with FHE over a single tweet and print the time it takes

start = time.perf_counter()

decrypted_proba = best_model.predict_proba(X_tested_tweet, execute_in_fhe=True)

end = time.perf_counter()

fhe_exec_time = end - start

print(f"FHE inference time: {fhe_exec_time:.4f} seconds")

```

The output becomes:

```

Compilation time: 9.3354 seconds

FHE inference time: 4.4085 seconds

```

A check that the FHE predictions are the same as the clear predictions is also necessary.

```python

print(f"Probabilities from the FHE inference: {decrypted_proba}")

print(f"Probabilities from the clear model: {clear_proba}")

```

This output reads:

```

Probabilities from the FHE inference: [[0.08434131 0.05571389 0.8599448 ]]

Probabilities from the clear model: [[0.08434131 0.05571389 0.8599448 ]]

```

## Deployment

At this point, our model is fully trained and compiled, ready to be deployed. In Concrete-ML, you can use a [deployment API](https://docs.zama.ai/concrete-ml/advanced-topics/client_server) to do this easily:

```python

# Let's save the model to be pushed to a server later

from concrete.ml.deployment import FHEModelDev

fhe_api = FHEModelDev("sentiment_fhe_model", best_model)

fhe_api.save()

```

These few lines are enough to export all the files needed for both the client and the server. You can check out the notebook explaining this deployment API in detail [here](https://github.com/zama-ai/concrete-ml/blob/release/0.4.x/docs/advanced_examples/ClientServer.ipynb).

## Full example in a Hugging Face Space

You can also have a look at the [final application on Hugging Face Space](https://huggingface.co/spaces/zama-fhe/encrypted_sentiment_analysis). The client app was developed with [Gradio](https://gradio.app/) while the server runs with [Uvicorn](https://www.uvicorn.org/) and was developed with [FastAPI](https://fastapi.tiangolo.com/).

The process is as follows:

- User generates a new private/public key

- User types a message that will be encoded, quantized, and encrypted

- Server receives the encrypted data and starts the prediction over encrypted data, using the public evaluation key

- Server sends back the encrypted predictions and the client can decrypt them using his private key

## Conclusion

We have presented a way to leverage the power of transformers where the representation is then used to:

1. train a machine learning model to classify tweets, and

2. predict over encrypted data using this model with FHE.

The final model (Transformer representation + XGboost) has a final accuracy of 85%, which is above the transformer itself with 80% accuracy (please see this [notebook](https://github.com/zama-ai/concrete-ml/blob/release/0.4.x/use_case_examples/encrypted_sentiment_analysis/SentimentClassification.ipynb) for the comparisons).

The FHE execution time per example is 4.4 seconds on a 16 cores cpu.

The files for deployment are used for a sentiment analysis app that allows a client to request sentiment analysis predictions from a server while keeping its data encrypted all along the chain of communication.

[Concrete-ML](https://github.com/zama-ai/concrete-ml) (Don't forget to star us on Github ⭐️💛) allows straightforward ML model building and conversion to the FHE equivalent to be able to predict over encrypted data.

Hope you enjoyed this post and let us know your thoughts/feedback!

And special thanks to [Abubakar Abid](https://huggingface.co/abidlabs) for his previous advice on how to build our first Hugging Face Space!

| huggingface/blog/blob/main/sentiment-analysis-fhe.md |

!---

Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

# Language Modeling

## Language Modeling Training

By running the scripts [`run_clm.py`](https://github.com/huggingface/optimum/blob/main/examples/onnxruntime/training/language-modeling/run_clm.py)

and [`run_mlm.py`](https://github.com/huggingface/optimum/blob/main/examples/onnxruntime/training/language-modeling/run_mlm.py),

we will be able to leverage the [`ONNX Runtime`](https://github.com/microsoft/onnxruntime) accelerator to train the language models from the

[HuggingFace hub](https://huggingface.co/models).

__The following example applies the acceleration features powered by ONNX Runtime.__

### ONNX Runtime Training

The following example trains GPT2 on wikitext-2 with mixed precision (fp16).

```bash

torchrun --nproc_per_node=NUM_GPUS_YOU_HAVE run_clm.py \

--model_name_or_path gpt2 \

--dataset_name wikitext \

--dataset_config_name wikitext-2-raw-v1 \

--do_train \

--output_dir /tmp/test-clm \

--fp16

```

__Note__

> *To enable ONNX Runtime training, your devices need to be equipped with GPU. Install the dependencies either with our prepared*

*[Dockerfiles](https://github.com/huggingface/optimum/blob/main/examples/onnxruntime/training/docker/) or follow the instructions*

*in [`torch_ort`](https://github.com/pytorch/ort/blob/main/torch_ort/docker/README.md).*

> *The inference will use PyTorch by default, if you want to use ONNX Runtime backend instead, add the flag `--inference_with_ort`.*

---

| huggingface/optimum/blob/main/examples/onnxruntime/training/language-modeling/README.md |

Gradio Demo: blocks_speech_text_sentiment

```

!pip install -q gradio torch transformers

```

```

from transformers import pipeline

import gradio as gr

asr = pipeline("automatic-speech-recognition", "facebook/wav2vec2-base-960h")

classifier = pipeline("text-classification")

def speech_to_text(speech):

text = asr(speech)["text"]

return text

def text_to_sentiment(text):

return classifier(text)[0]["label"]

demo = gr.Blocks()

with demo:

audio_file = gr.Audio(type="filepath")

text = gr.Textbox()

label = gr.Label()

b1 = gr.Button("Recognize Speech")

b2 = gr.Button("Classify Sentiment")

b1.click(speech_to_text, inputs=audio_file, outputs=text)

b2.click(text_to_sentiment, inputs=text, outputs=label)

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/blocks_speech_text_sentiment/run.ipynb |

--

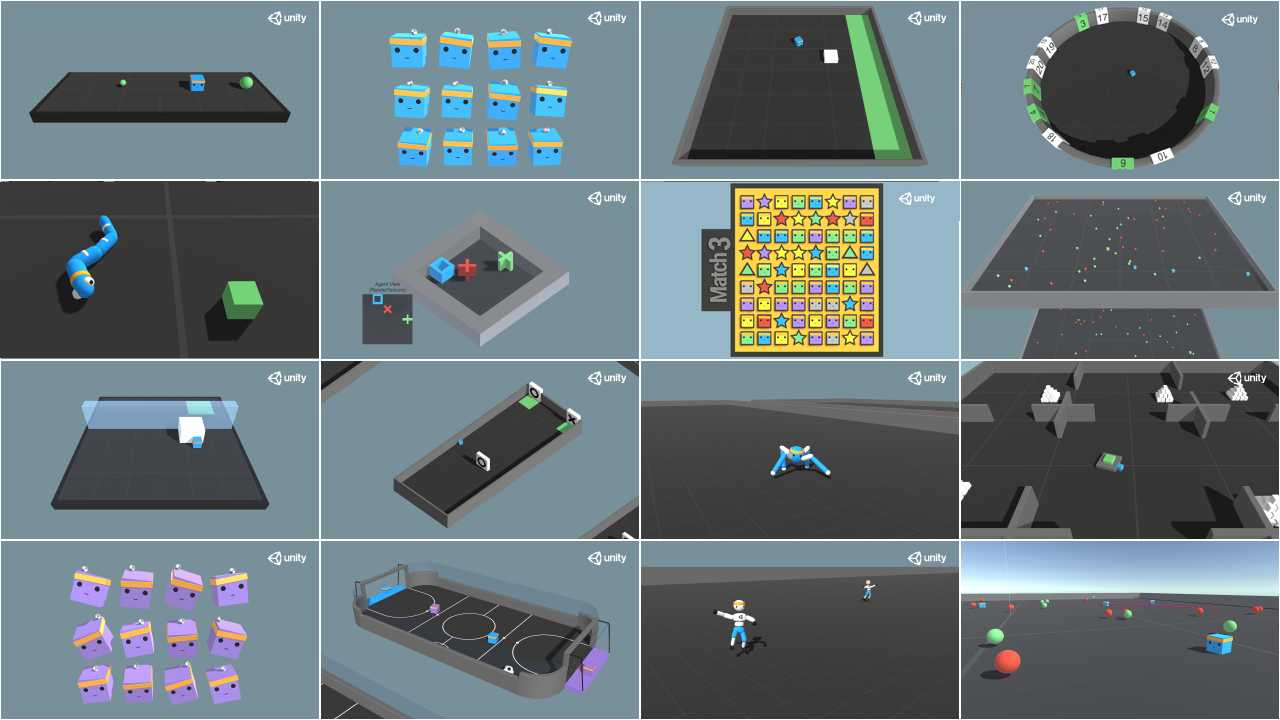

title: "How to host a Unity game in a Space"

thumbnail: /blog/assets/124_ml-for-games/unity-in-spaces-thumbnail.png

authors:

- user: dylanebert

---

# How to host a Unity game in a Space

<!-- {authors} -->

Did you know you can host a Unity game in a Hugging Face Space? No? Well, you can!

Hugging Face Spaces are an easy way to build, host, and share demos. While they are typically used for Machine Learning demos,

they can also host playable Unity games. Here are some examples:

- [Huggy](https://huggingface.co/spaces/ThomasSimonini/Huggy)

- [Farming Game](https://huggingface.co/spaces/dylanebert/FarmingGame)

- [Unity API Demo](https://huggingface.co/spaces/dylanebert/UnityDemo)

Here's how you can host your own Unity game in a Space.

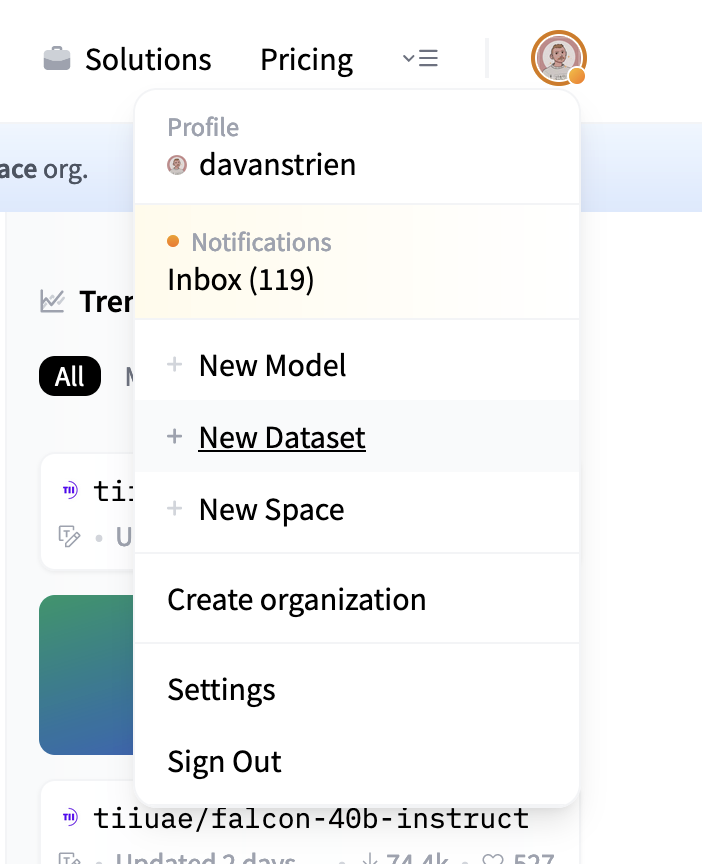

## Step 1: Create a Space using the Static HTML template

First, navigate to [Hugging Face Spaces](https://huggingface.co/new-space) to create a space.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/1.png">

</figure>

Select the "Static HTML" template, give your Space a name, and create it.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/2.png">

</figure>

## Step 2: Use Git to Clone the Space

Clone your newly created Space to your local machine using Git. You can do this by running the following command in your terminal or command prompt:

```

git clone https://huggingface.co/spaces/{your-username}/{your-space-name}

```

## Step 3: Open your Unity Project

Open the Unity project you want to host in your Space.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/3.png">

</figure>

## Step 4: Switch the Build Target to WebGL

Navigate to `File > Build Settings` and switch the Build Target to WebGL.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/4.png">

</figure>

## Step 5: Open Player Settings

In the Build Settings window, click the "Player Settings" button to open the Player Settings panel.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/5.png">

</figure>

## Step 6: Optionally, Download the Hugging Face Unity WebGL Template

You can enhance your game's appearance in a Space by downloading the Hugging Face Unity WebGL template, available [here](https://github.com/huggingface/Unity-WebGL-template-for-Hugging-Face-Spaces). Just download the repository and drop it in your project files.

Then, in the Player Settings panel, switch the WebGL template to Hugging Face. To do so, in Player Settings, click "Resolution and Presentation", then select the Hugging Face WebGL template.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/6.png">

</figure>

## Step 7: Change the Compression Format to Disabled

In the Player Settings panel, navigate to the "Publishing Settings" section and change the Compression Format to "Disabled".

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/7.png">

</figure>

## Step 8: Build your Project

Return to the Build Settings window and click the "Build" button. Choose a location to save your build files, and Unity will build the project for WebGL.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/8.png">

</figure>

## Step 9: Copy the Contents of the Build Folder

After the build process is finished, navigate to the folder containing your build files. Copy the files in the build folder to the repository you cloned in [Step 2](#step-2-use-git-to-clone-the-space).

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/games-in-spaces/9.png">

</figure>

## Step 10: Enable Git-LFS for Large File Storage

Navigate to your repository. Use the following commands to track large build files.

```

git lfs install

git lfs track Build/*

```

## Step 11: Push your Changes

Finally, use the following Git commands to push your changes:

```

git add .

git commit -m "Add Unity WebGL build files"

git push

```

## Done!

Congratulations! Refresh your Space. You should now be able to play your game in a Hugging Face Space.

We hope you found this tutorial helpful. If you have any questions or would like to get more involved in using Hugging Face for Games, join the [Hugging Face Discord](https://hf.co/join/discord)! | huggingface/blog/blob/main/unity-in-spaces.md |

!---

Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

This folder contains a template to add a tokenization test.

## Usage

Using the `cookiecutter` utility requires to have all the `dev` dependencies installed.

Let's first [fork](https://docs.github.com/en/get-started/quickstart/fork-a-repo) the `transformers` repo on github. Once it's done you can clone your fork and install `transformers` in our environment:

```shell script

git clone https://github.com/YOUR-USERNAME/transformers

cd transformers

pip install -e ".[dev]"

```

Once the installation is done, you can generate the template by running the following command. Be careful, the template will be generated inside a new folder in your current working directory.

```shell script

cookiecutter path-to-the folder/adding_a_missing_tokenization_test/

```

You will then have to answer some questions about the tokenizer for which you want to add tests. The `modelname` should be cased according to the plain text casing, i.e., BERT, RoBERTa, DeBERTa.

Once the command has finished, you should have a one new file inside the newly created folder named `test_tokenization_Xxx.py`. At this point the template is finished and you can move it to the sub-folder of the corresponding model in the test folder.

| huggingface/transformers/blob/main/templates/adding_a_missing_tokenization_test/README.md |

p align="center">

<br>

<img src="https://huggingface.co/landing/assets/tokenizers/tokenizers-logo.png" width="600"/>

<br>

<p>

<p align="center">

<a href="https://badge.fury.io/py/tokenizers">

<img alt="Build" src="https://badge.fury.io/py/tokenizers.svg">

</a>

<a href="https://github.com/huggingface/tokenizers/blob/master/LICENSE">

<img alt="GitHub" src="https://img.shields.io/github/license/huggingface/tokenizers.svg?color=blue">

</a>

</p>

<br>

# Tokenizers

Provides an implementation of today's most used tokenizers, with a focus on performance and

versatility.

Bindings over the [Rust](https://github.com/huggingface/tokenizers/tree/master/tokenizers) implementation.

If you are interested in the High-level design, you can go check it there.

Otherwise, let's dive in!

## Main features:

- Train new vocabularies and tokenize using 4 pre-made tokenizers (Bert WordPiece and the 3

most common BPE versions).

- Extremely fast (both training and tokenization), thanks to the Rust implementation. Takes

less than 20 seconds to tokenize a GB of text on a server's CPU.

- Easy to use, but also extremely versatile.

- Designed for research and production.

- Normalization comes with alignments tracking. It's always possible to get the part of the

original sentence that corresponds to a given token.

- Does all the pre-processing: Truncate, Pad, add the special tokens your model needs.

### Installation

#### With pip:

```bash

pip install tokenizers

```

#### From sources:

To use this method, you need to have the Rust installed:

```bash

# Install with:

curl https://sh.rustup.rs -sSf | sh -s -- -y

export PATH="$HOME/.cargo/bin:$PATH"

```

Once Rust is installed, you can compile doing the following

```bash

git clone https://github.com/huggingface/tokenizers

cd tokenizers/bindings/python

# Create a virtual env (you can use yours as well)

python -m venv .env

source .env/bin/activate

# Install `tokenizers` in the current virtual env

pip install -e .

```

### Load a pretrained tokenizer from the Hub

```python

from tokenizers import Tokenizer

tokenizer = Tokenizer.from_pretrained("bert-base-cased")

```

### Using the provided Tokenizers

We provide some pre-build tokenizers to cover the most common cases. You can easily load one of

these using some `vocab.json` and `merges.txt` files:

```python

from tokenizers import CharBPETokenizer

# Initialize a tokenizer

vocab = "./path/to/vocab.json"

merges = "./path/to/merges.txt"

tokenizer = CharBPETokenizer(vocab, merges)

# And then encode:

encoded = tokenizer.encode("I can feel the magic, can you?")

print(encoded.ids)

print(encoded.tokens)

```

And you can train them just as simply:

```python

from tokenizers import CharBPETokenizer

# Initialize a tokenizer

tokenizer = CharBPETokenizer()

# Then train it!

tokenizer.train([ "./path/to/files/1.txt", "./path/to/files/2.txt" ])

# Now, let's use it:

encoded = tokenizer.encode("I can feel the magic, can you?")

# And finally save it somewhere

tokenizer.save("./path/to/directory/my-bpe.tokenizer.json")

```

#### Provided Tokenizers

- `CharBPETokenizer`: The original BPE

- `ByteLevelBPETokenizer`: The byte level version of the BPE

- `SentencePieceBPETokenizer`: A BPE implementation compatible with the one used by SentencePiece

- `BertWordPieceTokenizer`: The famous Bert tokenizer, using WordPiece

All of these can be used and trained as explained above!

### Build your own

Whenever these provided tokenizers don't give you enough freedom, you can build your own tokenizer,

by putting all the different parts you need together.

You can check how we implemented the [provided tokenizers](https://github.com/huggingface/tokenizers/tree/master/bindings/python/py_src/tokenizers/implementations) and adapt them easily to your own needs.

#### Building a byte-level BPE

Here is an example showing how to build your own byte-level BPE by putting all the different pieces

together, and then saving it to a single file:

```python

from tokenizers import Tokenizer, models, pre_tokenizers, decoders, trainers, processors

# Initialize a tokenizer

tokenizer = Tokenizer(models.BPE())

# Customize pre-tokenization and decoding

tokenizer.pre_tokenizer = pre_tokenizers.ByteLevel(add_prefix_space=True)

tokenizer.decoder = decoders.ByteLevel()

tokenizer.post_processor = processors.ByteLevel(trim_offsets=True)

# And then train

trainer = trainers.BpeTrainer(

vocab_size=20000,

min_frequency=2,

initial_alphabet=pre_tokenizers.ByteLevel.alphabet()

)

tokenizer.train([

"./path/to/dataset/1.txt",

"./path/to/dataset/2.txt",

"./path/to/dataset/3.txt"

], trainer=trainer)

# And Save it

tokenizer.save("byte-level-bpe.tokenizer.json", pretty=True)

```

Now, when you want to use this tokenizer, this is as simple as:

```python

from tokenizers import Tokenizer

tokenizer = Tokenizer.from_file("byte-level-bpe.tokenizer.json")

encoded = tokenizer.encode("I can feel the magic, can you?")

```

| huggingface/tokenizers/blob/main/bindings/python/README.md |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# KarrasVeScheduler

`KarrasVeScheduler` is a stochastic sampler tailored to variance-expanding (VE) models. It is based on the [Elucidating the Design Space of Diffusion-Based Generative Models](https://huggingface.co/papers/2206.00364) and [Score-based generative modeling through stochastic differential equations](https://huggingface.co/papers/2011.13456) papers.

## KarrasVeScheduler

[[autodoc]] KarrasVeScheduler

## KarrasVeOutput

[[autodoc]] schedulers.deprecated.scheduling_karras_ve.KarrasVeOutput | huggingface/diffusers/blob/main/docs/source/en/api/schedulers/stochastic_karras_ve.md |

Webhooks

<Tip>

Webhooks are now publicly available!

</Tip>

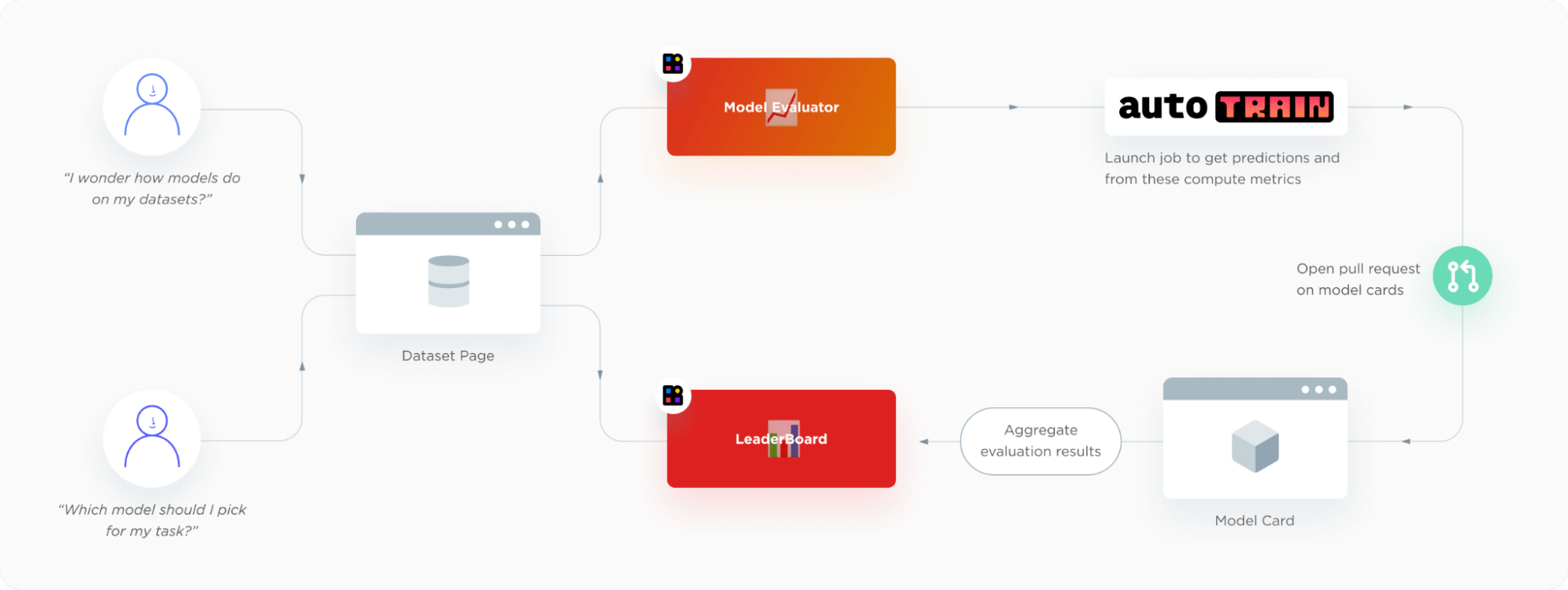

Webhooks are a foundation for MLOps-related features. They allow you to listen for new changes on specific repos or to all repos belonging to particular set of users/organizations (not just your repos, but any repo).

You can use them to auto-convert models, build community bots, or build CI/CD for your models, datasets, and Spaces (and much more!).

The documentation for Webhooks is below – or you can also browse our **guides** showcasing a few possible use cases of Webhooks:

- [Fine-tune a new model whenever a dataset gets updated (Python)](./webhooks-guide-auto-retrain)

- [Create a discussion bot on the Hub, using a LLM API (NodeJS)](./webhooks-guide-discussion-bot)

- [Create metadata quality reports (Python)](./webhooks-guide-metadata-review)

- and more to come…

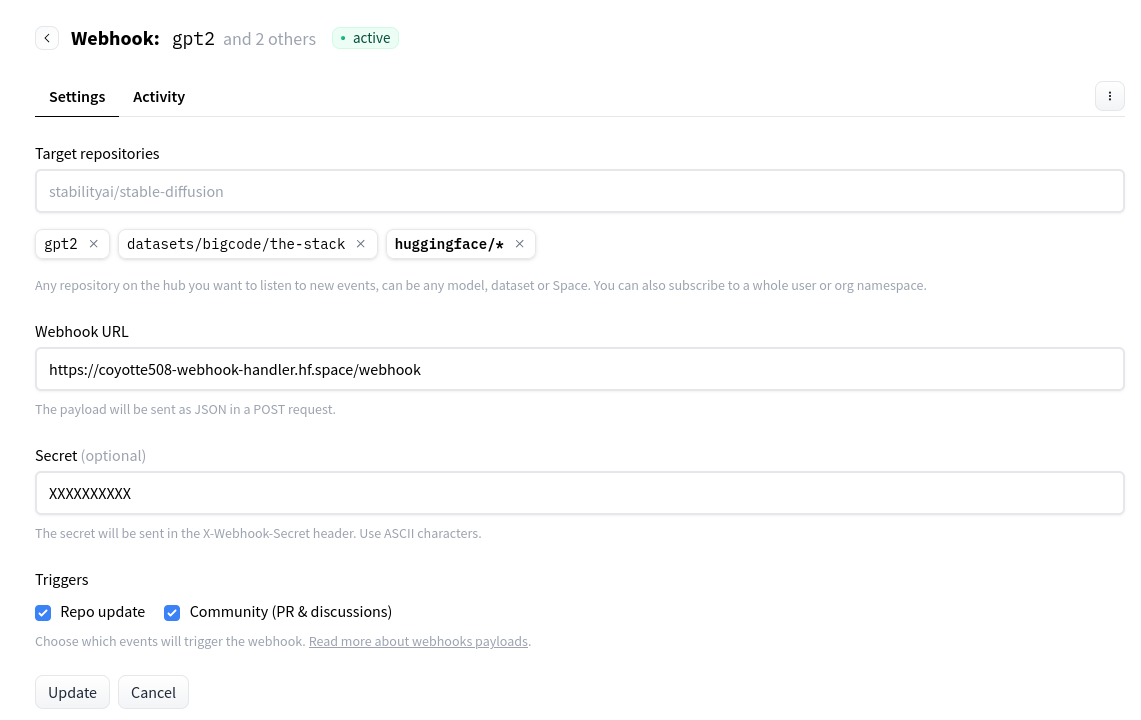

## Create your Webhook

You can create new Webhooks and edit existing ones in your Webhooks [settings](https://huggingface.co/settings/webhooks):

Webhooks can watch for repos updates, Pull Requests, discussions, and new comments. It's even possible to create a Space to react to your Webhooks!

## Webhook Payloads

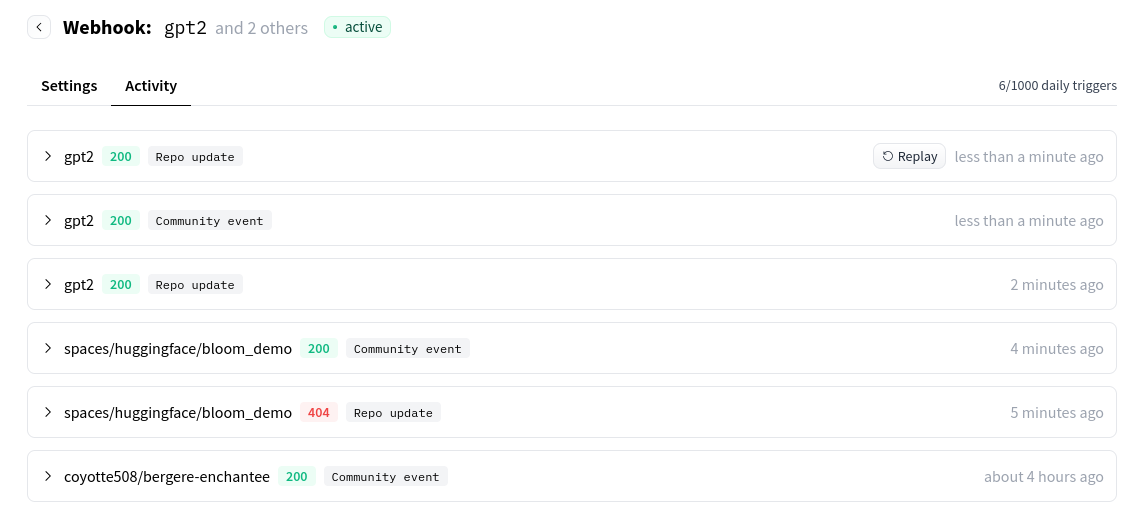

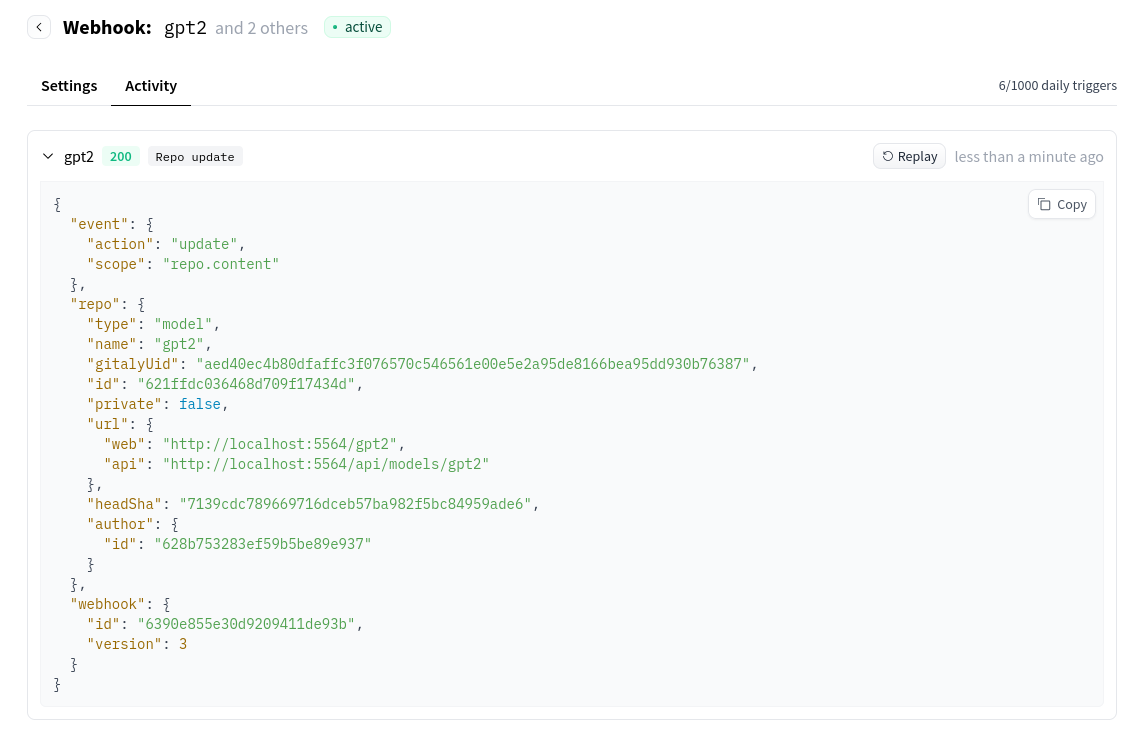

After registering a Webhook, you will be notified of new events via an `HTTP POST` call on the specified target URL. The payload is encoded in JSON.

You can view the history of payloads sent in the activity tab of the webhook settings page, it's also possible to replay past webhooks for easier debugging:

As an example, here is the full payload when a Pull Request is opened:

```json

{

"event": {

"action": "create",

"scope": "discussion"

},

"repo": {

"type": "model",

"name": "gpt2",

"id": "621ffdc036468d709f17434d",

"private": false,

"url": {

"web": "https://huggingface.co/gpt2",

"api": "https://huggingface.co/api/models/gpt2"

},

"owner": {

"id": "628b753283ef59b5be89e937"

}

},

"discussion": {

"id": "6399f58518721fdd27fc9ca9",

"title": "Update co2 emissions",

"url": {

"web": "https://huggingface.co/gpt2/discussions/19",

"api": "https://huggingface.co/api/models/gpt2/discussions/19"

},

"status": "open",

"author": {

"id": "61d2f90c3c2083e1c08af22d"

},

"num": 19,

"isPullRequest": true,

"changes": {

"base": "refs/heads/main"

}

},

"comment": {

"id": "6399f58518721fdd27fc9caa",

"author": {

"id": "61d2f90c3c2083e1c08af22d"

},

"content": "Add co2 emissions information to the model card",

"hidden": false,

"url": {

"web": "https://huggingface.co/gpt2/discussions/19#6399f58518721fdd27fc9caa"

}

},

"webhook": {

"id": "6390e855e30d9209411de93b",

"version": 3

}

}

```

### Event

The top-level properties `event` is always specified and used to determine the nature of the event.

It has two sub-properties: `event.action` and `event.scope`.

`event.scope` will be one of the following values:

- `"repo"` - Global events on repos. Possible values for the associated `action`: `"create"`, `"delete"`, `"update"`, `"move"`.

- `"repo.content"` - Events on the repo's content, such as new commits or tags. It triggers on new Pull Requests as well due to the newly created reference/commit. The associated `action` is always `"update"`.

- `"repo.config"` - Events on the config: update Space secrets, update settings, update DOIs, disabled or not, etc. The associated `action` is always `"update"`.

- `"discussion"` - Creating a discussion or Pull Request, updating the title or status, and merging. Possible values for the associated `action`: `"create"`, `"delete"`, `"update"`.

- `"discussion.comment"` - Creating, updating, and hiding a comment. Possible values for the associated `action`: `"create"`, `"update"`.

More scopes can be added in the future. To handle unknown events, your webhook handler can consider any action on a narrowed scope to be an `"update"` action on the broader scope.

For example, if the `"repo.config.dois"` scope is added in the future, any event with that scope can be considered by your webhook handler as an `"update"` action on the `"repo.config"` scope.

### Repo

In the current version of webhooks, the top-level property `repo` is always specified, as events can always be associated with a repo. For example, consider the following value:

```json

"repo": {

"type": "model",

"name": "some-user/some-repo",

"id": "6366c000a2abcdf2fd69a080",

"private": false,

"url": {

"web": "https://huggingface.co/some-user/some-repo",

"api": "https://huggingface.co/api/models/some-user/some-repo"

},

"headSha": "c379e821c9c95d613899e8c4343e4bfee2b0c600",

"tags": [

"license:other",

"has_space"

],

"owner": {

"id": "61d2000c3c2083e1c08af22d"

}

}

```

`repo.headSha` is the sha of the latest commit on the repo's `main` branch. It is only sent when `event.scope` starts with `"repo"`, not on community events like discussions and comments.

### Discussions and Pull Requests

The top-level property `discussion` is specified on community events (discussions and Pull Requests). The `discussion.isPullRequest` property is a boolean indicating if the discussion is also a Pull Request (on the Hub, a PR is a special type of discussion). Here is an example value:

```json

"discussion": {

"id": "639885d811ae2bad2b7ba461",

"title": "Hello!",

"url": {

"web": "https://huggingface.co/some-user/some-repo/discussions/3",

"api": "https://huggingface.co/api/models/some-user/some-repo/discussions/3"

},

"status": "open",

"author": {

"id": "61d2000c3c2083e1c08af22d"

},

"isPullRequest": true,

"changes": {

"base": "refs/heads/main"

}

"num": 3

}

```

### Comment

The top level property `comment` is specified when a comment is created (including on discussion creation) or updated. Here is an example value:

```json

"comment": {

"id": "6398872887bfcfb93a306f18",

"author": {

"id": "61d2000c3c2083e1c08af22d"

},

"content": "This adds an env key",

"hidden": false,

"url": {

"web": "https://huggingface.co/some-user/some-repo/discussions/4#6398872887bfcfb93a306f18"

}

}

```

## Webhook secret

Setting a Webhook secret is useful to make sure payloads sent to your Webhook handler URL are actually from Hugging Face.

If you set a secret for your Webhook, it will be sent along as an `X-Webhook-Secret` HTTP header on every request. Only ASCII characters are supported.

<Tip>

It's also possible to add the secret directly in the handler URL. For example, setting it as a query parameter: https://example.com/webhook?secret=XXX.

This can be helpful if accessing the HTTP headers of the request is complicated for your Webhook handler.

</Tip>

## Rate limiting

Each Webhook is limited to 1,000 triggers per 24 hours. You can view your usage in the Webhook settings page in the "Activity" tab.

If you need to increase the number of triggers for your Webhook, contact us at [email protected].

## Developing your Webhooks

If you do not have an HTTPS endpoint/URL, you can try out public tools for webhook testing. These tools act as catch-all (capture all requests) sent to them and give 200 OK status code. [Beeceptor](https://beeceptor.com/) is one tool you can use to create a temporary HTTP endpoint and review the incoming payload. Another such tool is [Webhook.site](https://webhook.site/).

Additionally, you can route a real Webhook payload to the code running locally on your machine during development. This is a great way to test and debug for faster integrations. You can do this by exposing your localhost port to the Internet. To be able to go this path, you can use [ngrok](https://ngrok.com/) or [localtunnel](https://theboroer.github.io/localtunnel-www/).

## Debugging Webhooks

You can easily find recently generated events for your webhooks. Open the activity tab for your webhook. There you will see the list of recent events.

Here you can review the HTTP status code and the payload of the generated events. Additionally, you can replay these events by clicking on the `Replay` button!

Note: When changing the target URL or secret of a Webhook, replaying an event will send the payload to the updated URL.

## FAQ

##### Can I define webhooks on my organization vs my user account?

No, this is not currently supported.

##### How can I subscribe to events on all repos (or across a whole repo type, like on all models)?

This is not currently exposed to end users but we can toggle this for you if you send an email to [email protected].

| huggingface/hub-docs/blob/main/docs/hub/webhooks.md |

Security Policy

## Supported Versions

<!--

Use this section to tell people about which versions of your project are

currently being supported with security updates.

| Version | Supported |

| ------- | ------------------ |

| 5.1.x | :white_check_mark: |

| 5.0.x | :x: |

| 4.0.x | :white_check_mark: |

| < 4.0 | :x: |

-->

Each major version is currently being supported with security updates.

| Version | Supported |

| ------- | ------------------ |

| 1.x.x | :white_check_mark: |

## Reporting a Vulnerability

<!--

Use this section to tell people how to report a vulnerability.

Tell them where to go, how often they can expect to get an update on a

reported vulnerability, what to expect if the vulnerability is accepted or

declined, etc.

-->

To report a security vulnerability, please contact: [email protected]

| huggingface/datasets-server/blob/main/SECURITY.md |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Pipeline callbacks

The denoising loop of a pipeline can be modified with custom defined functions using the `callback_on_step_end` parameter. This can be really useful for *dynamically* adjusting certain pipeline attributes, or modifying tensor variables. The flexibility of callbacks opens up some interesting use-cases such as changing the prompt embeddings at each timestep, assigning different weights to the prompt embeddings, and editing the guidance scale.

This guide will show you how to use the `callback_on_step_end` parameter to disable classifier-free guidance (CFG) after 40% of the inference steps to save compute with minimal cost to performance.

The callback function should have the following arguments:

* `pipe` (or the pipeline instance) provides access to useful properties such as `num_timestep` and `guidance_scale`. You can modify these properties by updating the underlying attributes. For this example, you'll disable CFG by setting `pipe._guidance_scale=0.0`.

* `step_index` and `timestep` tell you where you are in the denoising loop. Use `step_index` to turn off CFG after reaching 40% of `num_timestep`.

* `callback_kwargs` is a dict that contains tensor variables you can modify during the denoising loop. It only includes variables specified in the `callback_on_step_end_tensor_inputs` argument, which is passed to the pipeline's `__call__` method. Different pipelines may use different sets of variables, so please check a pipeline's `_callback_tensor_inputs` attribute for the list of variables you can modify. Some common variables include `latents` and `prompt_embeds`. For this function, change the batch size of `prompt_embeds` after setting `guidance_scale=0.0` in order for it to work properly.

Your callback function should look something like this:

```python

def callback_dynamic_cfg(pipe, step_index, timestep, callback_kwargs):

# adjust the batch_size of prompt_embeds according to guidance_scale

if step_index == int(pipe.num_timestep * 0.4):

prompt_embeds = callback_kwargs["prompt_embeds"]

prompt_embeds = prompt_embeds.chunk(2)[-1]

# update guidance_scale and prompt_embeds

pipe._guidance_scale = 0.0

callback_kwargs["prompt_embeds"] = prompt_embeds

return callback_kwargs

```

Now, you can pass the callback function to the `callback_on_step_end` parameter and the `prompt_embeds` to `callback_on_step_end_tensor_inputs`.

```py

import torch

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

generator = torch.Generator(device="cuda").manual_seed(1)

out = pipe(prompt, generator=generator, callback_on_step_end=callback_custom_cfg, callback_on_step_end_tensor_inputs=['prompt_embeds'])

out.images[0].save("out_custom_cfg.png")

```

The callback function is executed at the end of each denoising step, and modifies the pipeline attributes and tensor variables for the next denoising step.

With callbacks, you can implement features such as dynamic CFG without having to modify the underlying code at all!

<Tip>

🤗 Diffusers currently only supports `callback_on_step_end`, but feel free to open a [feature request](https://github.com/huggingface/diffusers/issues/new/choose) if you have a cool use-case and require a callback function with a different execution point!

</Tip>

| huggingface/diffusers/blob/main/docs/source/en/using-diffusers/callback.md |

From Q-Learning to Deep Q-Learning [[from-q-to-dqn]]

We learned that **Q-Learning is an algorithm we use to train our Q-Function**, an **action-value function** that determines the value of being at a particular state and taking a specific action at that state.

<figure>

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit3/Q-function.jpg" alt="Q-function"/>

</figure>

The **Q comes from "the Quality" of that action at that state.**

Internally, our Q-function is encoded by **a Q-table, a table where each cell corresponds to a state-action pair value.** Think of this Q-table as **the memory or cheat sheet of our Q-function.**

The problem is that Q-Learning is a *tabular method*. This becomes a problem if the states and actions spaces **are not small enough to be represented efficiently by arrays and tables**. In other words: it is **not scalable**.

Q-Learning worked well with small state space environments like:

- FrozenLake, we had 16 states.

- Taxi-v3, we had 500 states.

But think of what we're going to do today: we will train an agent to learn to play Space Invaders, a more complex game, using the frames as input.

As **[Nikita Melkozerov mentioned](https://twitter.com/meln1k), Atari environments** have an observation space with a shape of (210, 160, 3)*, containing values ranging from 0 to 255 so that gives us \\(256^{210 \times 160 \times 3} = 256^{100800}\\) possible observations (for comparison, we have approximately \\(10^{80}\\) atoms in the observable universe).

* A single frame in Atari is composed of an image of 210x160 pixels. Given that the images are in color (RGB), there are 3 channels. This is why the shape is (210, 160, 3). For each pixel, the value can go from 0 to 255.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit4/atari.jpg" alt="Atari State Space"/>

Therefore, the state space is gigantic; due to this, creating and updating a Q-table for that environment would not be efficient. In this case, the best idea is to approximate the Q-values using a parametrized Q-function \\(Q_{\theta}(s,a)\\) .

This neural network will approximate, given a state, the different Q-values for each possible action at that state. And that's exactly what Deep Q-Learning does.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/deep.jpg" alt="Deep Q Learning"/>

Now that we understand Deep Q-Learning, let's dive deeper into the Deep Q-Network.

| huggingface/deep-rl-class/blob/main/units/en/unit3/from-q-to-dqn.mdx |

Using the `evaluator` with custom pipelines

The evaluator is designed to work with `transformer` pipelines out-of-the-box. However, in many cases you might have a model or pipeline that's not part of the `transformer` ecosystem. You can still use `evaluator` to easily compute metrics for them. In this guide we show how to do this for a Scikit-Learn [pipeline](https://scikit-learn.org/stable/modules/generated/sklearn.pipeline.Pipeline.html#sklearn.pipeline.Pipeline) and a Spacy [pipeline](https://spacy.io). Let's start with the Scikit-Learn case.

## Scikit-Learn

First we need to train a model. We'll train a simple text classifier on the [IMDb dataset](https://huggingface.co/datasets/imdb), so let's start by downloading the dataset:

```py

from datasets import load_dataset

ds = load_dataset("imdb")

```

Then we can build a simple TF-IDF preprocessor and Naive Bayes classifier wrapped in a `Pipeline`:

```py

from sklearn.pipeline import Pipeline

from sklearn.naive_bayes import MultinomialNB

from sklearn.feature_extraction.text import TfidfTransformer

from sklearn.feature_extraction.text import CountVectorizer

text_clf = Pipeline([

('vect', CountVectorizer()),

('tfidf', TfidfTransformer()),

('clf', MultinomialNB()),

])

text_clf.fit(ds["train"]["text"], ds["train"]["label"])

```

Following the convention in the `TextClassificationPipeline` of `transformers` our pipeline should be callable and return a list of dictionaries. In addition we use the `task` attribute to check if the pipeline is compatible with the `evaluator`. We can write a small wrapper class for that purpose:

```py

class ScikitEvalPipeline:

def __init__(self, pipeline):

self.pipeline = pipeline

self.task = "text-classification"

def __call__(self, input_texts, **kwargs):

return [{"label": p} for p in self.pipeline.predict(input_texts)]

pipe = ScikitEvalPipeline(text_clf)

```

We can now pass this `pipeline` to the `evaluator`:

```py

from evaluate import evaluator

task_evaluator = evaluator("text-classification")

task_evaluator.compute(pipe, ds["test"], "accuracy")

>>> {'accuracy': 0.82956}

```

Implementing that simple wrapper is all that's needed to use any model from any framework with the `evaluator`. In the `__call__` you can implement all logic necessary for efficient forward passes through your model.

## Spacy

We'll use the `polarity` feature of the `spacytextblob` project to get a simple sentiment analyzer. First you'll need to install the project and download the resources:

```bash

pip install spacytextblob

python -m textblob.download_corpora

python -m spacy download en_core_web_sm

```

Then we can simply load the `nlp` pipeline and add the `spacytextblob` pipeline:

```py

import spacy

nlp = spacy.load('en_core_web_sm')

nlp.add_pipe('spacytextblob')

```

This snippet shows how we can use the `polarity` feature added with `spacytextblob` to get the sentiment of a text:

```py

texts = ["This movie is horrible", "This movie is awesome"]

results = nlp.pipe(texts)

for txt, res in zip(texts, results):

print(f"{text} | Polarity: {res._.blob.polarity}")

```

Now we can wrap it in a simple wrapper class like in the Scikit-Learn example before. It just has to return a list of dictionaries with the predicted lables. If the polarity is larger than 0 we'll predict positive sentiment and negative otherwise:

```py

class SpacyEvalPipeline:

def __init__(self, nlp):

self.nlp = nlp

self.task = "text-classification"

def __call__(self, input_texts, **kwargs):

results =[]

for p in self.nlp.pipe(input_texts):

if p._.blob.polarity>=0:

results.append({"label": 1})

else:

results.append({"label": 0})

return results

pipe = SpacyEvalPipeline(nlp)

```

That class is compatible with the `evaluator` and we can use the same instance from the previous examlpe along with the IMDb test set:

```py

eval.compute(pipe, ds["test"], "accuracy")

>>> {'accuracy': 0.6914}

```

This will take a little longer than the Scikit-Learn example but after roughly 10-15min you will have the evaluation results!

| huggingface/evaluate/blob/main/docs/source/custom_evaluator.mdx |

Gradio Demo: spectogram

```

!pip install -q gradio scipy numpy matplotlib

```

```

import matplotlib.pyplot as plt

import numpy as np

from scipy import signal

import gradio as gr

def spectrogram(audio):

sr, data = audio

if len(data.shape) == 2:

data = np.mean(data, axis=0)

frequencies, times, spectrogram_data = signal.spectrogram(

data, sr, window="hamming"

)

plt.pcolormesh(times, frequencies, np.log10(spectrogram_data))

return plt

demo = gr.Interface(spectrogram, "audio", "plot")

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/spectogram/run.ipynb |

Gradio Demo: clustering

### This demo built with Blocks generates 9 plots based on the input.

```

!pip install -q gradio matplotlib>=3.5.2 scikit-learn>=1.0.1

```

```

import gradio as gr

import math

from functools import partial

import matplotlib.pyplot as plt

import numpy as np

from sklearn.cluster import (

AgglomerativeClustering, Birch, DBSCAN, KMeans, MeanShift, OPTICS, SpectralClustering, estimate_bandwidth

)

from sklearn.datasets import make_blobs, make_circles, make_moons

from sklearn.mixture import GaussianMixture

from sklearn.neighbors import kneighbors_graph

from sklearn.preprocessing import StandardScaler

plt.style.use('seaborn-v0_8')

SEED = 0

MAX_CLUSTERS = 10

N_SAMPLES = 1000

N_COLS = 3

FIGSIZE = 7, 7 # does not affect size in webpage

COLORS = [

'blue', 'orange', 'green', 'red', 'purple', 'brown', 'pink', 'gray', 'olive', 'cyan'

]

if len(COLORS) <= MAX_CLUSTERS:

raise ValueError("Not enough different colors for all clusters")

np.random.seed(SEED)

def normalize(X):

return StandardScaler().fit_transform(X)

def get_regular(n_clusters):

# spiral pattern

centers = [

[0, 0],

[1, 0],

[1, 1],

[0, 1],

[-1, 1],

[-1, 0],

[-1, -1],

[0, -1],

[1, -1],

[2, -1],

][:n_clusters]

assert len(centers) == n_clusters

X, labels = make_blobs(n_samples=N_SAMPLES, centers=centers, cluster_std=0.25, random_state=SEED)

return normalize(X), labels

def get_circles(n_clusters):

X, labels = make_circles(n_samples=N_SAMPLES, factor=0.5, noise=0.05, random_state=SEED)

return normalize(X), labels

def get_moons(n_clusters):

X, labels = make_moons(n_samples=N_SAMPLES, noise=0.05, random_state=SEED)

return normalize(X), labels

def get_noise(n_clusters):

np.random.seed(SEED)

X, labels = np.random.rand(N_SAMPLES, 2), np.random.randint(0, n_clusters, size=(N_SAMPLES,))

return normalize(X), labels

def get_anisotropic(n_clusters):

X, labels = make_blobs(n_samples=N_SAMPLES, centers=n_clusters, random_state=170)

transformation = [[0.6, -0.6], [-0.4, 0.8]]

X = np.dot(X, transformation)

return X, labels

def get_varied(n_clusters):

cluster_std = [1.0, 2.5, 0.5, 1.0, 2.5, 0.5, 1.0, 2.5, 0.5, 1.0][:n_clusters]

assert len(cluster_std) == n_clusters

X, labels = make_blobs(

n_samples=N_SAMPLES, centers=n_clusters, cluster_std=cluster_std, random_state=SEED

)

return normalize(X), labels

def get_spiral(n_clusters):

# from https://scikit-learn.org/stable/auto_examples/cluster/plot_agglomerative_clustering.html

np.random.seed(SEED)

t = 1.5 * np.pi * (1 + 3 * np.random.rand(1, N_SAMPLES))

x = t * np.cos(t)

y = t * np.sin(t)

X = np.concatenate((x, y))

X += 0.7 * np.random.randn(2, N_SAMPLES)

X = np.ascontiguousarray(X.T)

labels = np.zeros(N_SAMPLES, dtype=int)

return normalize(X), labels

DATA_MAPPING = {

'regular': get_regular,

'circles': get_circles,

'moons': get_moons,

'spiral': get_spiral,

'noise': get_noise,

'anisotropic': get_anisotropic,

'varied': get_varied,

}

def get_groundtruth_model(X, labels, n_clusters, **kwargs):

# dummy model to show true label distribution

class Dummy:

def __init__(self, y):

self.labels_ = labels

return Dummy(labels)

def get_kmeans(X, labels, n_clusters, **kwargs):

model = KMeans(init="k-means++", n_clusters=n_clusters, n_init=10, random_state=SEED)

model.set_params(**kwargs)

return model.fit(X)

def get_dbscan(X, labels, n_clusters, **kwargs):

model = DBSCAN(eps=0.3)

model.set_params(**kwargs)

return model.fit(X)

def get_agglomerative(X, labels, n_clusters, **kwargs):

connectivity = kneighbors_graph(

X, n_neighbors=n_clusters, include_self=False

)

# make connectivity symmetric

connectivity = 0.5 * (connectivity + connectivity.T)

model = AgglomerativeClustering(

n_clusters=n_clusters, linkage="ward", connectivity=connectivity

)

model.set_params(**kwargs)

return model.fit(X)

def get_meanshift(X, labels, n_clusters, **kwargs):

bandwidth = estimate_bandwidth(X, quantile=0.25)

model = MeanShift(bandwidth=bandwidth, bin_seeding=True)

model.set_params(**kwargs)

return model.fit(X)

def get_spectral(X, labels, n_clusters, **kwargs):

model = SpectralClustering(

n_clusters=n_clusters,

eigen_solver="arpack",

affinity="nearest_neighbors",

)

model.set_params(**kwargs)

return model.fit(X)

def get_optics(X, labels, n_clusters, **kwargs):

model = OPTICS(

min_samples=7,

xi=0.05,

min_cluster_size=0.1,

)

model.set_params(**kwargs)

return model.fit(X)

def get_birch(X, labels, n_clusters, **kwargs):

model = Birch(n_clusters=n_clusters)

model.set_params(**kwargs)

return model.fit(X)

def get_gaussianmixture(X, labels, n_clusters, **kwargs):

model = GaussianMixture(

n_components=n_clusters, covariance_type="full", random_state=SEED,

)

model.set_params(**kwargs)

return model.fit(X)

MODEL_MAPPING = {

'True labels': get_groundtruth_model,

'KMeans': get_kmeans,

'DBSCAN': get_dbscan,

'MeanShift': get_meanshift,

'SpectralClustering': get_spectral,

'OPTICS': get_optics,

'Birch': get_birch,

'GaussianMixture': get_gaussianmixture,

'AgglomerativeClustering': get_agglomerative,

}

def plot_clusters(ax, X, labels):

set_clusters = set(labels)

set_clusters.discard(-1) # -1 signifiies outliers, which we plot separately

for label, color in zip(sorted(set_clusters), COLORS):

idx = labels == label

if not sum(idx):

continue

ax.scatter(X[idx, 0], X[idx, 1], color=color)

# show outliers (if any)

idx = labels == -1

if sum(idx):

ax.scatter(X[idx, 0], X[idx, 1], c='k', marker='x')

ax.grid(None)

ax.set_xticks([])

ax.set_yticks([])

return ax

def cluster(dataset: str, n_clusters: int, clustering_algorithm: str):

if isinstance(n_clusters, dict):

n_clusters = n_clusters['value']

else:

n_clusters = int(n_clusters)

X, labels = DATA_MAPPING[dataset](n_clusters)

model = MODEL_MAPPING[clustering_algorithm](X, labels, n_clusters=n_clusters)

if hasattr(model, "labels_"):

y_pred = model.labels_.astype(int)

else:

y_pred = model.predict(X)

fig, ax = plt.subplots(figsize=FIGSIZE)

plot_clusters(ax, X, y_pred)

ax.set_title(clustering_algorithm, fontsize=16)

return fig

title = "Clustering with Scikit-learn"

description = (

"This example shows how different clustering algorithms work. Simply pick "

"the dataset and the number of clusters to see how the clustering algorithms work. "

"Colored circles are (predicted) labels and black x are outliers."

)

def iter_grid(n_rows, n_cols):

# create a grid using gradio Block

for _ in range(n_rows):

with gr.Row():

for _ in range(n_cols):

with gr.Column():

yield

with gr.Blocks(title=title) as demo:

gr.HTML(f"<b>{title}</b>")

gr.Markdown(description)

input_models = list(MODEL_MAPPING)

input_data = gr.Radio(

list(DATA_MAPPING),

value="regular",

label="dataset"

)

input_n_clusters = gr.Slider(

minimum=1,

maximum=MAX_CLUSTERS,

value=4,

step=1,

label='Number of clusters'

)

n_rows = int(math.ceil(len(input_models) / N_COLS))

counter = 0

for _ in iter_grid(n_rows, N_COLS):

if counter >= len(input_models):

break

input_model = input_models[counter]

plot = gr.Plot(label=input_model)

fn = partial(cluster, clustering_algorithm=input_model)

input_data.change(fn=fn, inputs=[input_data, input_n_clusters], outputs=plot)

input_n_clusters.change(fn=fn, inputs=[input_data, input_n_clusters], outputs=plot)

counter += 1

demo.launch()

```

| gradio-app/gradio/blob/main/demo/clustering/run.ipynb |

Datasets server admin machine

> Admin endpoints

## Configuration

The worker can be configured using environment variables. They are grouped by scope.

### Admin service

Set environment variables to configure the application (`ADMIN_` prefix):

- `ADMIN_HF_ORGANIZATION`: the huggingface organization from which the authenticated user must be part of in order to access the protected routes, eg. "huggingface". If empty, the authentication is disabled. Defaults to None.

- `ADMIN_CACHE_REPORTS_NUM_RESULTS`: the number of results in /cache-reports/... endpoints. Defaults to `100`.

- `ADMIN_CACHE_REPORTS_WITH_CONTENT_NUM_RESULTS`: the number of results in /cache-reports-with-content/... endpoints. Defaults to `100`.

- `ADMIN_HF_TIMEOUT_SECONDS`: the timeout in seconds for the requests to the Hugging Face Hub. Defaults to `0.2` (200 ms).

- `ADMIN_HF_WHOAMI_PATH`: the path of the external whoami service, on the hub (see `HF_ENDPOINT`), eg. "/api/whoami-v2". Defaults to `/api/whoami-v2`.

- `ADMIN_MAX_AGE`: number of seconds to set in the `max-age` header on technical endpoints. Defaults to `10` (10 seconds).

### Uvicorn

The following environment variables are used to configure the Uvicorn server (`ADMIN_UVICORN_` prefix):

- `ADMIN_UVICORN_HOSTNAME`: the hostname. Defaults to `"localhost"`.

- `ADMIN_UVICORN_NUM_WORKERS`: the number of uvicorn workers. Defaults to `2`.

- `ADMIN_UVICORN_PORT`: the port. Defaults to `8000`.

### Prometheus

- `PROMETHEUS_MULTIPROC_DIR`: the directory where the uvicorn workers share their prometheus metrics. See https://github.com/prometheus/client_python#multiprocess-mode-eg-gunicorn. Defaults to empty, in which case every worker manages its own metrics, and the /metrics endpoint returns the metrics of a random worker.

### Common

See [../../libs/libcommon/README.md](../../libs/libcommon/README.md) for more information about the common configuration.

## Endpoints

The admin service provides endpoints:

- `/healthcheck`

- `/metrics`: give info about the cache and the queue

- `/cache-reports{processing_step}`: give detailed reports on the content of the cache for a processing step

- `/cache-reports-with-content{processing_step}`: give detailed reports on the content of the cache for a processing step, including the content itself, which can be heavy

- `/pending-jobs`: give the pending jobs, classed by queue and status (waiting or started)

- `/force-refresh{processing_step}`: force refresh cache entries for the processing step. It's a POST endpoint. Pass the requested parameters, depending on the processing step's input type:

- `dataset`: `?dataset={dataset}`

- `config`: `?dataset={dataset}&config={config}`

- `split`: `?dataset={dataset}&config={config}&split={split}`

- `/recreate-dataset`: deletes all the cache entries related to a specific dataset, then run all the steps in order. It's a POST endpoint. Pass the requested parameters:

- `dataset`: the dataset name

- `priority`: `low` (default), `normal` or `high`

| huggingface/datasets-server/blob/main/services/admin/README.md |

Contributor Covenant Code of Conduct

## Our Pledge

We as members, contributors, and leaders pledge to make participation in our

community a harassment-free experience for everyone, regardless of age, body

size, visible or invisible disability, ethnicity, sex characteristics, gender

identity and expression, level of experience, education, socio-economic status,

nationality, personal appearance, race, caste, color, religion, or sexual identity

and orientation.

We pledge to act and interact in ways that contribute to an open, welcoming,

diverse, inclusive, and healthy community.

## Our Standards

Examples of behavior that contributes to a positive environment for our

community include:

* Demonstrating empathy and kindness toward other people

* Being respectful of differing opinions, viewpoints, and experiences

* Giving and gracefully accepting constructive feedback

* Accepting responsibility and apologizing to those affected by our mistakes,

and learning from the experience

* Focusing on what is best not just for us as individuals, but for the

overall community

Examples of unacceptable behavior include:

* The use of sexualized language or imagery, and sexual attention or

advances of any kind

* Trolling, insulting or derogatory comments, and personal or political attacks

* Public or private harassment

* Publishing others' private information, such as a physical or email

address, without their explicit permission

* Other conduct which could reasonably be considered inappropriate in a

professional setting

## Enforcement Responsibilities

Community leaders are responsible for clarifying and enforcing our standards of

acceptable behavior and will take appropriate and fair corrective action in