text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

Pandas

[Pandas](https://github.com/pandas-dev/pandas) is a widely used Python data analysis toolkit.

Since it uses [fsspec](https://filesystem-spec.readthedocs.io) to read and write remote data, you can use the Hugging Face paths ([`hf://`](https://huggingface.co/docs/huggingface_hub/guides/hf_file_system#integrations)) to read and write data on the Hub:

First you need to [Login with your Hugging Face account](../huggingface_hub/quick-start#login), for example using:

```

huggingface-cli login

```

Then you can [Create a dataset repository](../huggingface_hub/quick-start#create-a-repository), for example using:

```python

from huggingface_hub import HfApi

HfApi().create_repo(repo_id="username/my_dataset", repo_type="dataset")

```

Finally, you can use [Hugging Face paths]([Hugging Face paths](https://huggingface.co/docs/huggingface_hub/guides/hf_file_system#integrations)) in Pandas:

```python

import pandas as pd

df.to_parquet("hf://datasets/username/my_dataset/data.parquet")

# or write in separate files if the dataset has train/validation/test splits

df_train.to_parquet("hf://datasets/username/my_dataset/train.parquet")

df_valid.to_parquet("hf://datasets/username/my_dataset/validation.parquet")

df_test .to_parquet("hf://datasets/username/my_dataset/test.parquet")

```

This creates a dataset repository `username/my_dataset` containing your Pandas dataset in Parquet format.

You can reload it later:

```python

import pandas as pd

df = pd.read_parquet("hf://datasets/username/my_dataset/data.parquet")

# or read from separate files if the dataset has train/validation/test splits

df_train = pd.read_parquet("hf://datasets/username/my_dataset/train.parquet")

df_valid = pd.read_parquet("hf://datasets/username/my_dataset/validation.parquet")

df_test = pd.read_parquet("hf://datasets/username/my_dataset/test.parquet")

```

To have more information on the Hugging Face paths and how they are implemented, please refer to the [the client library's documentation on the HfFileSystem](https://huggingface.co/docs/huggingface_hub/guides/hf_file_system).

| huggingface/hub-docs/blob/main/docs/hub/datasets-pandas.md |

!--⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Manage your Space

In this guide, we will see how to manage your Space runtime

([secrets](https://huggingface.co/docs/hub/spaces-overview#managing-secrets),

[hardware](https://huggingface.co/docs/hub/spaces-gpus), and [storage](https://huggingface.co/docs/hub/spaces-storage#persistent-storage)) using `huggingface_hub`.

## A simple example: configure secrets and hardware.

Here is an end-to-end example to create and setup a Space on the Hub.

**1. Create a Space on the Hub.**

```py

>>> from huggingface_hub import HfApi

>>> repo_id = "Wauplin/my-cool-training-space"

>>> api = HfApi()

# For example with a Gradio SDK

>>> api.create_repo(repo_id=repo_id, repo_type="space", space_sdk="gradio")

```

**1. (bis) Duplicate a Space.**

This can prove useful if you want to build up from an existing Space instead of starting from scratch.

It is also useful is you want control over the configuration/settings of a public Space. See [`duplicate_space`] for more details.

```py

>>> api.duplicate_space("multimodalart/dreambooth-training")

```

**2. Upload your code using your preferred solution.**

Here is an example to upload the local folder `src/` from your machine to your Space:

```py

>>> api.upload_folder(repo_id=repo_id, repo_type="space", folder_path="src/")

```

At this step, your app should already be running on the Hub for free !

However, you might want to configure it further with secrets and upgraded hardware.

**3. Configure secrets and variables**

Your Space might require some secret keys, token or variables to work.

See [docs](https://huggingface.co/docs/hub/spaces-overview#managing-secrets) for more details.

For example, an HF token to upload an image dataset to the Hub once generated from your Space.

```py

>>> api.add_space_secret(repo_id=repo_id, key="HF_TOKEN", value="hf_api_***")

>>> api.add_space_variable(repo_id=repo_id, key="MODEL_REPO_ID", value="user/repo")

```

Secrets and variables can be deleted as well:

```py

>>> api.delete_space_secret(repo_id=repo_id, key="HF_TOKEN")

>>> api.delete_space_variable(repo_id=repo_id, key="MODEL_REPO_ID")

```

<Tip>

From within your Space, secrets are available as environment variables (or

Streamlit Secrets Management if using Streamlit). No need to fetch them via the API!

</Tip>

<Tip warning={true}>

Any change in your Space configuration (secrets or hardware) will trigger a restart of your app.

</Tip>

**Bonus: set secrets and variables when creating or duplicating the Space!**

Secrets and variables can be set when creating or duplicating a space:

```py

>>> api.create_repo(

... repo_id=repo_id,

... repo_type="space",

... space_sdk="gradio",

... space_secrets=[{"key"="HF_TOKEN", "value"="hf_api_***"}, ...],

... space_variables=[{"key"="MODEL_REPO_ID", "value"="user/repo"}, ...],

... )

```

```py

>>> api.duplicate_space(

... from_id=repo_id,

... secrets=[{"key"="HF_TOKEN", "value"="hf_api_***"}, ...],

... variables=[{"key"="MODEL_REPO_ID", "value"="user/repo"}, ...],

... )

```

**4. Configure the hardware**

By default, your Space will run on a CPU environment for free. You can upgrade the hardware

to run it on GPUs. A payment card or a community grant is required to access upgrade your

Space. See [docs](https://huggingface.co/docs/hub/spaces-gpus) for more details.

```py

# Use `SpaceHardware` enum

>>> from huggingface_hub import SpaceHardware

>>> api.request_space_hardware(repo_id=repo_id, hardware=SpaceHardware.T4_MEDIUM)

# Or simply pass a string value

>>> api.request_space_hardware(repo_id=repo_id, hardware="t4-medium")

```

Hardware updates are not done immediately as your Space has to be reloaded on our servers.

At any time, you can check on which hardware your Space is running to see if your request

has been met.

```py

>>> runtime = api.get_space_runtime(repo_id=repo_id)

>>> runtime.stage

"RUNNING_BUILDING"

>>> runtime.hardware

"cpu-basic"

>>> runtime.requested_hardware

"t4-medium"

```

You now have a Space fully configured. Make sure to downgrade your Space back to "cpu-classic"

when you are done using it.

**Bonus: request hardware when creating or duplicating the Space!**

Upgraded hardware will be automatically assigned to your Space once it's built.

```py

>>> api.create_repo(

... repo_id=repo_id,

... repo_type="space",

... space_sdk="gradio"

... space_hardware="cpu-upgrade",

... space_storage="small",

... space_sleep_time="7200", # 2 hours in secs

... )

```

```py

>>> api.duplicate_space(

... from_id=repo_id,

... hardware="cpu-upgrade",

... storage="small",

... sleep_time="7200", # 2 hours in secs

... )

```

**5. Pause and restart your Space**

By default if your Space is running on an upgraded hardware, it will never be stopped. However to avoid getting billed,

you might want to pause it when you are not using it. This is possible using [`pause_space`]. A paused Space will be

inactive until the owner of the Space restarts it, either with the UI or via API using [`restart_space`].

For more details about paused mode, please refer to [this section](https://huggingface.co/docs/hub/spaces-gpus#pause)

```py

# Pause your Space to avoid getting billed

>>> api.pause_space(repo_id=repo_id)

# (...)

# Restart it when you need it

>>> api.restart_space(repo_id=repo_id)

```

Another possibility is to set a timeout for your Space. If your Space is inactive for more than the timeout duration,

it will go to sleep. Any visitor landing on your Space will start it back up. You can set a timeout using

[`set_space_sleep_time`]. For more details about sleeping mode, please refer to [this section](https://huggingface.co/docs/hub/spaces-gpus#sleep-time).

```py

# Put your Space to sleep after 1h of inactivity

>>> api.set_space_sleep_time(repo_id=repo_id, sleep_time=3600)

```

Note: if you are using a 'cpu-basic' hardware, you cannot configure a custom sleep time. Your Space will automatically

be paused after 48h of inactivity.

**Bonus: set a sleep time while requesting hardware**

Upgraded hardware will be automatically assigned to your Space once it's built.

```py

>>> api.request_space_hardware(repo_id=repo_id, hardware=SpaceHardware.T4_MEDIUM, sleep_time=3600)

```

**Bonus: set a sleep time when creating or duplicating the Space!**

```py

>>> api.create_repo(

... repo_id=repo_id,

... repo_type="space",

... space_sdk="gradio"

... space_hardware="t4-medium",

... space_sleep_time="3600",

... )

```

```py

>>> api.duplicate_space(

... from_id=repo_id,

... hardware="t4-medium",

... sleep_time="3600",

... )

```

**6. Add persistent storage to your Space**

You can choose the storage tier of your choice to access disk space that persists across restarts of your Space. This means you can read and write from disk like you would with a traditional hard drive. See [docs](https://huggingface.co/docs/hub/spaces-storage#persistent-storage) for more details.

```py

>>> from huggingface_hub import SpaceStorage

>>> api.request_space_storage(repo_id=repo_id, storage=SpaceStorage.LARGE)

```

You can also delete your storage, losing all the data permanently.

```py

>>> api.delete_space_storage(repo_id=repo_id)

```

Note: You cannot decrease the storage tier of your space once it's been granted. To do so,

you must delete the storage first then request the new desired tier.

**Bonus: request storage when creating or duplicating the Space!**

```py

>>> api.create_repo(

... repo_id=repo_id,

... repo_type="space",

... space_sdk="gradio"

... space_storage="large",

... )

```

```py

>>> api.duplicate_space(

... from_id=repo_id,

... storage="large",

... )

```

## More advanced: temporarily upgrade your Space !

Spaces allow for a lot of different use cases. Sometimes, you might want

to temporarily run a Space on a specific hardware, do something and then shut it down. In

this section, we will explore how to benefit from Spaces to finetune a model on demand.

This is only one way of solving this particular problem. It has to be taken as a suggestion

and adapted to your use case.

Let's assume we have a Space to finetune a model. It is a Gradio app that takes as input

a model id and a dataset id. The workflow is as follows:

0. (Prompt the user for a model and a dataset)

1. Load the model from the Hub.

2. Load the dataset from the Hub.

3. Finetune the model on the dataset.

4. Upload the new model to the Hub.

Step 3. requires a custom hardware but you don't want your Space to be running all the time on a paid

GPU. A solution is to dynamically request hardware for the training and shut it

down afterwards. Since requesting hardware restarts your Space, your app must somehow "remember"

the current task it is performing. There are multiple ways of doing this. In this guide

we will see one solution using a Dataset as "task scheduler".

### App skeleton

Here is what your app would look like. On startup, check if a task is scheduled and if yes,

run it on the correct hardware. Once done, set back hardware to the free-plan CPU and

prompt the user for a new task.

<Tip warning={true}>

Such a workflow does not support concurrent access as normal demos.

In particular, the interface will be disabled when training occurs.

It is preferable to set your repo as private to ensure you are the only user.

</Tip>

```py

# Space will need your token to request hardware: set it as a Secret !

HF_TOKEN = os.environ.get("HF_TOKEN")

# Space own repo_id

TRAINING_SPACE_ID = "Wauplin/dreambooth-training"

from huggingface_hub import HfApi, SpaceHardware

api = HfApi(token=HF_TOKEN)

# On Space startup, check if a task is scheduled. If yes, finetune the model. If not,

# display an interface to request a new task.

task = get_task()

if task is None:

# Start Gradio app

def gradio_fn(task):

# On user request, add task and request hardware

add_task(task)

api.request_space_hardware(repo_id=TRAINING_SPACE_ID, hardware=SpaceHardware.T4_MEDIUM)

gr.Interface(fn=gradio_fn, ...).launch()

else:

runtime = api.get_space_runtime(repo_id=TRAINING_SPACE_ID)

# Check if Space is loaded with a GPU.

if runtime.hardware == SpaceHardware.T4_MEDIUM:

# If yes, finetune base model on dataset !

train_and_upload(task)

# Then, mark the task as "DONE"

mark_as_done(task)

# DO NOT FORGET: set back CPU hardware

api.request_space_hardware(repo_id=TRAINING_SPACE_ID, hardware=SpaceHardware.CPU_BASIC)

else:

api.request_space_hardware(repo_id=TRAINING_SPACE_ID, hardware=SpaceHardware.T4_MEDIUM)

```

### Task scheduler

Scheduling tasks can be done in many ways. Here is an example how it could be done using

a simple CSV stored as a Dataset.

```py

# Dataset ID in which a `tasks.csv` file contains the tasks to perform.

# Here is a basic example for `tasks.csv` containing inputs (base model and dataset)

# and status (PENDING or DONE).

# multimodalart/sd-fine-tunable,Wauplin/concept-1,DONE

# multimodalart/sd-fine-tunable,Wauplin/concept-2,PENDING

TASK_DATASET_ID = "Wauplin/dreambooth-task-scheduler"

def _get_csv_file():

return hf_hub_download(repo_id=TASK_DATASET_ID, filename="tasks.csv", repo_type="dataset", token=HF_TOKEN)

def get_task():

with open(_get_csv_file()) as csv_file:

csv_reader = csv.reader(csv_file, delimiter=',')

for row in csv_reader:

if row[2] == "PENDING":

return row[0], row[1] # model_id, dataset_id

def add_task(task):

model_id, dataset_id = task

with open(_get_csv_file()) as csv_file:

with open(csv_file, "r") as f:

tasks = f.read()

api.upload_file(

repo_id=repo_id,

repo_type=repo_type,

path_in_repo="tasks.csv",

# Quick and dirty way to add a task

path_or_fileobj=(tasks + f"\n{model_id},{dataset_id},PENDING").encode()

)

def mark_as_done(task):

model_id, dataset_id = task

with open(_get_csv_file()) as csv_file:

with open(csv_file, "r") as f:

tasks = f.read()

api.upload_file(

repo_id=repo_id,

repo_type=repo_type,

path_in_repo="tasks.csv",

# Quick and dirty way to set the task as DONE

path_or_fileobj=tasks.replace(

f"{model_id},{dataset_id},PENDING",

f"{model_id},{dataset_id},DONE"

).encode()

)

``` | huggingface/huggingface_hub/blob/main/docs/source/en/guides/manage-spaces.md |

his simple demo takes advantage of Gradio's HighlightedText, JSON and HTML outputs to create a clear NER segmentation. | gradio-app/gradio/blob/main/demo/text_analysis/DESCRIPTION.md |

!---

Copyright 2020 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

# Research projects

This folder contains various research projects using 🤗 Transformers. They are not maintained and require a specific

version of 🤗 Transformers that is indicated in the requirements file of each folder. Updating them to the most recent version of the library will require some work.

To use any of them, just run the command

```

pip install -r requirements.txt

```

inside the folder of your choice.

If you need help with any of those, contact the author(s), indicated at the top of the `README` of each folder.

| huggingface/transformers/blob/main/examples/research_projects/README.md |

`@gradio/video`

```javascript

<script>

import { BaseInteractiveVideo, BaseStaticVideo, BasePlayer } from "@gradio/button";

import type { FileData } from "@gradio/upload";

import type { Gradio } from "@gradio/utils";

export let _video: FileData;

</script>

<StaticVideo

value={_video}

{label}

{show_label}

{autoplay}

{show_share_button}

i18n={gradio.i18n}

/>

<Video

value={_video}

{label}

{show_label}

source={"upload"}

{mirror_webcam}

{include_audio}

{autoplay}

i18n={gradio.i18n}

>

<p>Upload Video Here</p>

</Video>

<BasePlayer

src={value.data}

{autoplay}

on:play

on:pause

on:stop

on:end

mirror={false}

{label}

/>

```

| gradio-app/gradio/blob/main/js/video/README.md |

Table Classes

Each `Dataset` object is backed by a PyArrow Table.

A Table can be loaded from either the disk (memory mapped) or in memory.

Several Table types are available, and they all inherit from [`table.Table`].

## Table

[[autodoc]] datasets.table.Table

- validate

- equals

- to_batches

- to_pydict

- to_pandas

- to_string

- field

- column

- itercolumns

- schema

- columns

- num_columns

- num_rows

- shape

- nbytes

## InMemoryTable

[[autodoc]] datasets.table.InMemoryTable

- validate

- equals

- to_batches

- to_pydict

- to_pandas

- to_string

- field

- column

- itercolumns

- schema

- columns

- num_columns

- num_rows

- shape

- nbytes

- column_names

- slice

- filter

- flatten

- combine_chunks

- cast

- replace_schema_metadata

- add_column

- append_column

- remove_column

- set_column

- rename_columns

- select

- drop

- from_file

- from_buffer

- from_pandas

- from_arrays

- from_pydict

- from_batches

## MemoryMappedTable

[[autodoc]] datasets.table.MemoryMappedTable

- validate

- equals

- to_batches

- to_pydict

- to_pandas

- to_string

- field

- column

- itercolumns

- schema

- columns

- num_columns

- num_rows

- shape

- nbytes

- column_names

- slice

- filter

- flatten

- combine_chunks

- cast

- replace_schema_metadata

- add_column

- append_column

- remove_column

- set_column

- rename_columns

- select

- drop

- from_file

## ConcatenationTable

[[autodoc]] datasets.table.ConcatenationTable

- validate

- equals

- to_batches

- to_pydict

- to_pandas

- to_string

- field

- column

- itercolumns

- schema

- columns

- num_columns

- num_rows

- shape

- nbytes

- column_names

- slice

- filter

- flatten

- combine_chunks

- cast

- replace_schema_metadata

- add_column

- append_column

- remove_column

- set_column

- rename_columns

- select

- drop

- from_blocks

- from_tables

## Utils

[[autodoc]] datasets.table.concat_tables

[[autodoc]] datasets.table.list_table_cache_files

| huggingface/datasets/blob/main/docs/source/package_reference/table_classes.mdx |

--

title: Training Stable Diffusion with Dreambooth using Diffusers

thumbnail: /blog/assets/sd_dreambooth_training/thumbnail.jpg

authors:

- user: valhalla

- user: pcuenq

- user: 9of9

guest: true

---

# Training Stable Diffusion with Dreambooth using 🧨 Diffusers

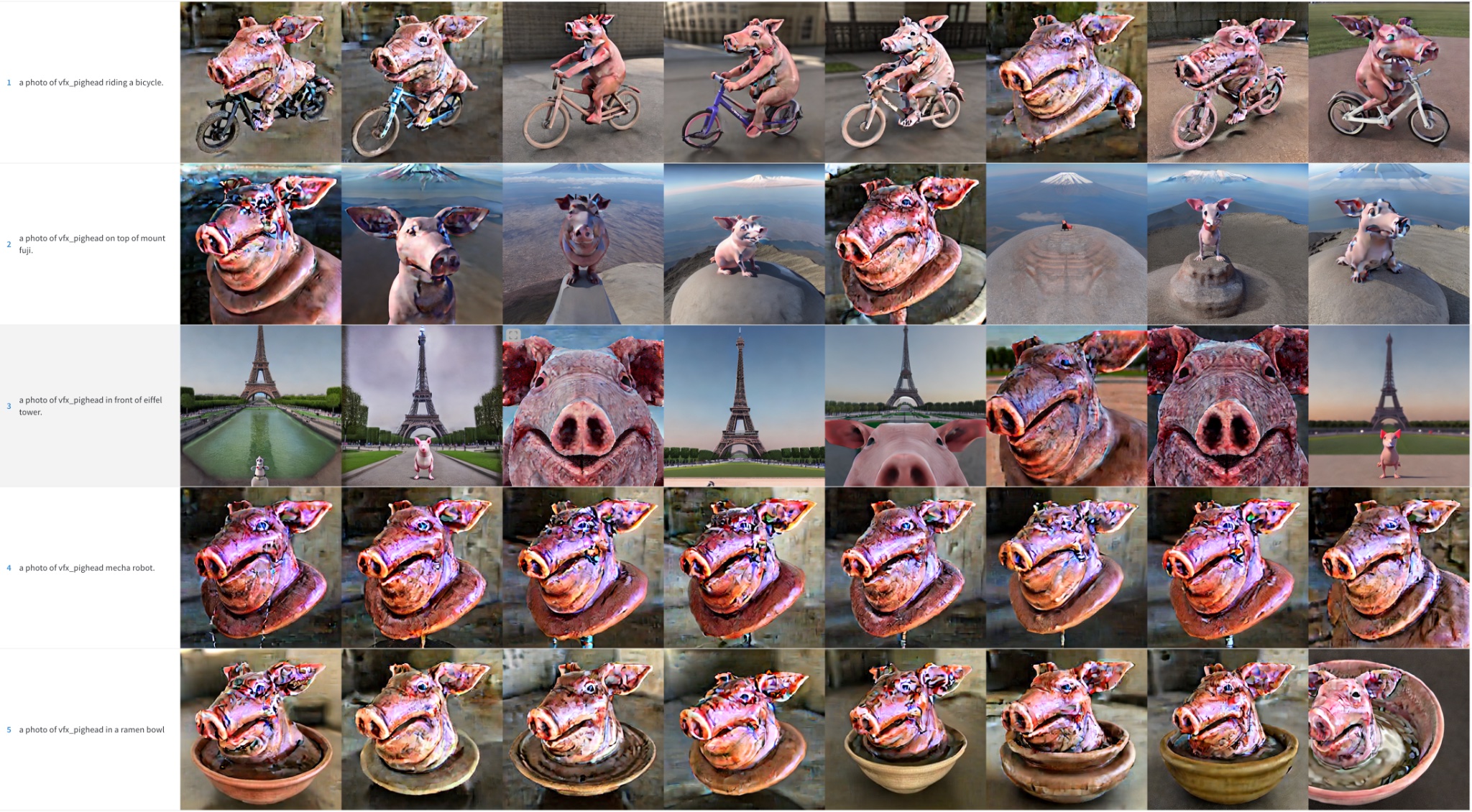

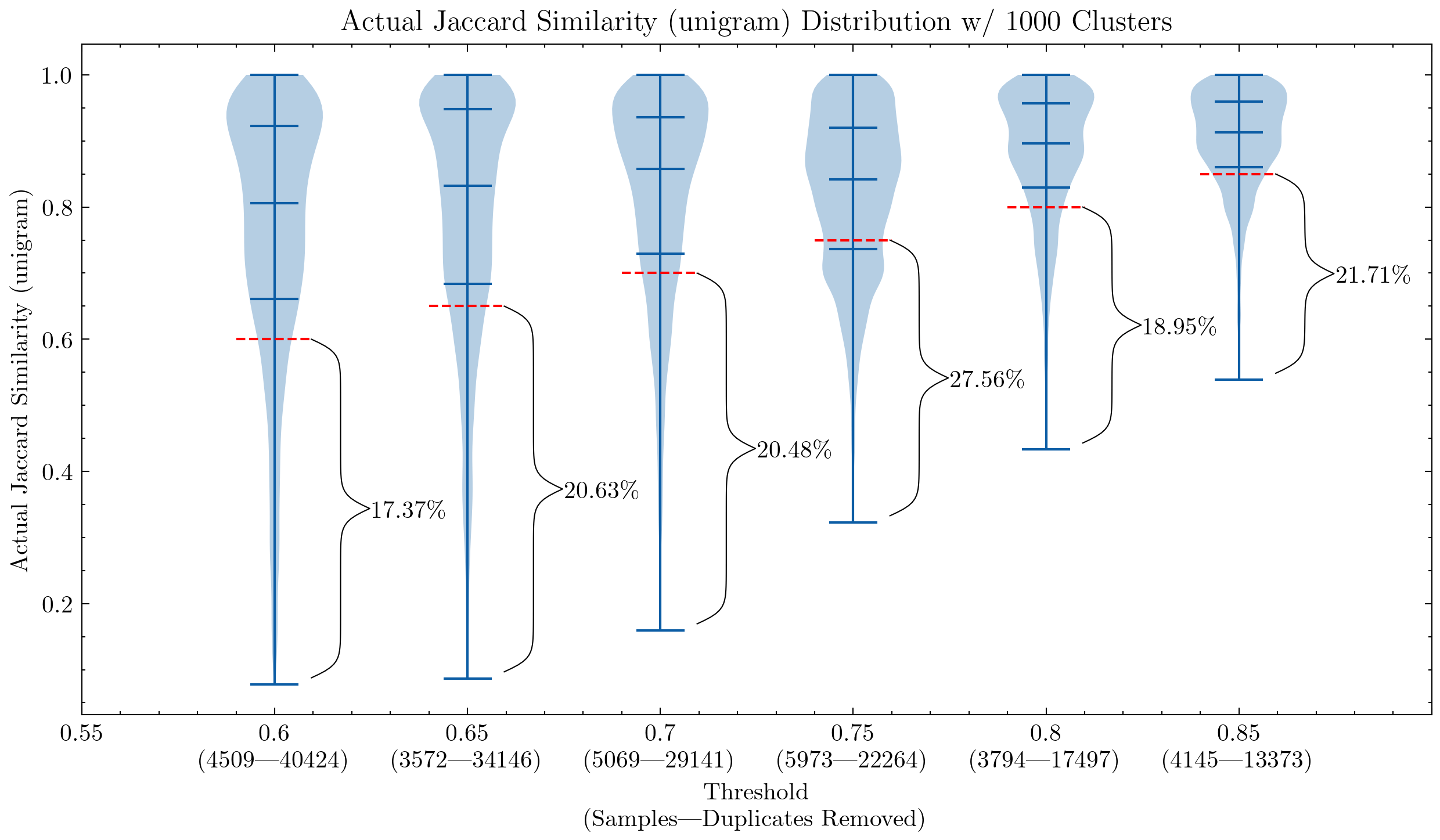

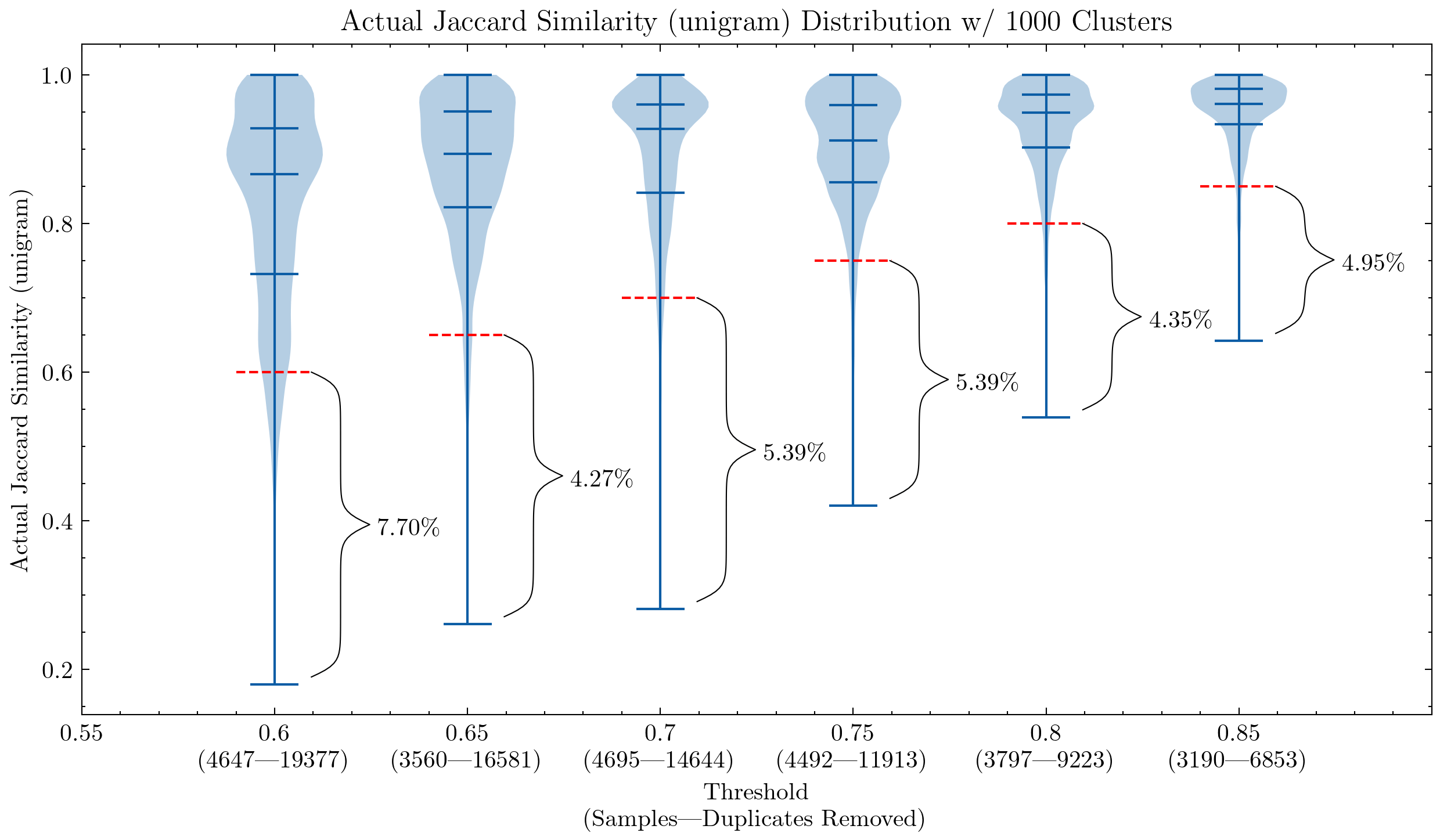

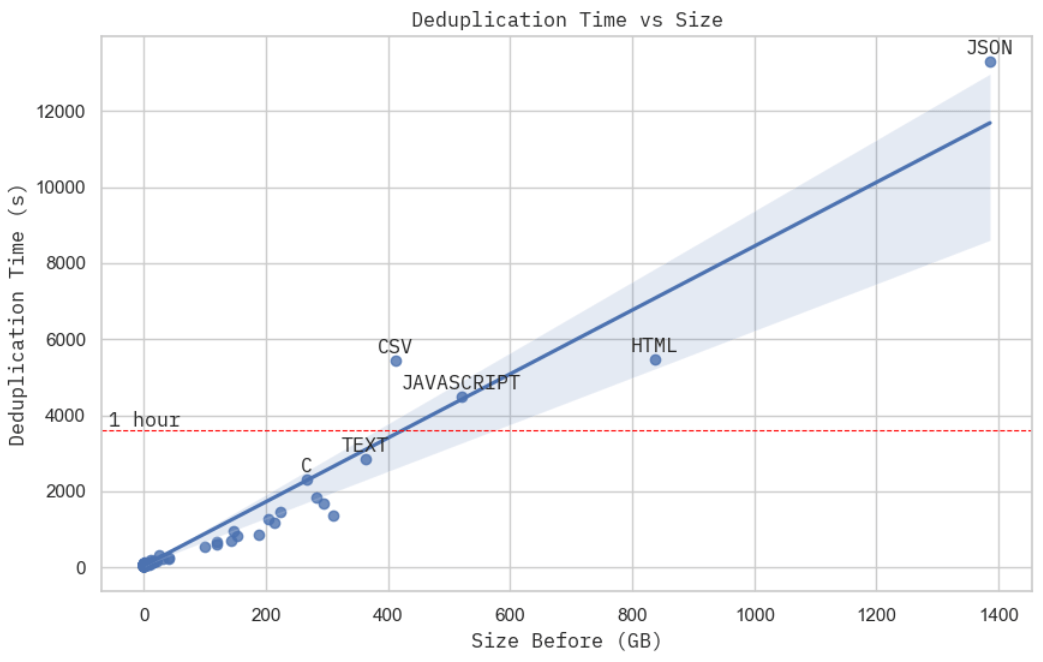

[Dreambooth](https://dreambooth.github.io/) is a technique to teach new concepts to [Stable Diffusion](https://huggingface.co/blog/stable_diffusion) using a specialized form of fine-tuning. Some people have been using it with a few of their photos to place themselves in fantastic situations, while others are using it to incorporate new styles. [🧨 Diffusers](https://github.com/huggingface/diffusers) provides a Dreambooth [training script](https://github.com/huggingface/diffusers/tree/main/examples/dreambooth). It doesn't take long to train, but it's hard to select the right set of hyperparameters and it's easy to overfit.

We conducted a lot of experiments to analyze the effect of different settings in Dreambooth. This post presents our findings and some tips to improve your results when fine-tuning Stable Diffusion with Dreambooth.

Before we start, please be aware that this method should never be used for malicious purposes, to generate harm in any way, or to impersonate people without their knowledge. Models trained with it are still bound by the [CreativeML Open RAIL-M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) that governs distribution of Stable Diffusion models.

_Note: a previous version of this post was published [as a W&B report](https://wandb.ai/psuraj/dreambooth/reports/Dreambooth-Training-Analysis--VmlldzoyNzk0NDc3)_.

## TL;DR: Recommended Settings

* Dreambooth tends to overfit quickly. To get good-quality images, we must find a 'sweet spot' between the number of training steps and the learning rate. We recommend using a low learning rate and progressively increasing the number of steps until the results are satisfactory.

* Dreambooth needs more training steps for faces. In our experiments, 800-1200 steps worked well when using a batch size of 2 and LR of 1e-6.

* Prior preservation is important to avoid overfitting when training on faces. For other subjects, it doesn't seem to make a huge difference.

* If you see that the generated images are noisy or the quality is degraded, it likely means overfitting. First, try the steps above to avoid it. If the generated images are still noisy, use the DDIM scheduler or run more inference steps (~100 worked well in our experiments).

* Training the text encoder in addition to the UNet has a big impact on quality. Our best results were obtained using a combination of text encoder fine-tuning, low LR, and a suitable number of steps. However, fine-tuning the text encoder requires more memory, so a GPU with at least 24 GB of RAM is ideal. Using techniques like 8-bit Adam, `fp16` training or gradient accumulation, it is possible to train on 16 GB GPUs like the ones provided by Google Colab or Kaggle.

* Fine-tuning with or without EMA produced similar results.

* There's no need to use the `sks` word to train Dreambooth. One of the first implementations used it because it was a rare token in the vocabulary, but it's actually a kind of rifle. Our experiments, and those by for example [@nitrosocke](https://huggingface.co/nitrosocke) show that it's ok to select terms that you'd naturally use to describe your target.

## Learning Rate Impact

Dreambooth overfits very quickly. To get good results, tune the learning rate and the number of training steps in a way that makes sense for your dataset. In our experiments (detailed below), we fine-tuned on four different datasets with high and low learning rates. In all cases, we got better results with a low learning rate.

## Experiments Settings

All our experiments were conducted using the [`train_dreambooth.py`](https://github.com/huggingface/diffusers/tree/main/examples/dreambooth) script with the `AdamW` optimizer on 2x 40GB A100s. We used the same seed and kept all hyperparameters equal across runs, except LR, number of training steps and the use of prior preservation.

For the first 3 examples (various objects), we fine-tuned the model with a batch size of 4 (2 per GPU) for 400 steps. We used a high learning rate of `5e-6` and a low learning rate of `2e-6`. No prior preservation was used.

The last experiment attempts to add a human subject to the model. We used prior preservation with a batch size of 2 (1 per GPU), 800 and 1200 steps in this case. We used a high learning rate of `5e-6` and a low learning rate of `2e-6`.

Note that you can use 8-bit Adam, `fp16` training or gradient accumulation to reduce memory requirements and run similar experiments on GPUs with 16 GB of memory.

### Cat Toy

High Learning Rate (`5e-6`)

Low Learning Rate (`2e-6`)

### Pighead

High Learning Rate (`5e-6`). Note that the color artifacts are noise remnants – running more inference steps could help resolve some of those details.

Low Learning Rate (`2e-6`)

### Mr. Potato Head

High Learning Rate (`5e-6`). Note that the color artifacts are noise remnants – running more inference steps could help resolve some of those details.

Low Learning Rate (`2e-6`)

### Human Face

We tried to incorporate the Kramer character from Seinfeld into Stable Diffusion. As previously mentioned, we trained for more steps with a smaller batch size. Even so, the results were not stellar. For the sake of brevity, we have omitted these sample images and defer the reader to the next sections, where face training became the focus of our efforts.

### Summary of Initial Results

To get good results training Stable Diffusion with Dreambooth, it's important to tune the learning rate and training steps for your dataset.

* High learning rates and too many training steps will lead to overfitting. The model will mostly generate images from your training data, no matter what prompt is used.

* Low learning rates and too few steps will lead to underfitting: the model will not be able to generate the concept we were trying to incorporate.

Faces are harder to train. In our experiments, a learning rate of `2e-6` with `400` training steps works well for objects but faces required `1e-6` (or `2e-6`) with ~1200 steps.

Image quality degrades a lot if the model overfits, and this happens if:

* The learning rate is too high.

* We run too many training steps.

* In the case of faces, when no prior preservation is used, as shown in the next section.

## Using Prior Preservation when training Faces

Prior preservation is a technique that uses additional images of the same class we are trying to train as part of the fine-tuning process. For example, if we try to incorporate a new person into the model, the _class_ we'd want to preserve could be _person_. Prior preservation tries to reduce overfitting by using photos of the new person combined with photos of other people. The nice thing is that we can generate those additional class images using the Stable Diffusion model itself! The training script takes care of that automatically if you want, but you can also provide a folder with your own prior preservation images.

Prior preservation, 1200 steps, lr=`2e-6`.

No prior preservation, 1200 steps, lr=`2e-6`.

As you can see, results are better when prior preservation is used, but there are still noisy blotches. It's time for some additional tricks!

## Effect of Schedulers

In the previous examples, we used the `PNDM` scheduler to sample images during the inference process. We observed that when the model overfits, `DDIM` usually works much better than `PNDM` and `LMSDiscrete`. In addition, quality can be improved by running inference for more steps: 100 seems to be a good choice. The additional steps help resolve some of the noise patches into image details.

`PNDM`, Kramer face

`LMSDiscrete`, Kramer face. Results are terrible!

`DDIM`, Kramer face. Much better

A similar behaviour can be observed for other subjects, although to a lesser extent.

`PNDM`, Potato Head

`LMSDiscrete`, Potato Head

`DDIM`, Potato Head

## Fine-tuning the Text Encoder

The original Dreambooth paper describes a method to fine-tune the UNet component of the model but keeps the text encoder frozen. However, we observed that fine-tuning the encoder produces better results. We experimented with this approach after seeing it used in other Dreambooth implementations, and the results are striking!

Frozen text encoder

Fine-tuned text encoder

Fine-tuning the text encoder produces the best results, especially with faces. It generates more realistic images, it's less prone to overfitting and it also achieves better prompt interpretability, being able to handle more complex prompts.

## Epilogue: Textual Inversion + Dreambooth

We also ran a final experiment where we combined [Textual Inversion](https://textual-inversion.github.io) with Dreambooth. Both techniques have a similar goal, but their approaches are different.

In this experiment we first ran textual inversion for 2000 steps. From that model, we then ran Dreambooth for an additional 500 steps using a learning rate of `1e-6`. These are the results:

We think the results are much better than doing plain Dreambooth but not as good as when we fine-tune the whole text encoder. It seems to copy the style of the training images a bit more, so it could be overfitting to them. We didn't explore this combination further, but it could be an interesting alternative to improve Dreambooth and still fit the process in a 16GB GPU. Feel free to explore and tell us about your results!

| huggingface/blog/blob/main/dreambooth.md |

--

title: 'Faster Text Generation with TensorFlow and XLA'

thumbnail: /blog/assets/91_tf_xla_generate/thumbnail.png

authors:

- user: joaogante

---

# Faster Text Generation with TensorFlow and XLA

<em>TL;DR</em>: Text Generation on 🤗 `transformers` using TensorFlow can now be compiled with XLA. It is up to 100x

faster than before, and [even faster than PyTorch](https://huggingface.co/spaces/joaogante/tf_xla_generate_benchmarks)

-- check the colab below!

<a target="_blank" href="https://colab.research.google.com/github/huggingface/blog/blob/main/notebooks/91_tf_xla_generate.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

## Text Generation

As the quality of large language models increased, so did our expectations of what those models could do. Especially

since the release of OpenAI's [GPT-2](https://openai.com/blog/better-language-models/), models with text

generation capabilities have been in the spotlight. And for legitimate reasons -- these models can be used to

summarize, translate, and they even have demonstrated zero-shot learning capabilities on some language tasks.

This blog post will show how to take the most of this technology with TensorFlow.

The 🤗 `transformers` library started with NLP models, so it is natural that text generation is of utmost

importance to us.

It is part of Hugging Face democratization efforts to ensure it is accessible, easily controllable, and efficient.

There is a previous [blog post](https://huggingface.co/blog/how-to-generate) about the different types of text

generation. Nevertheless, below there's a quick recap of the core functionality -- feel free to

[skip it](#tensorflow-and-xla) if you're

familiar with our `generate` function and want to jump straight into TensorFlow's specificities.

Let's start with the basics. Text generation can be deterministic or stochastic, depending on the

`do_sample` flag. By default it's set to `False`, causing the output to be deterministic, which is also known as

Greedy Decoding.

When it's set to `True`, also known as Sampling, the output will be stochastic, but you can still

obtain reproducible results through the `seed` argument (with the same format as in [stateless TensorFlow random

number generation](https://www.tensorflow.org/api_docs/python/tf/random/stateless_categorical#args)).

As a rule of thumb, you want deterministic generation if you wish

to obtain factual information from the model and stochastic generation if you're aiming at more creative outputs.

```python

# Requires transformers >= 4.21.0;

# Sampling outputs may differ, depending on your hardware.

from transformers import AutoTokenizer, TFAutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gpt2")

model = TFAutoModelForCausalLM.from_pretrained("gpt2")

model.config.pad_token_id = model.config.eos_token_id

inputs = tokenizer(["TensorFlow is"], return_tensors="tf")

generated = model.generate(**inputs, do_sample=True, seed=(42, 0))

print("Sampling output: ", tokenizer.decode(generated[0]))

# > Sampling output: TensorFlow is a great learning platform for learning about

# data structure and structure in data science..

```

Depending on the target application, longer outputs might be desirable. You can control the length of the generation

output with `max_new_tokens`, keeping in mind that longer generations will require more resources.

```python

generated = model.generate(

**inputs, do_sample=True, seed=(42, 0), max_new_tokens=5

)

print("Limiting to 5 new tokens:", tokenizer.decode(generated[0]))

# > Limiting to 5 new tokens: TensorFlow is a great learning platform for

generated = model.generate(

**inputs, do_sample=True, seed=(42, 0), max_new_tokens=30

)

print("Limiting to 30 new tokens:", tokenizer.decode(generated[0]))

# > Limiting to 30 new tokens: TensorFlow is a great learning platform for

# learning about data structure and structure in data science................

```

Sampling has a few knobs you can play with to control randomness. The most important is `temperature`, which sets the overall entropy

of your output -- values below `1.0` will prioritize sampling tokens with a higher likelihood, whereas values above `1.0`

do the opposite. Setting it to `0.0` reduces the behavior to Greedy Decoding, whereas very large values approximate

uniform sampling.

```python

generated = model.generate(

**inputs, do_sample=True, seed=(42, 0), temperature=0.7

)

print("Temperature 0.7: ", tokenizer.decode(generated[0]))

# > Temperature 0.7: TensorFlow is a great way to do things like this........

generated = model.generate(

**inputs, do_sample=True, seed=(42, 0), temperature=1.5

)

print("Temperature 1.5: ", tokenizer.decode(generated[0]))

# > Temperature 1.5: TensorFlow is being developed for both Cython and Bamboo.

# On Bamboo...

```

Contrarily to Sampling, Greedy Decoding will always pick the most likely token at each iteration of generation.

However, it often results in sub-optimal outputs. You can increase the quality of the results through the `num_beams`

argument. When it is larger than `1`, it triggers Beam Search, which continuously explores high-probability sequences.

This exploration comes at the cost of additional resources and computational time.

```python

generated = model.generate(**inputs, num_beams=2)

print("Beam Search output:", tokenizer.decode(generated[0]))

# > Beam Search output: TensorFlow is an open-source, open-source,

# distributed-source application framework for the

```

Finally, when running Sampling or Beam Search, you can use `num_return_sequences` to return several sequences. For

Sampling it is equivalent to running generate multiple times from the same input prompt, while for Beam Search it

returns the highest scoring generated beams in descending order.

```python

generated = model.generate(**inputs, num_beams=2, num_return_sequences=2)

print(

"All generated hypotheses:",

"\n".join(tokenizer.decode(out) for out in generated)

)

# > All generated hypotheses: TensorFlow is an open-source, open-source,

# distributed-source application framework for the

# > TensorFlow is an open-source, open-source, distributed-source application

# framework that allows

```

The basics of text generation, as you can see, are straightforward to control. However, there are many options

not covered in the examples above, and it's encouraged to read the

[documentation](https://huggingface.co/docs/transformers/main/en/main_classes/text_generation#transformers.generation_tf_utils.TFGenerationMixin.generate)

for advanced use cases.

Sadly, when you run `generate` with TensorFlow, you might notice that it takes a while to execute.

If your target application expects low latency or a large amount of input prompts, running text generation with

TensorFlow looks like an expensive endeavour. 😬

Fear not, for the remainder of this blog post aims to demonstrate that one line of code can make a drastic improvement.

If you'd rather jump straight into action,

[the colab](https://colab.research.google.com/github/huggingface/blog/blob/main/notebooks/91_tf_xla_generate.ipynb)

has an interactive example you can fiddle with!

## TensorFlow and XLA

[XLA](https://www.tensorflow.org/xla), or Accelerated Linear Algebra, is a compiler originally developed to accelerate

TensorFlow models. Nowadays, it is also the compiler behind [JAX](https://github.com/google/jax), and it can even

be [used with PyTorch](https://huggingface.co/blog/pytorch-xla). Although the word "compiler" might sound daunting for

some, XLA is simple to use with TensorFlow -- it comes packaged inside the `tensorflow` library, and it can be

triggered with the `jit_compile` argument in any graph-creating function.

For those of you familiar with TensorFlow 1 🧓, the concept of a TensorFlow graph comes naturally, as it was the only

mode of operation. First, you defined the operations in a declarative fashion to create a graph. Afterwards, you could

pipe inputs through the graph and observe the outputs. Fast, efficient, but painful to debug. With TensorFlow 2 came

Eager Execution and the ability to code the models imperatively -- the TensorFlow team explains the difference in more

detail in [their blog post](https://blog.tensorflow.org/2019/01/what-are-symbolic-and-imperative-apis.html).

Hugging Face writes their TensorFlow models with Eager Execution in mind. Transparency is a core value, and being able

to inspect the model internals at any point is very benefitial to that end. However, that does mean that some uses of

the models do not benefit from the graph mode performance advantages out of the box (e.g. when calling `model(args)`).

Fortunately, the TensorFlow team has users like us covered 🥳! Wrapping a function containing TensorFlow code with

[`tf.function`](https://www.tensorflow.org/api_docs/python/tf/function) will attempt to convert it into a graph when

you call the wrapped function. If you're training a model, calling `model.compile()` (without `run_eagerly=True`) does

precisely that wrapping, so that you benefit from graph mode when you call `model.fit()`. Since `tf.function` can be

used in any function containing TensorFlow code, it means you can use it on functions that go beyond model inference,

creating a single optimized graph.

Now that you know how to create TensorFlow graphs, compiling them with XLA is straightforward -- simply add `jit_compile=True`

as an argument to the functions mentioned above (`tf.function` and `tf.keras.Model.compile`). Assuming everything went well

(more on that below) and that you are using a GPU or a TPU, you will notice that the first call will take a while, but

that the remaining ones are much, much faster. Here's a simple example of a function that performs model inference and some post-processing of its outputs:

```python

# Note: execution times are deeply dependent on hardware -- a 3090 was used here.

import tensorflow as tf

from transformers import AutoTokenizer, TFAutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gpt2")

model = TFAutoModelForCausalLM.from_pretrained("gpt2")

inputs = tokenizer(["TensorFlow is"], return_tensors="tf")

def most_likely_next_token(inputs):

model_output = model(inputs)

return tf.argmax(model_output.logits[:, -1, :], axis=-1)

print("Calling regular function with TensorFlow code...")

most_likely_next_token(inputs)

# > Execution time -- 48.8 ms

```

In one line, you can create an XLA-accelerated function from the function above.

```python

xla_most_likely_next_token = tf.function(most_likely_next_token, jit_compile=True)

print("Calling XLA function... (for the first time -- will be slow)")

xla_most_likely_next_token(inputs)

# > Execution time -- 3951.0 ms

print("Calling XLA function... (for the second time -- will be fast)")

xla_most_likely_next_token(inputs)

# > Execution time -- 1.6 ms

```

## Text Generation using TensorFlow with XLA

As with any optimization procedure, there is no free lunch -- XLA is no exception. From the perspective of a text

generation user, there is only one technical aspect that you need to keep in mind. Without digging too much into

[details](https://www.tensorflow.org/guide/function#rules_of_tracing), XLA used in this fashion does just-in-time (JIT)

compilation of a `tf.function` when you call it, which relies on polymorphism.

When you compile a function this way, XLA keeps track of the shape and type of every tensor, as well as the data of

every non-tensor function input. The function is compiled to a binary, and every time it is called with the same tensor

shape and type (with ANY tensor data) and the same non-tensor arguments, the compiled function can be reused.

Contrarily, if you call the function with a different shape or type in an input tensor, or if you use a different

non-tensor argument, then a new costly compilation step will take place. Summarized in a simple example:

```python

# Note: execution times are deeply dependent on hardware -- a 3090 was used here.

import tensorflow as tf

@tf.function(jit_compile=True)

def max_plus_constant(tensor, scalar):

return tf.math.reduce_max(tensor) + scalar

# Slow: XLA compilation will kick in, as it is the first call

max_plus_constant(tf.constant([0, 0, 0]), 1)

# > Execution time -- 520.4 ms

# Fast: Not the first call with this tensor shape, tensor type, and exact same

# non-tensor argument

max_plus_constant(tf.constant([1000, 0, -10]), 1)

# > Execution time -- 0.6 ms

# Slow: Different tensor type

max_plus_constant(tf.constant([0, 0, 0], dtype=tf.int64), 1)

# > Execution time -- 27.1 ms

# Slow: Different tensor shape

max_plus_constant(tf.constant([0, 0, 0, 0]), 1)

# > Execution time -- 25.5 ms

# Slow: Different non-tensor argument

max_plus_constant(tf.constant([0, 0, 0]), 2)

# > Execution time -- 24.9 ms

```

In practice, for text generation, it simply means the input should be padded to a multiple of a certain length (so it

has a limited number of possible shapes), and that using different options will be slow for the first time you use

them. Let's see what happens when you naively call generation with XLA.

```python

# Note: execution times are deeply dependent on hardware -- a 3090 was used here.

import time

import tensorflow as tf

from transformers import AutoTokenizer, TFAutoModelForCausalLM

# Notice the new argument, `padding_side="left"` -- decoder-only models, which can

# be instantiated with TFAutoModelForCausalLM, should be left-padded, as they

# continue generating from the input prompt.

tokenizer = AutoTokenizer.from_pretrained(

"gpt2", padding_side="left", pad_token="</s>"

)

model = TFAutoModelForCausalLM.from_pretrained("gpt2")

model.config.pad_token_id = model.config.eos_token_id

input_1 = ["TensorFlow is"]

input_2 = ["TensorFlow is a"]

# One line to create a XLA generation function

xla_generate = tf.function(model.generate, jit_compile=True)

# Calls XLA generation without padding

tokenized_input_1 = tokenizer(input_1, return_tensors="tf") # length = 4

tokenized_input_2 = tokenizer(input_2, return_tensors="tf") # length = 5

print(f"`tokenized_input_1` shape = {tokenized_input_1.input_ids.shape}")

print(f"`tokenized_input_2` shape = {tokenized_input_2.input_ids.shape}")

print("Calling XLA generation with tokenized_input_1...")

print("(will be slow as it is the first call)")

start = time.time_ns()

xla_generate(**tokenized_input_1)

end = time.time_ns()

print(f"Execution time -- {(end - start) / 1e6:.1f} ms\n")

# > Execution time -- 9565.1 ms

print("Calling XLA generation with tokenized_input_2...")

print("(has a different length = will trigger tracing again)")

start = time.time_ns()

xla_generate(**tokenized_input_2)

end = time.time_ns()

print(f"Execution time -- {(end - start) / 1e6:.1f} ms\n")

# > Execution time -- 6815.0 ms

```

Oh no, that's terribly slow! A solution to keep the different combinations of shapes in check is through padding,

as mentioned above. The tokenizer classes have a `pad_to_multiple_of` argument that can be used to achieve a balance

between accepting any input length and limiting tracing.

```python

padding_kwargs = {"pad_to_multiple_of": 8, "padding": True}

tokenized_input_1_with_padding = tokenizer(

input_1, return_tensors="tf", **padding_kwargs

) # length = 8

tokenized_input_2_with_padding = tokenizer(

input_2, return_tensors="tf", **padding_kwargs

) # length = 8

print(

"`tokenized_input_1_with_padding` shape = ",

f"{tokenized_input_1_with_padding.input_ids.shape}"

)

print(

"`tokenized_input_2_with_padding` shape = ",

f"{tokenized_input_2_with_padding.input_ids.shape}"

)

print("Calling XLA generation with tokenized_input_1_with_padding...")

print("(slow, first time running with this length)")

start = time.time_ns()

xla_generate(**tokenized_input_1_with_padding)

end = time.time_ns()

print(f"Execution time -- {(end - start) / 1e6:.1f} ms\n")

# > Execution time -- 6815.4 ms

print("Calling XLA generation with tokenized_input_2_with_padding...")

print("(will be fast!)")

start = time.time_ns()

xla_generate(**tokenized_input_2_with_padding)

end = time.time_ns()

print(f"Execution time -- {(end - start) / 1e6:.1f} ms\n")

# > Execution time -- 19.3 ms

```

That's much better, successive generation calls performed this way will be orders of magnitude faster than before.

Keep in mind that trying new generation options, at any point, will trigger tracing.

```python

print("Calling XLA generation with the same input, but with new options...")

print("(slow again)")

start = time.time_ns()

xla_generate(**tokenized_input_1_with_padding, num_beams=2)

end = time.time_ns()

print(f"Execution time -- {(end - start) / 1e6:.1f} ms\n")

# > Execution time -- 9644.2 ms

```

From a developer perspective, relying on XLA implies being aware of a few additional nuances. XLA shines when the size

of the data structures are known in advance, such as in model training. On the other hand, when their dimensions are

impossible to determine or certain dynamic slices are used, XLA fails to compile. Modern implementations of text

generation are auto-regressive, whose natural behavior is to expand tensors and to abruptly interrupt some operations

as it goes -- in other words, not XLA-friendly by default.

We have [rewritten our entire TensorFlow text generation codebase](https://github.com/huggingface/transformers/pull/17857)

to vectorize operations and use fixed-sized

structures with padding. Our NLP models were also modified to correctly use their positional embeddings in the

presence of padded structures. The result should be invisible to TensorFlow text generation users, except for the

availability of XLA compilation.

## Benchmarks and Conclusions

Above you saw that you can convert TensorFlow functions into a graph and accelerate them with XLA compilation.

Current forms of text generation are simply an auto-regressive functions that alternate between a model forward pass

and some post-processing, producing one token per iteration. Through XLA compilation, the entire process gets

optimized, resulting in faster execution. But how much faster? The [Gradio demo below](https://huggingface.co/spaces/joaogante/tf_xla_generate_benchmarks) contains some benchmarks

comparing Hugging Face's text generation on multiple GPU models for the two main ML frameworks, TensorFlow and PyTorch.

<div class="hidden xl:block">

<div style="display: flex; flex-direction: column; align-items: center;">

<iframe src="https://joaogante-tf-xla-generate-benchmarks.hf.space" frameBorder="0" width="1200px" height="760px" title="Gradio app" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

</div>

</div>

If you explore the results, two conclusions become quickly visible:

1. As this blog post has been building up to here, TensorFlow text generation is much faster when XLA is used. We are

talking about speedups larger than 100x in some cases, which truly demonstrates the power of a compiled graph 🚀

2. TensorFlow text generation with XLA is the fastest option in the vast majority of cases, in some of them by as

much as 9x faster, debunking the myth that PyTorch is the go-to framework for serious NLP tasks 💪

Give [the colab](https://colab.research.google.com/github/huggingface/blog/blob/main/notebooks/91_tf_xla_generate.ipynb)

a go, and enjoy the power of text generation supercharged with XLA!

| huggingface/blog/blob/main/tf-xla-generate.md |

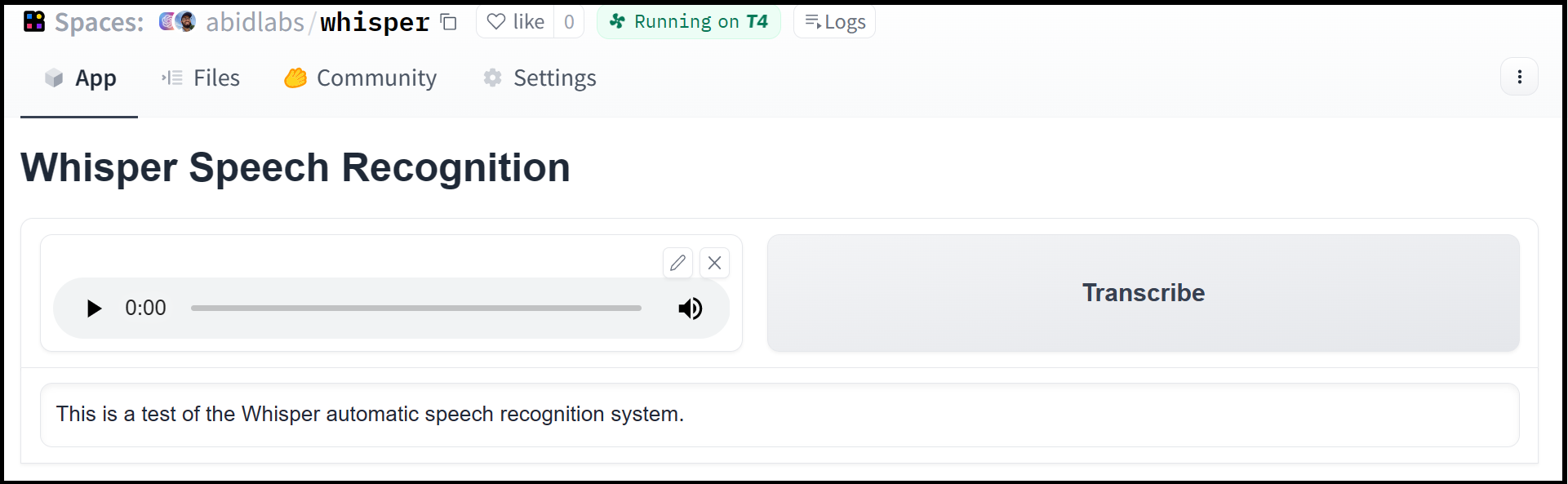

utomatic speech recognition English. Record from your microphone and the app will transcribe the audio. | gradio-app/gradio/blob/main/demo/automatic-speech-recognition/DESCRIPTION.md |

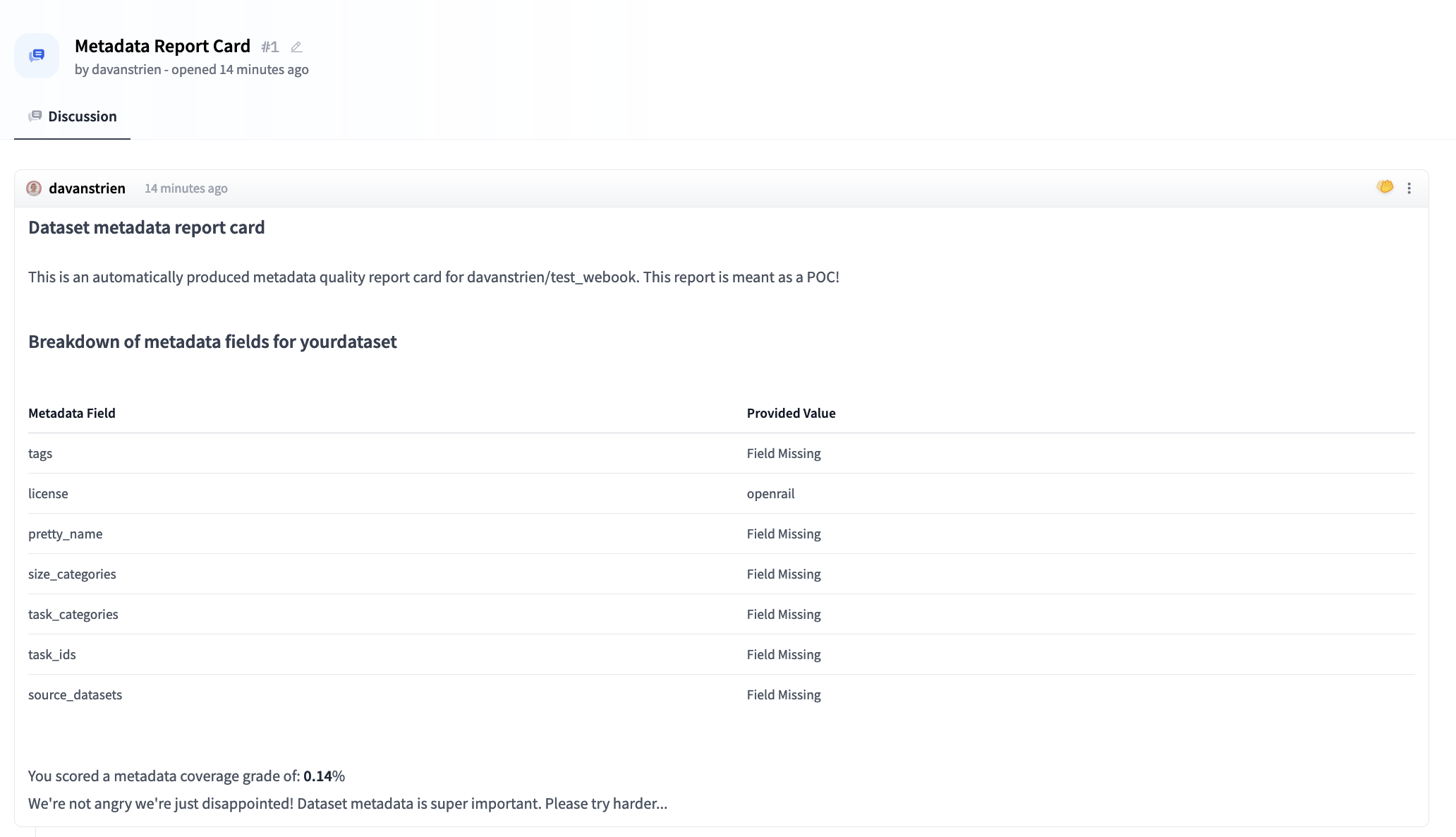

Datasets without language challenge

Related to https://github.com/huggingface/hub-docs/issues/986.

## Context

The Hugging Face Hub hosts hundreds of thousands of public models and datasets. These datasets and models cover a wide range of languages. One of the main ways in which it's possible to know what language a dataset is in is by looking at the `language` field in the dataset's [metadata](https://huggingface.co/docs/hub/datasets-cards#dataset-card-metadata) section of the dataset card.

```yaml

language:

- "List of ISO 639-1 code for your language"

- lang1

pretty_name: "Pretty Name of the Dataset"

tags:

- tag1

- tag2

license: "any valid license identifier"

task_categories:

- task1

```

Having this field filled in is essential for users to find datasets in their language and give a better idea of the languages that the Hub covers. However, the dataset's author has only sometimes filled this field. This challenge is to fill in the `language` field for datasets that don't have it filled in.

## How to contribute?

How can you help improve the coverage of language metadata on the Hub?

For each dataset, the workflow is the following:

1. Find a dataset that doesn't have the `language` field filled in. You can find a list of datasets without the `language` field filled in [here](#datasets-without-language-field-filled-in). We start with datasets that have the most downloads and likes.

2. **Check that the dataset doesn't already have a PR to add a language tag(s).** Someone else may have already started working on it. You can check this by looking in the discussion section of the dataset page.

3. If there is no PR to add language metadata already open, your next step is to identify the language (if possible for the dataset). There are a few main ways you can often identify the language

1. The dataset's name. Often, the name of the dataset will include the language. Sometimes as a full name, i.e. `imdb_german` or sometimes as a language code, i.e. `imdb_de`. You can use that as the language tag if the dataset name includes the language.

2. The dataset card will sometimes mention the language(s) of the dataset explicitly.

3. Many datasets will have an active [dataset viewer](https://huggingface.co/docs/hub/datasets-viewer) for the dataset. This will allow you to see examples from the dataset. You may identify the language by looking at the text examples.

4. Sometimes, the dataset will have a column specifying the language of the text. You can use this column to fill in the language tag(s).

5. If the dataset viewer is available for the dataset, but you don't recognize the language, you can use the [facebook/fasttext-language-identification](https://huggingface.co/facebook/fasttext-language-identification) model or [Google Translate](https://translate.google.com/) to try to identify the language.

4. Once you've identified the language(s) of the dataset, you can add the language tag(s) to the dataset card. You can do this by clicking the `Edit` button on the dataset card. This will open a PR to the dataset repo. You can add the language tag(s) to the `language` field in the dataset card. Some datasets may have multiple languages. Try and add all of the languages you have identified.

5. Once done, open a PR on GitHub to update the table below. Once merged, this will count as a Hacktoberfest contribution! Add the `pr_url` (the one on the Hub) and a status ( , merged, closed) in the PR.

6. Adding a language tag to some of the datasets below may not make sense. If so, add `not relevant` as the link in the `pr_url`. There may also be datasets where you need help with the language. In these cases, you can open a discussion to suggest a language tag(s) is added to the dataset.

## F.A.Q.

### Does it make sense to add language metadata to all datasets?

No! This is why we have focused on datasets with a `task_categories` field indicating that the dataset has a text-related task.

### Can I use a script to automate the process?

While it is possible to use machine learning to help assist this process, see [this blog](https://huggingface.co/blog/huggy-lingo) as an example; checking the accuracy of the PRs you are making is still important.

## What about datasets with multiple languages?

Some datasets may have more than one language. Do your best to add all the languages you can identify in the datasets. If there is a vast number, this may be tricky. In this case, do your best.

## What about code?

Currently, you can add a language tag for `code`. You will need to do this directly in the `YAML` rather than the visual editor since using the visual editor will lead to an auto-completion for the `co` language code (Corsican).

## Can I update the table with new datasets?

Yes, it's fine to add new rows if there are other datasets where it makes sense to have language metadata. However, we'll focus only on datasets with at least ten downloads in the past 30 days to have the most impact. You can see download information alongside the dataset on the Hub website or access this information via the API. For example, to filter datasets to have at least 20 downloads you could do the following

```python

from huggingface_hub import list_datasets

datasets = list_datasets(full=True)

datasets_with_at_least_20_downloads = [dataset for dataset in datasets if dataset.downloads >20]

```

## Datasets without language field filled in

| status | pr_url | hub_id | downloads | likes |

|--------|-----------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------|-----------|-------|

| Merged | [here](https://huggingface.co/datasets/sahil2801/CodeAlpaca-20k/discussions/5) | [sahil2801/CodeAlpaca-20k](https://huggingface.co/datasets/sahil2801/CodeAlpaca-20k) | 2124 | 104 |

| | | [facebook/winoground](https://huggingface.co/datasets/facebook/winoground) | 5468 | 57 |

| | | [oscar-corpus/OSCAR-2301](https://huggingface.co/datasets/oscar-corpus/OSCAR-2301) | 7814 | 56 |

| | [here](https://huggingface.co/datasets/HuggingFaceH4/CodeAlpaca_20K/discussions/1) | [HuggingFaceH4/CodeAlpaca_20K](https://huggingface.co/datasets/HuggingFaceH4/CodeAlpaca_20K) | 850 | 36 |

| |[here](https://huggingface.co/datasets/huggan/wikiart/discussions/3) | [huggan/wikiart](https://huggingface.co/datasets/huggan/wikiart) | 344 | 38 |

| |[here](https://huggingface.co/datasets/MMInstruction/M3IT/discussions/7) | [MMInstruction/M3IT](https://huggingface.co/datasets/MMInstruction/M3IT) | 62902 | 47 |

| Merged | [here](https://huggingface.co/datasets/codeparrot/self-instruct-starcoder/discussions/3) | [codeparrot/self-instruct-starcoder](https://huggingface.co/datasets/codeparrot/self-instruct-starcoder) | 454 | 25 |

| | [here](https://huggingface.co/datasets/unaidedelf87777/openapi-function-invocations-25k/discussions/3) | [unaidedelf87777/openapi-function-invocations-25k](https://huggingface.co/datasets/unaidedelf87777/openapi-function-invocations-25k) | 47 | 20 |

| | [here](https://huggingface.co/datasets/Matthijs/cmu-arctic-xvectors/discussions/4) | [Matthijs/cmu-arctic-xvectors](https://huggingface.co/datasets/Matthijs/cmu-arctic-xvectors) | 158508 | 19 |

| | [here](https://huggingface.co/datasets/skg/toxigen-data/discussions/4) | [skg/toxigen-data](https://huggingface.co/datasets/skg/toxigen-data) | 957 | 17 |

| | | [oscar-corpus/colossal-oscar-1.0](https://huggingface.co/datasets/oscar-corpus/colossal-oscar-1.0) | 66 | 17 |

| Merged | [here](https://huggingface.co/datasets/aadityaubhat/GPT-wiki-intro/discussions/2) | [aadityaubhat/GPT-wiki-intro](https://huggingface.co/datasets/aadityaubhat/GPT-wiki-intro) | 267 | 15 |

| | [here](https://huggingface.co/datasets/codeparrot/github-jupyter-code-to-text/discussions/1) | [codeparrot/github-jupyter-code-to-text](https://huggingface.co/datasets/codeparrot/github-jupyter-code-to-text) | 11 | 14 |

| | [here](https://huggingface.co/datasets/cfilt/iitb-english-hindi/discussions/1#651ab7559c4067f3b896564f) | [cfilt/iitb-english-hindi](https://huggingface.co/datasets/cfilt/iitb-english-hindi) | 1147 | 11 |

| | [here](https://huggingface.co/datasets/iamtarun/python_code_instructions_18k_alpaca/discussions/1) | [iamtarun/python_code_instructions_18k_alpaca](https://huggingface.co/datasets/iamtarun/python_code_instructions_18k_alpaca) | 1424 | 10 |

| Merged | [here](https://huggingface.co/datasets/argilla/databricks-dolly-15k-curated-en/discussions/1#651ab6e569d3438f0f246312) | [argilla/databricks-dolly-15k-curated-en](https://huggingface.co/datasets/argilla/databricks-dolly-15k-curated-en) | 9651261 | 9 |

| | | [sander-wood/irishman](https://huggingface.co/datasets/sander-wood/irishman) | 456 | 9 |

| | | [OleehyO/latex-formulas](https://huggingface.co/datasets/OleehyO/latex-formulas) | 46 | 9 |

| Merged | [here](https://huggingface.co/datasets/german-nlp-group/german_common_crawl/discussions/1) | [german-nlp-group/german_common_crawl](https://huggingface.co/datasets/german-nlp-group/german_common_crawl) | 116 | 7 |

| | [here](https://huggingface.co/datasets/kunishou/databricks-dolly-69k-ja-en-translation/discussions/1#651aba1b1c53eaa6dbaca648) | [kunishou/databricks-dolly-69k-ja-en-translation](https://huggingface.co/datasets/kunishou/databricks-dolly-69k-ja-en-translation) | 22 | 7 |

| | | [Muennighoff/flores200](https://huggingface.co/datasets/Muennighoff/flores200) | 93084 | 5 |

| | [here](https://huggingface.co/datasets/nanelimon/turkish-social-media-bullying-dataset/discussions/1#651ae8247d45b917399dbade) | [nanelimon/turkish-social-media-bullying-dataset](https://huggingface.co/datasets/nanelimon/turkish-social-media-bullying-dataset) | 3 | 5 |

| | [here](https://huggingface.co/datasets/vivym/midjourney-prompts/discussions/1) | [vivym/midjourney-prompts](https://huggingface.co/datasets/vivym/midjourney-prompts) | 126 | 4 |

| | | [yuweiyin/FinBench](https://huggingface.co/datasets/yuweiyin/FinBench) | 102 | 4 |

| Merged | [here](https://huggingface.co/datasets/NbAiLab/norwegian-xsum/discussions/2#651b2951b08a2b1588b8d99e) | [NbAiLab/norwegian-xsum](https://huggingface.co/datasets/NbAiLab/norwegian-xsum) | 0 | 4 |

| | [here](https://huggingface.co/datasets/merve/turkish_instructions/discussions/1#651ae7a8cc1c891376b4bb45) | [merve/turkish_instructions](https://huggingface.co/datasets/merve/turkish_instructions) | 36 | 4 |

| | | [tianyang/repobench-c](https://huggingface.co/datasets/tianyang/repobench-c) | 240 | 3 |

| | [here](https://huggingface.co/datasets/HuggingFaceH4/self_instruct/discussions/1) | [HuggingFaceH4/self_instruct](https://huggingface.co/datasets/HuggingFaceH4/self_instruct) | 219 | 3 |

| | [here](https://huggingface.co/datasets/iamtarun/code_instructions_120k_alpaca/discussions/1) | [iamtarun/code_instructions_120k_alpaca](https://huggingface.co/datasets/iamtarun/code_instructions_120k_alpaca) | 141 | 3 |

| | [here](https://huggingface.co/datasets/j0selit0/insurance-qa-en/discussions/2#651ab933aa7da01954bdc21f) | [j0selit0/insurance-qa-en](https://huggingface.co/datasets/j0selit0/insurance-qa-en) | 64 | 3 |

| | | [billray110/corpus-of-diverse-styles](https://huggingface.co/datasets/billray110/corpus-of-diverse-styles) | 18 | 3 |

| | [here](https://huggingface.co/datasets/dmayhem93/agieval-sat-en/discussions/1#651ab8b5e8b2318cdb755b17) | [dmayhem93/agieval-sat-en](https://huggingface.co/datasets/dmayhem93/agieval-sat-en) | 87 | 2 |

| | | [polymer/dolphin-only-gpt-4](https://huggingface.co/datasets/polymer/dolphin-only-gpt-4) | 69 | 2 |

| | [here](https://huggingface.co/datasets/RafaelMPereira/HealthCareMagic-100k-Chat-Format-en/discussions/1#651abaea4dba2d9ed143b11d) | [RafaelMPereira/HealthCareMagic-100k-Chat-Format-en](https://huggingface.co/datasets/RafaelMPereira/HealthCareMagic-100k-Chat-Format-en) | 7 | 2 |

| | [here](https://huggingface.co/datasets/fathyshalab/Dialogsum-german-kurz/discussions/1) | [fathyshalab/Dialogsum-german-kurz](https://huggingface.co/datasets/fathyshalab/Dialogsum-german-kurz) | 0 | 2 |

| | [here](https://huggingface.co/datasets/philschmid/test_german_squad/discussions/1) | [philschmid/test_german_squad](https://huggingface.co/datasets/philschmid/test_german_squad) | 0 | 2 |

| | | [gia-project/gia-dataset](https://huggingface.co/datasets/gia-project/gia-dataset) | 1727 | 1 |

| | [here](https://huggingface.co/datasets/stas/wmt14-en-de-pre-processed/discussions/1#651ab7aa8a5c072ce16774ac) | [stas/wmt14-en-de-pre-processed](https://huggingface.co/datasets/stas/wmt14-en-de-pre-processed) | 423 | 1 |

| | [here](https://huggingface.co/datasets/ajaykarthick/imdb-movie-reviews/discussions/1) | [ajaykarthick/imdb-movie-reviews](https://huggingface.co/datasets/ajaykarthick/imdb-movie-reviews) | 222 | 1 |

| | | [MMInstruction/M3IT-80](https://huggingface.co/datasets/MMInstruction/M3IT-80) | 108 | 1 |

| Merged | [here](https://huggingface.co/datasets/rizerphe/sharegpt-hyperfiltered-3k-llama/discussions/1) | [rizerphe/sharegpt-hyperfiltered-3k-llama](https://huggingface.co/datasets/rizerphe/sharegpt-hyperfiltered-3k-llama) | 35 | 1 |

| | [here](https://huggingface.co/datasets/alvations/globalvoices-en-es/discussions/1#651ab996996b00d2900f310f) | [alvations/globalvoices-en-es](https://huggingface.co/datasets/alvations/globalvoices-en-es) | 33 | 1 |

| | | [ejschwartz/oo-method-test](https://huggingface.co/datasets/ejschwartz/oo-method-test) | 27 | 1 |

| | [here](https://huggingface.co/datasets/soymia/boudoir-dataset/discussions/1) | [soymia/boudoir-dataset](https://huggingface.co/datasets/soymia/boudoir-dataset) | 25 | 1 |

| | | [strombergnlp/offenseval_2020](https://huggingface.co/datasets/strombergnlp/offenseval_2020) | 24 | 1 |

| | [here](https://huggingface.co/datasets/vhtran/de-en-2023/discussions/1#651aba022bc734f0fa0c36af) | [vhtran/de-en-2023](https://huggingface.co/datasets/vhtran/de-en-2023) | 23 | 1 |

| | | [cw1521/ember2018-malware](https://huggingface.co/datasets/cw1521/ember2018-malware) | 17 | 1 |

| | [here](https://huggingface.co/datasets/AgentWaller/german-formatted-oasst1/discussions/1) | [AgentWaller/german-formatted-oasst1](https://huggingface.co/datasets/AgentWaller/german-formatted-oasst1) | 15 | 1 |

| | [here](https://huggingface.co/datasets/Senem/Nostalgic_Sentiment_Analysis_of_YouTube_Comments_Data/discussions/1) | [Senem/Nostalgic_Sentiment_Analysis_of_YouTube_Comments_Data](https://huggingface.co/datasets/Senem/Nostalgic_Sentiment_Analysis_of_YouTube_Comments_Data) | 12 | 1 |

| Merged | [here](https://huggingface.co/datasets/Photolens/oasst1-en/discussions/2#651aba64e8b2318cdb759528) | [Photolens/oasst1-en](https://huggingface.co/datasets/Photolens/oasst1-en) | 10 | 1 |

| | [here](https://huggingface.co/datasets/vhtran/id-en/discussions/1#651ababdc4fdc1c93efb0f2b) | [vhtran/id-en](https://huggingface.co/datasets/vhtran/id-en) | 8 | 1 |

| Merged | [here](https://huggingface.co/datasets/openmachinetranslation/tatoeba-en-fr/discussions/1#651aba96b693acb51958884b) | [openmachinetranslation/tatoeba-en-fr](https://huggingface.co/datasets/openmachinetranslation/tatoeba-en-fr) | 8 | 1 |

| | [here](https://huggingface.co/datasets/vhtran/uniq-de-en/discussions/1#651abb5e2bc734f0fa0c7f44) | [vhtran/uniq-de-en](https://huggingface.co/datasets/vhtran/uniq-de-en) | 5 | 1 |

| | [here](https://huggingface.co/datasets/marksverdhei/wordnet-definitions-en-2021/discussions/1#651abcd1a9e1c4c6cdd06042) | [marksverdhei/wordnet-definitions-en-2021](https://huggingface.co/datasets/marksverdhei/wordnet-definitions-en-2021) | 1 | 1 |

| | [here](https://huggingface.co/datasets/nogyxo/question-answering-ukrainian/discussions/1) | [nogyxo/question-answering-ukrainian](https://huggingface.co/datasets/nogyxo/question-answering-ukrainian) | 1 | 1 |

| | [here](https://huggingface.co/datasets/dandrade/es-en/discussions/1#651ac2720047dc5f7aae8124) | [dandrade/es-en](https://huggingface.co/datasets/dandrade/es-en) | 0 | 1 |

| | [here](https://huggingface.co/datasets/shreevigneshs/iwslt-2023-en-vi-train-split-v1/discussions/1#651ac23fb61121b1283a0402) | [shreevigneshs/iwslt-2023-en-vi-train-split-v1](https://huggingface.co/datasets/shreevigneshs/iwslt-2023-en-vi-train-split-v1) | 0 | 1 |

| Merged | [here](https://huggingface.co/datasets/loresiensis/corpus-en-es/discussions/1#651ac1e328c2633de960131e) | [loresiensis/corpus-en-es](https://huggingface.co/datasets/loresiensis/corpus-en-es) | 0 | 1 |

| Merged | [here](https://huggingface.co/datasets/Photolens/DISC-Med-SFT-en-translated-only-CMeKG/discussions/1#651ac9dfa9a91bf39df7489f) | [Photolens/DISC-Med-SFT-en-translated-only-CMeKG](https://huggingface.co/datasets/Photolens/DISC-Med-SFT-en-translated-only-CMeKG) | 0 | 1 |

| | [here](https://huggingface.co/datasets/joelniklaus/german_rental_agreements/discussions/1) | [joelniklaus/german_rental_agreements](https://huggingface.co/datasets/joelniklaus/german_rental_agreements) | 0 | 1 |

| | [here](https://huggingface.co/datasets/fathyshalab/Dialogsum-german/discussions/1) | [fathyshalab/Dialogsum-german](https://huggingface.co/datasets/fathyshalab/Dialogsum-german) | 0 | 1 |

| | [here](https://huggingface.co/datasets/Harsit/xnli2.0_german/discussions/1) | [Harsit/xnli2.0_german](https://huggingface.co/datasets/Harsit/xnli2.0_german) | 0 | 1 |

| | [here](https://huggingface.co/datasets/typevoid/german-company-addresses/discussions/1) | [typevoid/german-company-addresses](https://huggingface.co/datasets/typevoid/german-company-addresses) | 0 | 1 |

| | [here](https://huggingface.co/datasets/FreedomIntelligence/evol-instruct-italian/discussions/1) | [FreedomIntelligence/evol-instruct-italian](https://huggingface.co/datasets/FreedomIntelligence/evol-instruct-italian) | 0 | 1 |

| Merged | [here](https://huggingface.co/datasets/kmkarakaya/turkishReviews-ds/discussions/1#651ae845eb6c502094745048) | [kmkarakaya/turkishReviews-ds](https://huggingface.co/datasets/kmkarakaya/turkishReviews-ds) | 0 | 1 |

| | | [gia-project/gia-dataset-parquet](https://huggingface.co/datasets/gia-project/gia-dataset-parquet) | 10293 | 0 |

| | [here](https://huggingface.co/datasets/Jackmin108/c4-en-validation/discussions/1#651ab782bf3fb2499d4e8199) | [Jackmin108/c4-en-validation](https://huggingface.co/datasets/Jackmin108/c4-en-validation) | 1131 | 0 |

| | [here](https://huggingface.co/datasets/germank/hh-generated_flan_t5_large_with_features2/discussions/1) | [germank/hh-generated_flan_t5_large_with_features2](https://huggingface.co/datasets/germank/hh-generated_flan_t5_large_with_features2) | 681 | 0 |

| | [here](https://huggingface.co/datasets/germank/hh-rlhf_with_features_flan_t5_large/discussions/1) | [germank/hh-rlhf_with_features_flan_t5_large](https://huggingface.co/datasets/germank/hh-rlhf_with_features_flan_t5_large) | 336 | 0 |

| | | [nimaster/Devign_for_VD](https://huggingface.co/datasets/nimaster/Devign_for_VD) | 239 | 0 |

| | [here](https://huggingface.co/datasets/vhtran/uniq-id-en/discussions/1#651ab8329e0bf1e7f82fd3eb) | [vhtran/uniq-id-en](https://huggingface.co/datasets/vhtran/uniq-id-en) | 118 | 0 |

| | [here](https://huggingface.co/datasets/manu/wmt-en-fr/discussions/1#651ab850e3558015826cde35) | [manu/wmt-en-fr](https://huggingface.co/datasets/manu/wmt-en-fr) | 107 | 0 |

| | | [Jeska/autonlp-data-vaccinfaq](https://huggingface.co/datasets/Jeska/autonlp-data-vaccinfaq) | 104 | 0 |

| | | [alvp/autonlp-data-alberti-stanza-names](https://huggingface.co/datasets/alvp/autonlp-data-alberti-stanza-names) | 102 | 0 |

| | | [alvp/autonlp-data-alberti-stanzas-finetuning](https://huggingface.co/datasets/alvp/autonlp-data-alberti-stanzas-finetuning) | 102 | 0 |

| Merged | [here](https://huggingface.co/datasets/jegormeister/dutch-snli/discussions/1) | [jegormeister/dutch-snli](https://huggingface.co/datasets/jegormeister/dutch-snli) | 90 | 0 |

| | [here](https://huggingface.co/datasets/Iskaj/dutch_corpora_parliament_processed/discussions/1) | [Iskaj/dutch_corpora_parliament_processed](https://huggingface.co/datasets/Iskaj/dutch_corpora_parliament_processed) | 88 | 0 |

| | [here](https://huggingface.co/datasets/mtc/german_seahorse_dataset_with_articles/discussions/1) | [mtc/german_seahorse_dataset_with_articles](https://huggingface.co/datasets/mtc/german_seahorse_dataset_with_articles) | 87 | 0 |

| | [here](https://huggingface.co/datasets/dmayhem93/agieval-logiqa-en/discussions/1#651ab8cd9e0bf1e7f82ffa01) | [dmayhem93/agieval-logiqa-en](https://huggingface.co/datasets/dmayhem93/agieval-logiqa-en) | 86 | 0 |

| | [here](https://huggingface.co/datasets/dmayhem93/agieval-sat-en-without-passage/discussions/1#651ab8efda7605b21396f125) | [dmayhem93/agieval-sat-en-without-passage](https://huggingface.co/datasets/dmayhem93/agieval-sat-en-without-passage) | 86 | 0 |

| | [here](https://huggingface.co/datasets/manu/opus100-en-fr/discussions/1#651ab90de570bf249254d7ae) | [manu/opus100-en-fr](https://huggingface.co/datasets/manu/opus100-en-fr) | 76 | 0 |

| | [here](https://huggingface.co/datasets/manu/french_librispeech_text_only/discussions/1) | [manu/french_librispeech_text_only](https://huggingface.co/datasets/manu/french_librispeech_text_only) | 76 | 0 |

| | [here](https://huggingface.co/datasets/roskoN/stereoset_german/discussions/1) | [roskoN/stereoset_german](https://huggingface.co/datasets/roskoN/stereoset_german) | 74 | 0 |

| | | [ejschwartz/oo-method-test-split](https://huggingface.co/datasets/ejschwartz/oo-method-test-split) | 53 | 0 |

| | | [PierreLepagnol/WRENCH](https://huggingface.co/datasets/PierreLepagnol/WRENCH) | 49 | 0 |

| | | [mammoth-blaze/ParcelSummaryDS](https://huggingface.co/datasets/mammoth-blaze/ParcelSummaryDS) | 49 | 0 |

| | [here](https://huggingface.co/datasets/afkfatih/turkishdataset/discussions/1#651ae795fa4bf59ced650092) | [afkfatih/turkishdataset](https://huggingface.co/datasets/afkfatih/turkishdataset) | 48 | 0 |

| | | [Isaak-Carter/Function_Calling_Private_GG](https://huggingface.co/datasets/Isaak-Carter/Function_Calling_Private_GG) | 43 | 0 |

| | [here](https://huggingface.co/datasets/stas/wmt16-en-ro-pre-processed/discussions/1#651ab96911f562eb7f04aa5e) | [stas/wmt16-en-ro-pre-processed](https://huggingface.co/datasets/stas/wmt16-en-ro-pre-processed) | 40 | 0 |

| Merged | [here](https://huggingface.co/datasets/paoloitaliani/news_articles/discussions/1) | [paoloitaliani/news_articles](https://huggingface.co/datasets/paoloitaliani/news_articles) | 40 | 0 |

| Merged | [here](https://huggingface.co/datasets/pszemraj/simplepile-lite/discussions/1) | [pszemraj/simplepile-lite](https://huggingface.co/datasets/pszemraj/simplepile-lite) | 33 | 0 |

| | [here](https://huggingface.co/datasets/webimmunization/COVID-19-conspiracy-theories-tweets/discussions/2) | [webimmunization/COVID-19-conspiracy-theories-tweets](https://huggingface.co/datasets/webimmunization/COVID-19-conspiracy-theories-tweets) | 31 | 0 |

| | | [rdpahalavan/UNSW-NB15](https://huggingface.co/datasets/rdpahalavan/UNSW-NB15) | 30 | 0 |

| | | [marekk/testing_dataset_article_category](https://huggingface.co/datasets/marekk/testing_dataset_article_category) | 28 | 0 |

| Merged | [here](https://huggingface.co/datasets/Suchinthana/Databricks-Dolly-15k-si-en-mix/discussions/1#651ab9d4c69ca64b8dac2f8e) | [Suchinthana/Databricks-Dolly-15k-si-en-mix](https://huggingface.co/datasets/Suchinthana/Databricks-Dolly-15k-si-en-mix) | 24 | 0 |

| | | [rdpahalavan/CIC-IDS2017](https://huggingface.co/datasets/rdpahalavan/CIC-IDS2017) | 22 | 0 |

| | | [Admin08077/STUPID](https://huggingface.co/datasets/Admin08077/STUPID) | 21 | 0 |

| | [here](https://huggingface.co/datasets/serbog/job_listing_german_cleaned_bert/discussions/1) | [serbog/job_listing_german_cleaned_bert](https://huggingface.co/datasets/serbog/job_listing_german_cleaned_bert) | 20 | 0 |

| | [here](https://huggingface.co/datasets/germank/hh-generated_flan_t5_large_with_features2_flan_t5_large/discussions/1) | [germank/hh-generated_flan_t5_large_with_features2_flan_t5_large](https://huggingface.co/datasets/germank/hh-generated_flan_t5_large_with_features2_flan_t5_large) | 16 | 0 |

| | [here](https://huggingface.co/datasets/W4nkel/turkish-sentiment-dataset/discussions/1#651ae7c3ad11961965111641) | [W4nkel/turkish-sentiment-dataset](https://huggingface.co/datasets/W4nkel/turkish-sentiment-dataset) | 16 | 0 |

| | | [irds/nyt](https://huggingface.co/datasets/irds/nyt) | 15 | 0 |

| | [here](https://huggingface.co/datasets/pere/italian_tweets_500k/discussions/1) | [pere/italian_tweets_500k](https://huggingface.co/datasets/pere/italian_tweets_500k) | 14 | 0 |

| | [here](https://huggingface.co/datasets/generative-newsai/news-unmasked/discussions/1) | [generative-newsai/news-unmasked](https://huggingface.co/datasets/generative-newsai/news-unmasked) | 12 | 0 |

| Merged | [here](https://huggingface.co/datasets/irds/dpr-w100/discussions/1) | [irds/dpr-w100](https://huggingface.co/datasets/irds/dpr-w100) | 12 | 0 |

| | [here](https://huggingface.co/datasets/pere/italian_tweets_10M/discussions/1) | [pere/italian_tweets_10M](https://huggingface.co/datasets/pere/italian_tweets_10M) | 11 | 0 |

| | [here](https://huggingface.co/datasets/vhtran/de-en/discussions/1#651abad1b61121b12838a021) | [vhtran/de-en](https://huggingface.co/datasets/vhtran/de-en) | 8 | 0 |

| | [here](https://huggingface.co/datasets/tbboukhari/Alpaca-in-french/discussions/1) | [tbboukhari/Alpaca-in-french](https://huggingface.co/datasets/tbboukhari/Alpaca-in-french) | 8 | 0 |

| | [here](https://huggingface.co/datasets/ismailiismail/multi_paraphrasing_french/discussions/2) | [ismailiismail/multi_paraphrasing_french](https://huggingface.co/datasets/ismailiismail/multi_paraphrasing_french) | 6 | 0 |